7.8 Multisensory Design Applications

Rich multisensory experiences with products, environments, and services are the norm; we do not break down design in terms of vision, smell, touch, hearing, taste, or perceptions of mobility. Good design immerses us in holistic interactions that stimulate us to achieve our goals through optimizing the principles of flow, maintaining focus, managing demands on attention, and sequencing as discussed in the previous section. This section focuses on design applications that target different multisensory abilities and unique multimodal design combinations such as crossmodal and accessible design, sensory congruency, multisensory integrations, synaesthetic influences, and the dynamics of movement.

Crossmodal and Accessible Design

Sensory experiences are not only multi-layered but also unique for each of us. One of the designer’s roles is to design adaptable features that respond to an individual’s sensory variations and capabilities. Sometimes the physiological operations of one sensory modality affect the physiological operations of another (Fulkerson, 2013). These multimodal experiences are called multimodally emergent (ibid.). For example, when walking on snow and ice, you can both see and feel how slippery the surface is under your feet as you move along. Your perception of how to adjust your efforts to the condition of the ice evolves in pace with the emergent visual and tactile feedback you receive as you walk.

Crossmodal adjustments in response to slippery ice: adjusting poles with prongs for stability

Where some senses complement one another, like taste and smell, others may compensate for one another. Have you ever observed an older adult grab onto a handrail to determine their position on the stairs rather than relying on their sense of kinesthesia (the ability to detect body position)? Some elderly individuals or persons with disabilities will replace or reinforce a blocked or weak sense with a more suitable or accurate sense (Park & Alderman, 2018; Frankel, 2015). In this case, it makes a lot of sense to hold (tactile) the stair railing instead of risking a misstep due to the inability to see (visual) clearly, to judge (cognitive and proprioceptive) distance, or to lift (tactile and kinesthetic) a leg, any of which could lead to a fall. These kinds of compensations are called crossmodal relationships between senses. They relate to evolving sensory capabilities pertaining to ageing, illness, or disability, where one sense fills in (compensates) when another sense is working poorly or not at all.

A disability may be permanent, situational, or temporary. Where a person could once dance the night away, they may now sway in time with the music while leaning on an assistive device such as a cane, no longer able to bend fragile knees without it, but enjoying the dance anyway in an adjusted mode. In a situational and temporary case, a mother may be working on her laptop with one hand while holding her young child in her other arm. These days, her temporary disability can be supported by voice recognition and voice control software. In every case, designers should be sensitive to how crossmodal accommodations could affect the design of a product, place, or interface.

Accommodating crossmodal and accessible needs with assistive devices and services in different scenarios

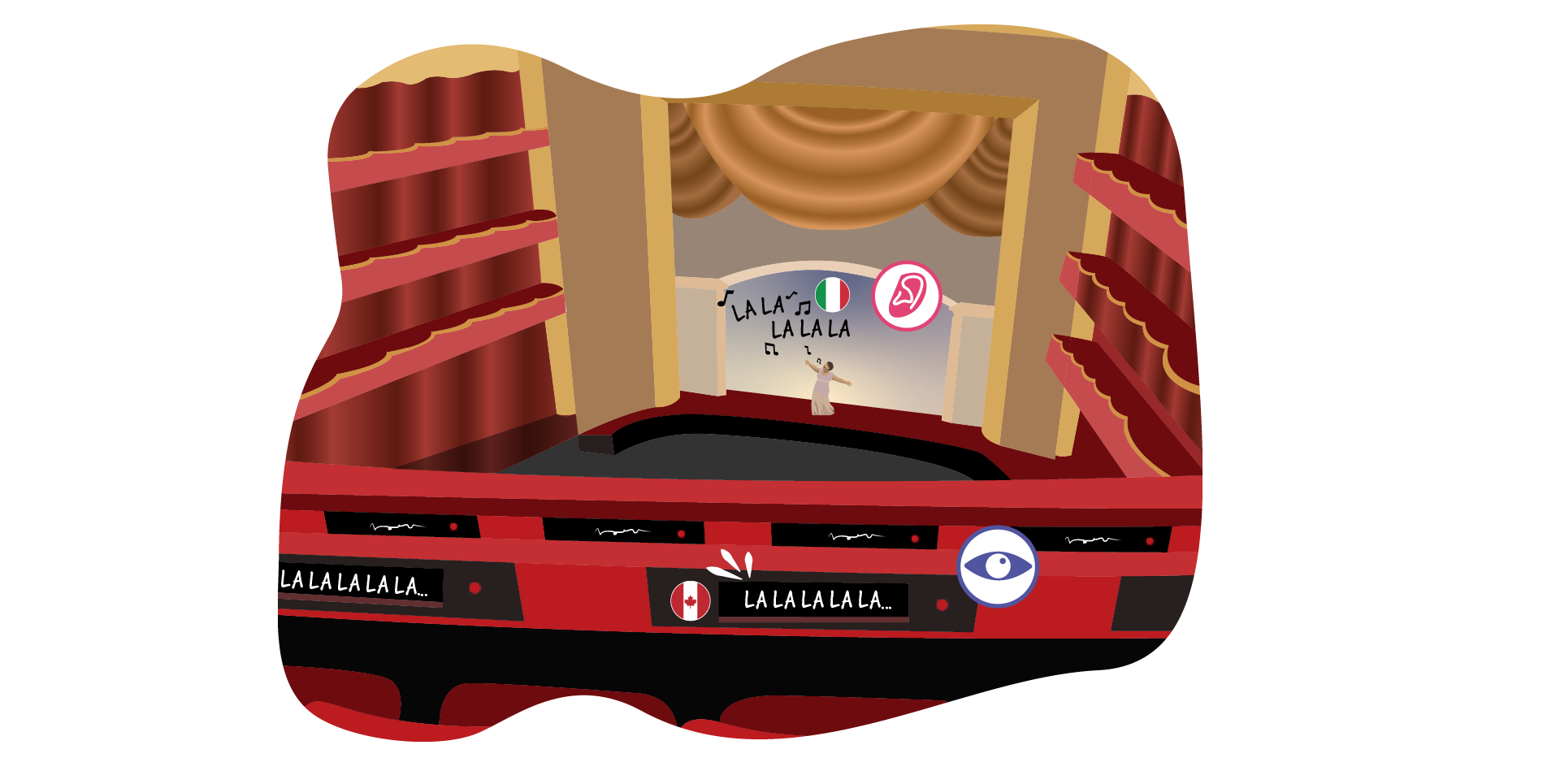

The designer’s key task is to clarify goals for using a product, service, or environment before determining which senses should come into play (Park & Alderman, 2018). Many experiences can be well supported or enhanced through multimodal and accessible design using devices, services, or extra environmental features. Consider the scenario of watching a movie or an opera: the option to select (tactile) a specific language and read the closed-captioned translation on a screen (visual) while listening to the scene (auditory) serves a wide audience of people with different sensory and cognitive needs. This substitution strategy addresses sensory and cognitive preferences and, in the end, may improve the experience for everyone, even those without disabilities (Park & Alderman, 2018).

A closed-captioning substitution strategy may improve everyone’s experience

Accessible Design accommodates crossmodal sensory augmentation

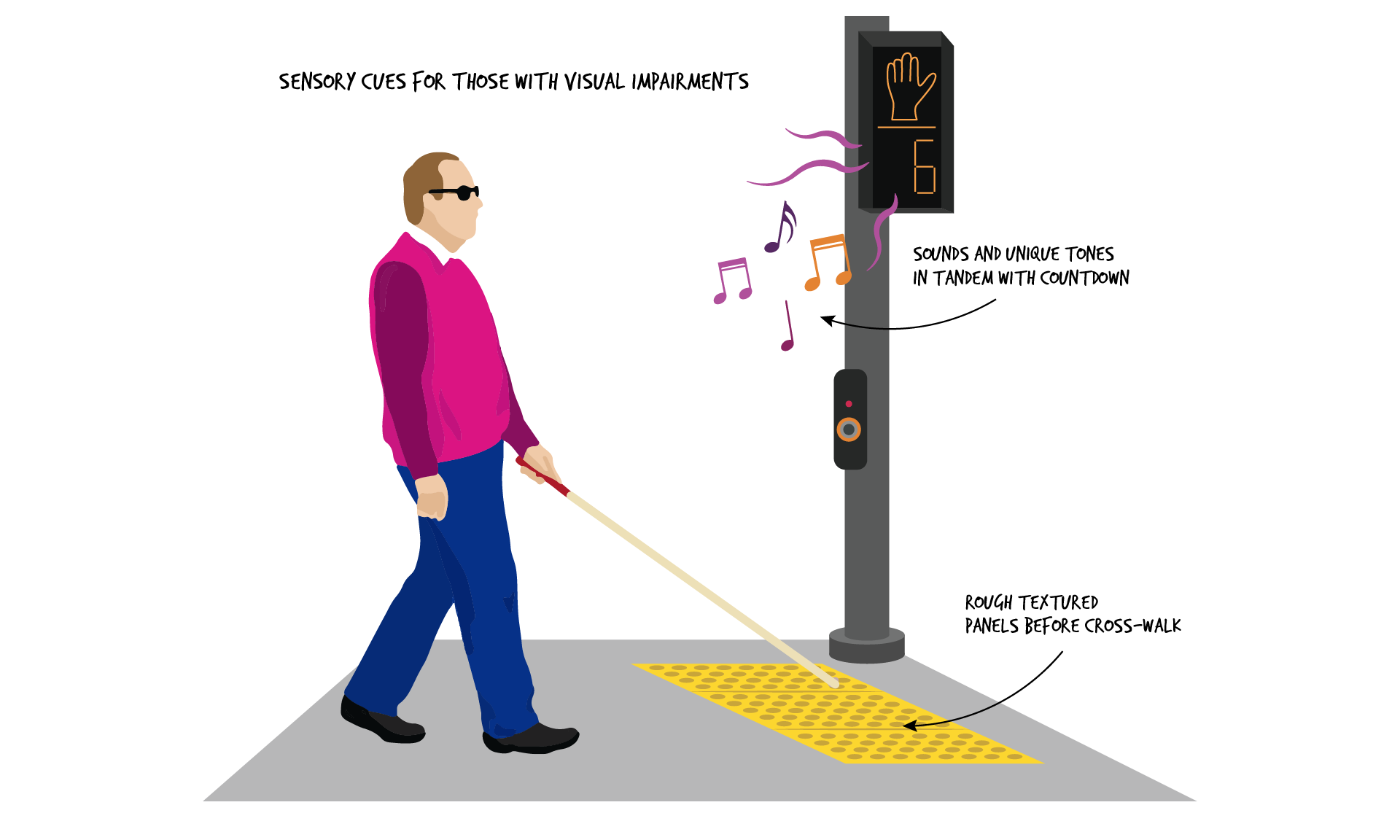

Have you ever noticed a person walking on the sidewalk while tapping with a white cane or walking with a guide dog? People with vision impairments often turn to other senses to replace or augment vision: feeling for the curb on the side of the road when crossing the street, listening to the sound of the traffic lights to know when and where to enter the intersection, or relying more heavily on their sense of smell to identify weather conditions, like rain. How often do you consider whether the rain will make the streets more slippery and affect your ability to cross when cars are coming? People with vision impairments may be more aware of the overall multisensory feedback in their surroundings than those without visual impairments. Since we all experience our environments differently, flexible and inclusive multimodal features should be designed to accommodate variations in our capabilities. Designers are trained to explore alternative ways of sensing and interacting with objects, services, and environments through iterative design development processes. By integrating crossmodal design features into everyday interactions with products, services, and environments, an exploratory design approach can contribute to a better experience for all.
