Hessian of a Function

Definition

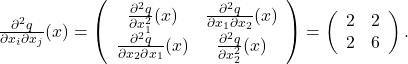

The Hessian of a twice-differentiable function $f : \mathbf{R}^n \to \mathbf{R}$ at a point $x \in \mathbf{dom}\, f$ is the matrix containing the second derivatives of the function at that point. That is, the Hessian is the $n \times n$ matrix $H$ with elements given by

$$H_{ij} = \frac{\partial^2 f}{\partial x_i \, \partial x_j}(x), \qquad 1 \le i, j \le n.$$

The Hessian of $f$ at $x$ is often denoted $\nabla^2 f(x)$.
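The definition translates directly into a numerical approximation. The sketch below assumes NumPy; the helper `numerical_hessian`, the step `h`, and the test function $f(x) = x_1^2 x_2 + e^{x_2}$ are hypothetical choices for illustration. It estimates each entry $H_{ij}$ with a central finite difference and compares the result with the exact Hessian of the test function.

```python
import numpy as np

def numerical_hessian(f, x, h=1e-4):
    """Approximate the Hessian of f: R^n -> R at x with central differences.

    Hypothetical helper for illustration; accuracy depends on the step h.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    H = np.empty((n, n))
    E = np.eye(n) * h  # row i is the scaled coordinate direction h * e_i
    for i in range(n):
        for j in range(n):
            # Central-difference estimate of d^2 f / (dx_i dx_j)
            H[i, j] = (
                f(x + E[i] + E[j])
                - f(x + E[i] - E[j])
                - f(x - E[i] + E[j])
                + f(x - E[i] - E[j])
            ) / (4 * h * h)
    return H

# Illustrative test function (an assumption): f(x) = x_1^2 x_2 + exp(x_2).
# Its exact Hessian is [[2 x_2, 2 x_1], [2 x_1, exp(x_2)]].
def f(x):
    return x[0] ** 2 * x[1] + np.exp(x[1])

x0 = np.array([1.0, 0.5])
H = numerical_hessian(f, x0)
H_exact = np.array([[2 * x0[1], 2 * x0[0]], [2 * x0[0], np.exp(x0[1])]])
print(np.round(H, 4))
print(np.round(H_exact, 4))
```

Finite differences only approximate second derivatives. A large step adds truncation error and a very small step amplifies rounding error, so `h` usually needs some tuning.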

The mixed second partial derivatives do not depend on the order in which the derivatives are taken. Hence, $H_{ij} = H_{ji}$ for every pair $(i, j)$. Thus, the Hessian is a symmetric matrix.
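For instance, take the function $f(x) = x_1^2 x_2 + e^{x_2}$ on $\mathbf{R}^2$ (an illustrative choice, not part of the original text). Differentiating in either order gives the same mixed partial, so its Hessian is symmetric:

$$
\frac{\partial}{\partial x_2}\left(\frac{\partial f}{\partial x_1}\right) = \frac{\partial}{\partial x_2}\left(2 x_1 x_2\right) = 2 x_1,
\qquad
\frac{\partial}{\partial x_1}\left(\frac{\partial f}{\partial x_2}\right) = \frac{\partial}{\partial x_1}\left(x_1^2 + e^{x_2}\right) = 2 x_1,
$$

$$
\nabla^2 f(x) = \begin{pmatrix} 2 x_2 & 2 x_1 \\ 2 x_1 & e^{x_2} \end{pmatrix}.
$$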

Examples

Hessian of a quadratic function

Consider the quadratic function

Its Hessian at a point $x$ is given by

For quadratic functions, the Hessian is a constant matrix, that is, it does not depend on the point at which it is evaluated.
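One way to see this is to write an arbitrary quadratic function in the generic form $q(x) = \tfrac{1}{2} x^T A x + b^T x + c$, with $A$ a symmetric $n \times n$ matrix, $b \in \mathbf{R}^n$ and $c \in \mathbf{R}$. This form is an assumption made here, not the particular example above. Differentiating once and then again gives

$$
\nabla q(x) = A x + b, \qquad \nabla^2 q(x) = A,
$$

and the Hessian $A$ does not involve $x$. If $A$ is not symmetric, the same computation gives $\nabla^2 q(x) = \tfrac{1}{2}\left(A + A^T\right)$.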

Hessian of the log-sum-exp function

Consider the “log-sum-exp” function $\mathrm{lse} : \mathbf{R}^2 \to \mathbf{R}$, with values

$$\mathrm{lse}(x) = \log\left(e^{x_1} + e^{x_2}\right).$$

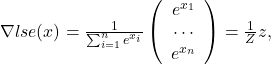

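The gradient of $\mathrm{lse}$ at $x$ is

$$\nabla \mathrm{lse}(x) = \frac{1}{z_1 + z_2} \begin{pmatrix} z_1 \\ z_2 \end{pmatrix},$$

where $z_i = e^{x_i}$, $i = 1, 2$. The Hessian is given by

$$\nabla^2 \mathrm{lse}(x) = \frac{1}{(z_1 + z_2)^2} \begin{pmatrix} z_1 z_2 & -z_1 z_2 \\ -z_1 z_2 & z_1 z_2 \end{pmatrix}.$$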
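A quick numerical sanity check of the two-variable formulas can be sketched as follows, assuming NumPy; the point `x0`, the step `h`, and the helper names `lse2_grad` and `lse2_hessian` are arbitrary, hypothetical choices. The closed-form Hessian is compared with a central-difference derivative of the closed-form gradient. Because $\mathrm{lse}(x + t(1, 1)) = \mathrm{lse}(x) + t$, the Hessian also maps $(1, 1)$ to zero, which shows up as rows summing to zero.

```python
import numpy as np

def lse2_grad(x):
    # Gradient of lse(x) = log(e^{x1} + e^{x2}): (z1, z2) / (z1 + z2), z_i = e^{x_i}
    z = np.exp(x)
    return z / z.sum()

def lse2_hessian(x):
    # Closed form: (1 / (z1 + z2)^2) * [[z1 z2, -z1 z2], [-z1 z2, z1 z2]]
    z1, z2 = np.exp(x)
    s = z1 + z2
    return (z1 * z2 / s**2) * np.array([[1.0, -1.0], [-1.0, 1.0]])

x0 = np.array([0.3, -1.2])
H = lse2_hessian(x0)

# Column j of the Hessian is the derivative of the gradient along x_j,
# approximated with a central difference of step h (an assumed value).
h = 1e-6
H_fd = np.column_stack([
    (lse2_grad(x0 + h * e) - lse2_grad(x0 - h * e)) / (2 * h)
    for e in np.eye(2)
])
print(H)
print(np.abs(H - H_fd).max())
```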

More generally, the Hessian of the function $\mathrm{lse} : \mathbf{R}^n \to \mathbf{R}$ with values

$$\mathrm{lse}(x) = \log\left(\sum_{i=1}^n e^{x_i}\right)$$

is obtained as follows.

- First, the gradient at a point $x$ is

  $$\nabla \mathrm{lse}(x) = \frac{1}{Z}\, z,$$

  where $z = (e^{x_1}, \ldots, e^{x_n})$ and $Z = \sum_{i=1}^n z_i$.

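- Next, the Hessian at a point $x$ is obtained by taking derivatives of each component of the gradient. If $g_i(x)$ is the $i$-th component, that is,

  $$g_i(x) = \frac{e^{x_i}}{\sum_{j=1}^n e^{x_j}},$$

  then

  $$\frac{\partial g_i}{\partial x_i}(x) = \frac{e^{x_i}}{\sum_{j=1}^n e^{x_j}} - \frac{e^{2 x_i}}{\left(\sum_{j=1}^n e^{x_j}\right)^2},$$

  and, for $j \ne i$:

  $$\frac{\partial g_i}{\partial x_j}(x) = -\frac{e^{x_i + x_j}}{\left(\sum_{k=1}^n e^{x_k}\right)^2}.$$

More compactly, with $z = (e^{x_1}, \ldots, e^{x_n})$ and $Z = \sum_{i=1}^n z_i$:

$$\nabla^2 \mathrm{lse}(x) = \frac{1}{Z^2}\left(Z \operatorname{diag}(z) - z z^T\right).$$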
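The compact formula can be evaluated directly. The sketch below assumes NumPy, and the function name `lse_hessian` is hypothetical. It uses the identity $(Z \operatorname{diag}(z) - z z^T)/Z^2 = \operatorname{diag}(p) - p p^T$ with $p = z / Z$, and computes $p$ after shifting $x$ by its maximum entry. This is a standard overflow safeguard that leaves $p$ unchanged; it is not part of the derivation above.

```python
import numpy as np

def lse_hessian(x):
    """Hessian of lse(x) = log(sum_i exp(x_i)) at x (hypothetical helper).

    Uses (Z diag(z) - z z^T) / Z^2 = diag(p) - p p^T with p = z / Z.
    Subtracting max(x) before exponentiating leaves p unchanged but
    avoids overflow for large entries of x.
    """
    x = np.asarray(x, dtype=float)
    w = np.exp(x - x.max())
    p = w / w.sum()  # p = z / Z, which is also the gradient of lse
    return np.diag(p) - np.outer(p, p)

x = np.array([0.5, -1.0, 2.0])
H = lse_hessian(x)

# Compare with the unshifted compact formula (safe for moderate x).
z = np.exp(x)
Z = z.sum()
H_direct = (Z * np.diag(z) - np.outer(z, z)) / Z**2
print(np.abs(H - H_direct).max())

# exp(1000) overflows in float64, but the shifted computation stays finite.
H_big = lse_hessian([1000.0, 999.0, 0.0])
print(H_big)
```

Writing the result as $\operatorname{diag}(p) - p p^T$ also makes it easy to confirm numerically that the matrix is symmetric and that its rows sum to zero.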