Can We See It?

Introduction

One of the interesting things about trust is that you can’t see it. I like to compare it to the wind, or to light. You can only see these things by the effect that they have: trees bend (and if they grow in places where the prevailing wind is pretty constant, they grow sideways). You don’t see the light traveling through the air, or a vacuum for that matter. You only see light when it hits something–the ground, a leaf, particles of smoke. Trust is a lot like that.

You can’t explicitly see trust between two people. You can’t see trust between a person and a computer. You can’t see trust between a person and a dog. What you can see is the effect that trust has on the agents in the interaction. There is a tangible difference between a scene with two people who trust each other talking to one another, over one where the people don’t trust each other. If you are in the same room you can feel the difference. You can feel when you are trusted by a dog, and the dog feels it back.

Look at this picture:

You can see the rainbow (I had to stop to take the picture and it’s a little blurry, but I had never seen a rainbow so red before). What’s a rainbow? Light, refracted through water particles in the sky. Without the water you wouldn’t see the light. Without trust, you don’t see much light either.

While I was researching trust a long time ago I faced a quandary: how to represent it in a way that computers could actually use it. It occurred to me that numbers were probably a good thing. I was taken with Deutsch’s work, which discussed subjective probability. What if you could represent trust that way, I wondered. Simple answer? Probably – after all, look back at Gambetta’s definition.

More complicated answer? Maybe not. Trust isn’t a probabilistic notion. It’s not like the people in a trusting relationship get up every morning and flip a coin every time they want to make a move. So whilst there’s a probability that someone might do something, it’s not up to chance. Let’s put it another way: when I’m in a relationship and considering someone being able to do something, whilst I might say that the person is “more likely than not” to do that thing, it’s not like they roll a d100[1] and do it one day and not the next because the d100 rolled differently.

Bear this in mind. It’s important, and we will come back to it.

The Continuum of Trust

So, using a ‘sort of’ probability is a relatively straightforward way to represent the phenomenon until we get all bogged down with the real way it works. For instance, you could represent trust on a scale from, say, 0 to 1, where 0 is no trust and 1 is full trust. More on that one later because, well, full trust isn’t really trust. You’ll see.

I worked with that for quite some time until it occurred to me that there was something I couldn’t account for. What, I wondered, did distrust look like? Gambetta sees it as symmetrical to trust in his definition. Others (McKnight especially) see it as distinct from trust.

You see, distrust and trust are related but not the same. Whilst you might be thinking to yourself “Whatever. Stop sweating the small stuff. Make 0 to 0.5 distrust and 0.5 to 1 trust” I respectfully suggest that it doesn’t work that way. Distrust is distrust, it’s not a lesser value of trust.

But the two are related.

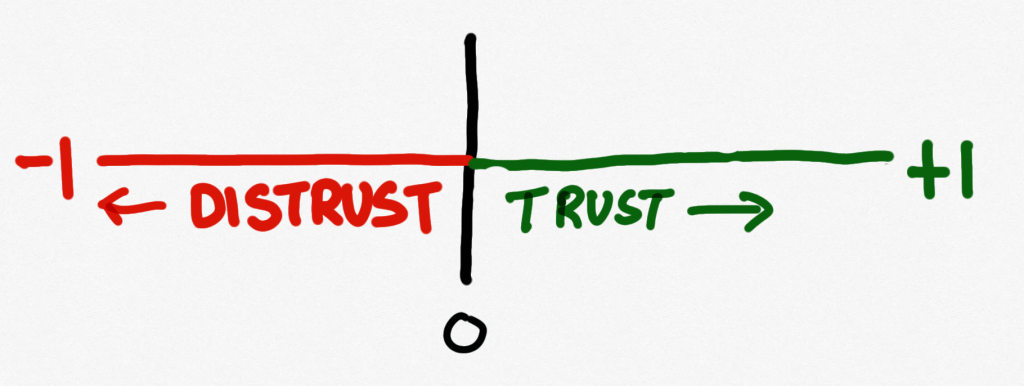

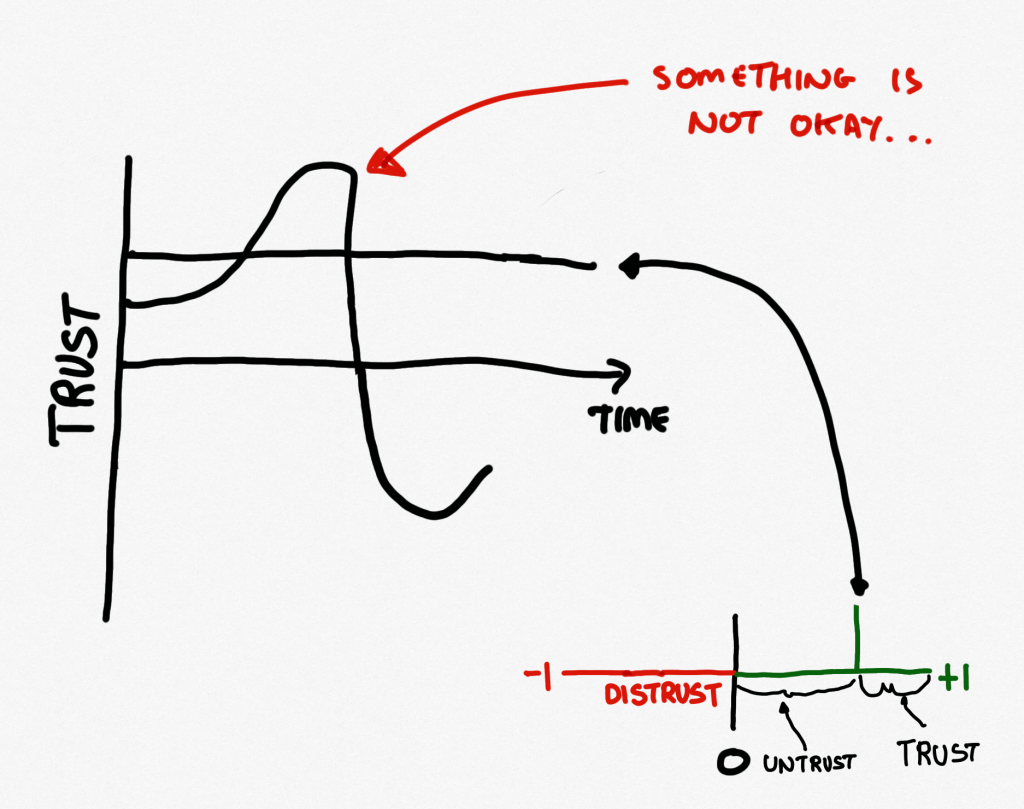

This is important. It took me a long time to work around being comfortable with keeping trust and distrust on the same scale, but I reasoned like this: The two things are related, and you can move from trust to distrust. You can even move from distrust to trust (it’s harder, but you can do it). So whilst they are not the same they are connected. In the sense of a computational scale, I eventually settled on –1 to 0 as the range of distrust and 0 to 1 as trust. Yes, 0 is on both. In effect, it’s saying that we haven’t got the information we need to make a judgment one way or another. Anyway, this separates the two yet keeps them related; it allowed me to think what I thought were interesting things about how the whole worked.

So that leaves us with a really neat continuum for “trust” with a range between –1 and +1. There’s all kinds of stuff you can do with that. Not least, you can show it in a picture. As you might have spotted by now, I like pictures. Not because they say a thousand words, but because they can say the same thing differently. Looking at a problem from a different direction is the most valuable thing you can do with any problem (apart, of course, from solving it!).
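As a minimal sketch of that continuum in code (the class name `TrustValue` and the label strings are illustrative assumptions, not anything taken from the original work), a trust value can be stored as a number that is checked against the –1 to +1 range, with 0 read as "no information either way":

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrustValue:
    """A point on the continuum: -1 (distrust end) to +1 (trust end)."""

    value: float

    def __post_init__(self) -> None:
        # Reject anything that falls off either end of the continuum.
        if not -1.0 <= self.value <= 1.0:
            raise ValueError(f"trust must lie in [-1, 1], got {self.value}")

    def side(self) -> str:
        """Say which side of zero the value sits on."""
        if self.value > 0:
            return "trust"
        if self.value < 0:
            return "distrust"
        # Zero belongs to both ranges: not enough information to judge.
        return "no information"
```

Keeping both ranges in one number is what lets a value slide from trust into distrust (and, with more effort, back again) without changing representation.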

Now I could make all kinds of nice lines to show what it looks like, but the spirit of this book is a little different from that, so Figure 4.2 shows what that looks like.

Pretty good, eh? Now we have a really neat tool to be able to explore trust. Don’t believe me? It gets better, as you’ll see. Firstly, it allowed me to think about two problems I was having with trust. The first is that you can’t see it. We still can’t see it, but we can visualize it, and that’s good.

The second was something I alluded to earlier. Remember when I started getting D&D geek and talking about a d100? Right, I said there was something really important there. This is where we tease that out.

You see, when we decide to trust someone to do something, we don’t think in terms of probabilities. We trust them. That’s it. When we decide to trust the babysitter, we don’t run back from the movie theatre every minute to see if the probability of their behaving properly has changed. When we trust the brain surgeon to do the operation, we don’t keep getting off the operating table to see if they are doing it right.

Let me digress a moment. One of the more fascinating things for me whilst at high school[2] was when our physics teacher, Mrs. Pearce, showed us how light behaves when you put a laser beam through prisms and through gratings (very, very, very thin slits in a piece of metal). The results were fantastic for little me (okay, I was 17): light behaved in different ways depending on how you looked at it.

Mind blown.

Now, it was a long time ago and I’m no quantum physicist (indeed, no physicist at all, as it turns out), but here’s what I got out of it. The phenomenon is called wave-particle duality, and it goes something like this: photons (and electrons and other elementary particles) exhibit the properties of both waves and particles. Light, which is made up of photons, is a wave and a bunch of particles all at the same time. As a really simple explanation for a really complex phenomenon, that worked for me.

I like simple explanations for complex things. As you will see later in the book, I practically demand them.

Excuse the digression, but it’s like this: trust behaves like light. Not just because you can’t see it until it interacts with something. It behaves like light because you can talk about it in two distinct ways. The continuum helped me to figure this one out better.

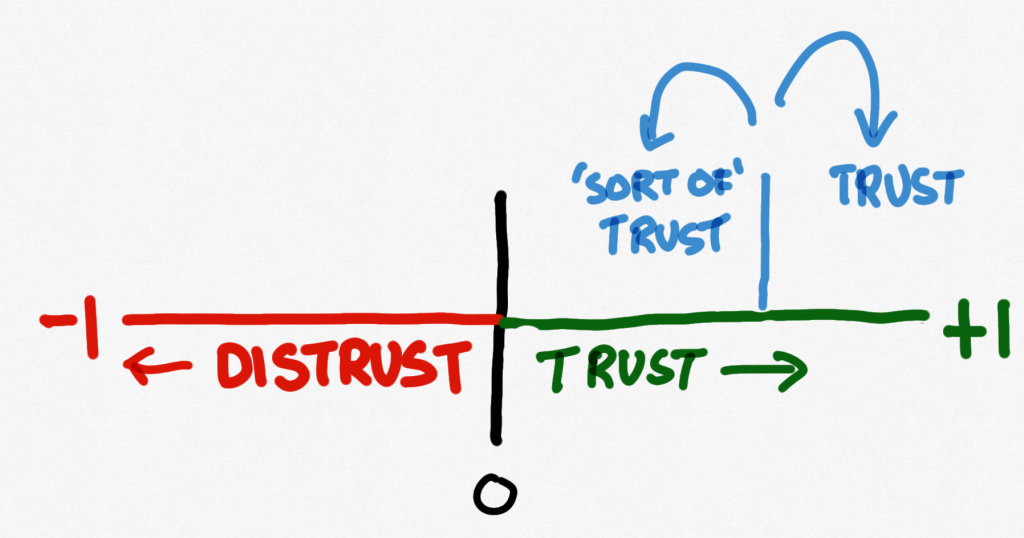

In the first instance, we reason with and about trust, trying to work out that ‘probability’ that the other person or thing will do what they say they are going to. But when we make a trusting decision, this reasoning is no longer valid. When we decide to trust someone in a specific context, trust isn’t probabilistic, if it ever was: it’s binary. We either do, or we don’t.

It’s the same trust. It just behaves differently.

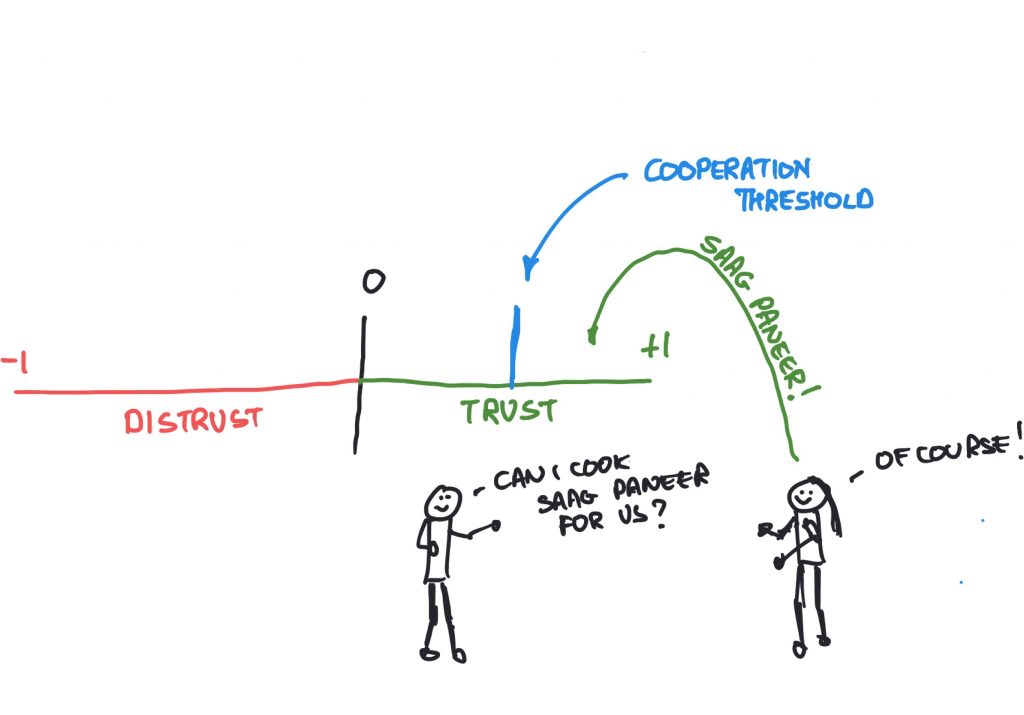

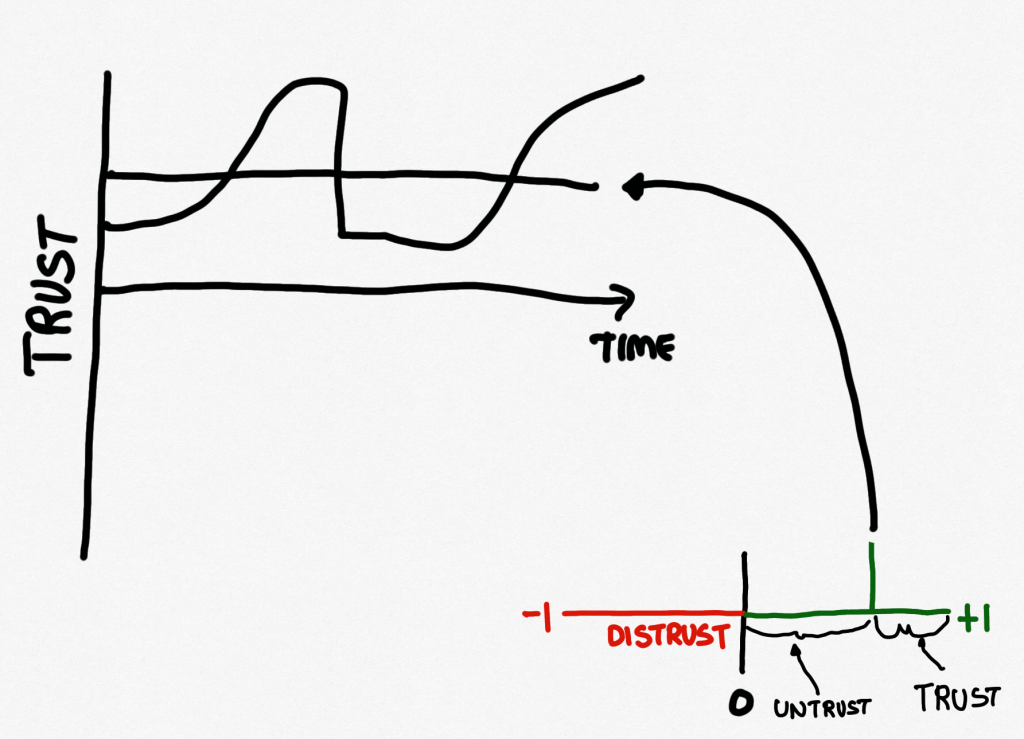

How can we show that on a continuum? Well, it’s like this: you can see trust as threshold-based. This means that when it reaches a certain value (if we put values on it) it crosses a threshold into the I-trust-you relationship from the I-sort-of-trust-you relationship. You can see the picture in Figure 4.3.

See how that works? Excellent!
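The two behaviours can be put side by side in a few lines. This is only a sketch: the function name `decide` is made up for illustration, and treating a value that sits exactly on the threshold as "not yet trusted" is an assumption, since the text only says the value has to cross it.

```python
def decide(trust: float, threshold: float) -> bool:
    """Turn a graded trust value into a binary trusting decision.

    The reasoning about trust is continuous (the `trust` number), but the
    decision is yes or no. Assumption: the value must be strictly above
    the threshold to count as "I trust you".
    """
    return trust > threshold
```

For example, with a threshold of 0.7 a trust value of 0.69 and one of 0.1 both give the same answer (no), even though the reasoning behind them is very different. That is the "same trust, different behaviour" point.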

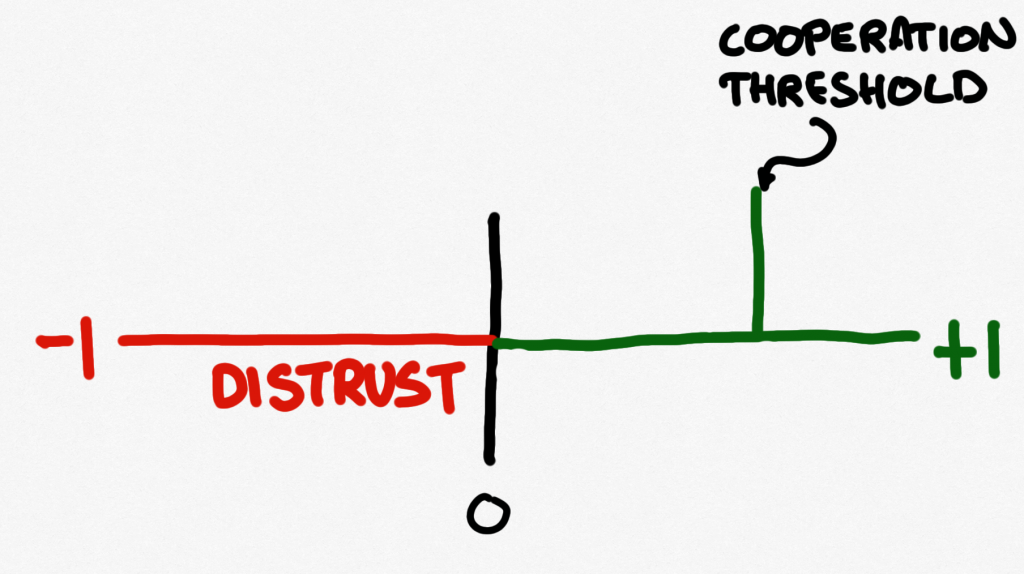

Cooperation Threshold

Now, I was back then quite concerned with agents working together (basically, in a distributed artificial intelligence sense, although being a proud European I called it multi-agent systems. If you’re not sure what either of those is, DuckDuckGo is always your friend!). This, I decided, was to be called “cooperation” and so that threshold became forever known to me as the “cooperation threshold” and so, the picture becomes what you see in Figure 4.4.

Above the cooperation threshold, you can say, “I trust you” (and the ‘what for’ is implied, to be honest). Below it, all sorts of really interesting things happen, which is wonderful.

Take a look at that picture in Figure 4.4 again. What do you see? Below the cooperation threshold we now have two distinct things (actually, we have three, but I will get to that). We have “sort of” trust, and we have distrust. At the time, that was fine – there’s only so much you can do for a PhD and to be honest, starting a new field is pretty cool, even though I didn’t know that at the time. I didn’t completely leave it though, as you’ll see in a wee while. In fact, I saw it as an ideal situation for thinking about things like system trust, the law, contracts, trustless systems, building trust and so on. We’ll get to more of this later in this chapter. Let’s not get sidetracked too much.

I should mention that as I was thinking about the continuum I was also thinking about how to calculate all this stuff: cooperation threshold, trust in context, all that kind of thing. It was a fun time. In the next chapter I’ll show you what I came up with and pair it with the continuum more.

Back to that gap. You know, the one that hangs out between zero and the cooperation threshold. It doesn’t seem right to say that the other person isn’t trusted in this context. After all, there is a positive amount of trust, yes? So it’s, like, trust, yes? That’s what I thought too.

And so, much later, a colleague, Mark Dibben, and I tried to tease out what this all meant. When I say “much later,” I mean around 11 years later, in a paper at the iTrust conference (Marsh & Dibben, 2005). We decided to tease out that little gap and at the same time think about how the language around trust might be used to better define what the continuum was trying to show. I mean, definitely unfinished business.

We tackled distrust first because we had for some time been troubled by the use of “mistrust” and “distrust” as synonymous. After all, firstly, why have two words for the same thing? And secondly, possibly more importantly, that is not how the “mis” and “dis” prefixes work in other words.

We took our inspiration from information. When you consider that word things start to make much more sense. When we put a “dis” at the front of it, we get “disinformation”, which can be defined as information someone gives to you in order to make you believe something that is not true. As I write, there’s been a lot of that done recently by people who should know better but clearly don’t care. Regardless, disinformation is a purposeful thing. However, if we put a “mis” in front of information we get “misinformation” which is defined more as mistaken information being passed around, sort of like a nasty meme. To put it another way, you can look at misinformation as stuff people believe and tell others with the best of intentions, and not to mislead them (and there’s another interesting “mis” word).

So, disinformation is purposeful, misinformation is mistaken, an accident if you like. Disinformation clearly leads to misinformation – in fact, you might say that misinformation is the purpose of disinformation.

Back to trust. We decided that this was a really nice way for us to think about trust. Distrust, which we really already have, is an active decision not to trust someone, in fact to expect them to behave against our best interests. Fair enough, that’s pretty much where we already were. However, this allows us to look at mistrust as a mistaken decision to trust or not. In other words, it is something that exists across the whole continuum.

To explain a little more, think about Bob and Chuck, our poor, overworked security personas. Chuck is entirely naughty and untrustworthy, and will always do bad things. Bob does not know this and decides to trust Chuck to post a letter, say. Chuck happily opens it up, reads the content, steals the money that was in it and throws the rest into a ditch. The end. In this instance, we would say that Bob made a mistrustful decision: it was a mistake. But it was still trust.

Let’s now take Bob and Derek. Derek is everyone’s idea of a wonderful guy, entirely trustworthy and would do anything for anyone. However, Bob takes a dislike to him immediately (I don’t know why, perhaps Derek has nicer teeth) and decides to distrust Derek, never giving him a chance to prove his trustworthiness. Guess what? In this instance, Bob also made a mistrustful decision: it was a mistake, but in this case it was to distrust instead of trust.

This frees up distrust to do what it was supposed to do in the first place: represent that belief about someone we perceive as not having our best interests at heart. So Bob definitely distrusts Derek, but this is a mistake, which means he mistrusts Derek too. Similarly Bob trusts Chuck, but this is also a mistake, which means he mistrusts Chuck.

It takes a while, give it time.
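If it helps, the Bob, Chuck and Derek stories can be written down as a tiny function. It is a sketch under one big assumption: that we somehow know, with hindsight, whether the other party really was trustworthy (Bob does not know this when he decides). The function and parameter names are hypothetical.

```python
def describe_decision(decided_to_trust: bool, other_is_trustworthy: bool) -> str:
    """Label a decision as trust or distrust, and flag it if it was a mistake.

    `other_is_trustworthy` is hindsight knowledge, assumed here purely for
    illustration; the truster does not have it at decision time.
    """
    decision = "trust" if decided_to_trust else "distrust"
    # Mistrust is not a third kind of decision: it is either decision
    # turning out to be mistaken.
    mistaken = decided_to_trust != other_is_trustworthy
    return f"{decision} (mistrust)" if mistaken else decision
```

Bob trusting Chuck is `describe_decision(True, False)`, which gives `"trust (mistrust)"`; Bob distrusting Derek is `describe_decision(False, True)`, which gives `"distrust (mistrust)"`.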

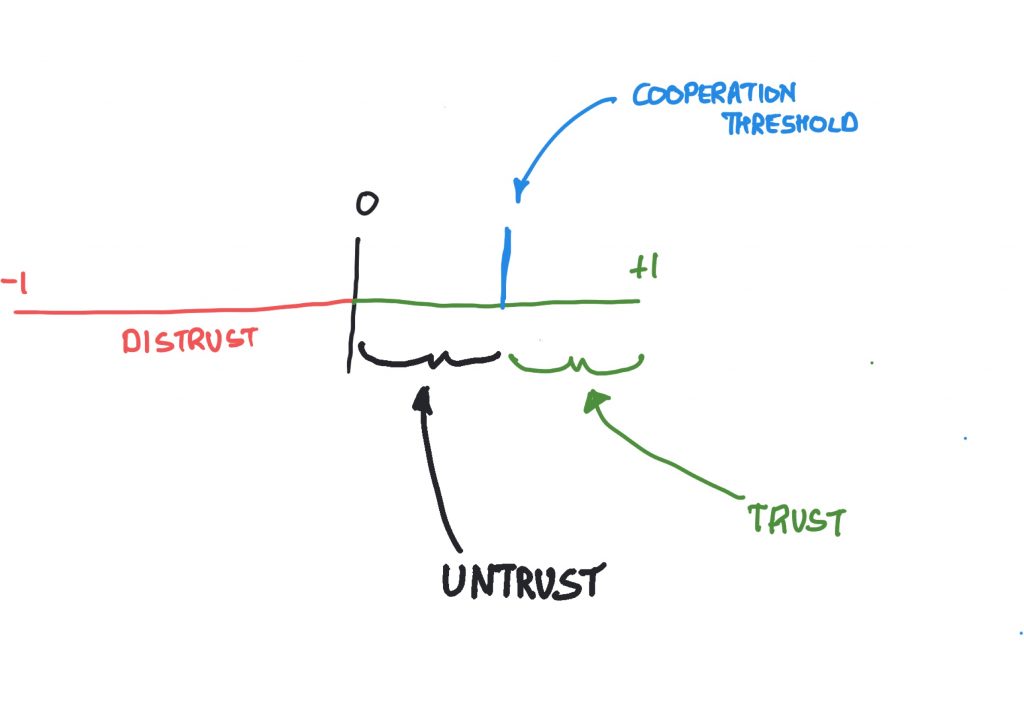

Alright, so now we can look at our continuum again! Guess what? Nothing changed! (Except that anyplace on it could be a mistrustful decision). That’s a bit boring and it really doesn’t answer the question: what’s that bit between zero and the cooperation threshold?

Fortunately we thought of that too. There’s a clue earlier. I called Chuck untrustworthy which means that it’s probably not a good idea for Bob to put his trust in Chuck. That prefix, “un” means something.

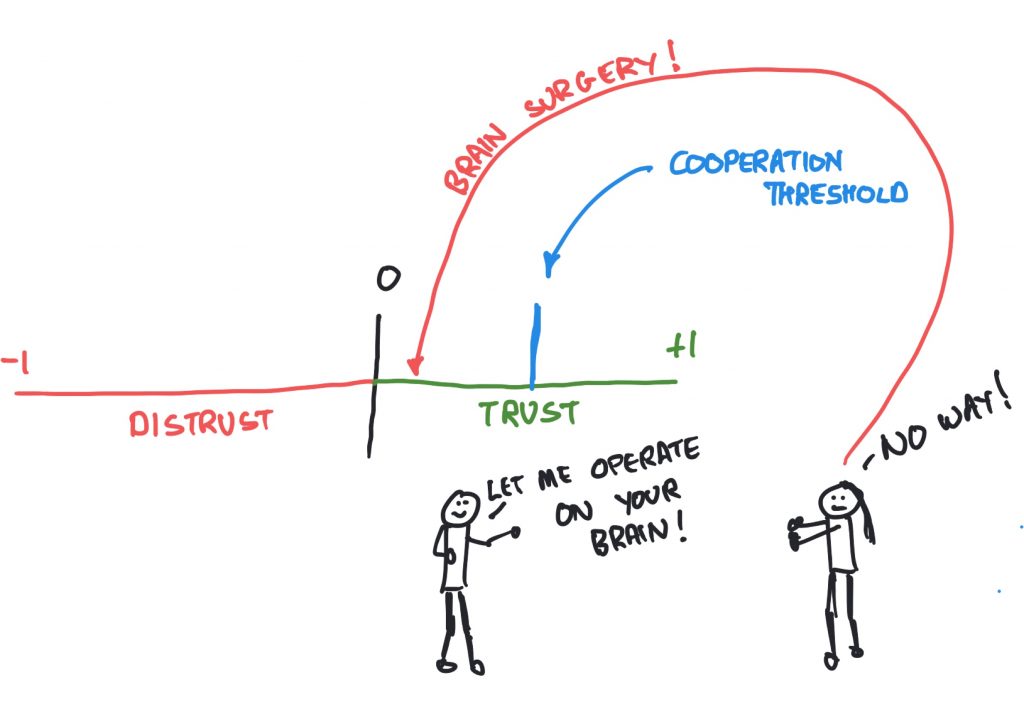

If we go back to our contextual thoughts of trust, we start thinking about this: Steve can’t do brain surgery, but he can cook a mean saag paneer[3]. In the context of doing brain surgery, I’m untrustworthy[4]. In the context of cooking saag paneer, I’m trustworthy. Now, if you were to ask, say, my partner Patricia if she trusts me there would (I hope!) be a positive answer. But she still wouldn’t trust me to do that brain surgery, right? Saag paneer now, oh sure, I can make that any time.

There’s a couple of really nice pictures around here that show what I mean. They’re Figures 4.5 and 4.6. I’m sure you will find them.

Untrust

Alright. See how Patricia still trusts me in the brain surgery thing (I probably wouldn’t but there you are, all analogies break down somewhere. Mine is people messing about with my brain). Right, there’s trust, but certainly not enough to allow what I’m asking in that context. That is the ‘sort of’ trust which Mark and I decided to call “untrust,” taking a cue from untrustworthy, you see? And Figure 4.7 shows you where it lies.
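Here is a rough sketch of how the zones on the continuum could be told apart in code. The function name, the label strings and all the numbers in the example are assumptions made for illustration; so is the choice to require a value strictly above the threshold for trust.

```python
def zone(trust: float, cooperation_threshold: float) -> str:
    """Name the part of the continuum a trust value falls into.

    Assumes trust lies in [-1, 1] and the cooperation threshold for the
    context in question is positive.
    """
    if not -1.0 <= trust <= 1.0:
        raise ValueError(f"trust must lie in [-1, 1], got {trust}")
    if trust < 0:
        return "distrust"
    if trust == 0:
        return "no information"
    if trust > cooperation_threshold:
        return "trust"
    # Positive trust, but not enough for this context.
    return "untrust"
```

With a hypothetical trust value of 0.6 for Steve, `zone(0.6, 0.3)` (a made-up threshold for cooking saag paneer) gives `"trust"`, whilst `zone(0.6, 0.99)` (a made-up threshold for brain surgery) gives `"untrust"`. The trust is the same; the context moves the threshold.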

And right now, we can bring that continuum back into play with a nice new shiny word, which is very exciting.

Why is it exciting? Because it’s always nice when things work out.

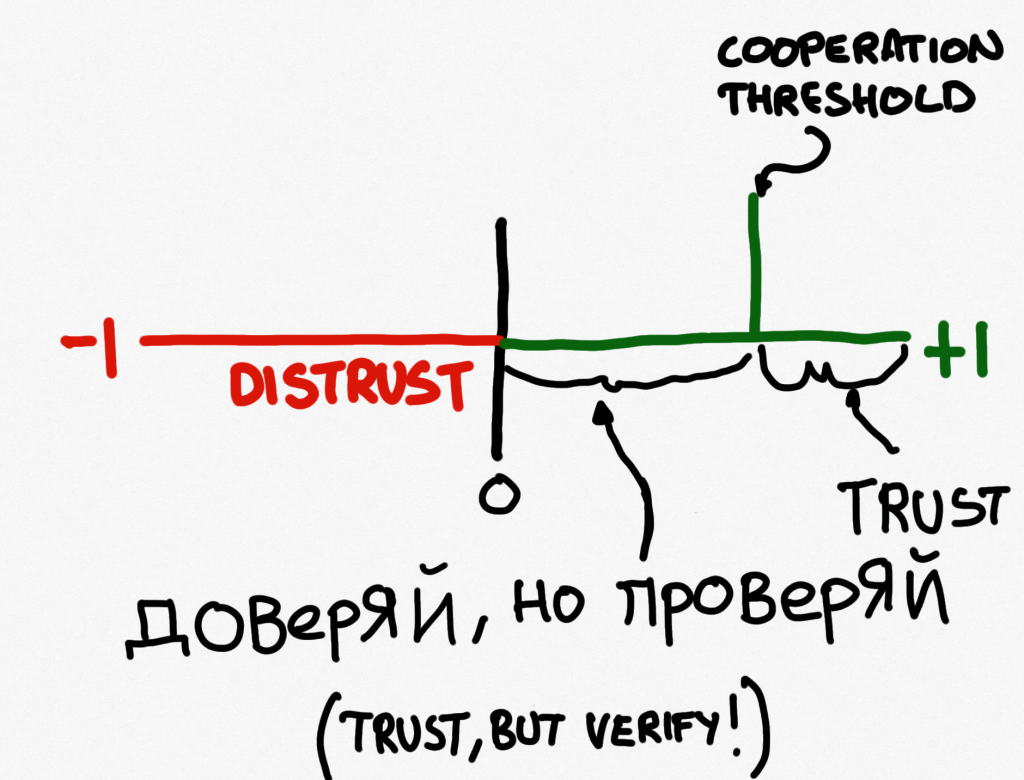

Let’s go back to 1985, or thereabouts. This was around the same time I was watching laser beams whizz around the physics lab, but the world was probably much more interested in what Ronald Reagan and Mikhail Gorbachev were talking about: nuclear disarmament (I like the picture in Figure 4.8… If people can smile properly at each other, we’re getting somewhere).

Now these discussions went on for a while and during them, also at the signing, President Reagan used the words, “trust, but verify”. President Reagan learned the phrase from the American scholar Suzanne Massie. He used it many times. I know this because growing up in the 1980s you heard a lot of Reagan (and Thatcher). It comes from a rhyming Russian proverb. This probably reveals a lot about the Russian psyche.

I had, at the time I was doing my PhD research, been troubled about this for several years. Essentially, “trust, but verify” is the equivalent of popping over to the house every so often to see if the babysitter is drinking the single malt.

In sum: it’s not trust.

I was gratified to hear that President Gorbachev (who, I confess, is something of a hero of mine) in response brought up Emerson.

Let’s put it like this: if you have to verify the behaviour of the other person (or evil empire) then you simply don’t have the trust you need to get the job done. Try looking elsewhere.

At the point of figuring this out, my doubts were not laid to rest, but they were at least put on a continuum. This, too, you can use the continuum drawings for (Figure 4.9).

To drop into politics for a short paragraph, since then, various politicians have co-opted the phrase, most recently Pompeo, then Secretary of State for the USA, who used “distrust and verify.” I do not subscribe to this point of view[5]. But then, I never really subscribed to the “trust, but verify” one either. Oddly, the world seems a much less trusting place than it did in the 1980s; yet at that time, trust seemed in short supply.

We are now in a pretty nice position to be able to talk about the behaviour of trust.

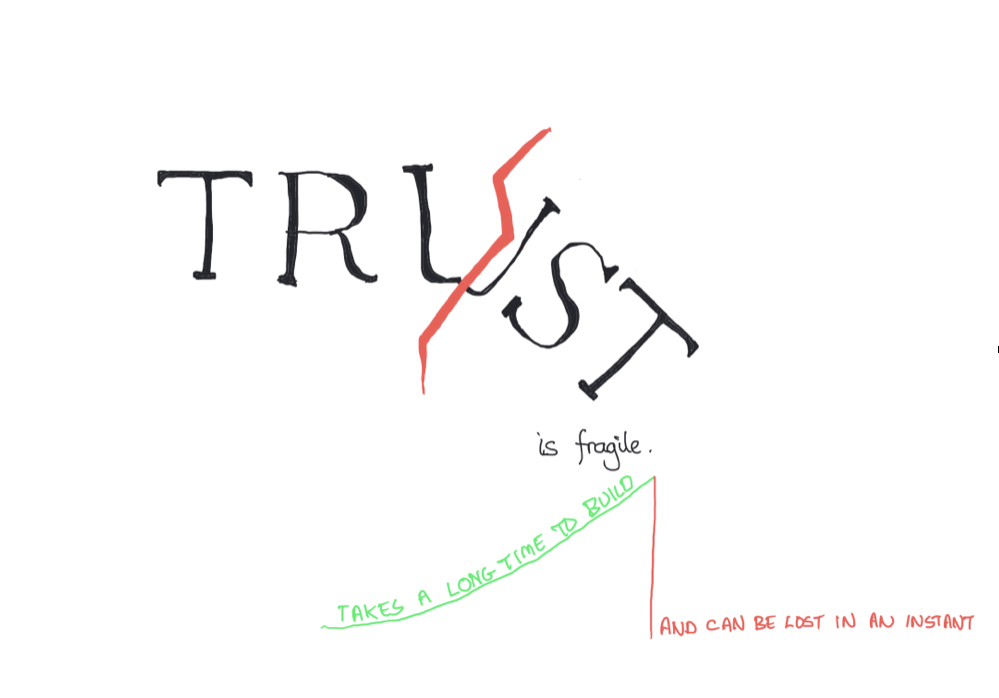

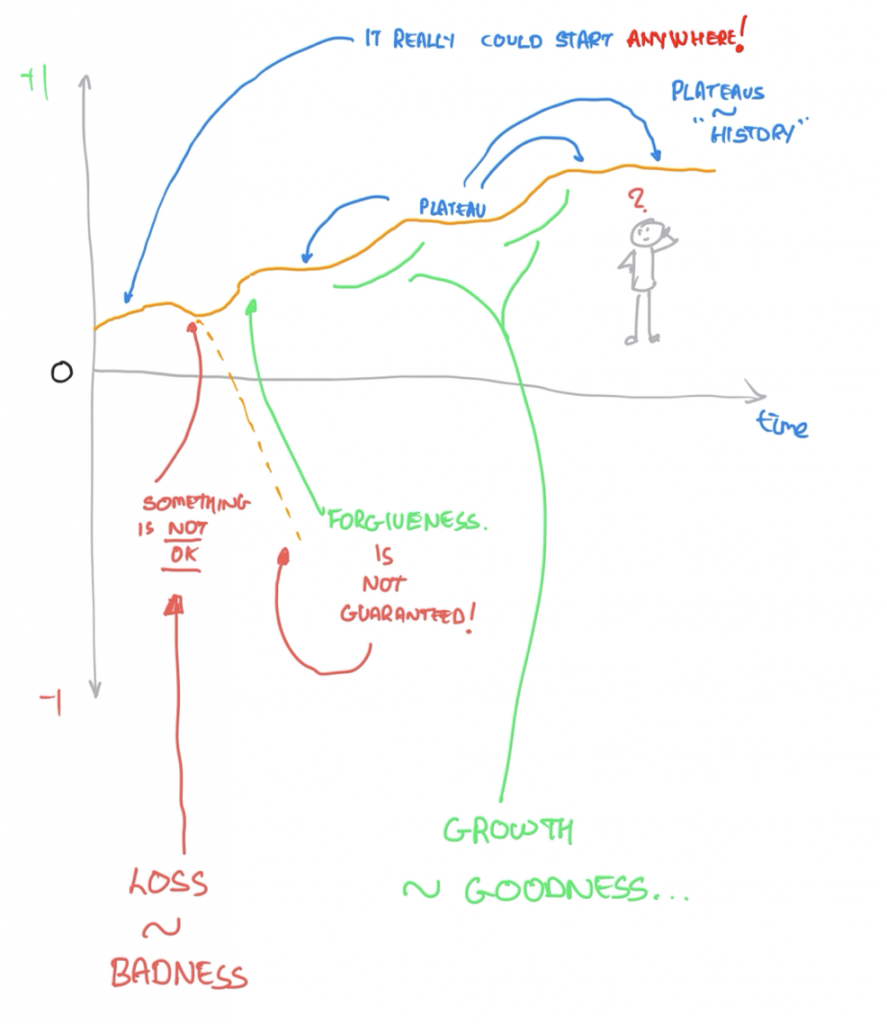

Generally speaking, it’s accepted that trust is fragile–it goes up (slowly) and down (fast). Certainly this appears to be the case, although as Lewicki & Bunker note (1995), in a relationship there may well be plateaus which are reached and which serve other purposes, which we’ll look at in a moment.

In order to illustrate this, we can turn our continuum on its side and add another dimension, that of time. It kind of looks like what you see in Figure 4.11.

You can see the continuum is still there; it’s just turned sideways to become the y-axis on this graph, and the x-axis as ever is time. A quick look at that graph shows, in this instance, trust growing from some point in time based on, presumably, good behaviour on the part of the trustee. However, at the “something is not okay” point in time, the trustee “betrays” the trust in some way, which means that the truster loses all that nice trust and it heads into the distrust territory. At this point, it may well be hard for the truster to forgive what just happened.

This is fragility.

Remember that “forgiveness” word. It will come in handy shortly.
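To make the "up slowly, down fast" shape concrete, here is a small simulation. It is a sketch only: the fixed step sizes (`gain` and `loss`) and the function names are assumptions chosen to make the asymmetry visible, not values from any model in this book.

```python
def clamp(value: float) -> float:
    """Keep a trust value on the -1 to +1 continuum."""
    return max(-1.0, min(1.0, value))


def step(trust: float, behaved_well: bool,
         gain: float = 0.05, loss: float = 0.6) -> float:
    """One time step: a small rise for good behaviour, a big fall for bad."""
    return clamp((trust + gain) if behaved_well else (trust - loss))


def run(behaviour: list[bool], start: float = 0.0) -> list[float]:
    """Trust over time, one entry per time step (including the start)."""
    history = [start]
    for behaved_well in behaviour:
        history.append(step(history[-1], behaved_well))
    return history
```

Calling `run([True] * 10 + [False])` gives ten small steps upward followed by a single drop that wipes out all of the gain and lands below zero, in distrust territory.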

Let’s explore that a bit further now that we have a more interesting graph, with time no less, to build on. First of all, let’s talk about plateaus.

Lewicki and Bunker (1994) in their work distinguish between calculus-based, knowledge-based, and identification-based trust (after Shapiro, 1992). In the first, the trust is based on something like a cost-benefit analysis — what would it cost to break the relationship versus what is gained from keeping it, for instance. They also see it as deterrence-based because “punishment for non consistency is more likely to produce this type of trust than rewards or incentives derived from maintaining the trust” (Lewicki and Bunker, 1994, Page 146). Be that as it may, knowledge-based trust follows when predictability is established. Identification-based trust is established when the desires and intentions of the other person are internalized[6]. As you might expect, people probably have lots of calculus-based, many knowledge-based and just a few Identification-Based relationships.

Which reminds me of wa, or harmony, in Japanese society (one that is based on mutual trust, but with some special aspects where foreigners are concerned), which I first encountered in my PhD work[7] and which was written about by Yutaka Yamamoto in 1990. It goes a bit like this:

Context 1: The presumption of general mutual basic trust is beyond reasonable doubt. The relations between members of close knit families, between intimate friends, and between lovers are examples.

Context 2: The presumption of general mutual basic trust is reasonable, but it is not beyond reasonable doubt. Examples are relationships between neighbours and casual acquaintances.

Context 3: The presumption of (general) mutual basic trust is unreasonable. A meeting with a person for the first time is an example; the first meeting between a Japanese and a foreigner is, for the former, a paradigm.

If you think about it, things like context one match Lewicki and Bunker’s and Shapiro’s knowledge-based trust, whilst context two matches identification-based trust and context three more closely matches calculus-based trust. It probably goes without saying that there are subtle differences here, but the similarities are striking. It also likely goes without saying that, as you will see in the chapter on calculations in this book, it would seem to be that all I talk about really is calculus-based trust. It’s always been a little bit of a concern to me that this would seem to be so. I want my artificial agents to be able to get into deeper relationships, if I’m honest. I’ll talk about that a bit more here.

This also brings to mind Francis Fukuyama’s book – Trust: The Social Virtues and the Creation of Prosperity. Fukuyama wrote this in 1996 (so, after Yamamoto). In it, Fukuyama combines culture and economics and suggests that countries with high social trust can build enough social capital (told you it was economics) to create successful large-scale business organizations. I’m not convinced that this is so, but the countries that displayed such trust included Japan and Germany, culturally strong mutual trust countries you might say. Lower mutual trust (or more rigidly defined) countries like Italy and France don’t do so well on the social capital front (although they are highly family-oriented countries). Quite interestingly, he also identified (in 1996) the growth in individualism that would cause problems in the USA.

Okay, back to the continuum for a moment. There’s not actually much of a difference between these different kinds of trust and the continuum except that I don’t really label the different trusts because the continuum takes care of this.

One interesting thing that Lewicki and Bunker identified is that, if a person is in one level (say Identification-Based) then there may be different plateaus within that level. Meanwhile, if trust is violated at some level or sub-level, it is not necessarily completely destroyed but may, depending on the situation, be preserved yet lessened — for instance to the next level down. This is because of the large amount of personal capital and effort that is used in the creation of the trust relationship in the first place, not to mention the loss of things like ‘face’ or self-esteem if a high level trust (like Identification-Based Trust) is betrayed and seen to be a mistake, since no-one likes to admit such costly mistakes[8].

Figure 4.12 shows a possible example using a graph for trust with plateaus… Note that ‘good’ behaviour results in a slow growth to a plateau and bad behaviour results in a drop to the next plateau down, or possibly worse.

In all of these different situations, the amount of trust increase is relative to the ‘goodness’ of what is done as well as the trusting disposition of the truster (which we will come to later). The decrease in trust is relative to the ‘badness’ of what was done (likewise, we will come to this later).
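The "drop to the next plateau down" idea can be sketched like this. The plateau values are invented for illustration, and so is the fallback when there is no plateau left to land on (here, trust falls to zero, or stays where it is if it was already lower); the text only says the result is a lower plateau "or possibly worse". Growth towards a plateau is left out of the sketch.

```python
import bisect

# Hypothetical plateau levels on the trust side of the continuum.
PLATEAUS = [0.2, 0.5, 0.8]


def drop_after_violation(trust: float,
                         plateaus: list[float] = PLATEAUS) -> float:
    """Return the trust level after a violation.

    Trust falls to the highest plateau strictly below the current value.
    Assumption: with no plateau below, trust falls to 0.0 (and is never
    raised by a violation).
    """
    # bisect_left counts the plateaus that are strictly below `trust`.
    below = bisect.bisect_left(plateaus, trust)
    if below > 0:
        return plateaus[below - 1]
    return min(trust, 0.0)
```

So a relationship sitting at 0.65 falls back to 0.5 rather than being destroyed, one sitting exactly on the 0.5 plateau falls to 0.2, and one at 0.1 has nothing left to land on.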

As an aside, I’m not claiming that trust actually does behave like this, but I am saying that it may well, and there are various explorations around it that bear it out. However, it is really useful when describing what trust might look like in computational systems, which was really the point. That it in some way clarifies trust in humans is a nice thing to have at this point.

Forgiveness

In Figure 4.12 you see the word forgiveness, and you see that it is not guaranteed. In Figure 4.11 you don’t see it, but you can probably figure out where it might happen, if it does. Indeed, that forgiveness is not guaranteed is the case. In Marsh & Briggs (2008) we examined how this all might work in a computational context.

Forgiveness is interesting not just because it repairs trust; forgiveness is interesting because it is a fascinating way to deal with problems you face all the time online, like cheap pseudonyms. What are they, you ask? Well, in 2000, Eric Friedman and Paul Resnick (Friedman & Resnick, 2000) brought up this problem: in a system where you could choose a pseudonym (basically a fake name) for yourself you could join, do wonderful things, then do a really bad thing and leave only to join as someone else, with no reputational harm, as it were. For instance, you could be “john1234” on eBay, sell a lot of little things for not much money, build up a nice reputation, and then slowly get more and more expensive so as not to raise eyebrows, and finally charge a big amount for something before heading off into the sunset of anonymity with a fat wallet. A couple of seconds later you could rejoin as “tim1234” and no-one would be any the wiser, so you could do it all over again. Sure, there are some safeguards in place for this kind of behaviour but it’s actually a pretty easy attack on reputation systems. It’s basically a sort of whitewashing. We’ll get to attacks (and defences) in a later chapter.
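The cheap pseudonym problem is easy to reproduce with a toy reputation system. Everything here is a deliberately naive sketch: the class, its methods and the score changes are hypothetical and do not describe how eBay or any real system works. The only point is that a fresh pseudonym starts with a clean score.

```python
class ReputationSystem:
    """A toy reputation store keyed by pseudonym (illustrative only)."""

    def __init__(self, newcomer_score: float = 0.0) -> None:
        self.newcomer_score = newcomer_score
        self.scores: dict[str, float] = {}

    def join(self, pseudonym: str) -> None:
        # Every new pseudonym starts from the same clean slate.
        self.scores[pseudonym] = self.newcomer_score

    def record(self, pseudonym: str, change: float) -> None:
        self.scores[pseudonym] += change

    def reputation(self, pseudonym: str) -> float:
        return self.scores[pseudonym]


def whitewash_demo() -> tuple[float, float]:
    """Build a reputation, cheat once, then rejoin under a new name."""
    system = ReputationSystem()
    system.join("john1234")
    for _ in range(10):
        system.record("john1234", +0.05)   # ten small honest sales
    system.record("john1234", -1.0)        # one big cheat
    system.join("tim1234")                 # same person, new pseudonym
    return system.reputation("john1234"), system.reputation("tim1234")
```

After the cheat, "john1234" has a negative score, but "tim1234" is back at the newcomer score, so walking away costs the cheater nothing.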

So, why does forgiveness help? If we accept that people are fallible (and computers too), then we automatically accept the risk that they may make mistakes, and sometimes even try to cheat us. Hang on, this bit is where some mental gymnastics is required[9]. We can also accept that they don’t need to dodge away and create a new pseudonym if forgiven. Think about it this way: if someone screws up, on purpose or by accident, they don’t need to try to hide it if forgiveness is something that might happen. More to the point, things like forgiveness allow relationships like that to continue in the face of uncertainty over who is who (as it were: better the devil you know).

This is, it must be added, a rather dysfunctional view of a human relationship: forgiveness isn’t a good thing if it means that the forgiver stays in an abusive situation. However, that’s not what I’m advocating here for various reasons, and with various safeguards it can be handled pretty well. Generally speaking though, forgiveness in these environments is better applied when mistakes happen, rather than deliberate betrayals.

In systems where humans collaborate across networks, there is a lot of research about how trust builds and is lost, as well as how things like forgiveness help. For instance, Natasha Dwyer’s work examines trust empowerment, where the potential truster gets to think about what is important to them and is supported by the systems they use to be able to get this information. This is opposed to Trust Enforcement, where reputation systems tell you that you can trust someone or something because their algorithm says so. We’ll come back to things like Trust Empowerment when we look at where trust can help people online later in the book.

Forgiveness and apology in human online social systems was extensively studied by Vasalou, Hopfensitz & Pitt (2008). It goes something like this: in one experiment, after a transgression and subsequent apology, a trustee was forgiven (it’s a one step function). That works pretty well when the truster is human actually because if we bring in Trust Empowerment, the forgiveness step is based on information that the truster has in order to let it happen. I hasten to add that this is a very short explanation of something very important and that there’s an entire section of the Pioneers chapter devoted to Jeremy Pitt and colleagues’ work in this book.

In the case of computational systems, there’s a lot of grey in between the grim sadness of distrust and the “ahh wonderful” of trust, especially where forgiveness might be concerned. So we decided on an approach that took into account time. You will have noticed by now that our graphs using the continuum have stealthily introduced the concept of time to this discussion. So, we have it to use, and as Data once remarked, for an android, 0.68 seconds “is nearly an eternity” (Star Trek: First Contact).

In the model we are examining here, forgiveness is something that can happen pretty much after a length of time that is determined by the ‘badness’ of a transgression, the forgiving nature of the truster and (cf Vasalou et al, 2008) if an apology or expression of regret was forthcoming.

Moreover, in the model, the rate of forgiveness is something that is also determined by the same three considerations. For more information on how this all works in formulae, look here.

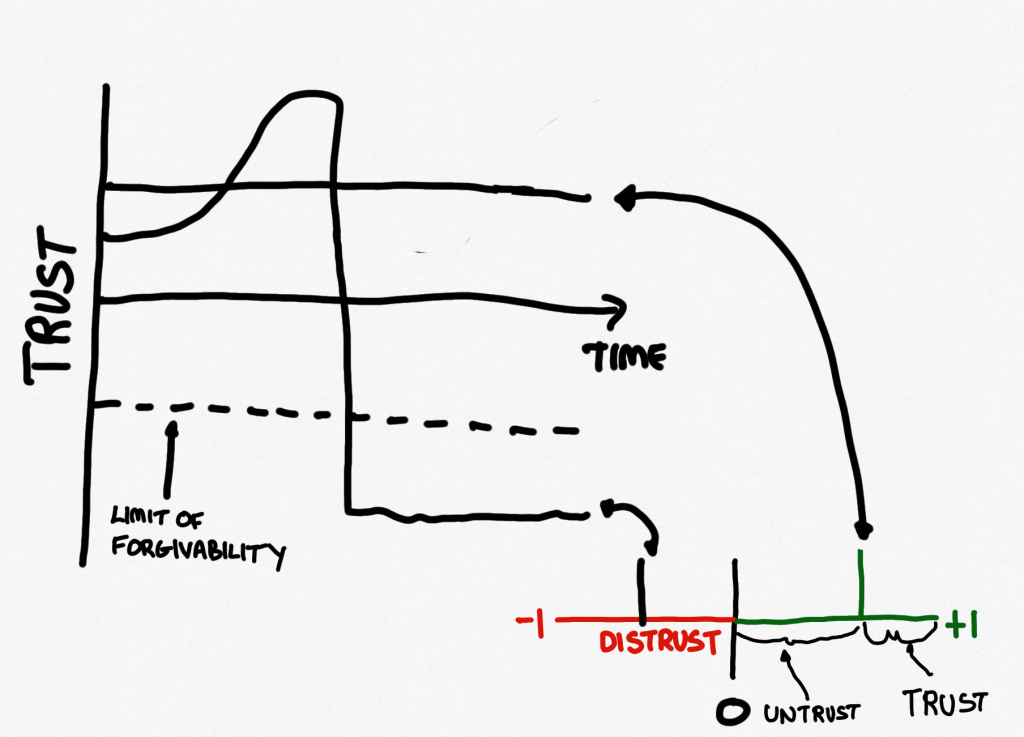

Naturally, we can show that on our ‘continuum-time’ graph, as you can see in Figure 4.13.

If you take a look at that you can see that the trust goes slowly up, above the Cooperation Threshold (that blue line) and then something bad happens which makes the truster lose trust (the amount of the loss is related to the ‘badness’ of what happened, as before). Over time (related to the things I mentioned before) this is allowed to begin rebuilding, the rate of which is dependent on the same ‘forgiveness’ variables. Neat, isn’t it. Naturally it’s hard to show on a one dimensional continuum, so we won’t.
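A sketch of that shape in code follows. To be clear about what is assumed: the text only says that the waiting time and the rebuilding rate both depend on the ‘badness’ of the transgression, the forgiving nature of the truster, and whether there was an apology. The particular formulae, constants and names below are invented for illustration and are not the formulae from Marsh & Briggs (2008).

```python
def forgiveness_delay(badness: float, forgivingness: float,
                      apologised: bool, base_delay: float = 10.0) -> float:
    """Time to wait before trust may start rebuilding.

    badness and forgivingness are assumed to lie in [0, 1]. Worse
    transgressions and less forgiving trusters wait longer; an apology
    halves the wait (an arbitrary illustrative choice).
    """
    delay = base_delay * badness * (1.0 - forgivingness)
    return delay / 2 if apologised else delay


def forgiveness_rate(badness: float, forgivingness: float,
                     apologised: bool, base_rate: float = 0.05) -> float:
    """Trust regained per unit of time once rebuilding has started."""
    rate = base_rate * forgivingness * (1.0 - badness)
    return rate * 2 if apologised else rate


def trust_after(trust_after_drop: float, elapsed: float, badness: float,
                forgivingness: float, apologised: bool,
                ceiling: float = 1.0) -> float:
    """Trust level `elapsed` time units after the transgression."""
    delay = forgiveness_delay(badness, forgivingness, apologised)
    if elapsed <= delay:
        return trust_after_drop  # still waiting: nothing rebuilds yet
    rate = forgiveness_rate(badness, forgivingness, apologised)
    return min(trust_after_drop + rate * (elapsed - delay), ceiling)
```

With `badness=0.5` and `forgivingness=0.5` and no apology, the wait is 2.5 time units and the rate is 0.0125 per unit; with an apology the wait halves and the rate doubles.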

But there is something we can show

Ever been in a relationship when something so egregious happens that the thought of forgiveness is not even possible? Almost certainly. Lucky, if not. In either case, it’s probably fair to say that there are situations like that.

The same can happen for our continuum of computational trust too. In such an instance, the drop in trust is too large for the system to think about forgiveness. Recall that this drop is related to the ‘badness’ of what happened and it begins to make sense. Figure 4.14 shows what that looks like. Take a look at it.

You will see two horizontal lines, one above the x-axis of time, which is our regular Cooperation Threshold (which you can also see in Figure 4.13). The second (dashed) is below the x-axis, so it’s in ‘Distrust’ territory, although it really doesn’t have to be. This is the place where the straw breaks the camel’s back, as it were: it’s the limit at which the truster walks away with no possibility of forgiveness – and as you can see from the graph, the level of trust just doesn’t change afterwards.

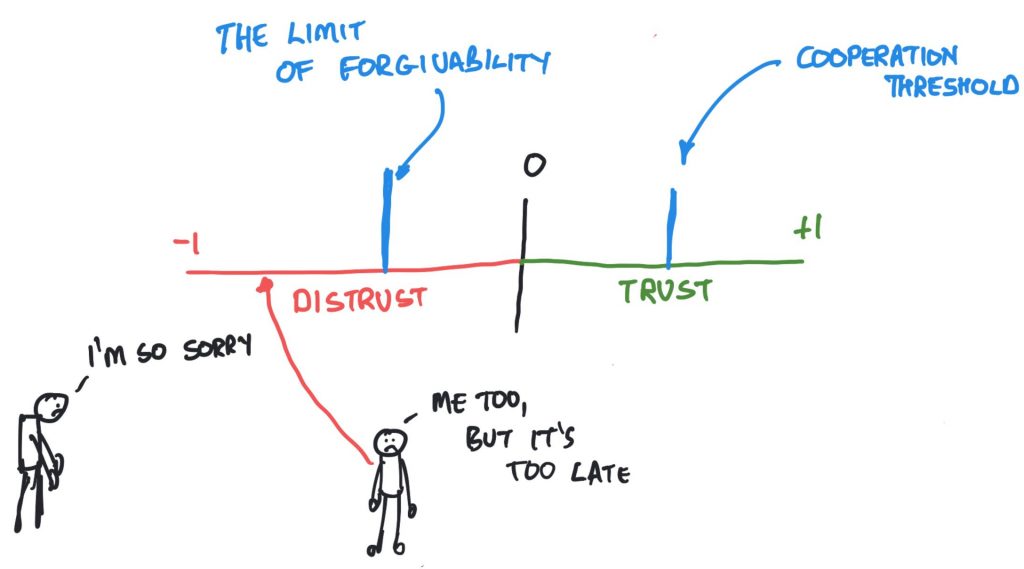

We call that dashed line the limit of forgivability.

It’s an important line, and for our model its value is dependent on a couple of things – the agent (or person) being considered (which is something we will come to) and how forgiving the truster is (yes, that too).
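In code, the limit of forgivability is just one more threshold to check before any rebuilding is allowed. This is a sketch: the function name, the constant rebuilding rate and the choice that landing exactly on the limit counts as unforgivable are all assumptions, and how the limit itself is set for a given agent and truster is left open here.

```python
def trajectory(trust_after_drop: float, limit_of_forgivability: float,
               rate: float, steps: int) -> list[float]:
    """Trust over `steps` time steps following a transgression.

    If the drop lands at or below the limit of forgivability, trust
    stays exactly where it fell. Otherwise it rebuilds at a constant
    `rate` per step (capped at +1).
    """
    forgivable = trust_after_drop > limit_of_forgivability
    history = [trust_after_drop]
    for _ in range(steps):
        current = history[-1]
        history.append(min(current + rate, 1.0) if forgivable else current)
    return history
```

A drop to -0.3 with the limit at -0.5 starts climbing again, whilst a drop to -0.7 with the same limit gives a flat line: the truster has walked away.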

Phew.

Let’s have a look at that on the continuum. You’ll see it in Figure 4.15, which is one of the saddest drawings in the whole book, and for good reason.

I mentioned regret and apology earlier. You can’t see them on the continuum (although you can probably see both in Figure 4.15). However, they exist, and they are a really nice segue to the next chapter, which takes what we have here and puts mathematics around it. Don’t worry, it’s not complicated mathematics. Why not? There are several reasons, and in the next chapter you’ll find out.

I think this wraps it up for our continuum. If you look back at the start of the chapter it was really about ‘can we see trust?’

The answer is yes. Just look around you.

“The reward of a thing well done is to have done it.”

President Mikhail Gorbachev quoting Ralph Waldo Emerson at the INF Treaty signing, December 8th 1987.

[1] It's been pointed out to me that this is a reference that others may find a bit confusing. What is a d100? Well in role playing (you know, like Dungeons and Dragons... Where were you when they were screening Stranger Things?) we sometimes need a percentage, like in chance, for the probability that something happens. We can roll two dice, one which has faces of 00 to 90, and one which has 0 to 9 faces. Put them together (like a 40 and a 5) and you get 45. Simple. Except this has its own stupid debates too, by the way. Some people have too much time on their hands, or want to lecture too much. As an aside, if you're a computer scientist, starting at zero makes perfect sense.

[2] I’m an Old Hancastrian as it happens, from one of the oldest schools in England.

[3] It's actually true. It is also an example you will see overworked in another chapter...

[4] You could say I'm untrustworthy if I wanted to try to do it, anyway. If I don't ask you to trust me to do it, am I still untrustworthy?

[5] To paraphrase Sting.

[6] There may be some debate as to whether machines ever get to this stage, but we’ll leave that for another chapter.

[7] And identified for future work, which this kind of is, but there's more.

[8] For a little more on this, also see here.

[9] Because I made it up and even I sometimes ask "what?!"