Working with Data

10 Supporting Reproducible Research with Active Data Curation

Sandra Sawchuk; Louise Gillis; and Lachlan MacLeod

Learning Outcomes

By the end of this chapter you should be able to:

- Understand the role of active data curation within the broader domain of Research Data Management.

- Identify key features of active data management tools, such as versioning, scripting, software containers, and virtual machines.

- Assess an example of a reproducible dataset in a software container.

Introduction

This chapter will focus on the interoperable and reusable aspects of the FAIR model (Findable, Accessible, Interoperable, Reusable), which was introduced in chapter 2, “The FAIR Principles and Research Data Management,” providing you with the confidence and skills to engage in active data curation.

Active data curation during ongoing research creates data that are FAIR: Findable, Accessible, Interoperable, and Reusable (Johnston, Carlson, Hudson-Vitale, et al., 2017; Wilkinson et al., 2016). The term active describes curatorial practices that happen during the data collection, analysis, and dissemination stages of research. Data curation involves managing research data that has been selected or is required to be deposited for long-term storage and preservation (Krier & Strasser, 2014). Conventionally, curation is tackled toward the end of a project, often after the analysis is complete. Excellent resources, like the “Dataverse Curation Guide” and the Data Curation Network’s CURATED workflow, provide invaluable guidance on curating once the project has ended its active phase (Cooper et al., 2021; Johnston, Carlson, Kozlowski, et al., 2017). There is value in working on curation as the project is happening. Doing so catches errors before they become catastrophic and gives data a better chance of being well described and contextualized (Sawchuk & Khair, 2021).

This chapter will provide guidance on the tools and techniques that facilitate the curation of research data during the active phases of research. Like Cooper et al. (2021), we know that capacity to provide curation support varies across Canadian institutions, and that the role of libraries is often to provide education and awareness of best practices. The actual day-to-day management of the research and its associated data is the responsibility of the researchers who conduct the work.

We discuss strategies for implementing good data management practices, with a focus on activities that help improve data interoperability and reproducibility. We also consider best practices for the curation of research data, including tools for communication and collaboration. While the tools covered in this chapter are primarily used to support computational research, the reproducibility principles we describe will have applications in all disciplines.

Platforms

Choosing a data storage platform isn’t exactly curatorial. However, the implications of choosing one storage platform over another do have important curatorial consequences.

Storage options are covered more fully in chapter 5, “Research Data Sharing and Reuse in Canada,” but here is a brief review. Your platform choices fit into three categories:

- Local storage is either built into or connects directly to your device, and includes hard drives, and USB jump drives.

- Network attached storage (NAS) systems connect devices within a local network. Examples include departmental, faculty, and university servers.

- Cloud storage is internet-based and provided through a third party. Examples include Dropbox, Google Drive, OSF, and OneDrive.

Table 1 outlines advantages and disadvantages of each of these main platform types. There are use-cases for each, but all else considered, cloud platforms do offer compelling curatorial features.

| Advantages | Disadvantages | |

|

Local

|

|

|

|

Network

|

|

|

|

Cloud

|

|

|

Guidelines for Data Storage

- If appropriate, consider using a cloud platform and backing up your data on an institutional network. Most cloud storage platforms have automatic versioning features. Automation means less work for you and less opportunity for human error. Important files can be copied to institutional networks, which are backed up regularly, further guarding against data loss that could occur on local drives.

Every time you edit in a cloud environment, a new version of your file is saved along with information about the file’s provenance:

- who made the edit

- when the edit was made

- what those edits were

- Choose an institutionally supported solution. By choosing an institutionally supported solution, you’ll also have access to local tech support, training, and the reassurance that comes with knowing it’s been evaluated. Choosing a well-supported solution is a good way to increase the probability that your data will be accessible and usable in the long term. In the Canadian context, this might mean using Microsoft Office 365, which many universities support.

- Use an electronic lab notebook (ELN) or project management tool. ELNs are online tools built off the design and use of paper lab notebooks. At their most basic, they provide space to record research protocols, observations, notes, and other project-related data. Their electronic format supports good data management, bypassing issues of poor handwriting and data loss due to physical damage. ELNs also provide data security and allow collaboration. This can be especially helpful if you are working in the private sector, or in situations where team members come from multiple institutions. You might look beyond institutional solutions to collaborative tools like the Open Science Framework (OSF), which is free to use, open source, and provides file provenance detail. It can be used as a collaborative data-sharing space, or as an ELN.

Data Security

Address anticipated risks in your Research Data Management (RDM) plan and take care to ensure the measures you outline are feasible to implement and relative to the risk associated with your data. If you are working with personal health data, for example, you will need to exercise more care than someone working with open source code. Similar considerations must be taken when working with data about marginalized or racialized groups. Your choice of storage platform is also important. Data stored on a portable USB stick is susceptible to loss and damage, while data stored in the cloud is susceptible to hacking, malware, and phishing.

Guidelines for Addressing Data Security

- Avoid using portable drives and local storage.

- Secure your computer and network by installing software updates and antivirus protection, enabling firewalls, and locking your computer and other devices when you are away from them.

- Use strong passwords. Strong passwords are unique and complex (long character strings with a combination of symbols, numbers, lower and upper case letters). Unfortunately, they’re also hard to remember. One solution is to use a password manager, such as KeePassX or 1Password, that stores your usernames and passwords in one place. Change your passwords regularly!

- Encrypt files and disks if you are working with proprietary or sensitive data. You can use Firevault for Macs and BitLocker for Windows.

- If you are working on a cloud platform, use multifactor authentication for file access.

- When transferring data, use encryption. OneDrive is an example of a storage platform that allows you to send and receive encrypted files. Globus file transfer is an option for large files, and many large research institutions use Globus for sensitive research data.

Active Data Curation

Active data curation involves organizing, describing, and managing your research files and documentation. How you organize your files is a personal choice. There is no one way to do it, and a workable solution will be one that makes sense to you and your team. Document your decisions, communicate those decisions to all that are involved, and revisit them regularly. If a strategy no longer works, amend it and move on.

Guidelines for Active Data Curation

- Organizing research files

- Have one key person responsible for ensuring logical organization and naming. This person can perform checks at regular intervals to make sure documentation, file naming, and file paths are consistent. They can also be the primary contact for any research assistants who may have questions about organizational practices or data errors.

- Keep your organizational scheme, file structure, and naming conventions in a single document: on a printout next to your work computer or in a documentation file with your project work. If they are nearby, they can be used. If they are buried away, they cannot.

- Implement clear workflows to ensure work is not overwritten or undone. “Protect your original data by locking it or making it read-only” (Training Expert Group, 2020) and compressing it. Create separate workspaces for different data workers, with a central coordinator or analyst responsible for joining the disparate pieces together. Another option, if the project and timeline allow, is to have people work on a regular, but not overlapping, schedule. Use a Gantt chart or other models to develop a project timeline and manage duties.

- Organize with economy. Limit the number of folders you use. This makes it easier to find data and helps with processing time for backups and combining or analyzing large datasets.

- Describing files

- Use a consistent naming scheme for all files and create a document that describes the naming scheme. This can prevent errors, save on training time for research assistants, and serve as a basis for your data dictionary (described below). It can be helpful to include abbreviations or acronyms of project names, funders, grant numbers, content type, and so on. Include dates (we recommend YYYY-MM-DD format) and be descriptive but brief. Use camel case (CamelCase) or underscores (under_scores) as delimiters. Computer systems do not always understand spaces and special characters.

- Versioning should be clear and judicious. Not every edit needs a new version number, but substantive changes to files warrant updated version numbers. Use V01, 02, and so on to make your revision history clear and easy to follow, or use an automatic version control system.

- Syntax files are code files with sequences of actions performed by statistical analysis software; they can be generated by the software or coded by the analyst. Perform or record all your actions using a syntax file that lists the actions performed by statistical analysis software. Depending on the specific software you use, syntax files may be called program files, script files, or something similar. Most syntax editors have built-in notation (or commenting) functionality that can help you remember what you did and communicate this process to your co-investigators. Include descriptions of what you have done in syntax files and clean your syntax as you go. This will also be useful if your code is going to be reused for future projects or disseminated on a research data repository.

- If using specialized software for data exploration and analysis, determine if documentation about data file processing is automatically generated and supplement as required. Include as much detail as you would need to recreate your workflow. If you intend to revisit your data later, you’ll appreciate the effort you made!

Create your own file naming scheme. Krista Briney’s Filing Naming Convention Worksheet guides you through the process of creating a meaningful plan.

- Creating codebooks and data dictionaries

A codebook is a document that describes a dataset, including details about its contents and design. A data dictionary is a machine-readable and often machine-actionable document, similar to a codebook, that generally contains detailed information about the technical structure of a dataset in addition to its contents (Buchanan et al 2021); however, the two terms are often used interchangeably. Codebooks may be automatically generated by the statistical software you use, or you may need to create one yourself. It is good practice to develop the codebook as you go so that data will be standardized. Document any recoding or other manipulation of data. Even if the survey software generates the codebook, you will likely need to add more information. Ideally, your codebook will be simple, including variable names and short descriptions. Though, according to the Inter-university Consortium for Political and Social Research (ICPSR, 2023), the information contained in codebooks may differ across projects and domains.You should include the codebook in the methodology section of a study. As a starting point, document any analysis you’ve done as notation in the syntax file for your analysis. A well-notated syntax file can become the basis for a codebook, or even the methods section of a report, thesis, or publication. Methodological descriptions will vary widely by field of study, but some key things can always be included:

- Values and labels for any fields

- Include a description of how null values were addressed during analysis.

- Basic descriptions or distributions of the results

- Omitted or suppressed variables

- Relationships between variables, including survey piping (wording automatically inserted by survey software based on previous responses) or follow-up experiments

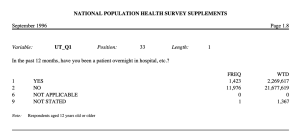

Figure 1 shows an excerpt of a codebook published by Statistics Canada for the National Population Health Survey. In this example, the codebook contains the name of the variable, the survey question and responses, and a note about the age of the respondents. This codebook also includes the position and length of the variable; this information would also be included in the data dictionary.

Going Further

Regardless of the software that you choose to use, good documentation is the key to effective data management and curation. This section will introduce important concepts to consider in the active curation of computational research, including file versioning, scripting, and software containers.

We can take these lessons about active data curation and apply them to the case of computational research. Computers have become so user-friendly that it is easy to overlook their complexity. Researchers can choose from a variety of open source or proprietary software to perform tasks at every stage of their project, from data collection to visualization.

Proprietary software, such as SPSS or Microsoft Excel, is akin to a “black box” where data goes in and data comes out, with little indication of what has happened inside (Morin et al., 2012). Depending on the end-user agreement, it may be disallowed or impossible to inspect the code. Proprietary software is often easier to use than open source software, and it may or may not be free (Singh et al., 2015). Open source software is often free, but it may also be more complex to use (Cox, 2019). This complexity is balanced with the ability to inspect the source code, and depending on the software license, make changes to the program itself (Singh et al., 2015).

Programmatic File Versioning

Active data curation, as discussed earlier in this chapter, involves more than creating straightforward folder hierarchies and using consistent file naming practices. You must also manage the content of the files in a systematic and transparent way, with an eye for reuse. You can accomplish this programmatically with the use of automatic version control features, which are found in many cloud-connected document managers, such as Office365 and Google Docs. The assessment activity at the end of this chapter is hosted on a version control platform known as GitHub, which is commonly used by people who write and develop code.

Version control, or versioning, means keeping track of the changes that are made to a file, no matter how small. When files are saved using automatic version control, both the content and the revisions are automatically recorded, allowing users to return to all previous saved versions of the file (Vuorre & Curley, 2018). Each time you save a file, every single change to the file is recorded, and the file is saved as a new version without the need to rename the file. This allows you to “go back in time” to see how the file was developed, as all the changes in the file will be identified.

Repositories such as Dataverse and Zenodo include version information in their generated citations, which makes it easy for authors and secondary users to identify which version of a dataset or manuscript they have used.

Scripting: For Making Analysis Reproducible and Automating Data Management Processes

Automating research workflows, such as data import, cleaning, and visualization, allows you to execute computational experiments with limited manual intervention. Automation relies on scripts, which are sets of computational routines written with code (Alston & Rick, 2021; Rokem et al., 2017). Scripts should be accompanied by detailed documentation describing each step in the routine so that the provenance of an experiment can be understood. Provenance in computational research shares the same meaning as archival provenance; it is a record of the source, history, and ownership of an artifact, though in this case the artifact is computational.

While automation and provenance-tracking facilitate reproducibility and reuse for researchers and reviewers outside of the project, the biggest beneficiary will always be the original research team (Rokem et al., 2017; Sawchuk & Khair, 2021). Detailed documentation helps identify errors and provides valuable context for training new team members. Automation allows experiments to be run and rerun with minimal effort, which is especially useful when datasets have been amended or updated.

In some cases, automation and provenance can occur in the same place. As we discussed earlier, syntax files include the commands used to manipulate, analyze, and visualize data; these files can be further edited to include descriptive comments about the rationale and the analysis. Syntax files can then be bundled with the data and output files, allowing other users to evaluate and reuse the entire project.

Electronic code notebooks are another tool that incorporates automation and provenance-tracking in one linear document. A code notebook, such as Jupyter Notebook (https://jupyter.org), is an interface that encourages the practice of literate programming, where code, commentary, and output display together in a linear fashion, much like a piece of literature (Hunt & Gagnon-Bartsch, 2021; Kery et al., 2018).

Good documentation is essential for reproducible research, regardless of who might be doing the reusing (Benureau & Rougier, 2018). It is good practice to include descriptive annotations with all computational assets used in a project to provide valuable context throughout all stages of the research lifecycle.

Sharing Code: Electronic Notebooks and Software Containers

Code that works on one computer is not guaranteed to work on another. Differences among hardware, operating systems, installed programs, and administrative privileges create barriers to running or reading the code that has been used to conduct data analysis. Some researchers use proprietary file formats that can only be accessed through purchase or subscription to specific software. In addition, those conducting and managing a research project will likely have varying degrees of coding literacy, which can lead to inconsistencies in documentation and the inclusion of errors (Hunt & Gagnon-Bartsch, 2021). While sharing research data and code to a repository that facilitates versioning is good, you should take concrete steps during the active phase of a research project to encourage reproducibility and reuse.

There are a number of technical solutions that facilitate the sharing of code, which range in complexity on a spectrum from static to dynamic. The static approach to sharing code is to simply upload the raw code to a repository with a well-documented README file and a list of dependencies, or requirements, for the computing environment. The dynamic approach involves packaging the data, code, and dependencies into a self-contained format known as a container (Hunt & Gagnon-Bartsch, 2021; Vuorre & Crump, 2021).

A software container is like a self-contained virtual computer within a computer. Software containers can be hosted on a web service, such as Docker, or a USB stick. They include everything required to run a piece of software (including the operating system), without the need to download and install any programs or data. Containerization facilitates computational reproducibility, which occurs when the computational aspects of a research project can be independently replicated by a third party (Benureau & Rougier, 2018). For a project to be truly reproducible, all research assets — from the data to the code and the analysis — must be included. For this reason, software containers include detailed information about the computing environment used to conduct the research (Hunt & Gagnon-Bartsch, 2021). This includes information about the type of computer and operating system (e.g., Mac OS Monterey v12.3, Windows v11, Linux Ubuntu v21.10); the name and version of any commercial software used in data collection or analysis or, alternatively, the coding language used to create the software; and the names and version numbers of any dependencies that support the software.

A dependency is an additional software library that can be downloaded from the internet and used for specific programmatic tasks. For example, users of the coding language Python can go online and download entire packages of prewritten code that facilitate specialized operations, such as mathematical graphing or text analysis. Dependencies are written and maintained by people outside of the project, which means that versions may be updated frequently or not at all. Some dependencies have a large user base and come with a lot of documentation, while others don’t. It is up to the researcher to verify that the code does what it says it will, and that there are no errors or bugs that will impact the data or the resulting analysis (Cox, 2019). It’s essential that you carefully document dependencies (and their versions) in a project for reproducible research, as even small changes between versions can break the code, or worse, output incorrect results.

One of the most common ways to write code for software containers is through the use of an electronic code notebook. Containerizing a code notebook allows users to analyze and alter the code to test the output and the analyses. End-users can experiment with the code without worrying about breaking it or making irreversible changes, and they do not have to worry about security issues related to software installations.

Conclusion

The active curation of research data leads to better research, as good curation saves time and reduces the potential for errors. Using standard workflows, organizing and labelling research assets in a consistent way, and providing thorough documentation facilitates reuse for the primary research team and for secondary users. Standardization enhances discovery for data in repositories, which allows for the inclusion of datasets in systematic reviews and meta-analyses, ultimately increasing citation counts and the profile of the research team.

While the suggestions in this chapter are considered best practices, the best RDM is any management at all. Each project will come with its own unique challenges, but attention to active data curation will ensure that the documentation is sufficient for data deposit and discovery.

Reflective Questions

See Appendix 3 for a set of exercises.

Key Takeaways

- Active data curation helps researchers ensure their data is accurate, reliable, and accessible to those who need it. Research data that is properly managed and maintained remains useful and accessible over time.

- Data management practices, such as versioning and scripting, help to improve data accuracy and security. Automating the description, organization, and storage of research data saves time and prevents errors.

- Tools that enable reproducible computation and analysis, such as electronic lab notebooks and software containers, provide opportunities for research to replicated and verified. By making data and analysis methods openly available, researchers can demonstrate the rigour and reliability of their research and allow others to scrutinize their work.

Reference List

Alston, J. M., & Rick, J. A. (2021). A beginner’s guide to conducting reproducible research. The Bulletin of the Ecological Society of America, 102(2), 1–14. https://doi.org/10.1002/bes2.1801

Arguillas, F., Christian, T.-M., Gooch, M., Honeyman, T., Peer, L., & CURE-FAIR WG. (2022). 10 things for curating reproducible and FAIR research (1.1). Zenodo. https://doi.org/10.15497/RDA00074

Benureau, F. C. Y., & Rougier, N. P. (2018). Re-run, repeat, reproduce, reuse, replicate: Transforming code into scientific contributions. Frontiers in Neuroinformatics, 11. https://doi.org/10/ggb79t

Buchanan, E. M., Crain, S. E., Cunningham, A. L., Johnson, H., Stash, H. R., Papadatou-Pastou, M., Isager, P. I., Carlsson, R., & Aczel, B. (2021). Getting started creating data dictionaries: How to create a shareable data set. Advances in Methods and Practices in Psychological Science, 4(1), 1-10. https://doi.org/10.1177/2515245920928007

Cooper, A., Steeleworthy, M., Paquette-Bigras, È., Clary, E., MacPherson, E., Gillis, L., & Brodeur, J. (2021). Creating guidance for Canadian Dataverse curators: Portage Network’s Dataverse curation guide. Journal of EScience Librarianship, 10(3), 1-26. https://doi.org/10/gmgks4

Cox, R. (2019). Surviving software dependencies: Software reuse is finally here but comes with risks. ACMQueue, 17(2), 24-47. https://doi.org/10.1145/3329781.3344149

Hunt, G. J., & Gagnon-Bartsch, J. A. (2021). A review of containerization for interactive and reproducible analysis. ArXiv Preprint ArXiv:2103.16004.

ICPSR Institute for Social Research. (2023). Glossary of social science terms. National Addiction and HIV Data Archive Program. https://www.icpsr.umich.edu/web/NAHDAP/cms/2042

Johnston, L., Carlson, J., Hudson-Vitale, C., Imker, H., Kozlowski, W., Olendorf, R., & Stewart, C. (2017). Data Curation Network: A cross-institutional staffing model for curating research data. https://conservancy.umn.edu/bitstream/handle/11299/188654/DataCurationNetworkModelReport_July2017_V1.pdf

Johnston, L., Carlson, J. R., Kozlowski, W., Imker, H., Olendorf, R., & Hudson-Vitale, C. (2017). Checklist of DCN CURATE steps. IASSIST & DCN – Data Curation Workshop.

Kery, M. B., Radensky, M., Arya, M., John, B. E., & Myers, B. A. (2018). The story in the notebook: Exploratory data science using a literate programming tool. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, 1–11.

Krier, L., & Strasser, C. A. (2014). Data management for libraries: A LITA guide. American Library Association.

Morin, A., Urban, J., Adams, P. D., Foster, I., Sali, A., Baker, D., & Sliz, P. (2012). Shining light into black boxes. Science, 336(6078), 159–160. https://doi.org/10/m5t

Possati, L. M. (2020). Towards a hermeneutic definition of software. Humanities and Social Sciences Communications, 7(1), 1–11. https://doi.org/10.1057/s41599-020-00565-0

Rokem, A., Marwick, B., & Staneva, V. (2017). Assessing reproducibility. In J. Kitzes, D. Turek, & F. Deniz (Eds.), The practice of reproducible research: Case studies and lessons from the data-intensive sciences. University of California Press. http://www.practicereproducibleresearch.org/core-chapters/2-assessment.html#

Sawchuk, S. L., & Khair, S. (2021). Computational reproducibility: A practical framework for data curators. Journal of EScience Librarianship, 10(3), 1-16. https://doi.org/10/gmgkth

Singh, A., Bansal, R., & Jha, N. (2015). Open source software vs proprietary software. International Journal of Computer Applications, 114(18), 26-31. https://doi.org/10/gh4jxn

Statistics Canada. (1996). Codebook A – National Population Health Survey (NPHS)—1994-1995—Supplements. https://www.statcan.gc.ca/eng/statistical-programs/document/3225_DLI_D2_T22_V1-eng.pdf

Training Expert Group. (2020, August 25). Brief Guide – Research Data Management. Zenodo. https://doi.org/10.5281/zenodo.4000989

Vuorre, M., & Crump, M. J. C. (2021). Sharing and organizing research products as R packages. Behavior Research Methods, 53(2), 792–802. https://doi.org/10/gg9w4c

Vuorre, M., & Curley, J. P. (2018). Curating research assets: A tutorial on the Git Version Control System. Advances in Methods and Practices in Psychological Science, 1(2), 219–236. https://doi.org/10/gdj7ch

Wilkinson, M. D., Dumontier, M., Aalbersberg, Ij. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J.-W., da Silva Santos, L. B., Bourne, P. E., Bouwman, J., Brookes, A. J., Clark, T., Crosas, M., Dillo, I., Dumon, O., Edmunds, S., Evelo, C. T., Finkers, R., Gonzalez-Beltran, A., … Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3. https://doi.org/10/bdd4

interoperability requires that data and metadata use formalized, accessible, and widely used formats. For example, when saving tabular data, it is recommended to use a .csv file over a proprietary file such as .xlsx (Excel). A .csv file can be opened and read by more programs than an .xlsx file.

online tools built off the design and use of paper lab notebooks

when software is open source, users are permitted to inspect, use, modify, improve, and redistribute the underlying code. Many programmers use the MIT License when publishing their code, which includes the requirement that all subsequent iterations of the software include the MIT license as well.

a term that describes all the activities that researchers perform to structure, organize, and maintain research data before, during, and after the research process.

a computer program that stores passwords. Some password managers also create and suggest complex passwords for use.

data which cannot be shared without potentially violating the trust of or risking harm to an individual, entity, or community.

multi-factor authentication requires two things: a password and a device. When you use your password to sign into a service, your login prompts a request for a one-time code generated by a device such as a cellphone or a computer. One-time codes may be delivered by text message or email, or they may be generated on your device via an authentication app like Google Authenticator. Many banks and government organizations, such as Canada Revenue Agency, now require users to enable two-factor authentication.

writing text with no spaces or punctuation while using capital letters to distinguish between words.

also known as version control, this means keeping track of the changes that are made to a file, no matter how small. This is usually done using an automated Version Control System, such as GitHub. Many file storage services, such as Dropbox, OneDrive, and Google Drive, keep historic versions of a file every time it is saved. These versions can be accessed by browsing the file's history.

a document that describes a dataset, including details about its contents and design.

a machine-readable and often machine-actionable document, similar to a codebook, that generally contains detailed information about the technical structure of a dataset in addition to its contents.

wording automatically inserted by survey software based on previous responses.

research that relies on computers for data creation and/or analysis.

a system for automatically tracking every change to a document or file, allowing users to revert to all previously saved versions without needing to continually save copies under different file names.

a record of the source, history, and ownership of an artifact, though in this case the artifact is computational.

where code, commentary, and output display together in a linear fashion, much like a piece of literature.

reproducible research is research that can be repeated by researchers who were not part of the original research team using the original data and getting the same results.

learning computer code has been compared to learning a new language. Coding literacy is the ability to comprehend computer code, much like mathematical literacy is the ability to comprehend math.

a plain text file that includes detailed information about datasets or code files. These files help users understand what is required to use and interpret the files, which means they are unique to each individual project. Cornell University has a detailed guide to writing README files that includes downloadable templates (Research Data Management Service Group, n.d.).

like a self-contained virtual computer within a computer. It includes everything required to run a piece of software (including the operating system), without the need to download and install any programs or data.

an additional software library that can be downloaded from the internet and used for specific programmatic tasks.