What to Do About Assessment?

So What Can Educators Do?

The first step is to ensure that your assessments are aligned with your GenAI use policy. Note: your policy does not have to be the same for all activities and assessments, but you do need to be explicit about how students can use various tools for the different parts of activities and assessments.

Once you have an idea of how you would like students to accomplish the task (for example, with a GenAI rough draft, in a group, in class, or as an oral exam), write up detailed instructions for the assessment.

Then test your assessment using the latest LLM-based tools. Input the assignment instructions into ChatGPT (and Copilot and Gemini) and evaluate each tool’s response: how would you grade the output? Did the tool “earn” a passing grade on this assessment? If so, you need to alter the assessment in some way.

In April 2023, Graham Clay posted a challenge on his blog, AutomatED: Teaching Better with Tech, titled “Believe Your Assignment is AI-Immune? Let’s Put it to the Test.” He asked professors to submit their current assessments, which his team would then try to complete, with a passing grade, in one hour or less using AI tools. About this challenge, Clay and Lee write that the results “…do not imply the demise of written take-home assignments. Instead, it narrows the scope of viable written take-home assignments, like a track that used to have eight lanes but now has four” (Clay & Lee, 2023). This assessment design challenge is especially useful because in it Clay debunks some of the techniques and approaches that instructors believe make their assignments more AI-immune.

AI-Immunity Challenge: Lessons from a Clinical Research Exam

What we learned from using AI to try to crack an exam’s iterated questions, verboten content, and field-specific standards.

Why We Failed to “Plagiarize” an Economics Project with AI

What we learned trying to crack a project’s reliance on lengthy novels, journal articles, and field-specific standards.

What We Learned from an AI-Driven Assignment on Plato

Our task was to train an AI character to emulate Thrasymachus. We struggled and so did two students.

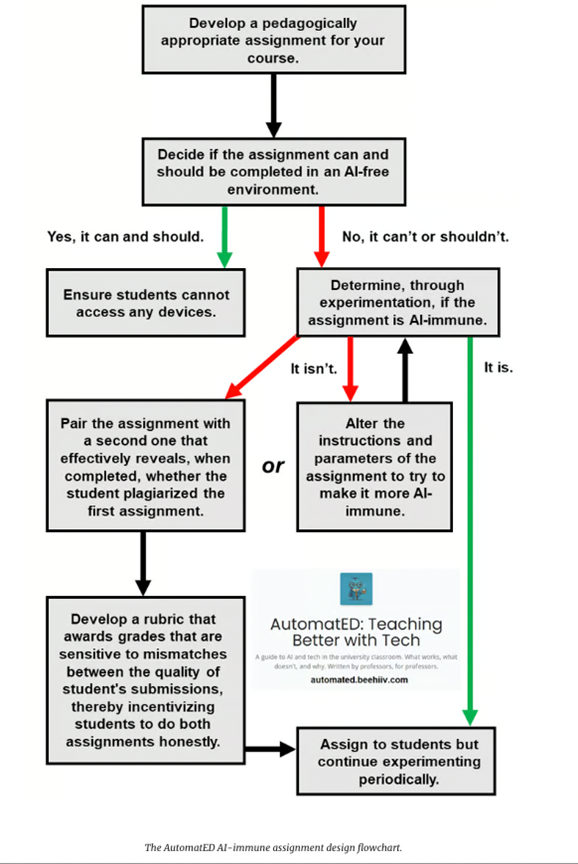

Clay proposes a flowchart to help instructors evaluate their assessments in the age of GenAI:

Assignment Design Process

According to these suggestions (and to a similar decision tree from the University of Michigan, Course and Assignment (Re-)Design), there are three major approaches to take. The first applies if the assessment must absolutely be done without the use of AI: the instructor must ensure that students have no access to any devices that could help them. This likely means an in-person, invigilated exam (or supervised in-class work). It also means that students with accessibility needs will have their accommodations scrutinized (for example, students who write with computers rather than by hand), that test anxiety will increase, and that the hard-won progress away from rigid, inauthentic written and timed final exams will be lost.

The next approach is to make the assessment AI-immune (or “ChatGPT-proof”). Clay’s assessment design challenge showed that this is, in fact, quite difficult. Some of the examples above were innovative and took a lot of work, but it is unclear whether they even achieved the intended learning outcomes. An instructor may be hard-pressed to come up with a single, high-stakes, summative assignment that is ChatGPT-proof. And what about all the other assessment that needs to be done throughout the course?

The answer may lie in Clay’s final recommended approach: pairing assessments. Clay suggests making all assignments two-part: the first part can be completed at home, but the second must be in-person/in-class (or synchronous and live, in an online course). The second part (a quick oral or written exam, a class presentation, an in-class peer review, a pair discussion with a write-up handed in, etc.) serves essentially as insurance that the student understands the material, and it is weighted more heavily than the first part. The educator should explain the approach to students: it is in their interest to participate fully in creating the first part of the assignment, because doing so prepares them for the second part, which is worth more. This discussion of in-person, synchronous assessments segues nicely into our next section.

Media Attributions

- This image was created using DALL·E
