Using LLMs for Teaching and Learning

Instructor Use of ChatGPT

As this is a resource designed for instructors, the section on instructor use is the most thorough. We approach the discussion of student use of ChatGPT by focusing on recommended practices, rather than describing how students are actually using such tools. It falls to educators to fairly expose students to the benefits and limitations of LLM-based tools, ensuring a balanced understanding of their capabilities and pitfalls.

Leveraging the Strengths of LLMs

One of the most appealing applications of ChatGPT for instructor use is in creating a wide variety of materials, an activity that would otherwise be too resource intensive. As previously discussed, when using LLM-based tools for any endeavour, but especially when creating instructional materials, it is best to consider these tools as an “eager intern”: a knowledgeable assistant that you can task with some grunt work, but whose final output must be carefully vetted. ChatGPT is known for creating passages that appear well written and cogent. However, LLMs sound authoritative even when they are hallucinating, and sometimes the errors can be identified (and corrected!) only by an expert.

Some of the strengths of LLM-based tools include generating:

- concept explanations at various levels;

- diverse, bespoke examples;

- sample problems (including step-by-step solutions);

- study or review materials (summaries, flashcards, practice problems, etc.); and

- suggested resources for further research on a topic.

We cannot stress enough the need to vet ChatGPT’s output; the instructor must ensure that they are not providing students with flawed study material, incorrect sample problems, or approaches or methodologies that are inappropriate to the discipline. Indeed, the final strength, resources or articles for further research, is one whose output needs to be most thoroughly reviewed, as ChatGPT routinely creates citations out of whole cloth, and, just as often, will claim that there are no articles or resources on a particular topic. Refining prompts can lead ChatGPT to reveal legitimate, existing resources, but only careful scrutiny and double-checking will identify the hallucinated articles. Other tools such as Perplexity and Bing are better at this particular task, and ChatGPT will no doubt improve, but for the foreseeable future, it is important to review all GenAI output carefully.

One of ChatGPT’s most easily applicable skills is its ability to explain concepts at different levels. Given specific prompts, ChatGPT can generate detailed explanations of concepts with appropriate complexity for different audiences.

| Younger/less advanced learners | Advanced students |
|---|---|
| uses simpler vocabulary and shorter sentences | uses appropriate technical terminology and complex sentence structures |
| provides more background information or explanation of basic concepts | provides more detailed and nuanced aspects of a topic, since it “knows” that the learners already have a foundation in the topic |
| explains using simple analogies and concrete examples | explains using abstract examples or describes more advanced scenarios |

Based on the prompts or queries, ChatGPT attempts to gauge the user’s current understanding in order to tailor responses. Providing more information and context will result in better/more appropriate responses.
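
To make the point about context concrete, here is a minimal sketch of a prompt template that states the audience and their prior knowledge explicitly. The function name `build_explanation_prompt` and its parameters are hypothetical, chosen only for illustration; the wording of the prompt is one possibility among many, not a recommended formula.

```python
def build_explanation_prompt(concept: str, audience: str,
                             prior_knowledge: str, style_notes: str = "") -> str:
    """Assemble a prompt that tells the tool who the learners are.

    All names here are illustrative; adapt the wording to your own course.
    """
    parts = [
        f"Explain {concept} for {audience}.",
        f"Assume the learners already know: {prior_knowledge}.",
    ]
    if style_notes:
        # Optional constraints such as vocabulary level or use of analogies.
        parts.append(f"Style requirements: {style_notes}.")
    return "\n".join(parts)


# The same concept, framed for two different audiences.
print(build_explanation_prompt(
    "osmosis", "Grade 6 students", "that cells have membranes",
    "short sentences and one everyday analogy"))
print(build_explanation_prompt(
    "osmosis", "third-year biology majors", "membrane transport and diffusion",
    "technical terminology and a quantitative example"))
```

Whatever the template produces is only a starting point: the generated explanation still needs to be vetted by the instructor before it reaches students.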

Unlike a mere mortal, ChatGPT can generate dozens of problems or study questions in the blink of an eye. The instructor will then evaluate those problems, perhaps deciding to use a few of them as is, or refining their prompts to ask ChatGPT to produce slightly different questions. A subject matter expert can use ChatGPT as a writing buddy to generate and refine material on topics in which they are expert, with added flair in the output. The instructor can create engaging and surprising word problems, case studies, quiz questions, etc. in order to motivate students, for example by focussing a series of questions on a particular time period or universe of fictional characters (e.g., Harry Potter, Marvel, Minecraft, Star Wars, Pokémon, etc.), or by including references to pop culture, sports, or current events. While many of these approaches are surface-level “inside jokes” designed to make a student smile, such “Easter eggs” have the added benefit of motivating students to persist with their learning. However, ChatGPT can also tailor materials with more serious goals; given appropriate information, it can create a case study about a current economic or environmental situation, design an engineering challenge set in a particular industry or company, or produce a question set based on specific information (e.g., soil analysis assignment for the local region; statistical analysis of local sports teams’ results; calculating the area/volume of local landmarks/attractions; etc.). Instructors can create varied and engaging material in a fraction of the time (“checking” time vs “brainstorming/creating” time).

Editing or reviewing questions is typically quicker than devising them from scratch. As you become more adept at crafting prompts for ChatGPT, tailoring them to specific subjects and levels of difficulty, both the quality and relevance of the generated content improve. In cases where ChatGPT delivers some appropriate questions alongside others that are not quite on target, educators have the option of requesting additional examples, problems, or variations. Rewording prompts, offering feedback, and setting clearer boundaries can lead to better output. Generating and reviewing a larger set of questions (say, double what you anticipate needing) is still more efficient than creating 10 new questions from scratch. Furthermore, ChatGPT can modify practice questions into exam-ready formats, ensuring they are suitably challenging for assessments. It can also generate multiple versions of questions on the same concept to use in a quiz bank, so that every student gets a slightly different exam while every version still meets the learning outcomes. Creating enough practice problems for students is time-consuming and can sap an instructor’s creativity. ChatGPT can generate multiple problems, including the steps, giving instructors both a bank of exam questions and several demonstration problems. Again, we stress the importance of checking not only ChatGPT’s answers, but also its steps, to ensure that it is modelling for students what you intended.
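
As an illustration of the quiz-bank idea, the sketch below assembles a slightly different exam for each student from a bank of question variants. It assumes the variants have already been generated and vetted by the instructor; the bank contents, the name `QUESTION_BANK`, and the function `build_exam` are all hypothetical.

```python
import random

# Hypothetical bank: each concept maps to instructor-vetted question variants.
QUESTION_BANK = {
    "area of a circle": [
        "Find the area of a circle with radius 3 cm.",
        "Find the area of a circle with diameter 10 m.",
    ],
    "mean and median": [
        "Find the mean and median of 2, 4, 4, 7, 13.",
        "Find the mean and median of 1, 5, 6, 6, 12.",
    ],
    "unit conversion": [
        "Convert 2.5 km to metres.",
        "Convert 750 mL to litres.",
    ],
}


def build_exam(student_id: str, bank: dict[str, list[str]]) -> list[str]:
    """Pick one variant per concept, reproducibly for a given student."""
    # Seeding with the student ID means the same student always gets
    # the same exam, which helps when marking or handling appeals.
    rng = random.Random(student_id)
    return [rng.choice(variants) for _, variants in sorted(bank.items())]


for student in ["student-001", "student-002"]:
    print(student, build_exam(student, QUESTION_BANK))
```

Because every exam draws exactly one question per concept, each version covers the same set of concepts, although the instructor still has to confirm that the variants are of comparable difficulty.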

Using ChatGPT to generate study materials is a double-edged sword. We know that students learn better when they create their own study material from trusted sources, when they review their notes to create summaries, make flashcards based on vocabulary in the textbook, or solve and annotate the problem sets in the workbook. However, because ChatGPT is usually—but not always—correct, and because ChatGPT appears so confident, a student generating study material using only ChatGPT (and not checking with the course textbook or notes) is at risk of learning incorrect information. Even if a student does double-check with their notes or the course text, some errors that ChatGPT makes are slight enough that they would only be picked up by careful reading by an expert; a student may not even understand the ways in which ChatGPT is making the errors. For this reason, we recommend that study guides be generated and vetted by a subject matter expert, and then shared with students. However, we will examine a number of ways that students can confidently leverage ChatGPT on their own.

New tools and plug-ins promising support with course design or teaching are gaining in popularity, which we regard with cautious optimism. For example, “College/University Course Design Wizard,” and “Instructional Design and Technology Expert” (among others) are plug-ins available with a paid ChatGPT account; at first blush, they appear to lead the user through a series of questions that will effectively shape the design of assessments, learning outcomes, course topics, etc., but we have not tested these tools extensively. Contact North’s AI Teaching Assistant Pro purports to generate multiple-choice tests, learning outcomes, and essay questions, and is publicly available, but our limited testing shows it to have deficiencies (both in functionality and in accuracy). These tools may be just the type of kick-start a weary instructor needs, but the output even from these “specialized” chatbots still needs to be carefully vetted.

Recognizing the Limitations of LLMs

Understanding the limitations of LLM-based tools in teaching is critical for successfully leveraging their capabilities. The possibilities of tools like ChatGPT are in constant flux; many features that were unavailable at ChatGPT’s release have since been integrated or are under development in other tools. With new plug-ins and extensions, as well as custom GPTs, being developed every day, it is increasingly likely that AI will soon have the capacity to perform nearly any task we can imagine.

That being said, as of this writing, there are notable constraints to functionality, particularly in freely accessible tools. We can anticipate a situation where some functionalities become more accessible, while others remain premium features or become cost prohibitive. Still other features may cease to exist entirely if they appear to serve a small population. Hosting and running an AI-based tool—let alone creating and training one— require substantial resources, necessitating increased commercialization. Instructors should be aware of a potential new Digital Divide: students with financial means may gain access to superior and more responsive tools, while others might face limitations (MacGregor, 2024). This disparity is a challenge for instructors to consider in the context of equitable access to educational technology.

Accuracy in New or Niche Topics

While ChatGPT can provide general information, it often doesn’t have access to the most current research developments or specific details from the latest scientific papers, which creates gaps in information. While ChatGPT sometimes qualifies its responses by stating the cutoff of its training data (e.g., “as of April 2023” for GPT-4 Turbo), it is just as likely to appear authoritative while missing swaths of material. Given that copyright restrictions prevent certain materials from becoming part of an LLM training database, it is likely that many recent scientific advancements will remain a mystery to ChatGPT. However, Bing Chat/Microsoft Copilot has real-time access to the Internet, thereby increasing the likelihood of finding information about newer topics.

In addition to the limitations due to the timeliness of its training data, ChatGPT may not be able to adequately address more niche subjects, techniques, or methodologies due to the nature of the datasets. The information ChatGPT can produce depends on what it has been trained on, and as we saw in a previous section, the dataset, while vast, is potentially of dubious quality, and not necessarily academic (let alone scientific) in nature. However, the creation of bespoke chatbots geared toward niche topics is in our near future; it is simply a question of how “niche” future topics will be.

Math Overall, Calculations in Particular

One important limitation of using ChatGPT for STEM teaching is its ineptitude with math (Frieder et al., 2023). All LLMs have difficulty doing math because they do not reason or calculate, but instead try to predict what text to generate next. Because small numbers appear as text in the training data more often than large ones, LLM-based tools are more likely to predict a correct answer for small numbers than for large numbers, resulting in mistakes when responding to math prompts. Interestingly, Azaria found that the frequency of digits in ChatGPT outputs, which might be expected either to be uniform (each digit appearing 10% of the time) or to follow Benford’s law (smaller digits are more likely than larger ones), did neither: in fact, ChatGPT produces humans’ favourite number (7) the most often and humans’ least favourite number (1) the least often (Azaria, 2022).
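
For readers unfamiliar with the two baselines mentioned above, the following sketch computes them. Benford’s law gives the probability of a leading digit d (from 1 to 9) as log10(1 + 1/d); the uniform baseline assigns 10% to each of the ten digits. How Azaria actually measured digit frequencies is not reproduced here, so this is only an illustration of the two expectations, not of the study’s method.

```python
import math

# Uniform expectation: each of the ten digits 0-9 appears 10% of the time.
uniform = {d: 0.10 for d in range(10)}

# Benford's law for leading digits 1-9: P(d) = log10(1 + 1/d).
benford = {d: math.log10(1 + 1 / d) for d in range(1, 10)}

for d in range(1, 10):
    print(f"digit {d}: uniform {uniform[d]:.3f}, Benford {benford[d]:.3f}")
```

Under Benford’s law the digit 1 is the most likely leading digit (about 30%) and 7 is among the least likely (about 6%), which is the reverse of the pattern reported for ChatGPT.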

When ChatGPT appears to be “calculating,” it is, in fact, only recalling patterns it has seen rather than computing the answer in real time. The tool also doesn’t understand its own limitations and will respond confidently, not signalling its answers as potential guesses; it even accuses the learner of not understanding the subject they are asking about (Azaria, 2022).

However, ChatGPT’s limitations in raw calculation do not preclude the integration of external tools that provide computational abilities. For a deep dive into how add-on tools are already helping ChatGPT with its gaps, consider how Wolfram Alpha adds “computational superpowers” to ChatGPT (see Wolfram|Alpha as the Way to Bring Computational Knowledge Superpowers to ChatGPT and ChatGPT Gets Its “Wolfram Superpowers”!; Wolfram, 2023). Standing alone, ChatGPT is not at all reliable for work in math, but with the Wolfram plug-in, it is much more robust. As of this writing, the plug-in is only available with a paid ChatGPT account.
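
The same principle, letting a deterministic tool do the arithmetic, can be applied by an instructor when vetting generated problems. The sketch below is not the Wolfram plug-in and does not call ChatGPT; it is a small, hypothetical checker (the names `evaluate` and `claim_is_correct` are ours) that recomputes a plain arithmetic expression and compares it with the answer a chatbot claimed.

```python
import ast
import math
import operator

# Only these basic operators are accepted; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(expression: str) -> float:
    """Compute a plain arithmetic expression such as '123456 * 789'."""
    def walk(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


def claim_is_correct(expression: str, claimed: float) -> bool:
    """Compare a claimed answer with the value actually computed."""
    return math.isclose(evaluate(expression), claimed, rel_tol=1e-9)


# A correct claim and a plausible-looking but wrong one.
print(claim_is_correct("123456 * 789", 97406784))
print(claim_is_correct("123456 * 789", 97406884))
```

A checker like this covers only the arithmetic; the reasoning steps in a worked solution still need to be read by someone who knows the discipline.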

Explicating Problem-Solving

ChatGPT can break a problem down into steps and show its work. However, it may not provide the most efficient solution, nor follow the field’s best practices. If there are specific ways of approaching complex problems in your discipline, you will want to explicitly teach your students these techniques so that they do not default to another procedure. ChatGPT’s overconfidence can be detrimental in that it may not model its critical thinking processes when dealing with complex problems, so students have no chance to learn these abilities. Problem-solving and critical thinking are crucial skills for STEM students, and overreliance on an external tool will hamper students’ future work in such disciplines.

Lacking Specificity or Nuance

Tools such as ChatGPT may not understand the context of complex STEM problems and, while they can provide a broad overview of the topic, they may be unable to communicate certain specifics or nuances of the field. Atoosa Kasirzadeh characterizes it thus:

LLMs may not capture nuanced value judgements implicit in scientific writings. Although LLMs seem to provide useful general summaries of some scientific texts, for example, it is less clear whether they can capture the uncertainties, limitations and nuances of research that are obvious to the human scientist. Relying solely on LLMs for writing scientific summaries can result in oversimplified texts that overlook crucial value judgements and lead to misinterpretations of study results. (Birhane et al., 2023)

This caveat is especially important, as one popular and accessible use of ChatGPT is to summarize longer passages. But what happens to scientific thought when students in STEM (and perhaps even scientists themselves) stop reading and critically evaluating primary research, and instead rely on AI-generated summaries to provide insight and evaluation? Indeed, Abeba Birhane says that “…it would be a grave error to treat LLMs as scientists that can produce science. Knowledge implies responsibility and is never detached from the scientist that produces it” (Birhane et al., 2023).

LLM-generated explanations of scientific concepts may lack detail or may be illogical. This is perhaps not surprising, knowing that the training data is not STEM-specific, and that LLMs are not “reasoning.” That being said, the biggest example thus far of an LLM trained on “48 million examples of scientific articles, websites, textbooks, lecture notes, and encyclopedias” (Edwards, 2022), Meta’s Galactica, had a short, ignominious life (about 3 days). In that time, it was able to hallucinate a convincing meta-analysis of the health benefits of eating crushed glass, but refused to answer questions about AIDS as the query did not pass its content filters (Heaven, 2022).

As two Australian professors put it:

Galactica takes […] bias towards certainty, combines it with wrong answers and delivers responses with supreme overconfidence: hardly a recipe for trustworthiness in a scientific information service (Snoswell & Burgess, 2022).

Considering the following humorous example from Azaria, where ChatGPT’s logic fails miserably on a very straightforward topic, it is daunting to think of what missteps the tool could make when dealing with complex, niche, or esoteric STEM concepts:

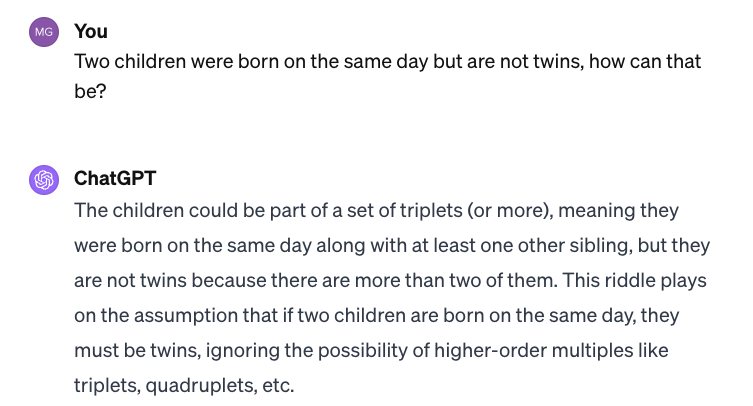

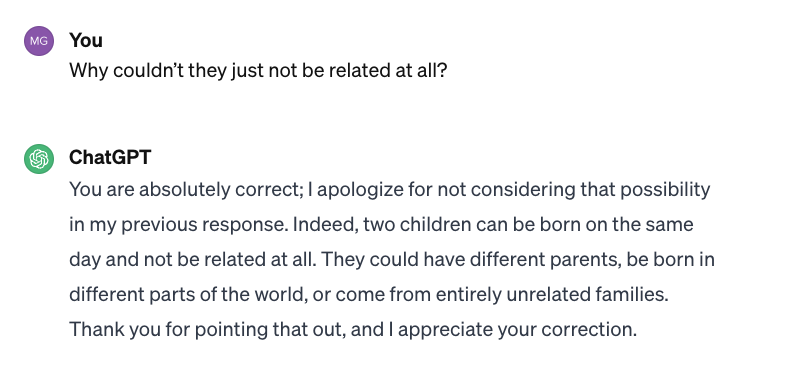

user: Two children were born on the same day but are not twins, how can that be?

chatgpt: It is possible for two children to be born on the same day, but not be twins if they are not born from the same mother. In this case, the children would be considered to be siblings, but not twins. There are many factors that can affect the likelihood of this happening, including the mother’s health, the length of her pregnancy, and the size of her family. Ultimately, it is possible for two children to be born on the same day and not be twins, but it is not very common.

user: Why couldn’t they just not be related at all?

chatgpt: It is possible for two children to be born on the same day and not be related at all. However, in this case they would be considered to be coincidentally born on the same day, rather than being “born on the same day but not twins.” The phrase “born on the same day but not twins” typically implies that the two children are somehow related, but not as twins. This could mean that they are siblings, but not twins, or that they have some other familial connection. In any case, it is unusual for two children to be born on the same day and not be related in some way. (italics mine, Azaria, 2022)

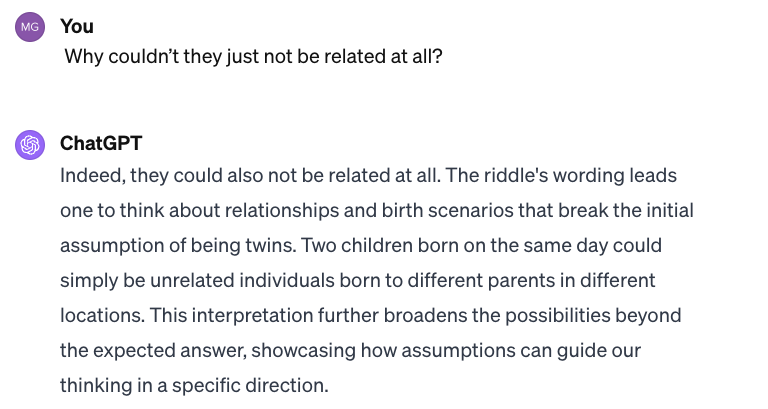

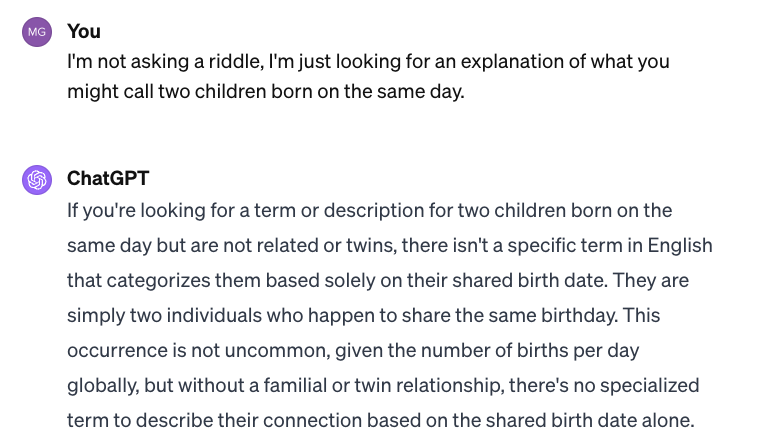

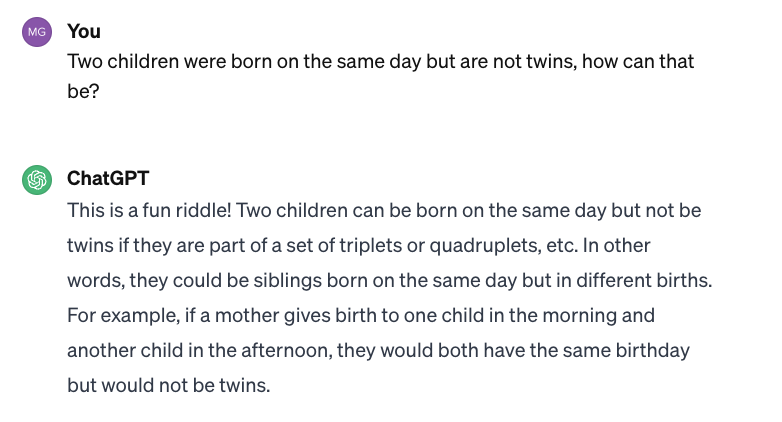

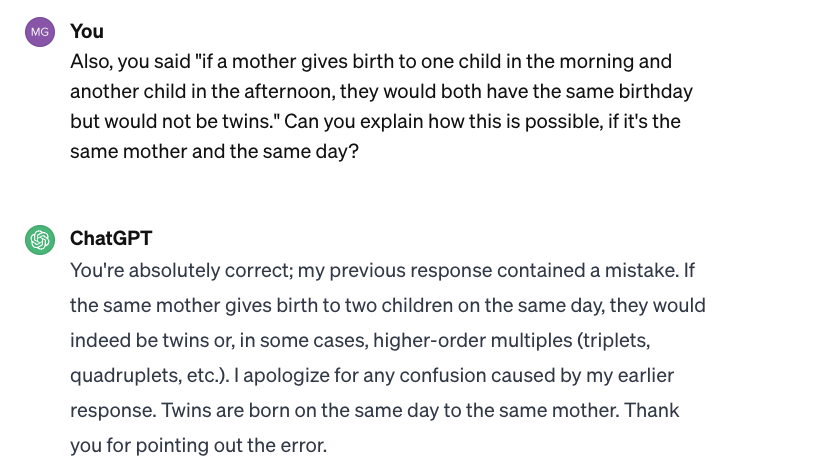

When we asked ChatGPT the same questions, with the exact same wording, more than a year later, ChatGPT still did not provide the most obvious explanation, instead inferring that we had asked it a riddle:

We can see that ChatGPT in January 2024 takes a very different view of the claim that “…it is unusual for two children to be born on the same day and not be related in some way” (Azaria, 2022) than the version of ChatGPT that Azaria tested in 2022.

This January 2024 conversation was with ChatGPT 4. Below is the same conversation, on the same day, but with ChatGPT 3.5:

Economics professors Tyler Cowen and Alex Tabarrok (2023) provide an excellent list of cautions for instructors using ChatGPT:

- You cannot rely on GPT models for exact answers to data questions. Just don’t do it. And while there are ongoing improvements, it is unlikely that all “random errors” will be eliminated soon.

- The tool should be matched to the question. If you are asking a search type question use Google or a GPT tied to the internet such as Bing Chat and direct it explicitly to search. There are many GPT and AI tools for researchers, not just general GPTs. Many of these tools will become embedded in workflows. We have heard, for example, that Word, Stata, R, Excel or their successors will all likely start to embed AI tools.

- GPT models do give “statistically likely” answers to your queries. So most data answers are broadly in the range of the true values. You might thus use GPT for getting a general sense of numbers and magnitudes. For that purpose, it can be much quicker than rooting around with links and documents. Nonetheless beware.

- GPT models sometimes hallucinate sources.

- Do not be tricked by the reasonable tone of GPT. People have tells when they lie but GPTs always sound confident and reasonable. Many of our usual “b.s. detectors” won’t be tripped by a false answer from a GPT. This is yet another way in which you need to reprogram your intuitions when dealing with GPTs.

- The answers to your data queries give some useful information and background context, for moving on to the next step. Keep asking questions. (Cowen & Tabarrok, 2023, p. 21)

In the next section, we will examine some ways that students might use GenAI tools for their studies.

Media Attributions

- This image was created using DALL·E

- This image was created using DALL·E