A Brief History of Machine Learning and LLMs

1980s – 1990s

The Second Wave of AI and the Second AI Winter

During the 1980s, progress continued on neural network-based AI models. IBM developed new statistical models infused with nascent machine learning capabilities. These systems could now make decisions not through rigid rules, but by discerning probabilistic patterns within vast datasets. Interestingly, because the first application of IBM’s models was translating French to English, the datasets used were the official (bilingual) records (Hansards) of the 36th Canadian Parliament (Collins, n.d.).

Meanwhile, John Hopfield made strides in understanding the computational nature of memory. In his 1982 paper, Hopfield described a type of recurrent neural network (RNN), modelled on biological systems but applicable to engineered ones, that could learn and recall complex patterns.
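To make "learn and recall" concrete, here is a minimal sketch of a Hopfield-style network. It assumes the common textbook formulation (units valued +1 or -1, a Hebbian storage rule, one-unit-at-a-time updates) rather than the exact notation of the 1982 paper, and the function names and the two stored patterns are hypothetical, chosen only for illustration.

```python
def train(patterns):
    """Build a Hebbian weight matrix from patterns of +1/-1 values."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Update units one at a time until the state stops changing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            total = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if total >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two illustrative (hypothetical) patterns to memorize.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)

# The first pattern with its first unit flipped, standing in for a noisy cue.
noisy = [-1, 1, 1, 1, -1, -1, -1, -1]
recovered = recall(weights, noisy)
print(recovered == stored[0])
```

In this toy case the network settles back onto the first stored pattern from the corrupted cue, which is the sense in which such a network "remembers": the stored patterns act as stable states that nearby inputs fall into.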

And in 1997, Sepp Hochreiter and Jürgen Schmidhuber introduced long short-term memory (LSTM) networks. These networks, a refinement of RNNs, outperformed their predecessors and addressed a persistent weakness in them: the inability to handle long-range dependencies in sequential data such as language.

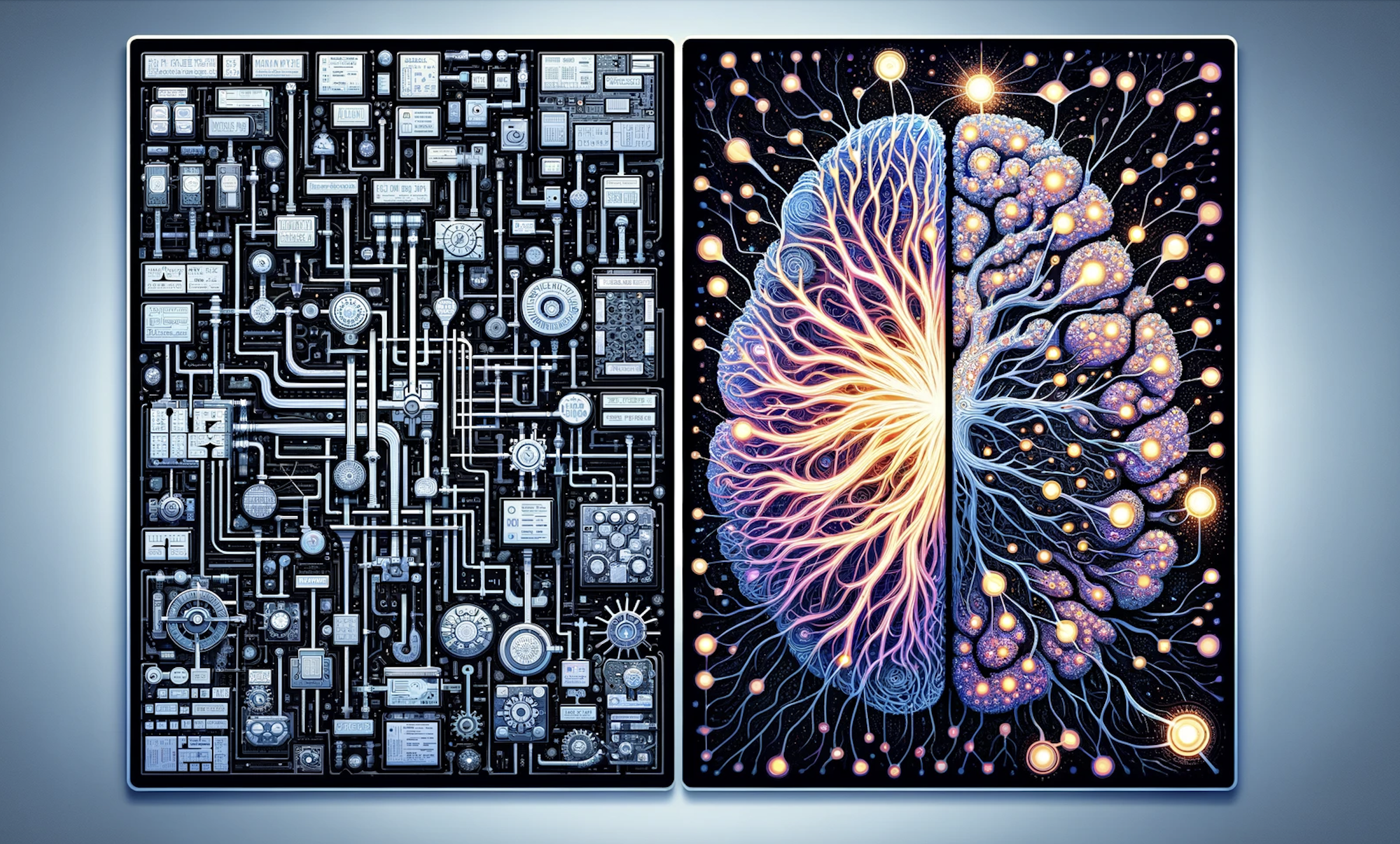

But during this same period, a new model of AI began to gain prominence. Around the start of the first AI winter, Edward Feigenbaum had introduced the first expert system, DENDRAL. Expert systems took a different approach from previous models, in both goals and architecture. Rather than attempting to mimic (simplified) biological brain function and dynamic learning using neural networks, expert systems sought to understand and mimic static expertise. To do this, they applied a system of heuristic rules (expert thinking) to a highly detailed and specialized knowledge base (expert knowledge). These models were not trying to learn or grow; they were trying to provide specialized information and decisions from a fixed set of knowledge, in a repeatable way. To put it another way, an expert system was like a meticulously crafted algorithm or flowchart, guiding a machine step by step through a process to an output. A neural network was like a brain that could learn through trial, error, and reinforcement, often without being able to “retrace its steps” from input to output the way an expert system could.
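The rules-plus-knowledge design described above can be sketched in a few lines. This is a toy illustration only, not how DENDRAL or any real expert system was implemented: the car-diagnosis rules, the fact names, and the `run_expert_system` function are all hypothetical. It uses simple forward chaining, where rules fire whenever their conditions are already known, and it records every rule that fires so the path from input to output can be retraced.

```python
def run_expert_system(facts, rules):
    """Forward-chain over the rules until no new facts appear.

    Returns the final set of known facts and a trace of fired rules.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for name, conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                trace.append((name, tuple(conditions), conclusion))
                changed = True
    return known, trace


# Hypothetical heuristic rules: (rule name, conditions, conclusion).
RULES = [
    ("R1", ["engine_cranks", "engine_does_not_start"], "suspect_fuel_or_spark"),
    ("R2", ["suspect_fuel_or_spark", "fuel_gauge_empty"], "diagnosis_out_of_fuel"),
    ("R3", ["engine_does_not_crank", "lights_dim"], "diagnosis_flat_battery"),
]

# Hypothetical observations supplied by the user.
facts = ["engine_cranks", "engine_does_not_start", "fuel_gauge_empty"]

known, trace = run_expert_system(facts, RULES)
for name, conditions, conclusion in trace:
    print(f"{name}: {' and '.join(conditions)} -> {conclusion}")
```

The same facts always produce the same conclusions and the same trace, which reflects the repeatability and explainability the text attributes to expert systems. The sketch also hints at their cost: every rule has to be written by hand by someone with the relevant expertise.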

As Joshua Lederberg (Feigenbaum’s collaborator on DENDRAL) would later reflect:

“…we were trying to invent AI, and in the process discovered an expert system. This shift of paradigm, ‘that Knowledge IS Power’ was explicated in our 1971 paper, and has been the banner of the knowledge-based-system movement within AI research from that moment.” (p. 12, Lederberg, 1987)

Expert systems were an important shift of focus for AI research and were able to show success in specialized areas of expertise. This success began to draw research attention and a resurgence of funding from agencies like DARPA and government programs like the Strategic Computing Initiative.

Expert systems did, however, have several drawbacks. LISP, the prevalent high-level programming language used to create them, was resource intensive, and as more ambitious models were developed, dedicated hardware was designed to run LISP efficiently. A small industry grew up around the design and manufacture of dedicated workstations and minicomputers known as LISP Machines. In addition, as the models became more ambitious, creating the knowledge bases and heuristic rulesets became more and more labour intensive, and the labour required was highly specialized. Nor were all researchers in the field convinced that expert systems were the best way forward. Notably, they came under criticism from John McCarthy, a pioneer in the field of AI and one of the conveners of the Dartmouth Summer Research Project discussed earlier. In his 1984 paper “Some Expert Systems Need Common Sense”, he argued that expert systems would never have enough common-sense knowledge or common-sense reasoning ability to be suitable for many applications.

These practical drawbacks and criticisms eventually left policy makers disillusioned with the promise of expert systems to solve broader problems. As an example of this rising skepticism, the US Military’s 1986 SDI Large-Scale System Technology Study evaluated expert systems as part of the Strategic Defense Initiative (SDI, colloquially known as the “Star Wars” anti-ballistic missile defense system), and gave this (by now familiar) commentary:

“Finally, AI researchers must guard against an excess of technical hubris induced by self-generated hype. AI researchers have identified a number of exceedingly difficult problems that form the basis of the field. In most cases, relatively minute inroads have been made in the solution of these problems. For example, AI Systems can represent and draw conclusions in relatively simple situations and solve relatively simple problems. The promise offered by those inroads (in, say, expert systems) has been so great as to distort completely, in many cases, the perspective that ought to be maintained. As a result AI has taken on magical attributes, and the expectations of customers are exoatmospheric. It is critical to the orderly advancement of the field to maintain realistic expectations; that is, that the potential contribution of AI to solving difficult, complex problems is quite high, but significant effort remains before the potential will come to fruition.” (p. 6-10, System Development Corporation, 1986)

Such overhyped promises and exaggerated capabilities led to disappointment once again, causing a reduction of funding in some cases, and a reallocation of funding to neural network-based models in others. At the same time, rapid advances in the performance of microcomputers, and their widespread availability, began to seriously call into question the need for expensive specialized hardware like the LISP Machines. The rapid decline of the industry manufacturing them further reduced the capital and talent available to the field.

Together, these developments led to another period of slowed progress, reduced funding, and lowered interest in and expectations of AI, which came to be known as the “Second AI Winter”.

Media Attributions

- This image was created using DALL·E