6.3 Measures of Central Tendency and Spread

Learning Objectives

By the end of this section, you will be able to:

- Explain why central tendency and dispersion are important.

- Calculate the mean, median and mode for a set of data and explain what these measures represent.

- Identify the types of variables that the mean, median, and mode are most appropriate.

- Describe skewness and how it affects the mean.

- Define the term outlier and its impact on central tendency and dispersion.

- Calculate variance, standard deviation, range, inter-quartile range.

Now that we have visualized our data to understand its shape, we can begin with numerical analyses. The descriptive statistics presented in this chapter serve to describe the distribution of our data objectively and mathematically—our first step into statistical analysis! The topics here will serve as the basis for everything we do in the rest of the course.

What Is Central Tendency?

What is central tendency, and why do we want to know the central tendency of a group of scores? Let us first try to answer these questions intuitively. Then we will proceed to a more formal discussion.

Imagine this situation: You are in a class with just four other students, and the five of you took a 5-point pop quiz: Today your instructor is walking around the room, handing back the quizzes. She stops at your desk and hands you your paper. Written in bold black ink on the front is “[latex]\frac{3}{5}[/latex].” How do you react? Are you happy with your score of [latex]3[/latex] or disappointed? How do you decide? You might calculate your percentage correct, realize it is [latex]60\%[/latex], and be appalled. But it is more likely that when deciding how to react to your performance, you will want additional information. What additional information would you like?

If you are like most students, you will immediately ask your classmates, “What’d ya get?” and then ask the instructor, “How did the class do?” In other words, the additional information you want is how your quiz score compares to other students’ scores. You therefore understand the importance of comparing your score to the class distribution of scores. Should your score of [latex]3[/latex] turn out to be among the higher scores, then you’ll be pleased after all. On the other hand, if [latex]3[/latex] is among the lower scores in the class, you won’t be quite so happy.

This idea of comparing individual scores to a distribution of scores is fundamental to statistics. So let’s explore it further, using the same example (the pop quiz you took with your four classmates). Three possible outcomes are shown in Table 6.3.1. They are labelled “Dataset A,” “Dataset B,” and “Dataset C.” Which of the three datasets would make you happiest? In other words, in comparing your score with your fellow students’ scores, in which dataset would your score of [latex]3[/latex] be the most impressive?

|

Student |

Dataset A |

Dataset B |

Dataset C |

|---|---|---|---|

|

You |

[latex]3[/latex] |

[latex]3[/latex] |

[latex]3[/latex] |

|

Ahmed |

[latex]3[/latex] |

[latex]4[/latex] |

[latex]2[/latex] |

|

Rosa |

[latex]3[/latex] |

[latex]4[/latex] |

[latex]2[/latex] |

|

Tamika |

[latex]3[/latex] |

[latex]4[/latex] |

[latex]2[/latex] |

|

Luther |

[latex]3[/latex] |

[latex]5[/latex] |

[latex]1[/latex] |

In Dataset A, everyone’s score is [latex]3[/latex]. This puts your score at the exact centre of the distribution. You can draw satisfaction from the fact that you did as well as everyone else. But of course it cuts both ways: everyone else did just as well as you.

Now consider the possibility that the scores are described as in Dataset B. This is a depressing outcome even though your score is no different than the one in Dataset A. The problem is that the other four students had higher grades, putting yours below the centre of the distribution.

Finally, let’s look at Dataset C. This is more like it! All of your classmates score lower than you, so your score is above the centre of the distribution.

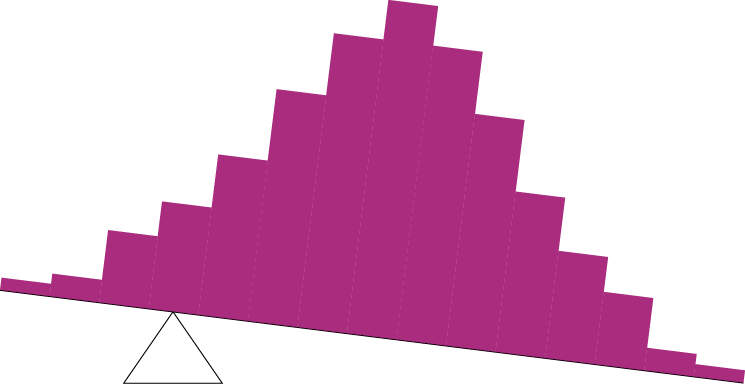

Now let’s change the example in order to develop more insight into the centre of a distribution. Figure 6.3.1 shows the results of an experiment on memory for chess positions. Subjects were shown a chess position and then asked to reconstruct it on an empty chess board. The number of pieces correctly placed was recorded. This was repeated for two more chess positions. The scores represent the total number of chess pieces correctly placed for the three chess positions. The maximum possible score was [latex]89[/latex].

Two groups are compared. On the left are people who don’t play chess. On the right are people who play a great deal (tournament players). It is clear that the location of the centre of the distribution for the non-players is much lower than the centre of the distribution for the tournament players.

We’re sure you get the idea now about the centre of a distribution. It is time to move beyond intuition. We need a formal definition of the centre of a distribution. In fact, we’ll offer you three definitions! This is not just generosity on our part. There turn out to be (at least) three different ways of thinking about the centre of a distribution, all of them useful in various contexts. In the remainder of this section we attempt to communicate the idea behind each concept. In the succeeding sections we will give statistical measures for these concepts of central tendency.

Definitions of Centre

Now we explain the three measures of central tendency: (1) the point on which a distribution will balance, (2) the value whose average absolute deviation from all the other values is minimized, and (3) the value whose squared deviation from all the other values is minimized.

Balance Scale

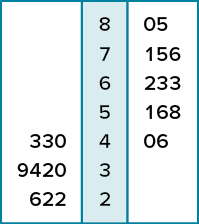

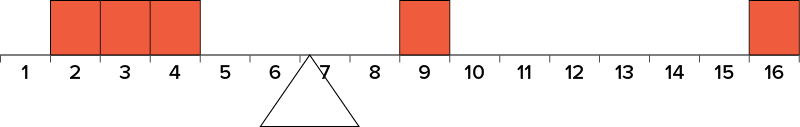

One definition of central tendency is the point at which the distribution is in balance. Figure 6.3.2 shows the distribution of the five numbers [latex]2, 3, 4, 9, 16[/latex] placed upon a balance scale. If each number weighs one pound, and is placed at its position along the number line, then it would be possible to balance them by placing a fulcrum at a particular point.

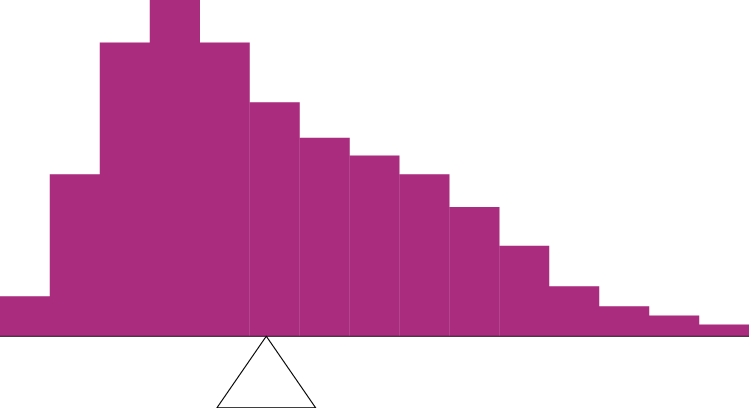

For another example, consider the distribution shown in Figure 6.3.3. It is balanced by placing the fulcrum in the geometric middle.

Figure 6.3.4 illustrates that the same distribution can’t be balanced by placing the fulcrum to the left of centre.

Figure 6.3.5 shows an asymmetric distribution. To balance it, we cannot put the fulcrum halfway between the lowest and highest values (as we did in Figure 6.3.3). Placing the fulcrum at the “half way” point would cause it to tip towards the left.

Smallest Absolute Deviation

Another way to define the centre of a distribution is based on the concept of the sum of the absolute deviations (differences). Consider the distribution made up of the five numbers [latex]2, 3, 4, 9, 16[/latex]. Let’s see how far the distribution is from [latex]10[/latex] (picking a number arbitrarily). Table 6.3.2 shows the sum of the absolute deviations of these numbers from the number [latex]10[/latex].

|

Values |

Absolute Deviations from [latex]10[/latex] |

|---|---|

|

[latex]2[/latex] |

[latex]8[/latex] |

|

[latex]3[/latex] |

[latex]7[/latex] |

|

[latex]4[/latex] |

[latex]6[/latex] |

|

[latex]9[/latex] |

[latex]1[/latex] |

|

[latex]16[/latex] |

[latex]6[/latex] |

|

Sum |

[latex]28[/latex] |

The first row of the table shows that the absolute value of the difference between [latex]2[/latex] and [latex]10[/latex] is [latex]8[/latex]; the second row shows that the absolute difference between [latex]3[/latex] and [latex]10[/latex] is [latex]7[/latex], and similarly for the other rows. When we add up the five absolute deviations, we get [latex]28[/latex]. So, the sum of the absolute deviations from [latex]10[/latex] is [latex]28[/latex]. Likewise, the sum of the absolute deviations from [latex]5[/latex] equals [latex]3+2+1+4+11=21[/latex]. So, the sum of the absolute deviations from [latex]5[/latex] is smaller than the sum of the absolute deviations from [latex]10[/latex]. In this sense, [latex]5[/latex] is closer, overall, to the other numbers than is [latex]10[/latex].

We are now in a position to define a second measure of central tendency, this time in terms of absolute deviations. Specifically, according to our second definition, the centre of a distribution is the number for which the sum of the absolute deviations is smallest. As we just saw, the sum of the absolute deviations from [latex]10[/latex] is [latex]28[/latex] and the sum of the absolute deviations from [latex]5[/latex] is [latex]21[/latex]. Is there a value for which the sum of the absolute deviations is even smaller than [latex]21[/latex]? Yes. For these data, there is a value for which the sum of absolute deviations is only [latex]20[/latex]. See if you can find it.

Smallest Squared Deviation

We shall discuss one more way to define the centre of a distribution. It is based on the concept of the sum of squared deviations (differences). Again, consider the distribution of the five numbers [latex]2,3,4,9,16[/latex]. Table 6.3.3 shows the sum of the squared deviations of these numbers from the number [latex]10[/latex].

| Values | Squared Deviations from [latex]10[/latex] |

| [latex]2[/latex] | [latex]64[/latex] |

| [latex]3[/latex] | [latex]49[/latex] |

| [latex]4[/latex] | [latex]36[/latex] |

| [latex]9[/latex] | [latex]1[/latex] |

| [latex]16[/latex] | [latex]36[/latex] |

| Sum | [latex]186[/latex] |

The first row in the table shows that the squared value of the difference between [latex]2[/latex] and [latex]10[/latex] is [latex]64[/latex]; the second row shows that the squared difference between [latex]3[/latex] and [latex]10[/latex] is [latex]49[/latex], and so forth. When we add up all these squared deviations, we get [latex]186[/latex].

Changing the target from [latex]10[/latex] to [latex]5[/latex], we calculate the sum of the squared deviations from [latex]5[/latex] as [latex]9+4+1+16+121=151[/latex]. So, the sum of the squared deviations from [latex]5[/latex] is smaller than the sum of the squared deviations from [latex]10[/latex]. Is there a value for which the sum of the squared deviations is even smaller than [latex]151[/latex]? Yes, it is possible to reach [latex]134.8[/latex]. Can you find the target number for which the sum of squared deviations is [latex]134.8[/latex]?

The target that minimizes the sum of squared deviations provides another useful definition of central tendency (the last one to be discussed in this section). It can be challenging to find the value that minimizes this sum.

Measures of Central Tendency

In the previous section we saw that there are several ways to define central tendency. This section defines the three most common measures of central tendency: the mean, the median, and the mode. The relationships among these measures of central tendency and the definitions given in the previous section will probably not be obvious to you.

This section gives only the basic definitions of the mean, median and mode.

Arithmetic Mean

The arithmetic mean—the sum of the numbers divided by the number of numbers—is the most common measure of central tendency. The symbol “[latex]\mu[/latex]” (pronounced “mew”) is used for the mean of a population. The symbol [latex]M[/latex] is used for the mean of a sample. (In advanced statistics textbooks, the symbol [latex]\bar{X}[/latex], pronounced “x bar,” may be used to represent the mean of a sample.) The formula for [latex]\mu[/latex] is shown below:

[latex]\mu=\frac{\sum X}{N}[/latex]

where [latex]\sum X[/latex] is the sum of all the numbers in the population and [latex]N[/latex] is the number of numbers in the population.

The formula for [latex]M[/latex] is essentially identical:

[latex]M=\frac{\sum X}{n}[/latex]

where [latex]\sum X[/latex] is the sum of all the numbers in the sample and [latex]n[/latex] is the number of numbers in the sample. The only distinction between these two equations is whether we are referring to the population (in which case we use [latex]\mu[/latex] and [latex]N[/latex]) or a sample of that population (in which case we use [latex]M[/latex] and [latex]n[/latex]).

As an example, the mean of the numbers [latex]1,2,3,6,8[/latex] is [latex]\frac{20}{5}=4[/latex] regardless of whether the numbers constitute the entire population or just a sample from the population.

Figure 6.3.6 shows the number of touchdown (TD) passes thrown by each of the [latex]31[/latex] teams in the National Football League in the 2000 season. The mean number of touchdown passes thrown is [latex]20.45[/latex], as shown below.

[latex]\mu=\frac{\sum X}{N}=\frac{634}{31}=20.45[/latex]

Although the arithmetic mean is not the only “mean” (there is also a geometric mean, a harmonic mean, and many others that are all beyond the scope of this course), it is by far the most commonly used. Therefore, if the term “mean” is used without specifying whether it is the arithmetic mean, the geometric mean, or some other mean, it is assumed to refer to the arithmetic mean.

Try It

1) If the mean time to respond to a stimulus is much higher than the median time to respond, what can you say about the shape of the distribution of response times?

Solution

If the mean is higher, that means it is farther out into the right-hand tail of the distribution. Therefore, we know this distribution is positively skewed.

Median

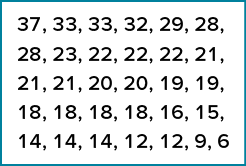

The median is also a frequently used measure of central tendency. The median is the midpoint of a distribution: the same number of scores is above the median as below it. For the data in Figure 6.3.6, there are [latex]31[/latex] scores. The [latex]16^{th}[/latex] highest score (which equals [latex]20[/latex]) is the median because there are [latex]15[/latex] scores below the [latex]16^{th}[/latex] score and [latex]15[/latex] scores above the [latex]16^{th}[/latex] score. The median can also be thought of as the [latex]50^{th}[/latex] percentile.

When there is an odd number of numbers, the median is simply the middle number. For example, the median of [latex]2,4[/latex], and [latex]7[/latex] is [latex]4[/latex]. When there is an even number of numbers, the median is the mean of the two middle numbers. Thus, the median of the numbers [latex]2,4,7,12[/latex] is:

[latex]\frac{4+7}{2}=5.5[/latex]

When there are numbers with the same values, each appearance of that value gets counted. For example, in the set of numbers [latex]1,3,4,4,5,8[/latex], and [latex]9[/latex], the median is [latex]4[/latex] because there are three numbers ([latex]1,3[/latex], and [latex]4[/latex]) below it and three numbers ([latex]5,8[/latex], and [latex]9[/latex]) above it. If we only counted [latex]4[/latex] once, the median would incorrectly be calculated at [latex]4.5[/latex] ([latex]4+5[/latex], divided by [latex]2[/latex]). When in doubt, writing out all of the numbers in order and marking them off one at a time from the top and bottom will always lead you to the correct answer.

Mode

The mode is the most frequently occurring value in the dataset. For the data in Figure 6.3.6, the mode is [latex]18[/latex] since more teams ([latex]4[/latex]) had [latex]18[/latex] touchdown passes than any other number of touchdown passes. With continuous data, such as response time measured to many decimals, the frequency of each value is one since no two scores will be exactly the same (see discussion of continuous variables in 6.1 Basics of Statistics). Therefore the mode of continuous data is normally computed from a grouped frequency distribution. Table 6.3.4 shows a grouped frequency distribution for the target response time data. Since the interval with the highest frequency is [latex]600[/latex] to [latex]700[/latex], the mode is the middle of that interval ([latex]650[/latex]). Although the mode is not frequently used for continuous data, it is nevertheless an important measure of central tendency as it is the only measure we can use on qualitative or categorical data.

|

Range |

Frequency |

|---|---|

|

[latex]500[/latex] to [latex]600[/latex] |

[latex]3[/latex] |

|

[latex]600[/latex] to [latex]700[/latex] |

[latex]5[/latex] |

|

[latex]700[/latex] to [latex]800[/latex] |

[latex]5[/latex] |

|

[latex]800[/latex] to [latex]900[/latex] |

[latex]5[/latex] |

|

[latex]900[/latex] to [latex]1000[/latex] |

[latex]0[/latex] |

|

[latex]1000[/latex] to [latex]1100[/latex] |

[latex]1[/latex] |

More on the Mean and Median

In the section What Is Central Tendency? above, we saw that the centre of a distribution could be defined three ways: (1) the point on which a distribution would balance, (2) the value whose average absolute deviation from all the other values is minimized, and (3) the value whose squared deviation from all the other values is minimized. The mean is the point on which a distribution would balance, the median is the value that minimizes the sum of absolute deviations, and the mean is the value that minimizes the sum of the squared deviations.

Table 6.3.5 shows the absolute and squared deviations of the numbers [latex]2,3,4,9[/latex], and [latex]16[/latex] from their median of [latex]4[/latex] and their mean of [latex]6.8[/latex]. You can see that the sum of absolute deviations from the median ([latex]20[/latex]) is smaller than the sum of absolute deviations from the mean ([latex]22.8[/latex]). On the other hand, the sum of squared deviations from the median ([latex]174[/latex]) is larger than the sum of squared deviations from the mean ([latex]134.8[/latex]).

|

Value |

Absolute Deviation from Median |

Absolute Deviation from Mean |

Squared Deviation from Median |

Squared Deviation from Mean |

|---|---|---|---|---|

|

[latex]2[/latex] |

[latex]2[/latex] |

[latex]4.8[/latex] |

[latex]4[/latex] |

[latex]23.04[/latex] |

|

[latex]3[/latex] |

[latex]1[/latex] |

[latex]3.8[/latex] |

[latex]1[/latex] |

[latex]14.44[/latex] |

|

[latex]4[/latex] |

[latex]0[/latex] |

[latex]2.8[/latex] |

[latex]0[/latex] |

[latex]7.84[/latex] |

|

[latex]9[/latex] |

[latex]5[/latex] |

[latex]2.2[/latex] |

[latex]25[/latex] |

[latex]4.84[/latex] |

|

[latex]16[/latex] |

[latex]12[/latex] |

[latex]9.2[/latex] |

[latex]144[/latex] |

[latex]84.64[/latex] |

|

Total |

[latex]20[/latex] |

[latex]22.8[/latex] |

[latex]174[/latex] |

[latex]134.80[/latex] |

Figure 6.3.7 shows that the distribution balances at the mean of [latex]6.8[/latex] and not at the median of [latex]4[/latex]. The relative advantages and disadvantages of the mean and median are discussed in the section Comparing Measures of Central Tendency.

When a distribution is symmetric, then the mean and the median are the same. Consider the following distribution: [latex]1,3,4,5,6,7,9[/latex]. The mean and median are both [latex]5[/latex]. The mean, median, and mode are identical in the bell-shaped normal distribution.

Try It

2) For the following problem, use the following scores: [latex]5,8,8,8,7,8,9,12,8,9,8,10,7,9,7,6,9 10,11,8[/latex].

a. Create a histogram of these data. What is the shape of this histogram?

b. How do you think the three measures of central tendency will compare to each other in this dataset?

c. Compute the sample mean, the median, and the mode

d. Draw and label lines on your histogram for each of the above values. Do your results match your predictions?

Solution

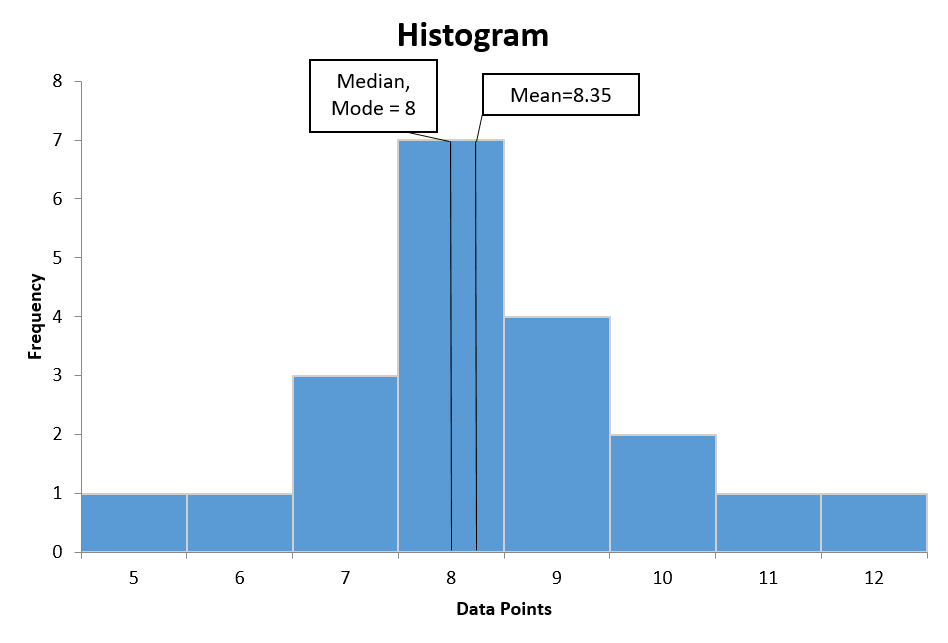

a.

b. This histogram is slightly positively skewed. Since there is a positive skew, we would anticipate that the mean would be greater than the median. The mode and the median may be similar here because of the number of data points in the middle of the distribution.

c. median = [latex]8[/latex], mode = [latex]8[/latex], mean = [latex]8.35[/latex]

d. The mean being larger than the median was expected with the positive skew. The mode and the median being equal makes sense since the skew is minimal.

Comparing Measures of Central Tendency

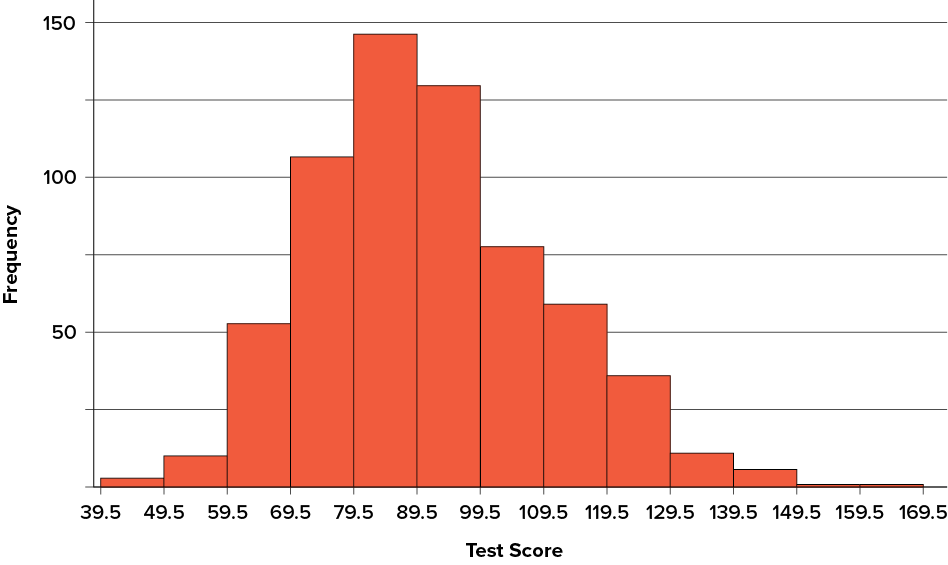

How do the various measures of central tendency compare with each other? For symmetric distributions, the mean and median are the same value, as is the mode except in bimodal distributions. However, differences among the measures occur with skewed distributions. Figure 6.3.9 shows the distribution of [latex]642[/latex] scores on an introductory psychology test. Notice this distribution has a slight positive skew.

Measures of central tendency are shown in Table 6.3.6. Notice they do not differ greatly, with the exception that the mode is considerably lower than the other measures. When distributions have a positive skew, the mean is typically higher than the median, although it may not be in bimodal distributions. For these data, the mean of [latex]91.58[/latex] is higher than the median of [latex]90[/latex]. This pattern holds true for any skew: the mode will remain at the highest point in the distribution, the median will be pulled slightly out into the skewed tail (the longer end of the distribution), and the mean will be pulled the farthest out. Thus, the mean is more sensitive to skew than the median or mode, and in cases of extreme skew, the mean may no longer be appropriate to use.

| Measure | Value |

| Mode | [latex]84.00[/latex] |

| Median | [latex]90.00[/latex] |

| Mean | [latex]91.58[/latex] |

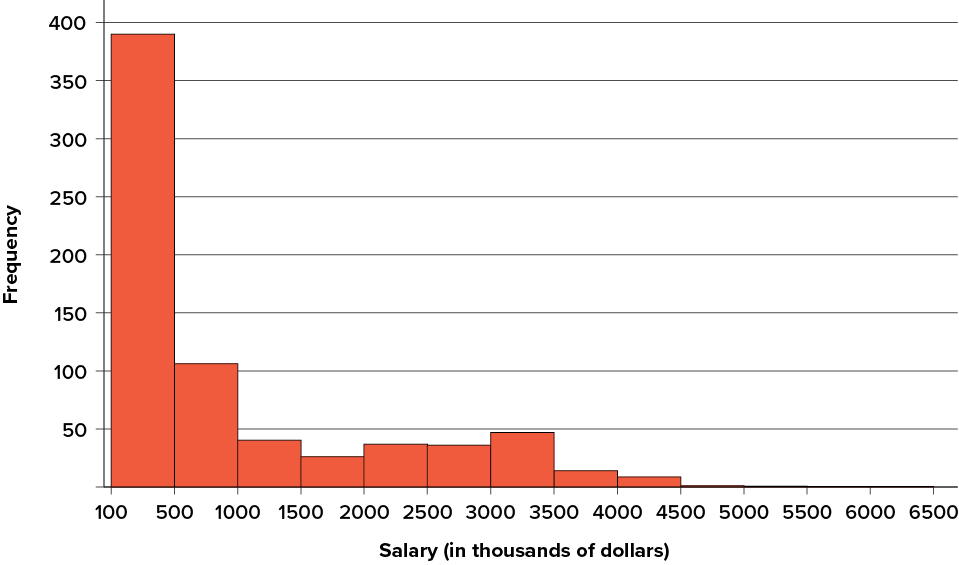

The distribution of baseball salaries (in 1994) shown in Figure 6.3.10 has a much more pronounced skew than the distribution in Figure 6.3.9.

Table 6.3.7 shows the measures of central tendency for these data. The large skew results in very different values for these measures. No single measure of central tendency is sufficient for data such as these. If you were asked the very general question: “So, what do baseball players make?” and answered with the mean of [latex]\$1,183,000[/latex], you would not have told the whole story since only about one third of baseball players make that much. If you answered with the mode of [latex]\$109,000[/latex] or the median of [latex]\$500,000[/latex], you would not be giving any indication that some players make many millions of dollars. Fortunately, there is no need to summarize a distribution with a single number. When the various measures differ, our opinion is that you should report the mean and median. Sometimes it is worth reporting the mode as well. In the media, the median is usually reported to summarize the centre of skewed distributions. You will hear about median salaries and median prices of houses sold, etc. This is better than reporting only the mean, but it would be informative to hear more statistics.

| Measure | Value |

| Mode | [latex]190[/latex] |

| Median | [latex]500[/latex] |

| Mean | [latex]1\text{,}183[/latex] |

Outliers

Outliers are data values that are significantly different than the other data values present. These can sometimes arise due to error in collecting the data, but can also be true data values. For example, if one baseball player made [latex]\$6,500,000[/latex], the mean would be pulled even more towards that value. Outliers affect the mean more than the median or mode. We say that the median and mode are more resistant to outliers.

Try It

3) Compare the mean, median, and mode in terms of their sensitivity to extreme scores.

Solution

The mean is the most sensitive to extreme scores as all data values affect the mean. The median is sensitive to the middle points of a data set, and so it is resistant to extreme scores. The mode is sensitive to the most frequent scores and so it is resistant to extreme scores. The best measure of central tendency when there are extreme scores is the median.

Try It

4) Your younger brother comes home one day after taking a science test. He says someone at school told him that “[latex]60\%[/latex] of the students in the class scored above the median test grade.” What is wrong with this statement?

What if he had said “[latex]60\%[/latex] of the students scored above the mean?”

Solution

The median is defined as the value with [latex]50\%[/latex] of scores above it and [latex]50\%[/latex] of scores below it; therefore, [latex]60\%[/latex] of score cannot fall above the median. If [latex]60\%[/latex] of scores fall above the mean, that would indicate that the mean has been pulled down below the value of the median, which means that the distribution is negatively skewed

Spread and Variability

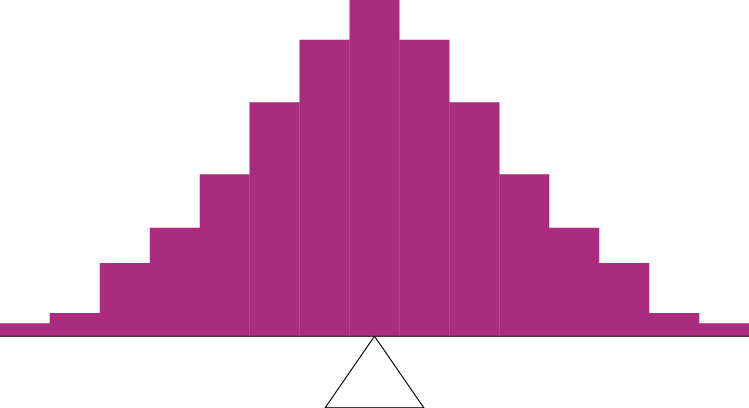

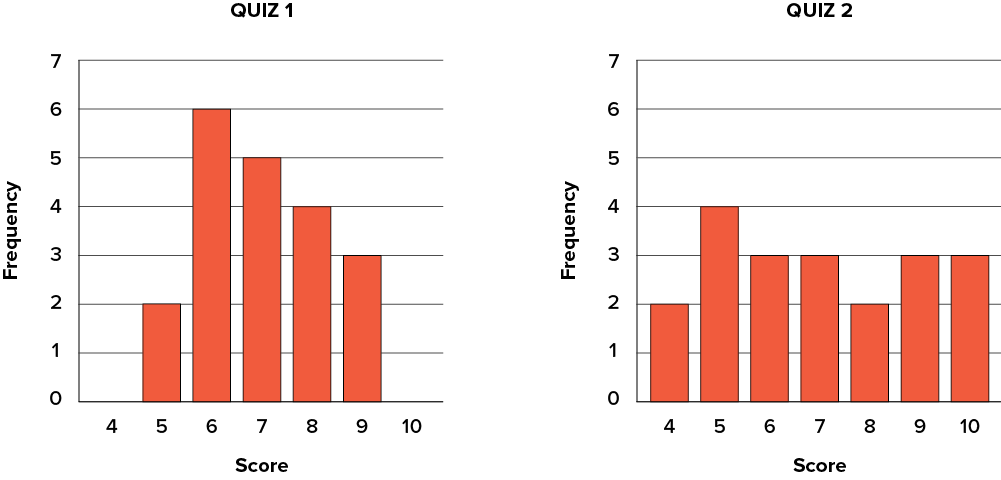

Variability refers to how “spread out” a group of scores is. To see what we mean by spread out, consider the graphs in Figure 6.3.11. These graphs represent the scores on two quizzes. The mean score for each quiz is [latex]7.0[/latex]. Despite the equality of means, you can see that the distributions are quite different. Specifically, the scores on Quiz 1 are more densely packed and those on Quiz 2 are more spread out. The differences among students were much greater on Quiz 2 than on Quiz 1.

The terms variability, spread, and dispersion are synonyms and refer to how spread out a distribution is. Just as in the section on central tendency where we discussed measures of the centre of a distribution of scores, in this section we will discuss measures of the variability of a distribution. There are three frequently used measures of variability: range, variance, and standard deviation. In the next few paragraphs, we will look at each of these measures of variability in more detail.

Range

The range is the simplest measure of variability to calculate, and one you have probably encountered many times in your life. The range is simply the highest score minus the lowest score. Let’s take a few examples. What is the range of the following group of numbers: [latex]10,2,5,6,7,3,4[/latex]? Well, the highest number is [latex]10[/latex], and the lowest number is [latex]2[/latex], so [latex]10-2=8[/latex]. The range is [latex]8[/latex].

Let’s take another example. Here’s a dataset with [latex]10[/latex] numbers: [latex]99,45,23,67,45,91,82,78,62,51[/latex]. What is the range? The highest number is [latex]99[/latex] and the lowest number is [latex]23[/latex], so [latex]99-23=76[/latex]; the range is [latex]76[/latex]. Now consider the two quizzes shown in Figure 6.3.10. On Quiz 1, the lowest score is [latex]5[/latex] and the highest score is [latex]9[/latex]. Therefore, the range is [latex]4[/latex]. The range on Quiz 2 was larger: the lowest score was [latex]4[/latex] and the highest score was [latex]10[/latex]. Therefore the range is [latex]6[/latex].

The problem with using range is that it is extremely sensitive to outliers, and one number far away from the rest of the data will greatly alter the value of the range. For example, in the set of numbers [latex]1,3,4,4,5,8[/latex], and [latex]9[/latex], the range is [latex]8(9-1)[/latex]. However, if we add a single person whose score is nowhere close to the rest of the scores, say, [latex]20[/latex], the range more than doubles from [latex]8[/latex] to [latex]19[/latex].

Interquartile Range

The interquartile range (IQR) is the range of the middle [latex]50\%[/latex] of the scores in a distribution and is sometimes used to communicate where the bulk of the data in the distribution are located. It is computed as follows:

[latex]\text{IQR}=75^{th}\;\text{percentile}-25^{th}\;\text{percentile}[/latex]

For Quiz 1, the [latex]75^{th}[/latex] percentile is [latex]8[/latex] and the [latex]25^{th}[/latex] percentile is [latex]6[/latex]. The interquartile range is therefore [latex]2[/latex]. For Quiz 2, which has greater spread, the [latex]75^{th}[/latex] percentile is [latex]9[/latex], the [latex]25^{th}[/latex] percentile is [latex]5[/latex], and the interquartile range is [latex]4[/latex]. Recall that in the discussion of box plots, the [latex]75^{th}[/latex] percentile was called the upper hinge and the [latex]25^{th}[/latex] percentile was called the lower hinge. Using this terminology, the interquartile range is referred to as the H-spread.

Sum of Squares

Variability can also be defined in terms of how close the scores in the distribution are to the middle of the distribution. Using the mean as the measure of the middle of the distribution, we can see how far, on average, each data point is from the centre. The data from Quiz 1 are shown in Table 6.3.8.

There are a few things to note about how Table 6.3.8 is formatted. The raw data scores [latex](X)[/latex] are always placed in the left-most column. This column is then summed at the bottom [latex]\left(\sum X\right)[/latex] to facilitate calculating the mean by dividing the sum of [latex]X[/latex] values by the number of scores in the table [latex](N)[/latex]. The mean score is [latex]7.0[/latex] [latex]\left(\frac{\sum X}{N}=\frac{140}{20}=7.0\right)[/latex]. Once you have the mean, you can easily work your way down the second column calculating the deviation scores [latex](X-M)[/latex], representing how far each score deviates from the mean, here calculated as the score ([latex]X[/latex] value) minus [latex]7[/latex]. This column is also summed and has a very important property: it will always sum to [latex]0[/latex], or close to zero if you have rounding error due to many decimal places [latex]\left(\sum(X-M)=0\right)[/latex]. This step is used as a check on your math to make sure you haven’t made a mistake. If this column sums to [latex]0[/latex], you can move on to filling in the third column, which is composed of the squared deviation scores. The deviation scores are squared to remove negative values and appear in the third column [latex](X-M)^2[/latex]. When these values are summed, you have the sum of the squared deviations, or the sum of squares [latex](SS)[/latex], calculated with the formula

[latex]SS=\sum (X-M)^2[/latex]

|

[latex]X[/latex] |

[latex]X-M[/latex] |

[latex](X-M)^2[/latex] |

[latex]X^2[/latex] |

|---|---|---|---|

|

[latex]9[/latex] |

[latex]2[/latex] |

[latex]4[/latex] |

[latex]81[/latex] |

|

[latex]9[/latex] |

[latex]2[/latex] |

[latex]4[/latex] |

[latex]81[/latex] |

|

[latex]9[/latex] |

[latex]2[/latex] |

[latex]4[/latex] |

[latex]81[/latex] |

|

[latex]8[/latex] |

[latex]1[/latex] |

[latex]1[/latex] |

[latex]64[/latex] |

|

[latex]8[/latex] |

[latex]1[/latex] |

[latex]1[/latex] |

[latex]64[/latex] |

|

[latex]8[/latex] |

[latex]1[/latex] |

[latex]1[/latex] |

[latex]64[/latex] |

|

[latex]8[/latex] |

[latex]1[/latex] |

[latex]1[/latex] |

[latex]64[/latex] |

|

[latex]7[/latex] |

[latex]0[/latex] |

[latex]0[/latex] |

[latex]49[/latex] |

|

[latex]7[/latex] |

[latex]0[/latex] |

[latex]0[/latex] |

[latex]49[/latex] |

|

[latex]7[/latex] |

[latex]0[/latex] |

[latex]0[/latex] |

[latex]49[/latex] |

|

[latex]7[/latex] |

[latex]0[/latex] |

[latex]0[/latex] |

[latex]49[/latex] |

|

[latex]7[/latex] |

[latex]0[/latex] |

[latex]0[/latex] |

[latex]49[/latex] |

|

[latex]6[/latex] |

[latex]-1[/latex] |

[latex]1[/latex] |

[latex]36[/latex] |

|

[latex]6[/latex] |

[latex]-1[/latex] |

[latex]1[/latex] |

[latex]36[/latex] |

|

[latex]6[/latex] |

[latex]-1[/latex] |

[latex]1[/latex] |

[latex]36[/latex] |

|

[latex]6[/latex] |

[latex]-1[/latex] |

[latex]1[/latex] |

[latex]36[/latex] |

|

[latex]6[/latex] |

[latex]-1[/latex] |

[latex]1[/latex] |

[latex]36[/latex] |

|

[latex]6[/latex] |

[latex]-1[/latex] |

[latex]1[/latex] |

[latex]36[/latex] |

|

[latex]5[/latex] |

[latex]-2[/latex] |

[latex]4[/latex] |

[latex]25[/latex] |

|

[latex]5[/latex] |

[latex]-2[/latex] |

[latex]4[/latex] |

[latex]25[/latex] |

|

[latex]\sum X=140[/latex] |

[latex]\sum(X-M)=0[/latex] |

[latex]\sum(X-M)^2=30[/latex] |

[latex]\sum X^2=1\text{,}010[/latex] |

|

[latex](\sum X)^2=19,600[/latex] |

|

|

|

[latex]SS=4+4+4+1+1+1+1+0+0+0+0+0+1+1+1+1+1+1+4+4=30[/latex]

The preceding formula is called the definitional formula, as it shows the logic behind the sum of squared deviations calculation. As mentioned earlier, there can be rounding errors in calculating the deviation scores. Also, when the set of scores is large, calculating the deviation scores, squaring the scores, and then summing those values can be tedious. To simplify the sum of squares calculation, the computational formula is used instead. The computational formula is as follows:

[latex]SS=\sum X^2-\frac{(\sum X)^2}{N}[/latex]

The last column in Table 6.3.8 represents the [latex]X[/latex] values squared and then summed — [latex]\sum X^2[/latex]. At the bottom of the first column, the [latex]\sum X[/latex] value is squared — [latex](\sum X)^2[/latex]. These are the values used in the computational formula for the sum of squares. As you can see in the calculation below, the [latex]SS[/latex] value is the same for both the definitional formula and the computational formula:

[latex]SS=\sum X^2-\frac{(\sum X)^2}{N}=1,010=\frac{19,600}{20}=30[/latex]

As we will see, the sum of squares appears again and again in different formulas — it is a very important value, and using the [latex]X[/latex] and [latex]X^2[/latex] columns in this table makes it simple to calculate the [latex]SS[/latex] without error.

Variance

Now that we have the sum of squares calculated, we can use it to compute our formal measure of average distance from the mean—the variance. The variance is defined as the average squared difference of the scores from the mean. We square the deviation scores because, as we saw in the second column of Table 6.3.8, the sum of raw deviations is always [latex]0[/latex], and there’s nothing we can do mathematically without changing that.

The population parameter for variance is [latex]\sigma^2[/latex] (“sigma-squared”) and is calculated as:

[latex]\sigma^2=\frac{SS}{N}[/latex]

We can use the value we previously calculated for [latex]SS[/latex] in the numerator, then simply divide that value by [latex]N[/latex] to get variance. If we assume that the values in Table 6.3.8 represent the full population, then we can take our value of sum of squares and divide it by [latex]N[/latex] to get our population variance:

[latex]\sigma^2=\frac{30}{20}=1.5[/latex]

So, on average, scores in this population are [latex]1.5[/latex] squared units away from the mean. This measure of spread exhibits much more robustness (a term used by statisticians to mean resilience or resistance to outliers) than the range, so it is a much more useful value to compute. Additionally, as we will see in future chapters, variance plays a central role in inferential statistics.

The sample statistic used to estimate the variance is [latex]s^2[/latex] (“s-squared”):

[latex]s^2=\frac{SS}{N-1}=\frac{SS}{df}[/latex]

This formula is very similar to the formula for the population variance with one change: we now divide by [latex]N-1[/latex] instead of [latex]N[/latex]. The value [latex]N-1[/latex] has a special name: the degrees of freedom (abbreviated as df). You don’t need to understand in depth what degrees of freedom are (essentially they account for the fact that we have to use a sample statistic to estimate the mean [latex]M[/latex] before we estimate the variance) in order to calculate variance, but knowing that the denominator is called df provides a nice shorthand for the variance formula:

[latex]\frac{SS}{df}[/latex]

Going back to the values in Table 6.3.8 and treating those scores as a sample, we can estimate the sample variance as:

[latex]s^2=\frac{30}{20-1}=1.58[/latex]

Notice that this value is slightly larger than the one we calculated when we assumed these scores were the full population. This is because our value in the denominator is slightly smaller, making the final value larger. In general, as your sample size [latex]N[/latex] gets bigger, the effect of subtracting [latex]1[/latex] becomes less and less. Comparing a sample size of [latex]10[/latex] to a sample size of [latex]1000[/latex]; [latex]10-1=9[/latex], or [latex]90\%[/latex] of the original value, whereas [latex]1000-1=999[/latex], or [latex]99.9\%[/latex] of the original value. Thus, larger sample sizes will bring the estimate of the sample variance closer to that of the population variance. This is a key idea and principle in statistics that we will see over and over again: larger sample sizes better reflect the population.

Standard Deviation

The standard deviation is simply the square root of the variance. This is a useful and interpretable statistic because taking the square root of the variance (recalling that variance is the average squared difference) puts the standard deviation back into the original units of the measure we used. Thus, when reporting descriptive statistics in a study, scientists virtually always report mean and standard deviation. Standard deviation is therefore the most commonly used measure of spread for our purposes, representing the average distance of the scores from the mean.

The population parameter for standard deviation is [latex]\sigma[/latex] (“sigma”), which, intuitively, is the square root of the variance parameter [latex]\sigma^2[/latex] (occasionally, the symbols work out nicely that way). The formula is simply the formula for variance under a square root sign:

[latex]\sigma=\sqrt{\frac{SS}{N}}=\sqrt{\frac{30}{20}}=\sqrt{1.5}=1.22[/latex]

The sample statistic follows the same conventions and is given as [latex]s[/latex] in mathematical formulas.

[latex]s=\sqrt{\frac{SS}{df}}=\sqrt{\frac{30}{20-1}}=\sqrt{1.58}=1.26[/latex]

Try It

5) Compute the sample mean and sample standard deviation for the following scores: [latex]=8,-4,-7,-6,-8,-5,-7,-9,-2,0[/latex]

Solution

Sample mean [latex]-5.6[/latex] and sample standard deviation [latex]2.875[/latex].

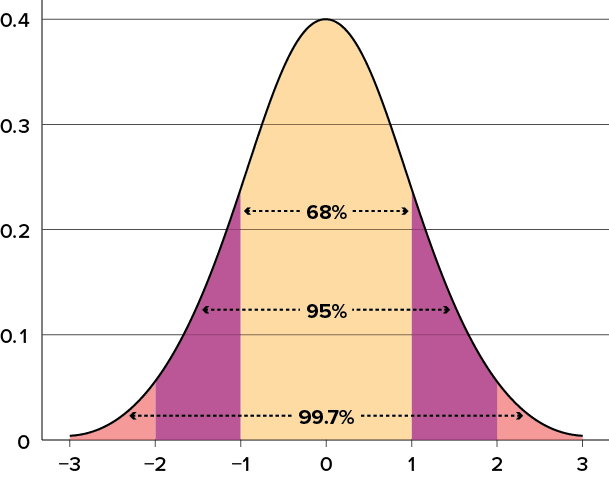

The standard deviation is an especially useful measure of variability when the distribution is normal or approximately normal because the proportion of the distribution within a given number of standard deviations from the mean can be calculated. For example, [latex]68\%[/latex] of the distribution is within one standard deviation (above and below) of the mean and approximately [latex]95\%[/latex] of the distribution is within two standard deviations of the mean, as shown in Figure 6.3.12. Therefore, if you had a normal distribution with a mean of [latex]50[/latex] and a standard deviation of [latex]10[/latex], then [latex]68\%[/latex] of the distribution would be between [latex]50-10=40[/latex] and [latex]50+10=60[/latex]. Similarly, about [latex]95\%[/latex] of the distribution would be between [latex]50-2\times10=30[/latex] and [latex]50+2\times10=70[/latex].

Try It

6) Make up three datasets with five numbers each that have:

a. The same mean but different standard deviations.

b. The same mean but different medians.

c. The same median but different means.

Solution

Note, many answers are possible for this question.

a. 1,2,3,4,5 (mean 3, standard deviation 1.41) vs 2,3,3,3,4 (mean 3, standard deviation 0.63)

b. 1,2,3,4,5 (mean 3 median 3) vs 1,2,4,4,4 (mean 3 median 4)

c. 1,2,3,4,5 (mean 3 median 3) vs 1,2,3,5,7 (mean 3.6 median 3)

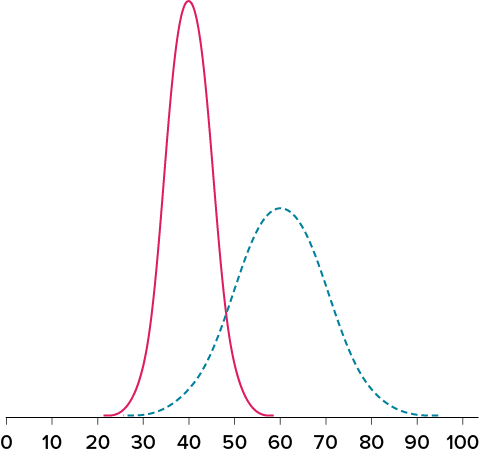

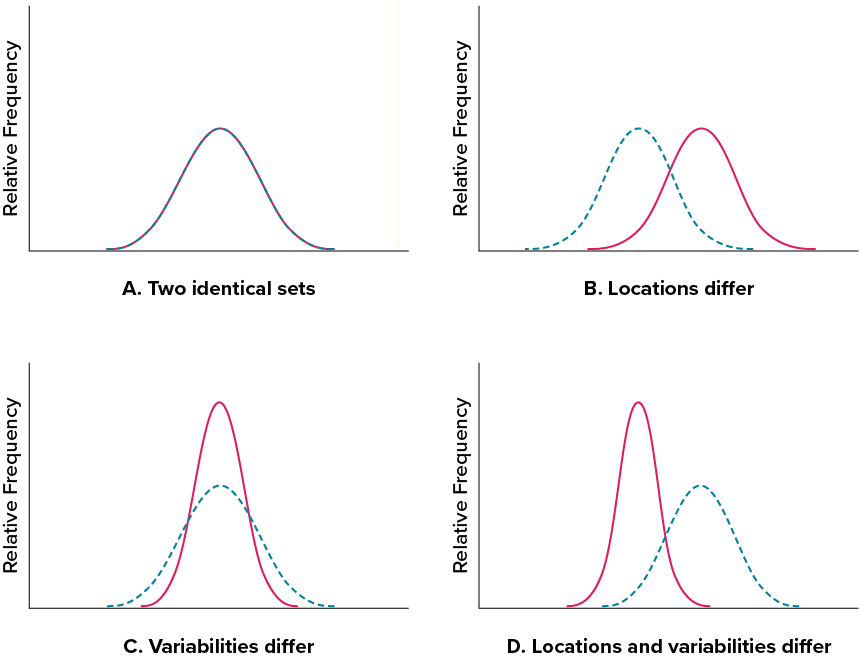

Figure 6.3.13 shows two normal distributions. The red (left-most) distribution has a mean of [latex]40[/latex] and a standard deviation of [latex]5[/latex]; the blue (right-most) distribution has a mean of [latex]60[/latex] and a standard deviation of [latex]10[/latex]. For the red distribution, [latex]68\%[/latex] of the distribution is between [latex]45[/latex] and [latex]55[/latex]; for the blue distribution, [latex]68\%[/latex] is between [latex]50[/latex] and [latex]70[/latex]. Notice that as the standard deviation gets smaller, the distribution becomes much narrower, regardless of where the centre of the distribution (mean) is. Figure 6.3.14 presents several more examples of this effect.

Try It

7) Compute the population mean and population standard deviation for the following scores (remember to use the sum of squares table): [latex]5,7,8,3,4,4,2,7,1,6[/latex]

Solution

[latex]\mu=4.80,\;s^2=2.36[/latex]

Try It

8) Compute the range, sample variance, and sample standard deviation for the following scores: [latex]25,36,41,28,29,32,39,37,34,34,37,35,30,36,31,31[/latex].

Solution

[latex]\text{Range}\;=16,\;s^2=18.4,\;s=4.29[/latex]

Try It

9) Using the same values from the previous problem, calculate the range, sample variance, and sample standard deviation, but this time include [latex]65[/latex] in the list of values. How did each of the three values change?

Solution

[latex]\text{Range}\;=40,\;s^2=75.8,\;s=8.71[/latex] Each of the three values increased after including the extreme value of 65.

Try It

10) Two normal distributions have exactly the same mean, but one has a standard deviation of [latex]20[/latex] and the other has a standard deviation of [latex]10[/latex]. How would the shapes of the two distributions compare?

Solution

If both distributions are normal, then they are both symmetrical, and having the same mean causes them to overlap with one another. The distribution with the standard deviation of [latex]10[/latex] will be narrower than the other distribution.

Self Check

a) After completing the exercises, use this checklist to evaluate your mastery of the objectives of this section.

b) After looking at the checklist, do you think you are well-prepared for the next section? Why or why not?

Glossary

- arithmetic mean

- Perhaps the most common measure of central tendency, the mean is the mathematical average of the scores in a sample.

- central tendency

- The centre or middle of a distribution. There are many measures of central tendency. The most common are the mean, median, and mode.

- degrees of freedom

- The number of independent pieces of information that go into the estimate. In general, the degrees of freedom for an estimate is equal to the number of values minus the number of parameters estimated en route to the estimate in question.

- dispersion

- The extent to which values differ from one another; that is, how much they vary. Dispersion is also called variability or spread.

- interquartile range (IQR)

- The range of the middle 50% of the scores in a distribution; computed by subtracting the 25th percentile from the 75th percentile. The interquartile range is a robust measure of central tendency.

- median

- The median is a popular measure of central tendency. It is the 50th percentile of a distribution.

- mode

- A measure of central tendency, the mode is the most frequent value in a distribution.

- range

- The difference between the maximum and minimum values of a variable or distribution. The range is the simplest measure of variability.

- robustness

- Something is robust if it holds up well in the face of adversity. A measure of central tendency or variability is considered robust if it is not greatly affected by a few extreme scores. A statistical test is considered robust if it works well in spite of moderate violations of the assumptions on which it is based.

- spread

- The extent to which values differ from one another; that is, how much they vary. Spread is also called variability or dispersion.

- standard deviation

- The standard deviation is a widely used measure of variability. It is computed by taking the square root of the variance.

- sum of squares

- The sum of squared deviations, or differences, between scores and the mean in a numeric dataset.

- variability

- The extent to which values differ from one another; that is, how much they vary. Variability can also be thought of as how spread out or dispersed a distribution is.

- variance

- The variance is a widely used measure of variability. It is defined as the mean squared deviation of scores from the mean.

The center or middle of a distribution. There are many measures of central tendency. The most common are the mean, median, and mode.

Perhaps the most common measure of central tendency, the mean is the mathematical average of the scores in a sample.

The median is a popular measure of central tendency. It is the 50th percentile of a distribution.

A measure of central tendency, the mode is the most frequent value in a distribution.

The extent to which values differ from one another; that is, how much they vary. Variability can also be thought of as how spread out or dispersed a distribution is.

The extent to which values differ from one another; that is, how much they vary. Spread is also called variability or dispersion.

The extent to which values differ from one another; that is, how much they vary. Dispersion is also called variability or spread.

The difference between the maximum and minimum values of a variable or distribution. The range is the simplest measure of variability.

The variance is a widely used measure of variability. It is defined as the mean squared deviation of scores from the mean.

The standard deviation is a widely used measure of variability. It is computed by taking the square root of the variance.

The sum of squared deviations, or differences, between scores and the mean in a numeric dataset.

Something is robust if it holds up well in the face of adversity. A measure of central tendency or variability is considered robust if it is not greatly affected by a few extreme scores. A statistical test is considered robust if it works well in spite of moderate violations of the assumptions on which it is based.

The number of independent pieces of information that go into the estimate. In general, the degrees of freedom for an estimate is equal to the number of values minus the number of parameters estimated en route to the estimate in question.

The range of the middle 50% of the scores in a distribution; computed by subtracting the 25th percentile from the 75th percentile. The interquartile range is a robust measure of central tendency.