The Lives of Ethical Philosophers

The Ethics of Aristotle: Virtue Theory

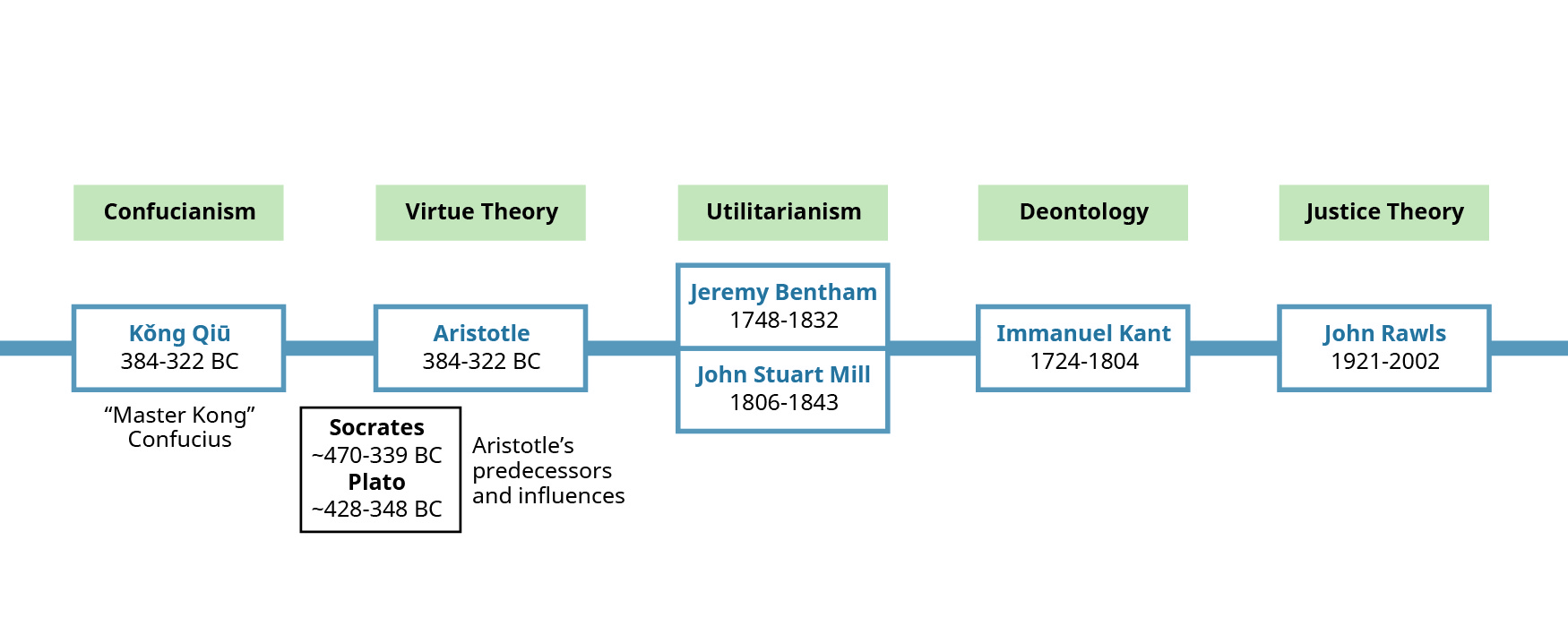

Aristotle, 384 BCE–322 BCE

Aristotle (384 BCE–322 BCE) was a student of Plato, who was himself a student of Socrates, one of the founders of Western philosophy. Aristotle spent about twenty years at Plato’s Academy in Athens, first as a student and then as an associate. Later he tutored the young Alexander of Macedonia, who would become Alexander the Great.1

Aristotle eventually returned to Athens, where he opened his own school, the Lyceum, and where he studied and taught extensively in philosophy, government, and the natural and social sciences. He, along with most of the classical Greek thinkers, believed that all academic disciplines were linked. They were far less inclined than we are to rigidly separate academic subjects.

Aristotle’s principal work on ethics, The Nicomachean Ethics, was dedicated either to his father or to his son, both of whom were named Nicomachus, a popular name within his family. In Ethics, where Aristotle laid out the essence of virtue theory, he stated that if we truly desire people to be ethical, then we must have them practice ethics from an early age. Just as Plato claimed that unethical individuals are simply uneducated in ethics, so Aristotle held that constant practice is the best means by which to create ethical humans. He contended that men (for Aristotle, unlike Plato, education was restricted to males) who are taught to be ethical in minor matters as boys will automatically act ethically in all matters as they mature. Of course, a legitimate question regarding both philosophers is whether we believe they are correct on these points.

In Ethics, Aristotle introduced the concept of what is usually referred to as the golden mean of moderation. He believed that every virtue resides somewhere between the vices of defect and excess. That is, one can display either too little or too much of a good thing, or a virtue. The trick, as for Goldilocks, is to have just the right amount of it. Adding to the complexity of this, however, is the fact that striking the right balance between too much and too little does not necessarily put one midway between the two. The mean of moderation is more of a sliding value, fluctuating between defect and excess, but not automatically splitting the difference between them. Depending on the virtue in question, the mean may lie closer to a deficit or to a surplus. For example, take the virtue of courage (Figure). For Aristotle, the mean lay closer to foolhardiness or brashness than to cowardice. It’s not that foolhardiness is less a vice than cowardice; it’s just that courage verges closer to the one than to the other.

What constitutes a virtue in the first place, according to Aristotle? Besides courage, the virtues include wisdom, kindness, love, beauty, honesty, and justice. These approximate the same virtues proclaimed by Plato.

Aristotle also speaks of eudaemonia, a perfect balance of happiness and goodness interpreted classically. Humans experience eudaemonia both in themselves and in the world when they act virtuously and live a life of rational thought and contemplation. As Aristotle argued, rational thought is the activity of the divine, so it is appropriate for men to emulate this practice, as well.

The Ethics of Bentham and Mill: Utilitarianism

Jeremy Bentham, 1748–1832

John Stuart Mill, 1806–1873

Jeremy Bentham, an attorney, became what we would today call a consultant to the British Parliament in the late eighteenth century. He was given the task of devising a method by which members could evaluate the worth of proposed legislation. He took a Latin term (util, or utility, usefulness, or happiness) and calculated the number of utils in proposed bills. Essentially this quantified the scoring of upcoming legislation: those pieces with the greatest number of utils were given a higher ranking than those with the fewest.

Utilitarianism as an ethical system today, though it has application to many areas beyond that simply of lawmaking, holds to this same principle. When making moral decisions, we are advised to select that action which produces the greatest amount of good for the greatest number of people. If the balance of good or happiness or usefulness outweighs that of evil, harm, or unhappiness, then the choice is a moral one. On the other hand, if the balance of evil outweighs that of good, then the choice is immoral. Due to this emphasis on the outcome of ethical decisions, utilitarianism is classified as a consequentialist theory.
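The decision rule just described can be sketched as simple arithmetic. What follows is only an illustration, assuming that good and harm could be scored on one common numeric scale, which the text itself does not specify. The function names (`net_utility`, `is_moral`, `best_action`) and the sample scores are hypothetical.

```python
from typing import Iterable


def net_utility(effects: Iterable[float]) -> float:
    """Sum the effects of an action on every person it touches.

    Positive numbers stand for good or happiness, negative numbers for
    harm or unhappiness. The numeric scale is an illustrative assumption.
    """
    return sum(effects)


def is_moral(effects: Iterable[float]) -> bool:
    """Utilitarian test from the text: the good must outweigh the harm."""
    return net_utility(effects) > 0


def best_action(options: dict[str, list[float]]) -> str:
    """Select the option with the greatest net balance of good."""
    return max(options, key=lambda name: net_utility(options[name]))


# Hypothetical scores for two proposed bills, one entry per person affected.
bills = {
    "bill_a": [3, 2, -1, 2],
    "bill_b": [5, -4, -3, 1],
}

print(best_action(bills))          # the bill with the larger net balance
print(is_moral(bills["bill_b"]))   # whether good outweighs harm for bill_b
```

Because the rule looks only at the total, it says nothing about how the good and the harm are shared out among the people affected.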

Bentham lays much of his theory out in An Introduction to the Principles of Morals and Legislation (1789). There, he proposes the hedonic calculus—from the Greek hedone, or pleasure—as a mechanism by which one can determine the amount of pleasure versus pain in moral choices.

Bentham found a ready supporter and lieutenant in James Mill (1773–1836), a Scottish lawyer who came to assist Bentham in championing utilitarianism as a political philosophy. And when Mill’s son, John Stuart, was born, Bentham, having no children of his own, became his godfather. Together, Bentham and the elder Mill established a curriculum through which the younger Mill was schooled at home, an arrangement that was not uncommon in the early nineteenth century. John Stuart was evidently a prodigy and at an early age was taking on Greek, Latin, economic theory, and higher mathematics.

An odd twist accompanies the arrangements that Bentham made for his body after his death. Because donated cadavers were rare in teaching hospitals and this had led to a rash of grave-robbing, he stipulated that his body be dissected by surgeons for the education of their students, while in the presence of his friends. He further requested that, afterward, his body be re-stitched together, dressed in his own clothes, and perpetually displayed at what was then a new school that he had endowed, University College in London. To this day, Bentham’s corpse, with a wax head to replace the original, mummified one, is posed in a glass case at meetings of the trustees of University College, all by provision of his will.

John Stuart Mill, as he reached adulthood, became a leader of the second generation of utilitarians. He broke with his mentor, though, in one significant way: by distinguishing between different levels of pleasure (higher and lower ones) and offering a means by which to determine where any given pleasure falls. While Bentham insisted that ranking pleasures was subjective and that no one could truly say that some pleasures were objectively more worthy than others, the younger Mill claimed that we could indeed determine which pleasures were the higher ones by polling educated people. Those pleasures which were ranked highest by this select cohort were indeed the greatest ones, and those which were ranked lowest were the inferior ones.

Mill also refined the political applications of utilitarianism and, in so doing, laid the foundation for the political movement of libertarianism. Though he himself never used this term and probably would take issue with being labeled a libertarian were he alive today, he did introduce many of the principles that are esteemed by libertarians. In his most important work on political freedoms, On Liberty (1859), he introduced the no-harm rule. By this, Mill proposed that no individual be deprived of his or her right to act in any fashion, even a self-destructive one, provided that his or her action does not impinge physically on others.2

For example, according to Mill, we may try to persuade an alcoholic to give up drinking. We may marshal our best arguments in an attempt to convince him or her that this is wrong and harmful—“remonstrate” is the verb that he employed. Still, if the alcoholic persists in drinking excessively despite our best efforts to encourage him or her otherwise, then no power of the state ought to be brought to bear to prevent him or her from drinking, unless and until the drinking causes physical harm to others. One can see the application of this to, say, motorcycle-helmet laws today. Mill would hold that even though the injury-preventing capacity of helmets clearly can be demonstrated, bikers still ought to be permitted to refrain from wearing them if they so choose.

The significance of utilitarianism in our era lies in the fact that many of us implement utilitarian thought processes when we have to make many ethical choices, even if we don’t necessarily consider ourselves to be utilitarians. In addition, utilitarianism continues to influence new generations of philosophers and ethical thinkers, such as the Australian Peter Singer, an inspiration for the contemporary animal rights movement who is currently on the faculty at Princeton University.

A telling critique of utilitarianism, however, is the objection that it assays no good or evil in acts themselves, but only in the good or evil that these acts produce. If a proposed municipal, state, or federal law could be demonstrated to serve the defined interests of a majority at the expense of the interests only of a minority, then utilitarianism would suggest that such a law is good and moral. Little recognition appears within utilitarianism of the possibility of tyranny of the majority. Many critics of utilitarianism have scored this weakness of the ethical system. A persuasive instance of this is the short story “The Ones Who Walk Away from Omelas” by the American writer Ursula K. Le Guin (1929–2018).

The Ethics of Kant: Deontology

Immanuel Kant, 1724–1804

The sage of Königsberg in Prussia (now Kaliningrad in Russia), Kant taught philosophy at the University of Königsberg for several years. In fact, throughout a very long lifetime, especially by the standards of the eighteenth century, he never traveled far from the city where he had been born.

Kant’s parents were members of a strict sect of Lutheranism called pietism, and he remained a practicing Christian throughout his life. Though he only occasionally noted religion in his writing, his advocacy of deontology cannot be understood apart from an appreciation of his religious faith. Religion and ethics went hand in hand for Kant, and God always remained the ground or matrix upon which his concept of morality was raised.

Though he never married, Kant was by contemporary accounts no dour loner. He apparently was highly popular among his colleagues and students and often spent evenings eating and drinking in their company. He frequently hosted gatherings at his own lodging and served as a faculty master at the university. He was also a creature of habit, taking such regular walks through the neighborhood surrounding campus that residents could tell the time of day by the moment when he would pass their doorway or window.

The term deontology stems from the Greek deon (duty, obligation, or command). As an ethical system, it is the radical opposite of utilitarianism in that it holds that the consequences of a moral decision are of no matter whatsoever. What is important is the motive for which one has acted. So an action may have beneficial results, but still be unethical if it has been performed for the wrong reasons. Similarly, an action may have catastrophic consequences, but still be deemed moral if it has been done on the basis of the right will.

Not only is deontology non-consequentialist, it is also non-situationalist. That is, an act is either right or wrong always and everywhere. The context surrounding it is unimportant. The best example of this is Kant’s famous allusion to an axe-murderer who, in seeking his victim, must always be told the truth as to his would-be victim’s whereabouts. By Kant’s reasoning, one cannot lie even in this dire circumstance in order to save the life of an innocent person. Kant was not diminishing the significance of human life in holding that the truth must always be told. Instead, he was insisting that truth-telling is one of the inviolable principles that frames our lives. To lie—even in defense of life—is to cheapen and weaken an essential pillar that sustains us. Kant knew that this example would draw critics, but he deliberately chose it anyway in order to demonstrate his conviction about the rightness of certain acts.

Perhaps the best-known element of Kant’s ethics is his explanation of the categorical imperative, laid out in his Fundamental Principles of the Metaphysics of Ethics (1785). This intimidating phrase is just a fancy way of saying that some actions must always be taken and certain standards always upheld, such as truth-telling. The categorical imperative has two expressions, each of which Kant regarded as stating the same thing. In its first expression, the categorical imperative holds that a moral agent (i.e., a human being imbued with reason and a God-given soul) is free to act only in ways that he or she would permit any other moral agent to act. That is, none of us is able to claim that we are special and so entitled to privileges to which others are not also entitled. And in its second expression, the categorical imperative stipulates that we must treat others as ends in themselves and not just as means to our own ends. So we can never simply use people as stepping stones to our own goals and objectives unless we are also willing to be so treated by them.

Despite the enduring popularity of utilitarianism as an ethical system, deontology is probably even more pronounced within our moral sensitivity. Perhaps the best indicator of this is that most of us believe that a person’s motives for acting ought to be taken into account when judging whether those actions are ethical or unethical. A famous literary example is Victor Hugo’s Les Misérables, in which the protagonist, Jean Valjean, becomes a hunted man simply because he stole bread to feed his starving family. By Hugo’s standards, and our own, Valjean truly committed no crime, and the tragedy of his life is that he must spend a significant part of it on the run from the dogged Inspector Javert.

Deontology, like all ethical systems, has its critics, and they zero in on its inflexibility regarding acts which may never be permitted, such as telling a lie, even if it is to save a life. Still, the system continues to inspire a devoted following of philosophers to this day. In the twentieth century, this was notably represented by the British ethicist W. D. Ross (1877–1971) and the American political philosopher John Rawls (1921–2002). Those who embrace deontology are typically attracted to its deep-seated sense of honor and commitment to objective values in addition to its insistence that all humans be treated with dignity and respect.

The Ethics of John Rawls: Justice Theory

John Rawls, 1921–2002

Though Rawls considered himself to be a utilitarian, he also acknowledged that his moral philosophy owed much to the social contract tradition represented over the past few centuries by John Locke and David Hume, among others. To complicate Rawls’s philosophy even further, there was a bit of deontology exhibited in it, too, through Rawls’s sentiment that political freedoms and material possessions be distributed as fully and widely as possible precisely because it is the right thing to do.

Rawls is a uniquely American political philosopher, and this can be seen from his emphasis on political liberty. But this statement also speaks to his commitment to the utilitarianism of John Stuart Mill, the second-generation leader of that movement. Hence Rawls’s assertion that he actually was a utilitarian at heart.

Whatever the influences on his thought, Rawls was the most significant political philosopher ever to emerge from the United States, and probably one of the most influential ethicists in the West over the past several centuries. He called his ethics “justice as fairness,” and he developed it over nearly a lifetime. It was laid out formally in 1971 with the publication of his A Theory of Justice, a treatise of more than 550 pages. Still, preliminary drafts of what became this book were circulating within philosophical circles beginning in the late 1950s.

To be fair, Rawls insisted, human justice must be centered on a firm foundation comprising a first and second principle. The first principle declared that “each person is to have an equal right to the most extensive basic liberty compatible with a similar liberty for others.” These liberties included traditional ones such as freedom of thought and speech, the vote, a fair trial when accused of a crime, and the ownership of some personal property not subject to the state’s seizure. Very few commentators have criticized this principle.

It is the second principle, however, which has incurred the loudest objections. It consisted of two sub-points: first, socio-economic inequality is permissible only to the degree that it brings the greatest benefit to the least-advantaged members of society. (Rawls labeled this the difference principle.) And, second, authority and offices are to be available to everyone competent to hold them. (Rawls called this fair equality of opportunity.) Additionally, the training to ensure that all may merit these offices absolutely must be available to all.

What Rawls actually advocated was an at-least minimal distribution of material goods and services to everyone, regardless of what inheritance he or she might come by or what work he or she might engage in. And this tenet has incurred a firestorm of controversy. Many have embraced what they term Rawls’s egalitarian perspective on the ownership of property. Yet others have argued that he ignored the unlimited right to ownership of personal property specifically predicated on hard work and/or bequests from family. On the other hand, pure Marxists have dismissed this principle as not going far enough to ensure that sizable estates, as well as the means of production, be extracted from the clutches of plutocrats.

How might society move toward justice as fairness? Rawls proposed a thought exercise: If we all could imagine ourselves, before birth, to be in what he calls the Original Position, knowing only that we would be born but without knowledge of what sex, race, wealth, ethnicity, intelligence, health, or family structures we would be assigned, then we necessarily would ensure that these two principles would be observed. We would do so because we would have absolutely no way of predicting the real-life circumstances which we would inherit post-birth and wouldn’t want to risk being born into an impoverished or tyrannical environment.

We would be blind as to the world that each of us would inhabit because we would be cloaked by a “veil of ignorance” that would screen us from pre-knowledge of our circumstances once we were born. In other words, viewed from the original position, we wouldn’t take the chance of suffering from political oppression or material poverty. Self-interest, then, would motivate us to insist that these minimum levels of political and material largesse be the birthright of all.
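The reasoning from behind the veil of ignorance can be illustrated with a small sketch. It assumes, purely for illustration, that each possible society can be written as a list of welfare levels for the positions one might be born into, and that a cautious chooser compares societies by their worst position, a rule often called maximin. The societies, the numbers, and the function names are hypothetical.

```python
def worst_position(society: list[float]) -> float:
    """Welfare of the least-advantaged position in a society."""
    return min(society)


def choose_behind_veil(societies: dict[str, list[float]]) -> str:
    """Pick the society whose worst-off position is best (maximin).

    The chooser does not know which position he or she will occupy,
    so only the worst case is compared.
    """
    return max(societies, key=lambda name: worst_position(societies[name]))


def choose_by_total(societies: dict[str, list[float]]) -> str:
    """For contrast: pick the society with the largest total welfare."""
    return max(societies, key=lambda name: sum(societies[name]))


# Hypothetical welfare levels, one number per position in each society.
societies = {
    "unequal": [100, 40, 2],             # large total, very poor worst-off
    "guaranteed_minimum": [60, 35, 20],  # smaller total, protected floor
}

print(choose_behind_veil(societies))
print(choose_by_total(societies))
```

With these invented numbers the two rules disagree: comparing totals favors the unequal society, while comparing worst positions favors the one with a guaranteed minimum.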

Of course, we can’t return to our pre-birth stage and so negotiate this sort of arrangement beforehand. Hence, the only way of creating this sort of world now would be to imagine that we were in the original position and deliberately build such a fair environment for all.

Given human nature and its inherent selfishness, is it reasonable to expect human beings to make a concerted effort to create the structures needed for justice as fairness? Perhaps not, but realize that Rawls was only following in the footsteps of Plato in his proposal to craft a perfect polis, or city-state, in The Republic. Therein Plato took all of the beauty and wisdom of the Athens of his day and imagined it without any of its limitations. Plato knew that this was an ideal, but he also realized that even an attempt to build such a city-state would produce what he regarded as incalculable good.

Footnotes

- 1 Aristotle’s family originated from a region in northern Greece that was adjacent to classical Macedonia, and his father, Nicomachus, had similarly tutored Alexander’s father, Philip II of Macedonia.

- 2 A limitation within Mill’s no-harm principle was its focus solely on physical harm without acknowledgement of the reality of psychological damage. He made no allowance for what the law today denotes as pain and suffering. In his defense, this concept is a twentieth-century one and had little credibility among Mill’s contemporaries.