2.3 Behaviourist Psychology

Jennifer Walinga

Learning Objectives

- Understand the principles of behaviourist psychology and how these differ from psychodynamic principles in terms of theory and application.

- Distinguish between classical and operant conditioning.

- Become familiar with key behaviourist theorists and approaches.

- Identify applications of the behaviourist models in modern life.

Emerging in contrast to psychodynamic psychology, behaviourism focuses on observable behaviour as a means of studying the human psyche. The primary tenet of behaviourism is that psychology should concern itself with the observable behaviour of people and animals, not with unobservable events that take place in their minds. The behaviourists criticized the mentalists, who focused on inner mental states, for failing to provide empirical evidence to support their claims. The behaviourist school of thought maintains that behaviours can be described scientifically without recourse either to internal physiological events or to hypothetical constructs such as thoughts and beliefs, making behaviour a more productive area of focus for understanding human or animal psychology.

The main influences of behaviourist psychology were Ivan Pavlov (1849-1936), who investigated classical conditioning, although he often disagreed with behaviourism and behaviourists; Edward Lee Thorndike (1874-1949), who introduced the concept of reinforcement and was the first to apply psychological principles to learning; John B. Watson (1878-1958), who rejected introspective methods and sought to restrict psychology to experimental methods; and B.F. Skinner (1904-1990), who conducted research on operant conditioning.

The first of these, Ivan Pavlov, is known for his work on one important type of learning, classical conditioning. As we learn, we alter the way we perceive our environment, the way we interpret incoming stimuli, and therefore the way we interact, or behave. Pavlov, a Russian physiologist, actually discovered classical conditioning by accident while doing research on digestion in dogs. During his experiments, he would put meat powder in the mouth of a dog that had tubes inserted into various organs to measure bodily responses. Pavlov discovered that the dog began to salivate before the meat powder was presented to it. Soon the dog began to salivate as soon as the person feeding it entered the room. Pavlov quickly became interested in this phenomenon and abandoned his digestion research in favour of his now famous classical conditioning study.

Pavlov’s findings support the idea that we can learn to respond to stimuli that do not naturally produce that response. When we touch a hot stove, our reflex pulls our hand back. We do this instinctively, with no learning involved; the reflex is simply a survival instinct. Pavlov discovered that we form associations that cause us to generalize our response to one stimulus onto a neutral stimulus it is paired with. In other words, hot burner = ouch; stove = burner; therefore, stove = ouch.

In his research with the dogs, Pavlov began pairing the sound of a bell with the meat powder and found that, eventually, a dog would salivate after hearing the bell even when no meat powder was presented. Because meat powder naturally produces salivation, the meat powder is called the unconditioned stimulus (UCS) and the salivation it produces is called the unconditioned response (UCR). The bell, by contrast, does not naturally produce salivation; the dog is conditioned to respond to it. Therefore, the bell is considered the conditioned stimulus (CS), and the salivation to the bell, the conditioned response (CR).
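To make the acquisition process concrete, the following Python sketch tracks how strongly the bell (CS) becomes associated with the meat powder (UCS) over repeated pairings. It is a toy model under stated assumptions, not Pavlov’s own analysis: each pairing closes a fixed fraction of the gap between the current association and its maximum (essentially the single-stimulus form of the later Rescorla–Wagner rule), and the learning rate of 0.3 and the salivation threshold of 0.5 are hypothetical values chosen only for illustration.

```python
def acquisition(pairings: int, learning_rate: float = 0.3, max_strength: float = 1.0) -> list[float]:
    """Associative strength of the bell (CS) after each bell + meat powder (UCS) pairing.

    Toy rule with hypothetical parameters: each pairing closes a fixed fraction
    of the gap between the current strength and the maximum the UCS can support.
    """
    strength = 0.0  # before training, the bell is a neutral stimulus
    history = []
    for _ in range(pairings):
        strength += learning_rate * (max_strength - strength)
        history.append(strength)
    return history


def bell_alone_triggers_salivation(strength: float, threshold: float = 0.5) -> bool:
    """Hypothetical cut-off: at or above it, the bell by itself elicits the CR."""
    return strength >= threshold


strengths = acquisition(5)
for trial, s in enumerate(strengths, start=1):
    print(trial, round(s, 2), bell_alone_triggers_salivation(s))
```

With these made-up parameters, the bell alone first crosses the threshold after the second pairing, and each later pairing adds less than the one before, so the association levels off rather than growing without limit.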

Many of our behaviours today are shaped by the pairing of stimuli. The smell of a cologne, the sound of a certain song, or the occurrence of a specific day of the year can trigger distinct memories, emotions, and associations. When we make these types of associations, we are experiencing classical conditioning.

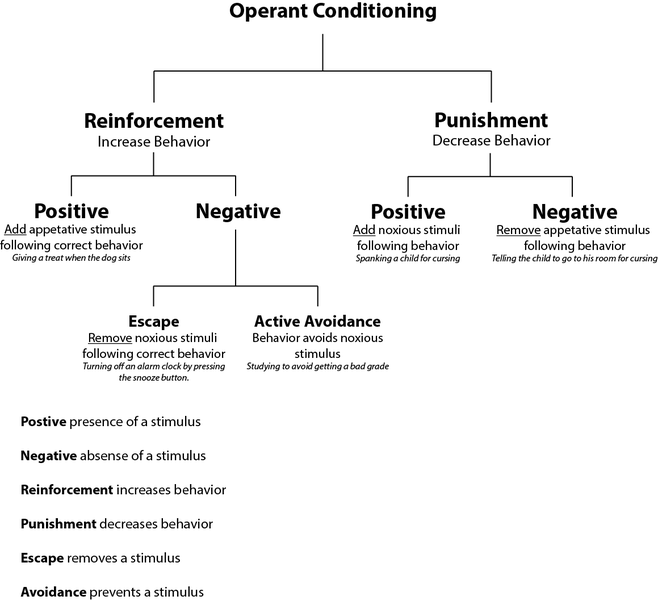

Operant conditioning is another type of learning that refers to how an organism operates on the environment or how it responds to what is presented to it in the environment (Figure 2.12).

The main types of operant conditioning are described below.

Reinforcement means to strengthen, and is used in psychology to refer to any stimulus that strengthens or increases the probability of a specific response. For example, if you want your dog to sit on command, you may give him a treat every time he sits for you. The dog will eventually come to understand that sitting when told to will result in a treat. The treat reinforces the behaviour because the dog likes it, and it makes him more likely to sit when instructed to do so. Operant conditioning involves four types of consequences: positive reinforcement, negative reinforcement, punishment, and extinction. The first two increase a behaviour; the last two decrease it.

- Positive reinforcement involves adding something in order to increase a response. For example, adding a treat will increase the response of sitting; adding praise will increase the chances of your child cleaning his or her room. The most common types of positive reinforcement are praise and reward, and most of us have experienced this as both the giver and receiver.

- Negative reinforcement involves taking something negative away in order to increase a response. Imagine a teenager who is nagged by his parents to take out the garbage week after week. After complaining to his friends about the nagging, he finally one day performs the task and, to his amazement, the nagging stops. The elimination of this negative stimulus is reinforcing and will likely increase the chances that he will take out the garbage next week.

- Punishment refers to adding something aversive in order to decrease a behaviour. The most common example of this is disciplining (e.g., spanking) a child for misbehaving. The child begins to associate being punished with the negative behaviour. The child does not like the punishment and, therefore, to avoid it, he or she will stop behaving in that manner.

- Extinction involves removing something in order to decrease a behaviour. For example, if a child’s tantrums have been reinforced by parental attention, withdrawing that attention will gradually reduce the tantrums.
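The four consequences above differ along two dimensions: whether something is added or removed after the behaviour, and whether the behaviour then increases or decreases. The minimal Python sketch below encodes that 2 × 2 grid exactly as this section labels it; the enum and function names are illustrative only and do not come from any library. Note that many textbooks call the remove/decrease cell negative punishment and reserve extinction for the weakening of a behaviour once its usual reinforcer is no longer delivered.

```python
from enum import Enum


class Change(Enum):
    ADD = "add"        # a stimulus is presented after the behaviour
    REMOVE = "remove"  # a stimulus is taken away after the behaviour


class Effect(Enum):
    INCREASE = "increase"  # the behaviour becomes more likely
    DECREASE = "decrease"  # the behaviour becomes less likely


# The four consequence types as this section defines them.
CONSEQUENCES = {
    (Change.ADD, Effect.INCREASE): "positive reinforcement",
    (Change.REMOVE, Effect.INCREASE): "negative reinforcement",
    (Change.ADD, Effect.DECREASE): "punishment",
    (Change.REMOVE, Effect.DECREASE): "extinction",
}


def classify(change: Change, effect: Effect) -> str:
    """Look up the consequence type for one cell of the 2 x 2 grid."""
    return CONSEQUENCES[(change, effect)]


# Dog sits and gets a treat: something is added, and sitting increases.
print(classify(Change.ADD, Effect.INCREASE))     # positive reinforcement
# Teenager takes out the garbage and the nagging stops: something is removed, and the chore increases.
print(classify(Change.REMOVE, Effect.INCREASE))  # negative reinforcement
```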

Research has found that positive reinforcement is the most powerful of these types of operant conditioning. Adding a positive to increase a response not only works better but also allows both parties to focus on the positive aspects of the situation. Punishment, when applied immediately following the negative behaviour, can be effective, but its effect tends to fade when it is not applied consistently. Punishment can also provoke other negative responses such as anger and resentment.

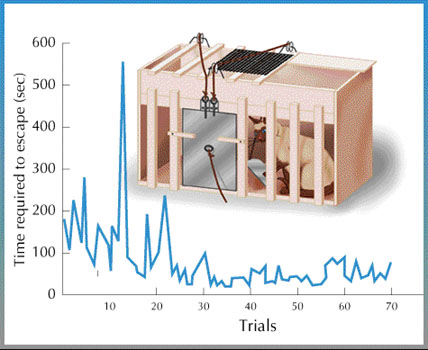

Thorndike’s (1898) work with cats and puzzle boxes illustrates the concept of conditioning. The puzzle boxes were approximately 50 cm long, 38 cm wide, and 30 cm tall (Figure 2.13). They were built so that a cat placed inside could escape only by pressing a bar or pulling a lever, which caused the string attached to the door to lift the weight and open the door. Thorndike measured the time it took the cat to perform the required response (e.g., pulling the lever). Once the cat had performed the response and escaped, it received a reward, usually food.

Thorndike found that once a cat had accidentally stepped on the switch, it would press the switch faster in each succeeding trial inside the puzzle box. By observing and recording how long it took a variety of animals to escape over several trials, Thorndike was able to graph an S-shaped learning curve. He observed that most animals had difficulty escaping at first, then escaped faster and faster with each successive trial, and eventually levelled off in their escape times. The learning curve also suggested that different species learned in the same way but at different speeds. He found, for instance, that cats consistently showed gradual learning.

From his research with puzzle boxes, Thorndike was able to create his own theory of learning (1932):

- Learning is incremental.

- Learning occurs automatically.

- All animals learn the same way.

- Law of effect. If an association is followed by satisfaction, it will be strengthened, and if it is followed by annoyance, it will be weakened.

- Law of use. The more often an association is used, the stronger it becomes.

- Law of disuse. The longer an association is unused, the weaker it becomes.

- Law of recency. The most recent response is most likely to recur.

- Multiple response. An animal will try multiple responses (trial and error) if the first response does not lead to a specific state of affairs.

- Set or attitude. Animals are predisposed to act in a specific way.

- Prepotency of elements. A subject can filter out irrelevant aspects of a problem and focus on and respond to significant elements of a problem.

- Response by analogy. Responses from a related or similar context may be used in a new context.

- Identical elements theory of transfer. The more similar the situations are, the greater the amount of information that will transfer. Similarly, if the situations have nothing in common, information learned in one situation will not be of any value in the other situation.

- Associative shifting. It is possible to shift any response from occurring with one stimulus to occurring with another stimulus. Associative shift maintains that a response is first made to situation A, then to AB, and then finally to B, thus shifting a response from one condition to another by associating it with that condition.

- Law of readiness. A quality in responses and connections that results in readiness to act. Behaviour and learning are influenced by the readiness or unreadiness of responses, as well as by their strength.

- Identifiability. Identification or placement of a situation is a first response of the nervous system, which can recognize it. Then connections may be made to one another or to another response, and these connections depend on the original identification. Therefore, a large amount of learning is made up of changes in the identifiability of situations.

- Availability. The ease of getting a specific response. For example, it would be easier for a person to learn to touch his or her nose or mouth with closed eyes than it would be to draw a line five inches long with closed eyes.
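Several of these principles (multiple response, the law of effect, and incremental learning) can be seen working together in a small simulation of a cat in a puzzle box. The Python sketch below is a hypothetical toy, not a reconstruction of Thorndike’s procedure or data: the list of candidate responses, the reward boost, and the decay factor are all assumptions. Rather than sampling random choices, it computes the expected number of attempts before the cat pulls the lever, assuming responses are chosen at random in proportion to their strength. After each escape, the lever response is strengthened (satisfaction) and every other response is weakened (annoyance).

```python
from dataclasses import dataclass, field


@dataclass
class PuzzleBoxCat:
    """Toy trial-and-error learner in a puzzle box; all values are hypothetical."""

    # Strength of each candidate response; only "pull_lever" opens the door.
    strengths: dict[str, float] = field(
        default_factory=lambda: {
            "pull_lever": 1.0,
            "scratch_bars": 1.0,
            "push_door": 1.0,
            "meow": 1.0,
            "reach_through_bars": 1.0,
        }
    )
    correct: str = "pull_lever"
    reward_boost: float = 1.0     # strengthening after "satisfaction" (escape + food)
    annoyance_decay: float = 0.8  # weakening of responses that did not open the door

    def expected_attempts(self) -> float:
        # If responses are chosen at random in proportion to their strength,
        # the number of tries until the lever is pulled is geometric with
        # mean total_strength / lever_strength.
        total = sum(self.strengths.values())
        return total / self.strengths[self.correct]

    def run_trial(self) -> float:
        """Return the expected attempts for this trial, then apply the law of effect."""
        attempts = self.expected_attempts()
        for response in self.strengths:
            if response == self.correct:
                self.strengths[response] += self.reward_boost
            else:
                self.strengths[response] *= self.annoyance_decay
        return attempts


cat = PuzzleBoxCat()
curve = [cat.run_trial() for _ in range(10)]
print([round(attempts, 2) for attempts in curve])
```

In this toy, expected attempts fall from 5.0 on the first trial and then level off just above 1, echoing the pattern of slow early escapes that speed up and then stabilize. It only captures the decreasing, levelling-off part of the curve, not its S-shape.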

John B. Watson promoted a change in psychology through his address, Psychology as the Behaviorist Views It (1913), delivered at Columbia University. Through his behaviourist approach, Watson conducted research on animal behaviour, child rearing, and advertising while gaining notoriety for the controversial “Little Albert” experiment. Immortalized in introductory psychology textbooks, this experiment set out to show how the recently discovered principles of classical conditioning could be applied to condition a fear of white rats in Little Albert, an 11-month-old boy. Watson and Rayner (1920) first presented the boy with a white rat and observed that he was not afraid. Next they presented him with a white rat and then clanged an iron rod. Little Albert responded by crying. This second presentation was repeated several times. Finally, Watson and Rayner presented the white rat by itself, and the boy showed fear. Later, to see whether the fear transferred to other objects, Watson presented Little Albert with a rabbit, a dog, and a fur coat. He cried at the sight of all of them. This study demonstrated how emotions could become conditioned responses.

Burrhus Frederic Skinner called his particular brand of behaviourism radical behaviourism (1974). Radical behaviourism is the philosophy of the science of behaviour. It seeks to understand behaviour as a function of environmental histories of reinforcing consequences. It does not accept private events such as thinking, perceptions, and unobservable emotions as part of a causal account of an organism’s behaviour.

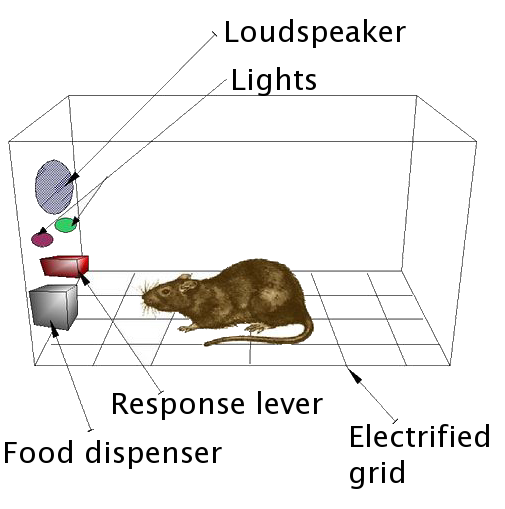

While a researcher at Harvard, Skinner invented the operant conditioning chamber, popularly referred to as the Skinner box (Figure 2.14), used to measure responses of organisms (most often rats and pigeons) and their orderly interactions with the environment. The box had a lever and a food tray, and a hungry rat inside the box could get food delivered to the tray by pressing the lever. Skinner observed that when a rat was first put into the box, it would wander around, sniffing and exploring, and would usually press the bar by accident, at which point a food pellet would drop into the tray. After that happened, the rate of bar pressing would increase dramatically and remain high until the rat was no longer hungry.

Skinner also demonstrated negative reinforcement by placing rats in an electrified chamber that delivered unpleasant shocks. Levers that cut the power were placed inside these boxes. When a current ran through the box, the rats would press the lever by accident in a frantic bid to escape; they quickly learned what the lever did and then used it both to stop a shock that was already under way and to prevent a shock before it began. These two learned responses are known as escape learning and avoidance learning, respectively (Skinner, 1938). The operant chamber for pigeons involved a plastic disk that the pigeon pecked in order to open a drawer filled with grain. The Skinner box led to the principle of reinforcement, which holds that the probability of a behaviour occurring depends on the consequences that have followed it.
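The difference between escape and avoidance learning comes down to timing: a lever press during a shock ends it, while a press before the shock begins prevents it. The short Python sketch below labels a single press against one scheduled shock. The single-shock timing model and the function name are hypothetical simplifications, not details of Skinner’s apparatus.

```python
def classify_lever_press(press_time: float, shock_start: float, shock_end: float) -> str:
    """Label one lever press relative to one scheduled shock (times in seconds).

    Hypothetical timing model: pressing before the shock begins prevents it
    (avoidance learning); pressing while it is on stops it (escape learning).
    """
    if press_time < shock_start:
        return "avoidance"
    if press_time <= shock_end:
        return "escape"
    # The shock was already over, so the press neither stopped nor prevented it.
    return "unrelated"


print(classify_lever_press(6.0, shock_start=5.0, shock_end=8.0))  # escape
print(classify_lever_press(2.0, shock_start=5.0, shock_end=8.0))  # avoidance
```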

Research Focus

Gamification, which adds game mechanisms such as prompts, badges, rewards, leader-boards, and competition between players to ordinary activities like jogging or car sharing, is a growing approach to behaviour modification today. Health care has seen some early, innovative uses of gamification, from a Sony PS3 Move motion controller used to help children diagnosed with cancer to the launch of Games for Health, the first peer-reviewed journal dedicated to the research and design of health games and behavioural health strategies.

When used in social marketing and online health-promotion campaigns, gamification can be used to encourage a new, healthy behaviour such as regular exercise, improved diet, or completing actions required for treatment. Typically, gamification is web-based, usually with a mobile app or as a micro-site. Behavioural change campaigns require an understanding of human psychology, specifically the benefits and barriers associated with a behaviour. There have been several campaigns using gamification techniques that have had remarkable results. For example, organizations that wanted employees to exercise regularly have installed gyms in their offices and created a custom application that rewards employees for “checking in” to the gyms. Employees can form regionally based teams, check in to workouts, and chart their team’s progress on a leader-board. This has a powerful effect on creating and sustaining a positive behavioural change.
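The check-in and leader-board mechanics described above can be sketched in a few lines. In the hypothetical Python example below, every logged workout earns a fixed number of points for the employee’s team (the reward), and teams are ranked by total points (the leader-board). The data layout and the 10-point value are assumptions for illustration, not details of any real workplace application.

```python
from collections import defaultdict

POINTS_PER_CHECK_IN = 10  # hypothetical reward for each logged workout


def build_leaderboard(check_ins: list[tuple[str, str]]) -> list[tuple[str, int]]:
    """Rank teams by points earned from gym check-ins.

    check_ins: (employee, team) pairs, one per logged workout (hypothetical schema).
    """
    points = defaultdict(int)
    for _employee, team in check_ins:
        points[team] += POINTS_PER_CHECK_IN
    # Highest score first; ties are broken alphabetically so the ranking is stable.
    return sorted(points.items(), key=lambda item: (-item[1], item[0]))


log = [("ana", "West"), ("raj", "East"), ("li", "West"), ("sam", "North")]
print(build_leaderboard(log))  # [('West', 20), ('East', 10), ('North', 10)]
```

Each check-in is followed immediately by visible points, which is the positive-reinforcement logic this section describes; the alphabetical tie-break is simply a design choice that keeps the displayed ranking stable.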

Similar game mechanics have been used in sustainability campaigns aimed at increasing household environmental compliance. Such sites use game mechanics such as points, challenges, and rewards to increase daily “green” habits like recycling and conserving water. Other behavioural change campaigns that have applied social gaming include using cameras to record speeding cars in order to reduce the incidence of speeding, and offering products that allow users to track their healthy behaviours through the day, including miles travelled, calories burned, and stairs climbed.

Key Takeaways

- Behaviourism holds that psychology should concern itself with the observable behaviour of people and animals, not with unobservable events that take place in their minds.

- The main influences of behaviourist psychology were Ivan Pavlov (1849-1936), Edward Lee Thorndike (1874-1949), John B. Watson (1878-1958), and B.F. Skinner (1904-1990).

- Classical conditioning is the process by which we learn to respond to stimuli that do not naturally produce that response.

- Operant conditioning refers to how an organism operates on the environment or how it responds to what is presented to it in the environment.

- Reinforcement means to strengthen, and is used in psychology to refer to any stimulus that strengthens or increases the probability of a specific response.

- Operant conditioning involves four types of consequences: positive reinforcement, negative reinforcement, punishment, and extinction.

- Behaviourist researchers used experimental methods (puzzle box, operant conditioning or Skinner box, Little Albert experiment) to investigate learning processes.

- Today, behaviourism is still prominent in applications such as gamification.

Exercises and Critical Thinking

- Reflect on your educational experience and try to determine what aspects of behaviourism were employed.

- Research Skinner’s other inventions, such as the “teaching machine” or the “air crib,” and discuss with a group the underlying principles, beliefs, and values governing such “machines.” Do you disagree or agree with their use?

- What might be some other applications for gamification behavioural change strategies? Design a campaign or strategy for changing a behaviour of your choice (e.g., health, work, addiction, or sustainable practice).

Image Attributions

Figure 2.12: Operant conditioning diagram by studentne (http://commons.wikimedia.org/wiki/File:Operant_conditioning_diagram.png) used under CC BY SA 3.0 license (http://creativecommons.org/licenses/by-sa/3.0/deed.en).

Figure 2.13: Thorndike’s Puzzle Box by Jacob Sussman (http://commons.wikimedia.org/wiki/File:Puzzle_box.jpg) is in the public domain.

Figure 2.14: Skinner box scheme 01 by Andreas1 (http://commons.wikimedia.org/wiki/File:Skinner_box_scheme_01.png) used under CC BY SA 3.0 license (http://creativecommons.org/licenses/by-sa/3.0/deed.en).

References

Skinner, B. F. (1938). The behavior of organisms: An experimental analysis. Oxford, England: Appleton-Century.

Skinner, B. F. (1974). About behaviorism. New York, NY: Random House.

Thorndike, E. L. (1898). Animal intelligence. Princeton, NJ: MacMillan.

Thorndike, E. L. (1932). The fundamentals of learning. New York, NY: AMS Press Inc.

Watson, J. B. (1913). Psychology as the behaviorist views it. Psychological Review, 20, 158-177.

Watson, J. B., & Rayner, R. (1920). Conditioned emotional reactions. Journal of Experimental Psychology, 3, 1-14.