Conversational AI Theory

2 Natural Language Processing

Developing a computer application that can accurately parse and interpret how humans communicate has been a decades long struggle. Natural language is defined as a language that has developed and evolved naturally, through use by human beings, as opposed to an invented or constructed language, as a computer programming language. The English language has around 600,000 words, within these words are complex grammatical constructs, different meanings and interpretations. This makes trying to develop a rule-based system that is capable of interpreting and speaking natural language next to impossible.

History of Natural Language Processing

Natural language processing is the technique that is used to decode, interpret and understand natural language. There have been essentially three generations of Virtual Language processing strategies that conversation all systems. These generations are Symbolic, Statistical and Neural.

Symbolic Natural Language Processing was first introduced in the 1950s. Symbolic NLP functions by having a list of rules that the computer can use to evaluate and process language data. Symbolic systems were often used to solve language translation, as well as preform entity substitution into user utterances. For example a user might say “My hand hurts.” The chat bot could then reply with something like “Why does your hand hurt?” Unfortunately these types of systems lacked any contextual awareness.

Statistical NLP By the 1990s the majority of conversational systems had moved to using statistical analysis of natural language. This type of processing required increased computing resources. The shift from Symbolic NLP to statistical represented a shift from fixed rules to probabilistic AI processes. These solutions relied on what is known as text corpora which is a sample of data (actual language) that contains meaningful context. This data can then be used to preform statistical analysis, hypothesis testing or validating linguistic rules.

A corpus is a representative sample of actual language production within a meaningful context and with a general purpose. This can be thought of as training data for machine learning system.

Source: https://odsc.medium.com/20-open-datasets-for-natural-language-processing-538fbfaf8e38

General |

|

| Enron Dataset: | Over half a million anonymized emails from over 100 users. It’s one of the few publicly available collections of “real” emails available for study and training sets. |

| e Blogger Corpus: | Nearly 700,000 blog posts from blogger.com. The meat of the blogs contain commonly occurring English words, at least 200 of them in each entry. |

| SMS Spam Collection : | Excellent dataset focused on spam. Nearly 6000 messages tagged as legitimate or spam messages with a useful subset extracted directly from Grumbletext. |

| Recommender Systems Datasets : | Datasets from a variety of sources, including fitness tracking, video games, song data, and social media. Labels include star ratings, time stamps, social networks, and images. |

| Project Gutenberg : | Extensive collection of book texts. These are public domain and available in a variety of languages, spanning a long period of time.\ |

| Sentiment 140 : | 160,000 tweets scrubbed of emoticons. They’re arranged in six fields — polarity, tweet date, user, text, query, and ID. |

| MultiDomain Sentiment Analysis Dataset : | Includes a wide range of Amazon reviews. Dataset can be converted to binary labels based on star review, and some product categories have thousands of entries. |

| Yelp Reviews : | Restaurant rankings and reviews. It includes a variety of aspects including reviews for sentiment analysis plus a challenge with cash prizes for those working with Yelp’s datasets. |

| Dictionaries for Movies and Finance : | Specific dictionaries for sentiment analysis using a specific field for testing data. Entries are clean and arranged in positive or negative connotations. |

| OpinRank Dataset : | 300,000 reviews from Edmunds and TripAdvisor. They’re neatly arranged by car model or by travel destination and relevant to the hotel. |

Text |

|

| 20 Newsgroups : | 20,000 documents from over 20 different newsgroups. The content covers a variety of topics with some closely related for reference. There are three versions, one in its original form, one with dates removed, and one with duplicates removed. |

| The WikiQA Corpus : | Contains question and sentence pairs. It’s robust and compiled from Bing query logs. There are over 3000 questions and over 29,000 answer sentences with just under 1500 labeled as answer sentences. |

| European Parliament Proceedings Parallel Corpus : | Sentence pairs from Parliament proceedings. There are entries from 21 European languages including some less common entries for ML corpus. |

| Jeopardy : | Over 200,000 questions from the famed tv show. It includes category and value designations as well as other descriptors like question and answer fields and rounds. |

| Legal Case Reports Dataset : | Text summaries of legal cases. It contains wrapups of over 4000 legal cases and could be great for training for automatic text summarization. |

Speech |

|

| LibriSpeech : | Nearly 1000 hours of speech in English taken from audiobook clips. |

| Spoken Wikipedia Corpora : | Spoken articles from Wikipedia in three languages, English, German, and Dutch. It includes a diverse speaker set and range of topics. There are hundreds of hours available for training sets. |

| LJ Speech Dataset : | 13,100 clips of short passages from audiobooks. They vary in length but contain a single speaker and include a transcription of the audio, which has been verified by a human reader. |

| M-AI Labs Speech Dataset : | Nearly 1000 hours of audio plus transcriptions. It includes multiple languages arranged by male voices, female voices, and a mix of the two. |

Neural Natural Language processing utilize deep neural network machine learning that results in enhanced language modeling and parsing. The majority of natural language processing solutions developed in the last 10 years generally use Neural NLP. The use of Neural networks greatly improves the ability of the capability of a NLP system to model, learn and reason. While also greatly reducing the amount of human perpetration of these systems.

Key Natural Language concepts

NLP Modeling – The modeling of natural language can refer to a number of different aspects such as encoding and decoding a sentence, creating sequence of labels. Using modern neural network in NLP this type of modeling allows for the breakdown of language into millions of trainable nodes that can preform syntactical semantic and sentiment analysis, language translation, topic extraction, classification and next sentence prediction.

NLP Reasoning – Also known as common-sense reasoning is ability to allow computer to better interact and understand human interaction by collecting assumptions and extracting meaning behind the text provided but refining the assumptions throughout the duration of the conversation. In many ways common sense is the application of pragmatics in a conversational. Being situational aware of the context of the conversation is extremely important to interpreting meaning. This area of NLP, focusing on trying to reduce the “absurd” mistakes that NLP systems can make when they “jump to the wrong conclusion” due to a lack of commonsense.

Natural Language Generation – This is the process by which the the machine learning algorithm creates natural language in such a way that is indistinguishable from a human response. This relies on constructing proper sentences that are suitable for the target demographics of users, without sounding to rigid or robotic. The key is to properly format and present the required response back to the user there are many ways to achive this one of the most common was is using statistical responses based from a large corpus of human text.

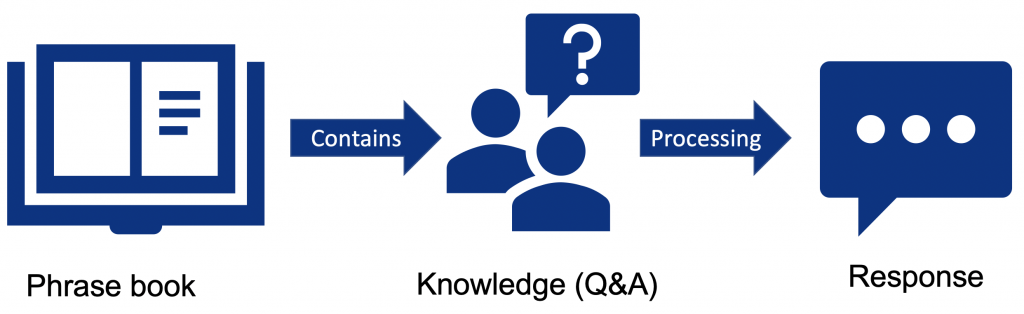

Overview of a modern Conversational AI system.

Natural Language Processing is an important part of a conversational AI system. It is this processing that is used to review the user’s input and prepare a reply back. Often conversational systems use voice interactions. In these cases the NLP is surrounded by a speech-to-text and text-to-speech process that is used to interact directly using voice. These voice replies utilize a synthetic voice engine which is modeled after the human voice. The goal of the synthesized voice is to provide a clear and easy to understand voice along while also attempting to mimic human speech inflections.

|

|

|

| Speech to Text Used to decode sound waves translate this into a textual representation of exactly what has been said. |

Natural Language Processing Evaluates the provided input, determines the correct response and formats the reply into natural language. |

Text to Speech The computer will formulate a response and translate a written text string into and auditory signal.. |

Natural Language is a language that has developed and evolved naturally, through use by human beings, as opposed to an invented or constructed language, as a computer programming language.