58 Some Basic Null Hypothesis Tests

Learning Objectives

- Conduct and interpret one-sample, dependent-samples, and independent-samples t-tests.

- Interpret the results of one-way, repeated measures, and factorial ANOVAs.

- Conduct and interpret null hypothesis tests of Pearson’s r.

In this section, we look at several common null hypothesis testing procedures. The emphasis here is on providing enough information to allow you to conduct and interpret the most basic versions. In most cases, the online statistical analysis tools mentioned in Chapter 12 will handle the computations—as will programs such as Microsoft Excel and SPSS.

The t-Test

As we have seen throughout this book, many studies in psychology focus on the difference between two means. The most common null hypothesis test for this type of statistical relationship is the t-test. In this section, we look at three types of t-tests that are used for slightly different research designs: the one-sample t-test, the dependent-samples t-test, and the independent-samples t-test. You may have already taken a course in statistics, but we will refresh your statistical knowledge here.

One-Sample t-Test

The one-sample t-test is used to compare a sample mean (M) with a hypothetical population mean (μ0) that provides some interesting standard of comparison. The null hypothesis is that the mean for the population (µ) is equal to the hypothetical population mean: μ = μ0. The alternative hypothesis is that the mean for the population is different from the hypothetical population mean: μ ≠ μ0. To decide between these two hypotheses, we need to find the probability of obtaining the sample mean (or one more extreme) if the null hypothesis were true. But finding this p value requires first computing a test statistic called t. (A test statistic is a statistic that is computed only to help find the p value.) The formula for t is as follows:

t = (M − µ0) / (SD / √N)

Again, M is the sample mean and µ0 is the hypothetical population mean of interest. SD is the sample standard deviation and N is the sample size.

The reason the t statistic (or any test statistic) is useful is that we know how it is distributed when the null hypothesis is true. As shown in Figure 13.1, this distribution is unimodal and symmetrical, and it has a mean of 0. Its precise shape depends on a statistical concept called the degrees of freedom, which for a one-sample t-test is N − 1. (There are 24 degrees of freedom for the distribution shown in Figure 13.1.) The important point is that knowing this distribution makes it possible to find the p value for any t score. Consider, for example, a t score of 1.50 based on a sample of 25. The probability of a t score at least this extreme is given by the proportion of t scores in the distribution that are at least this extreme. For now, let us define extreme as being far from zero in either direction. Thus the p value is the proportion of t scores that are 1.50 or above or that are −1.50 or below—a value that turns out to be .14.
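The proportion described above can be approximated numerically. The following Python sketch is only an illustration, not the method used by the tools mentioned in this chapter. It integrates the standard density of the t distribution with Simpson's rule, and the function names `t_pdf` and `two_tailed_p` are invented for this example.

```python
import math

def t_pdf(x, df):
    """Density of the t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t, df, steps=2000):
    """Proportion of t scores at least as far from zero as t.

    Uses Simpson's rule on the interval from 0 to |t|; steps must be even.
    """
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = 2 * (h / 3) * total  # area between -|t| and +|t|
    return 1 - central_area

# t score of 1.50 from a sample of 25, so df = 25 - 1 = 24
p = two_tailed_p(1.50, 24)
print(round(p, 3))
```

The printed value should land close to the p value quoted above. Because the distribution is symmetrical, a one-tailed p value is simply half of the two-tailed one.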

Fortunately, we do not have to deal directly with the distribution of t scores. If we were to enter our sample data and hypothetical mean of interest into one of the online statistical tools in Chapter 12 or into a program like SPSS (Excel does not have a one-sample t-test function), the output would include both the t score and the p value. At this point, the rest of the procedure is simple. If p is equal to or less than .05, we reject the null hypothesis and conclude that the population mean differs from the hypothetical mean of interest. If p is greater than .05, we retain the null hypothesis. Technically, retaining it means only that we do not have enough evidence to conclude that the population mean differs from the hypothetical mean of interest.

If we were to compute the t score by hand, we could use a table like Table 13.2 to make the decision. This table does not provide actual p values. Instead, it provides the critical values of t for different degrees of freedom (df) when α is .05. For now, let us focus on the two-tailed critical values in the last column of the table. Each of these values should be interpreted as a pair of values: one positive and one negative. For example, the two-tailed critical values when there are 24 degrees of freedom are 2.064 and −2.064. These are represented by the red vertical lines in Figure 13.1. The idea is that any t score below the lower critical value (the left-hand red line in Figure 13.1) is in the lowest 2.5% of the distribution, while any t score above the upper critical value (the right-hand red line) is in the highest 2.5% of the distribution. Therefore any t score beyond the critical value in either direction is in the most extreme 5% of t scores when the null hypothesis is true and has a p value less than .05. Thus if the t score we compute is beyond the critical value in either direction, then we reject the null hypothesis. If the t score we compute is between the upper and lower critical values, then we retain the null hypothesis.

Table 13.2 Critical values of t when α = .05

| df | One-tailed critical value | Two-tailed critical value |
|---|---|---|
| 3 | 2.353 | 3.182 |
| 4 | 2.132 | 2.776 |
| 5 | 2.015 | 2.571 |
| 6 | 1.943 | 2.447 |
| 7 | 1.895 | 2.365 |
| 8 | 1.860 | 2.306 |
| 9 | 1.833 | 2.262 |
| 10 | 1.812 | 2.228 |
| 11 | 1.796 | 2.201 |
| 12 | 1.782 | 2.179 |
| 13 | 1.771 | 2.160 |
| 14 | 1.761 | 2.145 |
| 15 | 1.753 | 2.131 |
| 16 | 1.746 | 2.120 |
| 17 | 1.740 | 2.110 |
| 18 | 1.734 | 2.101 |
| 19 | 1.729 | 2.093 |
| 20 | 1.725 | 2.086 |
| 21 | 1.721 | 2.080 |
| 22 | 1.717 | 2.074 |
| 23 | 1.714 | 2.069 |
| 24 | 1.711 | 2.064 |
| 25 | 1.708 | 2.060 |
| 30 | 1.697 | 2.042 |
| 35 | 1.690 | 2.030 |
| 40 | 1.684 | 2.021 |
| 45 | 1.679 | 2.014 |
| 50 | 1.676 | 2.009 |
| 60 | 1.671 | 2.000 |
| 70 | 1.667 | 1.994 |
| 80 | 1.664 | 1.990 |
| 90 | 1.662 | 1.987 |
| 100 | 1.660 | 1.984 |

Thus far, we have considered what is called a two-tailed test, where we reject the null hypothesis if the t score for the sample is extreme in either direction. This test makes sense when we believe that the sample mean might differ from the hypothetical population mean but we do not have good reason to expect the difference to go in a particular direction. But it is also possible to do a one-tailed test, where we reject the null hypothesis only if the t score for the sample is extreme in one direction that we specify before collecting the data. This test makes sense when we have good reason to expect the sample mean will differ from the hypothetical population mean in a particular direction.

Here is how it works. Each one-tailed critical value in Table 13.2 can again be interpreted as a pair of values: one positive and one negative. A t score below the lower critical value is in the lowest 5% of the distribution, and a t score above the upper critical value is in the highest 5% of the distribution. For 24 degrees of freedom, these values are −1.711 and 1.711. (These are represented by the green vertical lines in Figure 13.1.) However, for a one-tailed test, we must decide before collecting data whether we expect the sample mean to be lower than the hypothetical population mean, in which case we would use only the lower critical value, or we expect the sample mean to be greater than the hypothetical population mean, in which case we would use only the upper critical value. Notice that we still reject the null hypothesis when the t score for our sample is in the most extreme 5% of the t scores we would expect if the null hypothesis were true—so α remains at .05. We have simply redefined extreme to refer only to one tail of the distribution. The advantage of the one-tailed test is that critical values are less extreme. If the sample mean differs from the hypothetical population mean in the expected direction, then we have a better chance of rejecting the null hypothesis. The disadvantage is that if the sample mean differs from the hypothetical population mean in the unexpected direction, then there is no chance at all of rejecting the null hypothesis.
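The decision rules for two-tailed and one-tailed tests can be written as a short function. This is a minimal Python sketch: the dictionary holds only three rows copied from Table 13.2, and the function name `reject_null`, its parameters, and the t score of 1.80 are hypothetical choices made for illustration.

```python
# Critical values copied from three rows of Table 13.2 (alpha = .05).
CRITICAL_T = {
    9: {"one": 1.833, "two": 2.262},
    13: {"one": 1.771, "two": 2.160},
    24: {"one": 1.711, "two": 2.064},
}

def reject_null(t, df, tails="two", direction=None):
    """Return True if t falls in the rejection region.

    For a one-tailed test, direction must be "lower" or "upper" and
    should be chosen before the data are collected.
    """
    if tails == "two":
        return abs(t) > CRITICAL_T[df]["two"]
    crit = CRITICAL_T[df]["one"]
    if direction == "upper":
        return t > crit
    if direction == "lower":
        return t < -crit
    raise ValueError("a one-tailed test needs direction='lower' or 'upper'")

t = 1.80  # hypothetical t score with 24 degrees of freedom
print(reject_null(t, 24, tails="two"))
print(reject_null(t, 24, tails="one", direction="upper"))
print(reject_null(t, 24, tails="one", direction="lower"))
```

The same t score is retained by the two-tailed test, rejected by a one-tailed test in the expected direction, and retained by a one-tailed test in the opposite direction, which is the trade-off described above.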

Example One-Sample t-Test

Imagine that a health psychologist is interested in the accuracy of university students’ estimates of the number of calories in a chocolate chip cookie. He shows the cookie to a sample of 10 students and asks each one to estimate the number of calories in it. Because the actual number of calories in the cookie is 250, this is the hypothetical population mean of interest (µ0). The null hypothesis is that the mean estimate for the population (μ) is 250. Because he has no real sense of whether the students will underestimate or overestimate the number of calories, he decides to do a two-tailed test. Now imagine further that the participants’ actual estimates are as follows:

250, 280, 200, 150, 175, 200, 200, 220, 180, 250.

The mean estimate for the sample (M) is 212.00 calories and the standard deviation (SD) is 39.17. The health psychologist can now compute the t score for his sample:

t = (212.00 − 250) / (39.17 / √10) = −3.07

If he enters the data into one of the online analysis tools or uses SPSS, it would also tell him that the two-tailed p value for this t score (with 10 − 1 = 9 degrees of freedom) is .013. Because this is less than .05, the health psychologist would reject the null hypothesis and conclude that university students tend to underestimate the number of calories in a chocolate chip cookie. If he computes the t score by hand, he could look at Table 13.2 and see that the critical value of t for a two-tailed test with 9 degrees of freedom is ±2.262. The fact that his t score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis. Using APA style, these results would be reported as follows: t(9) = −3.07, p = .01. Note that the t and p are italicized, the degrees of freedom appear in parentheses with no decimal remainder, and the values of t and p are rounded to two decimal places.

Finally, if this researcher had gone into this study with good reason to expect that university students underestimate the number of calories, then he could have done a one-tailed test instead of a two-tailed test. The only thing this decision would change is the critical value, which would be −1.833. This slightly less extreme value would make it a bit easier to reject the null hypothesis. However, if it turned out that university students overestimate the number of calories—no matter how much they overestimate it—the researcher would not have been able to reject the null hypothesis.
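The hand computation for this example can be sketched in Python. The sketch starts from the summary statistics reported above (M, SD, and N) and takes the critical values from Table 13.2. The variable names are chosen for this illustration only.

```python
import math

M = 212.00   # sample mean reported in the example
mu0 = 250    # hypothetical population mean (actual calories in the cookie)
SD = 39.17   # sample standard deviation reported in the example
N = 10       # sample size

t = (M - mu0) / (SD / math.sqrt(N))
df = N - 1

critical_two_tailed = 2.262  # Table 13.2, df = 9
critical_one_tailed = 1.833  # Table 13.2, df = 9

# Two-tailed test: reject if t is extreme in either direction.
reject_two_tailed = abs(t) > critical_two_tailed
# One-tailed test predicting underestimation: only the lower tail counts.
reject_one_tailed_lower = t < -critical_one_tailed

print(f"t({df}) = {t:.2f}")
print(reject_two_tailed, reject_one_tailed_lower)
```

Both decisions come out the same way here because the t score is well beyond either critical value. They would differ only for a t score between −2.262 and −1.833.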

The Dependent-Samples t-Test

The dependent-samples t-test (sometimes called the paired-samples t-test) is used to compare two means for the same sample tested at two different times or under two different conditions. This comparison is appropriate for pretest-posttest designs or within-subjects experiments. The null hypothesis is that the means at the two times or under the two conditions are the same in the population. The alternative hypothesis is that they are not the same. This test can also be one-tailed if the researcher has good reason to expect the difference goes in a particular direction.

It helps to think of the dependent-samples t-test as a special case of the one-sample t-test. The first step is to reduce the two scores for each participant to a single difference score by taking the difference between them. At this point, the dependent-samples t-test becomes a one-sample t-test on the difference scores. The hypothetical population mean (µ0) of interest is 0 because this is what the mean difference score would be if there were no difference on average between the two times or two conditions. We can now think of the null hypothesis as being that the mean difference score in the population is 0 (µ = 0) and the alternative hypothesis as being that the mean difference score in the population is not 0 (µ ≠ 0).

Example Dependent-Samples t-Test

Imagine that the health psychologist now knows that people tend to underestimate the number of calories in junk food and has developed a short training program to improve their estimates. To test the effectiveness of this program, he conducts a pretest-posttest study in which 10 participants estimate the number of calories in a chocolate chip cookie before the training program and then again afterward. Because he expects the program to increase the participants’ estimates, he decides to do a one-tailed test. Now imagine further that the pretest estimates are

230, 250, 280, 175, 150, 200, 180, 210, 220, 190

and that the posttest estimates (for the same participants in the same order) are

250, 260, 250, 200, 160, 200, 200, 180, 230, 240.

The difference scores, then, are as follows:

20, 10, −30, 25, 10, 0, 20, −30, 10, 50.

Note that it does not matter whether the first set of scores is subtracted from the second or the second from the first as long as it is done the same way for all participants. In this example, it makes sense to subtract the pretest estimates from the posttest estimates so that positive difference scores mean that the estimates went up after the training and negative difference scores mean the estimates went down.

The mean of the difference scores is 8.50 with a standard deviation of 24.27. The health psychologist can now compute the t score for his sample as follows:

t = (8.50 − 0) / (24.27 / √10) = 1.11

If he enters the data into one of the online analysis tools or uses Excel or SPSS, it would tell him that the one-tailed p value for this t score (again with 10 − 1 = 9 degrees of freedom) is .148. Because this is greater than .05, he would retain the null hypothesis and conclude that the training program does not significantly increase people’s calorie estimates. If he were to compute the t score by hand, he could look at Table 13.2 and see that the critical value of t for a one-tailed test with 9 degrees of freedom is 1.833. (It is positive this time because he was expecting a positive mean difference score.) The fact that his t score was less extreme than this critical value would tell him that his p value is greater than .05 and that he should fail to reject the null hypothesis.
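The reduction to difference scores followed by a one-sample t-test can be sketched in Python using only the standard library. The pretest and posttest estimates are the ones listed in the example. The variable names are illustrative, and the critical value is taken from Table 13.2.

```python
import math
import statistics

pretest = [230, 250, 280, 175, 150, 200, 180, 210, 220, 190]
posttest = [250, 260, 250, 200, 160, 200, 200, 180, 230, 240]

# Posttest minus pretest, so positive scores mean the estimates went up.
differences = [post - pre for pre, post in zip(pretest, posttest)]

mean_diff = statistics.mean(differences)
sd_diff = statistics.stdev(differences)  # sample SD (divides by N - 1)
n = len(differences)

# One-sample t-test on the difference scores with mu0 = 0.
t = (mean_diff - 0) / (sd_diff / math.sqrt(n))

critical_one_tailed = 1.833  # Table 13.2, df = 9, upper tail
reject = t > critical_one_tailed

print(differences)
print(f"M = {mean_diff:.2f}, SD = {sd_diff:.2f}, t({n - 1}) = {t:.2f}")
print(reject)
```

Subtracting in the other direction would flip the sign of every difference score and of t, so the one-tailed critical value would then have to be −1.833.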

The Independent-Samples t-Test

The independent-samples t-test is used to compare the means of two separate samples (M1 and M2). The two samples might have been tested under different conditions in a between-subjects experiment, or they could be pre-existing groups in a cross-sectional design (e.g., women and men, extraverts and introverts). The null hypothesis is that the means of the two populations are the same: µ1 = µ2. The alternative hypothesis is that they are not the same: µ1 ≠ µ2. Again, the test can be one-tailed if the researcher has good reason to expect the difference goes in a particular direction.

The t statistic here is a bit more complicated because it must take into account two sample means, two standard deviations, and two sample sizes. The formula is as follows:

t = (M1 − M2) / √(SD1²/n1 + SD2²/n2)

Notice that this formula includes squared standard deviations (the variances) that appear inside the square root symbol. Also, lowercase n1 and n2 refer to the sample sizes in the two groups or conditions (as opposed to capital N, which generally refers to the total sample size). The only additional thing to know here is that there are N − 2 degrees of freedom for the independent-samples t-test.

Example Independent-Samples t-Test

Now the health psychologist wants to compare the calorie estimates of people who regularly eat junk food with the estimates of people who rarely eat junk food. He believes the difference could come out in either direction so he decides to conduct a two-tailed test. He collects data from a sample of eight participants who eat junk food regularly and seven participants who rarely eat junk food. The data are as follows:

Junk food eaters: 180, 220, 150, 85, 200, 170, 150, 190

Non–junk food eaters: 200, 240, 190, 175, 200, 300, 240

The mean for the non-junk food eaters is 220.71 with a standard deviation of 42.66. The mean for the junk food eaters is 168.12 with a standard deviation of 41.23. He can now compute his t score as follows:

t = (220.71 − 168.12) / √(42.66²/7 + 41.23²/8) = 2.42

If he enters the data into one of the online analysis tools or uses Excel or SPSS, it would tell him that the two-tailed p value for this t score (with 15 − 2 = 13 degrees of freedom) is .031. Because this p value is less than .05, the health psychologist would reject the null hypothesis and conclude that people who eat junk food regularly make lower calorie estimates than people who eat it rarely. If he were to compute the t score by hand, he could look at Table 13.2 and see that the critical value of t for a two-tailed test with 13 degrees of freedom is ±2.160. The fact that his t score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.
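The independent-samples computation can be sketched in Python from the raw estimates listed in the example. The sketch uses the t formula and the N − 2 degrees of freedom described in this section and takes the critical value from Table 13.2. The variable names are illustrative.

```python
import math
import statistics

junk = [180, 220, 150, 85, 200, 170, 150, 190]
non_junk = [200, 240, 190, 175, 200, 300, 240]

m1, m2 = statistics.mean(non_junk), statistics.mean(junk)
# statistics.variance is the squared sample SD (divides by n - 1).
var1, var2 = statistics.variance(non_junk), statistics.variance(junk)
n1, n2 = len(non_junk), len(junk)

# Difference between the means over the square root of the summed
# variance-to-sample-size ratios.
t = (m1 - m2) / math.sqrt(var1 / n1 + var2 / n2)
df = n1 + n2 - 2

critical_two_tailed = 2.160  # Table 13.2, df = 13
reject = abs(t) > critical_two_tailed

print(f"M1 = {m1:.2f}, SD1 = {math.sqrt(var1):.2f}")
print(f"M2 = {m2:.2f}, SD2 = {math.sqrt(var2):.2f}")
print(f"t({df}) = {t:.2f}, reject = {reject}")
```

Which group is treated as the first sample affects only the sign of t. A two-tailed decision depends on its absolute value.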

The Analysis of Variance

T-tests are used to compare two means (a sample mean with a population mean, the means of two conditions or two groups). When there are more than two groups or condition means to be compared, the most common null hypothesis test is the analysis of variance (ANOVA). In this section, we look primarily at the one-way ANOVA, which is used for between-subjects designs with a single independent variable. We then briefly consider some other versions of the ANOVA that are used for within-subjects and factorial research designs.

One-Way ANOVA

The one-way ANOVA is used to compare the means of more than two samples (M1, M2, …, MG) in a between-subjects design. The null hypothesis is that all the means are equal in the population: µ1 = µ2 = … = µG. The alternative hypothesis is that not all the means in the population are equal.

The test statistic for the ANOVA is called F. It is a ratio of two estimates of the population variance based on the sample data. One estimate of the population variance is called the mean squares between groups (MSB) and is based on the differences among the sample means. The other is called the mean squares within groups (MSW) and is based on the differences among the scores within each group. The F statistic is the ratio of the MSB to the MSW and can, therefore, be expressed as follows:

F = MSB/MSW

Again, the reason that F is useful is that we know how it is distributed when the null hypothesis is true. As shown in Figure 13.2, this distribution is unimodal and positively skewed with values that cluster around 1. The precise shape of the distribution depends on both the number of groups and the sample size, and there are degrees of freedom values associated with each of these. The between-groups degrees of freedom is the number of groups minus one: dfB = (G − 1). The within-groups degrees of freedom is the total sample size minus the number of groups: dfW = N − G. Again, knowing the distribution of F when the null hypothesis is true allows us to find the p value.

The online tools in Chapter 12 and statistical software such as Excel and SPSS will compute F and find the p value. If p is equal to or less than .05, then we reject the null hypothesis and conclude that there are differences among the group means in the population. If p is greater than .05, then we retain the null hypothesis and conclude that there is not enough evidence to say that there are differences. In the unlikely event that we would compute F by hand, we can use a table of critical values like Table 13.3 to make the decision. The idea is that any F ratio greater than the critical value has a p value of less than .05. Thus if the F ratio we compute is beyond the critical value, then we reject the null hypothesis. If the F ratio we compute is less than the critical value, then we retain the null hypothesis.

Table 13.3 Critical values of F when α = .05

| dfW | dfB = 2 | dfB = 3 | dfB = 4 |
|---|---|---|---|
| 8 | 4.459 | 4.066 | 3.838 |
| 9 | 4.256 | 3.863 | 3.633 |
| 10 | 4.103 | 3.708 | 3.478 |
| 11 | 3.982 | 3.587 | 3.357 |
| 12 | 3.885 | 3.490 | 3.259 |
| 13 | 3.806 | 3.411 | 3.179 |
| 14 | 3.739 | 3.344 | 3.112 |
| 15 | 3.682 | 3.287 | 3.056 |
| 16 | 3.634 | 3.239 | 3.007 |
| 17 | 3.592 | 3.197 | 2.965 |
| 18 | 3.555 | 3.160 | 2.928 |
| 19 | 3.522 | 3.127 | 2.895 |
| 20 | 3.493 | 3.098 | 2.866 |
| 21 | 3.467 | 3.072 | 2.840 |
| 22 | 3.443 | 3.049 | 2.817 |
| 23 | 3.422 | 3.028 | 2.796 |
| 24 | 3.403 | 3.009 | 2.776 |
| 25 | 3.385 | 2.991 | 2.759 |
| 30 | 3.316 | 2.922 | 2.690 |
| 35 | 3.267 | 2.874 | 2.641 |
| 40 | 3.232 | 2.839 | 2.606 |
| 45 | 3.204 | 2.812 | 2.579 |
| 50 | 3.183 | 2.790 | 2.557 |
| 55 | 3.165 | 2.773 | 2.540 |
| 60 | 3.150 | 2.758 | 2.525 |
| 65 | 3.138 | 2.746 | 2.513 |
| 70 | 3.128 | 2.736 | 2.503 |
| 75 | 3.119 | 2.727 | 2.494 |
| 80 | 3.111 | 2.719 | 2.486 |
| 85 | 3.104 | 2.712 | 2.479 |
| 90 | 3.098 | 2.706 | 2.473 |
| 95 | 3.092 | 2.700 | 2.467 |
| 100 | 3.087 | 2.696 | 2.463 |

Example One-Way ANOVA

Imagine that the health psychologist wants to compare the calorie estimates of psychology majors, nutrition majors, and professional dieticians. He collects the following data:

Psych majors: 200, 180, 220, 160, 150, 200, 190, 200

Nutrition majors: 190, 220, 200, 230, 160, 150, 200, 210

Dieticians: 220, 250, 240, 275, 250, 230, 200, 240

The means are 187.50 (SD = 23.14), 195.00 (SD = 27.77), and 238.13 (SD = 22.35), respectively. So it appears that dieticians made substantially more accurate estimates on average. The researcher would almost certainly enter these data into a program such as Excel or SPSS, which would compute F for him or her and find the p value. Table 13.4 shows the output of the one-way ANOVA function in Excel for these data. This table is referred to as an ANOVA table. It shows that MSB is 5,971.88, MSW is 602.23, and their ratio, F, is 9.92. The p value is .0009. Because this value is below .05, the researcher would reject the null hypothesis and conclude that the mean calorie estimates for the three groups are not the same in the population. Notice that the ANOVA table also includes the “sum of squares” (SS) for between groups and for within groups. These values are computed on the way to finding MSB and MSW but are not typically reported by the researcher. Finally, if the researcher were to compute the F ratio by hand, he could look at Table 13.3 and see that the critical value of F with 2 and 21 degrees of freedom is 3.467 (the same value in Table 13.4 under Fcrit). The fact that his F score was more extreme than this critical value would tell him that his p value is less than .05 and that he should reject the null hypothesis.

Table 13.4 One-way ANOVA output from Excel

| Source of variation | SS | df | MS | F | p-value | Fcrit |
|---|---|---|---|---|---|---|
| Between groups | 11,943.75 | 2 | 5,971.875 | 9.916234 | 0.000928 | 3.4668 |
| Within groups | 12,646.88 | 21 | 602.2321 | | | |
| Total | 24,590.63 | 23 | | | | |
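The entries in the ANOVA table can be reproduced with a short Python sketch. It assumes eight estimates in each group (N = 24), which is what the 21 within-groups degrees of freedom and the reported standard deviations imply. The variable names are illustrative, and the critical value is taken from Table 13.3.

```python
import statistics

groups = {
    "psych": [200, 180, 220, 160, 150, 200, 190, 200],
    "nutrition": [190, 220, 200, 230, 160, 150, 200, 210],
    "dieticians": [220, 250, 240, 275, 250, 230, 200, 240],
}

all_scores = [x for scores in groups.values() for x in scores]
grand_mean = statistics.mean(all_scores)
N, G = len(all_scores), len(groups)

# Between groups: how far each group mean lies from the grand mean.
ss_between = sum(
    len(s) * (statistics.mean(s) - grand_mean) ** 2 for s in groups.values()
)
# Within groups: how far each score lies from its own group mean.
ss_within = sum(
    (x - statistics.mean(s)) ** 2 for s in groups.values() for x in s
)

df_between, df_within = G - 1, N - G
ms_between = ss_between / df_between
ms_within = ss_within / df_within
F = ms_between / ms_within

f_critical = 3.467  # Table 13.3, dfB = 2, dfW = 21
print(f"SSB = {ss_between:.2f}, SSW = {ss_within:.2f}")
print(f"F({df_between}, {df_within}) = {F:.2f}, reject = {F > f_critical}")
```

The sums of squares divided by their degrees of freedom give MSB and MSW, which is why the SS columns appear in the table even though they are rarely reported.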

ANOVA Elaborations

Post Hoc Comparisons

When we reject the null hypothesis in a one-way ANOVA, we conclude that the group means are not all the same in the population. But this can indicate different things. With three groups, it can indicate that all three means are significantly different from each other. Or it can indicate that one of the means is significantly different from the other two, but the other two are not significantly different from each other. It could be, for example, that the mean calorie estimates of psychology majors, nutrition majors, and dieticians are all significantly different from each other. Or it could be that the mean for dieticians is significantly different from the means for psychology and nutrition majors, but the means for psychology and nutrition majors are not significantly different from each other. For this reason, statistically significant one-way ANOVA results are typically followed up with a series of post hoc comparisons of selected pairs of group means to determine which are different from which others.

One approach to post hoc comparisons would be to conduct a series of independent-samples t-tests comparing each group mean to each of the other group means. But there is a problem with this approach. In general, if we conduct a t-test when the null hypothesis is true, we have a 5% chance of mistakenly rejecting the null hypothesis (see Section 13.3 “Additional Considerations” for more on such Type I errors). If we conduct several t-tests when the null hypothesis is true, the chance of mistakenly rejecting at least one null hypothesis increases with each test we conduct. Thus researchers do not usually make post hoc comparisons using standard t-tests because there is too great a chance that they will mistakenly reject at least one null hypothesis. Instead, they use one of several modified t-test procedures, among them the Bonferroni procedure, Fisher’s least significant difference (LSD) test, and Tukey’s honestly significant difference (HSD) test. The details of these approaches are beyond the scope of this book, but it is important to understand their purpose. It is to keep the risk of mistakenly rejecting a true null hypothesis to an acceptable level (close to 5%).
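How quickly the risk grows can be illustrated with a small Python sketch. It makes the simplifying assumption that the tests are independent, which is not strictly true of pairwise comparisons that share groups, so the numbers are a rough guide only. The Bonferroni procedure named above divides α by the number of comparisons. The function names are invented for this example.

```python
def familywise_error(alpha, k):
    """Chance of at least one Type I error across k independent tests."""
    return 1 - (1 - alpha) ** k

def bonferroni_alpha(alpha, k):
    """Per-comparison alpha under the Bonferroni procedure."""
    return alpha / k

alpha = 0.05
k = 3  # three groups give three pairwise comparisons

uncorrected = familywise_error(alpha, k)
corrected = familywise_error(bonferroni_alpha(alpha, k), k)

print(f"uncorrected: {uncorrected:.3f}")
print(f"with Bonferroni: {corrected:.3f}")
```

With the corrected per-comparison α, the overall chance of at least one mistaken rejection stays just under .05 instead of climbing with every additional test.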

Repeated-Measures ANOVA

Recall that the one-way ANOVA is appropriate for between-subjects designs in which the means being compared come from separate groups of participants. It is not appropriate for within-subjects designs in which the means being compared come from the same participants tested under different conditions or at different times. This requires a slightly different approach, called the repeated-measures ANOVA. The basics of the repeated-measures ANOVA are the same as for the one-way ANOVA. The main difference is that measuring the dependent variable multiple times for each participant allows for a more refined measure of MSW. Imagine, for example, that the dependent variable in a study is a measure of reaction time. Some participants will be faster or slower than others because of stable individual differences in their nervous systems, muscles, and other factors. In a between-subjects design, these stable individual differences would simply add to the variability within the groups and increase the value of MSW (which would, in turn, decrease the value of F). In a within-subjects design, however, these stable individual differences can be measured and subtracted from the value of MSW. This lower value of MSW means a higher value of F and a more sensitive test.
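The effect of removing stable individual differences from MSW can be seen in a small Python sketch. The reaction times below are entirely hypothetical and were invented for this illustration. The sketch computes one F ratio that leaves participant differences in the error term, as a between-subjects analysis would, and one that subtracts them out.

```python
# Hypothetical reaction times (ms): one row per participant,
# one column per condition.
scores = [
    [300, 320, 340],
    [400, 410, 450],
    [350, 380, 380],
    [500, 520, 540],
]
n = len(scores)     # participants
k = len(scores[0])  # conditions

grand_mean = sum(sum(row) for row in scores) / (n * k)
condition_means = [sum(row[j] for row in scores) / n for j in range(k)]
participant_means = [sum(row) / k for row in scores]

ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
ss_conditions = n * sum((m - grand_mean) ** 2 for m in condition_means)
ss_participants = k * sum((m - grand_mean) ** 2 for m in participant_means)
ss_error = ss_total - ss_conditions - ss_participants

ms_conditions = ss_conditions / (k - 1)

# Between-subjects style: individual differences stay in the error term.
msw_between = (ss_participants + ss_error) / (k * (n - 1))
f_between = ms_conditions / msw_between

# Repeated measures: individual differences are removed from the error term.
msw_repeated = ss_error / ((n - 1) * (k - 1))
f_repeated = ms_conditions / msw_repeated

print(f"F treating scores as separate groups: {f_between:.2f}")
print(f"F with participant differences removed: {f_repeated:.2f}")
```

Because the hypothetical participants differ far more from one another than the conditions do, almost all of the within-groups variability is attributable to participants, and removing it makes F much larger.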

Factorial ANOVA

When more than one independent variable is included in a factorial design, the appropriate approach is the factorial ANOVA. Again, the basics of the factorial ANOVA are the same as for the one-way and repeated-measures ANOVAs. The main difference is that it produces an F ratio and p value for each main effect and for each interaction. Returning to our calorie estimation example, imagine that the health psychologist tests the effect of participant major (psychology vs. nutrition) and food type (cookie vs. hamburger) in a factorial design. A factorial ANOVA would produce separate F ratios and p values for the main effect of major, the main effect of food type, and the interaction between major and food. Appropriate modifications must be made depending on whether the design is between-subjects, within-subjects, or mixed.

Testing Correlation Coefficients

For relationships between quantitative variables, where Pearson’s r (the correlation coefficient) is used to describe the strength of those relationships, the appropriate null hypothesis test is a test of the correlation coefficient. The basic logic is exactly the same as for other null hypothesis tests. In this case, the null hypothesis is that there is no relationship in the population. We can use the Greek lowercase rho (ρ) to represent the relevant parameter: ρ = 0. The alternative hypothesis is that there is a relationship in the population: ρ ≠ 0. As with the t-test, this test can be two-tailed if the researcher has no expectation about the direction of the relationship or one-tailed if the researcher expects the relationship to go in a particular direction.

It is possible to use the correlation coefficient for the sample to compute a t score with N − 2 degrees of freedom and then to proceed as for a t-test. However, because of the way it is computed, the correlation coefficient can also be treated as its own test statistic. The online statistical tools and statistical software such as Excel and SPSS generally compute the correlation coefficient and provide the p value associated with that value. As always, if the p value is equal to or less than .05, we reject the null hypothesis and conclude that there is a relationship between the variables in the population. If the p value is greater than .05, we retain the null hypothesis and conclude that there is not enough evidence to say there is a relationship in the population. If we compute the correlation coefficient by hand, we can use a table like Table 13.5, which shows the critical values of r for various sample sizes when α is .05. A sample value of the correlation coefficient that is more extreme than the critical value is statistically significant.

| N | Critical value of r (one-tailed) | Critical value of r (two-tailed) |
| --- | --- | --- |
| 5 | .805 | .878 |
| 10 | .549 | .632 |
| 15 | .441 | .514 |
| 20 | .378 | .444 |
| 25 | .337 | .396 |
| 30 | .306 | .361 |
| 35 | .283 | .334 |
| 40 | .264 | .312 |
| 45 | .248 | .294 |
| 50 | .235 | .279 |
| 55 | .224 | .266 |
| 60 | .214 | .254 |
| 65 | .206 | .244 |
| 70 | .198 | .235 |
| 75 | .191 | .227 |
| 80 | .185 | .220 |
| 85 | .180 | .213 |
| 90 | .174 | .207 |
| 95 | .170 | .202 |
| 100 | .165 | .197 |

Example Test of a Correlation Coefficient

Imagine that the health psychologist is interested in the correlation between people’s calorie estimates and their weight. She has no expectation about the direction of the relationship, so she decides to conduct a two-tailed test. She computes the correlation coefficient for a sample of 22 university students and finds that Pearson’s r is −.21. The statistical software she uses tells her that the p value is .348. It is greater than .05, so she retains the null hypothesis and concludes that there is not enough evidence to say there is a relationship between people’s calorie estimates and their weight. If she were to compute the correlation coefficient by hand, she could look at Table 13.5, which is organized by sample size (N). Her sample of 22 falls between the rows for N = 20 and N = 25, whose two-tailed critical values are .444 and .396. The fact that the correlation coefficient for her sample is less extreme than either of these critical values tells her that the p value is greater than .05 and that she should retain the null hypothesis.
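The conversion from r to a t score with N − 2 degrees of freedom can be sketched in a few lines of Python. This is a minimal illustration rather than the procedure any particular statistics package uses. It assumes the standard formula t = r√(N − 2) / √(1 − r²), which the text refers to but does not spell out, and the function names (`t_from_r`, `t_pdf`, `two_tailed_p`) are hypothetical. The p value is approximated by numerically integrating the t distribution with Simpson's rule.

```python
import math


def t_from_r(r, n):
    """Convert a sample Pearson's r into a t score with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


def t_pdf(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Approximate the two-tailed p value with Simpson's rule (steps must be even)."""
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area_0_to_t = total * h / 3   # area under the curve between 0 and |t|
    return 1 - 2 * area_0_to_t    # what remains in the two tails


# Values from the example: r = -.21 in a sample of N = 22 students.
r, n = -0.21, 22
t = t_from_r(r, n)
p = two_tailed_p(t, n - 2)
print(f"t({n - 2}) = {t:.2f}, two-tailed p = {p:.3f}")
```

For these values the approximation should land close to the p value of .348 reported by the software in the example, well above .05.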

Learning Objectives

- Explain the difference between between-subjects and within-subjects experiments, list some of the pros and cons of each approach, and decide which approach to use to answer a particular research question.

- Define random assignment, distinguish it from random sampling, explain its purpose in experimental research, and use some simple strategies to implement it.

- Define several types of carryover effect, give examples of each, and explain how counterbalancing helps to deal with them.

In this section, we look at some different ways to design an experiment. The primary distinction we will make is between approaches in which each participant experiences one level of the independent variable and approaches in which each participant experiences all levels of the independent variable. The former are called between-subjects experiments and the latter are called within-subjects experiments.

Between-Subjects Experiments

In a between-subjects experiment, each participant is tested in only one condition. For example, a researcher with a sample of 100 university students might assign half of them to write about a traumatic event and the other half to write about a neutral event. Or a researcher with a sample of 60 people with severe agoraphobia (fear of open spaces) might assign 20 of them to receive each of three different treatments for that disorder. It is essential in a between-subjects experiment that the researcher assigns participants to conditions so that the different groups are, on average, highly similar to each other. Those in a trauma condition and a neutral condition, for example, should include a similar proportion of men and women, and they should have similar average IQs, similar average levels of motivation, similar average numbers of health problems, and so on. This matching is a matter of controlling these extraneous participant variables across conditions so that they do not become confounding variables.

Random Assignment

The primary way that researchers accomplish this kind of control of extraneous variables across conditions is called random assignment, which means using a random process to decide which participants are tested in which conditions. Do not confuse random assignment with random sampling. Random sampling is a method for selecting a sample from a population, and it is rarely used in psychological research. Random assignment is a method for assigning participants in a sample to the different conditions, and it is an important element of all experimental research in psychology and other fields too.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands heads, the participant is assigned to Condition A, and if it lands tails, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions—one for each participant expected to be in the experiment—is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as they are tested. When the procedure is computerized, the computer program often handles the random assignment.
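The strict procedure described above, in which each participant is assigned independently and with an equal chance of each condition, might be sketched as follows. This is only an illustration: the function name `simple_random_assignment` and the `seed` parameter are assumptions made for the example, not part of any particular randomization tool.

```python
import random


def simple_random_assignment(n_participants, conditions, seed=None):
    """Assign each participant to a condition independently and with equal probability."""
    rng = random.Random(seed)  # a seed makes the sequence reproducible
    return [rng.choice(conditions) for _ in range(n_participants)]


# Generate the full sequence ahead of time for 12 expected participants.
sequence = simple_random_assignment(12, ["A", "B", "C"], seed=1)
for participant, condition in enumerate(sequence, start=1):
    print(participant, condition)
```

Because each draw is independent, nothing in this sketch forces the three conditions to end up with the same number of participants.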

One problem with coin flipping and other strict procedures for random assignment is that they are likely to result in unequal sample sizes in the different conditions. Unequal sample sizes are generally not a serious problem, and you should never throw away data you have already collected to achieve equal sample sizes. However, for a fixed number of participants, it is statistically most efficient to divide them into equal-sized groups. It is standard practice, therefore, to use a kind of modified random assignment that keeps the number of participants in each group as similar as possible. One approach is block randomization. In block randomization, all the conditions occur once in the sequence before any of them is repeated. Then they all occur again before any of them is repeated again. Within each of these “blocks,” the conditions occur in a random order. Again, the sequence of conditions is usually generated before any participants are tested, and each new participant is assigned to the next condition in the sequence. Table 5.2 shows such a sequence for assigning nine participants to three conditions. The Research Randomizer website (http://www.randomizer.org) will generate block randomization sequences for any number of participants and conditions. Again, when the procedure is computerized, the computer program often handles the block randomization.

| Participant | Condition |
| --- | --- |
| 1 | A |
| 2 | C |
| 3 | B |
| 4 | B |
| 5 | C |
| 6 | A |
| 7 | C |
| 8 | B |
| 9 | A |
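A sequence like the one in the table can be generated with a short sketch of block randomization. The function name `block_randomization` and its parameters are hypothetical, chosen for this example. Each block contains every condition once, in a freshly shuffled order.

```python
import random


def block_randomization(n_participants, conditions, seed=None):
    """Build a sequence in which every condition occurs once per block, in random order."""
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions)
        rng.shuffle(block)        # random order within this block
        sequence.extend(block)
    return sequence[:n_participants]  # trim a partial final block if needed


# Nine participants and three conditions give three complete blocks.
sequence = block_randomization(9, ["A", "B", "C"], seed=0)
for participant, condition in enumerate(sequence, start=1):
    print(participant, condition)
```

With a number of participants that is a multiple of the number of conditions, the group sizes come out exactly equal. Otherwise they differ by at most one.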

Random assignment is not guaranteed to control all extraneous variables across conditions. The process is random, so it is always possible that just by chance, the participants in one condition might turn out to be substantially older, less tired, more motivated, or less depressed on average than the participants in another condition. However, there are some reasons that this possibility is not a major concern. One is that random assignment works better than one might expect, especially for large samples. Another is that the inferential statistics that researchers use to decide whether a difference between groups reflects a difference in the population take the “fallibility” of random assignment into account. Yet another reason is that even if random assignment does result in a confounding variable and therefore produces misleading results, this confound is likely to be detected when the experiment is replicated. The upshot is that random assignment to conditions, although not infallible in terms of controlling extraneous variables, is always considered a strength of a research design.

Matched Groups

An alternative to simple random assignment of participants to conditions is the use of a matched-groups design. Using this design, participants in the various conditions are matched on the dependent variable or on some extraneous variable(s) prior to the manipulation of the independent variable. This guarantees that these variables will not be confounded across the experimental conditions. For instance, if we want to determine whether expressive writing affects people's health, then we could start by measuring various health-related variables in our prospective research participants. We could then use that information to rank-order participants according to how healthy or unhealthy they are. Next, the two healthiest participants would be randomly assigned to complete different conditions (one would be randomly assigned to the traumatic experiences writing condition and the other to the neutral writing condition). The next two healthiest participants would then be randomly assigned to complete different conditions, and so on until the two least healthy participants. This method would ensure that participants in the traumatic experiences writing condition are matched to participants in the neutral writing condition with respect to health at the beginning of the study. If at the end of the experiment, a difference in health was detected across the two conditions, then we would know that it is due to the writing manipulation and not to pre-existing differences in health.
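The rank-and-pair procedure just described can be sketched as follows. The health scores are invented for illustration, and the function name `matched_pairs_assignment` is hypothetical. The sketch assumes a single numeric health score per participant, with higher values meaning better health.

```python
import random


def matched_pairs_assignment(health_scores, conditions=("traumatic", "neutral"), seed=None):
    """Rank participants by score, pair neighbors, and randomize conditions within each pair."""
    rng = random.Random(seed)
    ranked = sorted(health_scores, key=health_scores.get, reverse=True)  # healthiest first
    assignment = {}
    # Step through the ranking two at a time; with an odd count the last person is left out.
    for i in range(0, len(ranked) - 1, 2):
        pair = ranked[i:i + 2]
        shuffled = list(conditions)
        rng.shuffle(shuffled)  # random decision about who gets which condition
        for participant, condition in zip(pair, shuffled):
            assignment[participant] = condition
    return assignment


# Made-up health scores for six prospective participants.
scores = {"P1": 82, "P2": 47, "P3": 91, "P4": 65, "P5": 70, "P6": 53}
assignment = matched_pairs_assignment(scores, seed=3)
for participant in sorted(scores, key=scores.get, reverse=True):
    print(participant, scores[participant], assignment[participant])
```

Each pair of adjacent participants in the ranking ends up split across the two writing conditions, so the groups start out closely matched on health.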

Within-Subjects Experiments

In a within-subjects experiment, each participant is tested under all conditions. Consider an experiment on the effect of a defendant’s physical attractiveness on judgments of his guilt. Again, in a between-subjects experiment, one group of participants would be shown an attractive defendant and asked to judge his guilt, and another group of participants would be shown an unattractive defendant and asked to judge his guilt. In a within-subjects experiment, however, the same group of participants would judge the guilt of both an attractive and an unattractive defendant.

The primary advantage of this approach is that it provides maximum control of extraneous participant variables. Participants in all conditions have the same mean IQ, same socioeconomic status, same number of siblings, and so on—because they are the very same people. Within-subjects experiments also make it possible to use statistical procedures that remove the effect of these extraneous participant variables on the dependent variable and therefore make the data less “noisy” and the effect of the independent variable easier to detect. We will look more closely at this idea later in the book. However, not all experiments can use a within-subjects design nor would it be desirable to do so.

Carryover Effects and Counterbalancing

The primary disadvantage of within-subjects designs is that they can result in order effects. An order effect occurs when participants' responses in the various conditions are affected by the order of conditions to which they were exposed. One type of order effect is a carryover effect. A carryover effect is an effect of being tested in one condition on participants’ behavior in later conditions. One type of carryover effect is a practice effect, where participants perform a task better in later conditions because they have had a chance to practice it. Another type is a fatigue effect, where participants perform a task worse in later conditions because they become tired or bored. Being tested in one condition can also change how participants perceive stimuli or interpret their task in later conditions. This type of effect is called a context effect (or contrast effect). For example, an average-looking defendant might be judged more harshly when participants have just judged an attractive defendant than when they have just judged an unattractive defendant. Within-subjects experiments also make it easier for participants to guess the hypothesis. For example, a participant who is asked to judge the guilt of an attractive defendant and then is asked to judge the guilt of an unattractive defendant is likely to guess that the hypothesis is that defendant attractiveness affects judgments of guilt. This knowledge could lead the participant to judge the unattractive defendant more harshly because he thinks this is what he is expected to do. Or it could make participants judge the two defendants similarly in an effort to be “fair.”

Carryover effects can be interesting in their own right. (Does the attractiveness of one person depend on the attractiveness of other people that we have seen recently?) But when they are not the focus of the research, carryover effects can be problematic. Imagine, for example, that participants judge the guilt of an attractive defendant and then judge the guilt of an unattractive defendant. If they judge the unattractive defendant more harshly, this might be because of his unattractiveness. But it could be instead that they judge him more harshly because they are becoming bored or tired. In other words, the order of the conditions is a confounding variable. The attractive condition is always the first condition and the unattractive condition the second. Thus any difference between the conditions in terms of the dependent variable could be caused by the order of the conditions and not the independent variable itself.

There is a solution to the problem of order effects, however, that can be used in many situations. It is counterbalancing, which means testing different participants in different orders. The best method of counterbalancing is complete counterbalancing in which an equal number of participants complete each possible order of conditions. For example, half of the participants would be tested in the attractive defendant condition followed by the unattractive defendant condition, and the other half would be tested in the unattractive condition followed by the attractive condition. With three conditions, there would be six different orders (ABC, ACB, BAC, BCA, CAB, and CBA), so some participants would be tested in each of the six orders. With four conditions, there would be 24 different orders; with five conditions there would be 120 possible orders. With counterbalancing, participants are assigned to orders randomly, using the techniques we have already discussed. Thus, random assignment plays an important role in within-subjects designs just as in between-subjects designs. Here, instead of being randomly assigned to conditions, participants are randomly assigned to different orders of conditions. In fact, it can safely be said that if a study does not involve random assignment in one form or another, it is not an experiment.
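The counts of possible orders given above (2, 6, 24, and 120) and the random assignment of participants to orders can be illustrated with a short sketch. The function names `all_orders` and `complete_counterbalancing` are hypothetical, and the sketch assumes the number of participants is a multiple of the number of orders so that each order is used equally often.

```python
import itertools
import random


def all_orders(conditions):
    """List every possible order of the conditions."""
    return list(itertools.permutations(conditions))


def complete_counterbalancing(participants, conditions, seed=None):
    """Randomly assign participants to orders so each order is used equally often."""
    orders = all_orders(conditions)
    if len(participants) % len(orders) != 0:
        raise ValueError("number of participants must be a multiple of the number of orders")
    rng = random.Random(seed)
    slots = orders * (len(participants) // len(orders))  # equal copies of every order
    rng.shuffle(slots)                                   # random pairing with participants
    return dict(zip(participants, slots))


for k in (2, 3, 4, 5):
    print(k, "conditions:", len(all_orders("ABCDE"[:k])), "orders")

# Twelve participants and three conditions: each of the six orders is used twice.
plan = complete_counterbalancing(range(1, 13), "ABC", seed=0)
for participant, order in plan.items():
    print(participant, "".join(order))
```

The number of orders grows as a factorial of the number of conditions, which is why complete counterbalancing quickly becomes impractical.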

A more efficient way of counterbalancing is through a Latin square design, in which the number of orders (rows) equals the number of conditions (columns). For example, if you have four treatments, you must have four versions. Like a Sudoku puzzle, no treatment can repeat in a row or column. For four versions of four treatments, the Latin square design would look like:

| A | B | C | D |

| B | C | D | A |

| C | D | A | B |

| D | A | B | C |

You can see in the diagram above that the square has been constructed to ensure that each condition appears at each ordinal position exactly once (A appears first once, second once, third once, and fourth once). Note, however, that this simple cyclic square does not balance the sequence of conditions: whenever A is immediately followed by another condition, that condition is B. Ensuring that each condition immediately precedes and follows each other condition one time requires a balanced Latin square. A Latin square for an experiment with 6 conditions would be 6 x 6 in dimension, one for an experiment with 8 conditions would be 8 x 8 in dimension, and so on. So while complete counterbalancing of 6 conditions would require 720 orders, a Latin square would only require 6 orders.
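A square like the one shown can be built by shifting the list of conditions one position per row. The sketch below, with the hypothetical helper names `cyclic_latin_square` and `is_latin_square`, constructs such a square and checks the ordinal-position property, namely that every condition appears exactly once in each row and each column.

```python
def cyclic_latin_square(conditions):
    """Build a Latin square by shifting the list of conditions one step per row."""
    k = len(conditions)
    return [[conditions[(row + col) % k] for col in range(k)] for row in range(k)]


def is_latin_square(square):
    """Check that every condition appears exactly once in each row and each column."""
    symbols = set(square[0])
    rows_ok = all(set(row) == symbols and len(row) == len(symbols) for row in square)
    cols_ok = all(set(col) == symbols for col in zip(*square))
    return rows_ok and cols_ok and len(square) == len(symbols)


square = cyclic_latin_square(list("ABCD"))
for row in square:
    print(" ".join(row))
print("Valid Latin square:", is_latin_square(square))

# Six conditions need only six orders, compared with 720 for complete counterbalancing.
print(len(cyclic_latin_square(list("ABCDEF"))), "orders for six conditions")
```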

Finally, when the number of conditions is large, experiments can use random counterbalancing, in which the order of the conditions is randomly determined for each participant. Using this technique, every possible order of conditions is determined and then one of these orders is randomly selected for each participant. This is not as powerful a technique as complete counterbalancing or partial counterbalancing using a Latin square design. Use of random counterbalancing will result in more random error, but if order effects are likely to be small and the number of conditions is large, this is an option available to researchers.
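In code, random counterbalancing does not require listing every possible order first. Shuffling the list of conditions separately for each participant is intended to give the same result as picking one order at random from the full set. The sketch below assumes this shuffling approach, and the function name `random_counterbalancing` is hypothetical.

```python
import random


def random_counterbalancing(participants, conditions, seed=None):
    """Give each participant an independently shuffled order of the conditions."""
    rng = random.Random(seed)
    plan = {}
    for participant in participants:
        order = list(conditions)
        rng.shuffle(order)  # stands in for drawing one order from all possible orders
        plan[participant] = order
    return plan


# Six conditions would have 720 possible orders; each participant simply gets one at random.
plan = random_counterbalancing(range(1, 6), list("ABCDEF"), seed=42)
for participant, order in plan.items():
    print(participant, "".join(order))
```

Unlike complete counterbalancing, nothing here guarantees that each order, or each ordinal position, is used equally often across participants.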

There are two ways to think about what counterbalancing accomplishes. One is that it controls the order of conditions so that it is no longer a confounding variable. Instead of the attractive condition always being first and the unattractive condition always being second, the attractive condition comes first for some participants and second for others. Likewise, the unattractive condition comes first for some participants and second for others. Thus any overall difference in the dependent variable between the two conditions cannot have been caused by the order of conditions. A second way to think about what counterbalancing accomplishes is that if there are carryover effects, it makes it possible to detect them. One can analyze the data separately for each order to see whether it had an effect.

When 9 Is “Larger” Than 221

Researcher Michael Birnbaum has argued that the lack of context provided by between-subjects designs is often a bigger problem than the context effects created by within-subjects designs. To demonstrate this problem, he asked participants to rate two numbers on how large they were on a scale of 1-to-10 where 1 was “very very small” and 10 was “very very large”. One group of participants was asked to rate the number 9 and another group was asked to rate the number 221 (Birnbaum, 1999)[1]. Participants in this between-subjects design gave the number 9 a mean rating of 5.13 and the number 221 a mean rating of 3.10. In other words, they rated 9 as larger than 221! According to Birnbaum, this difference is because participants spontaneously compared 9 with other one-digit numbers (in which case it is relatively large) and compared 221 with other three-digit numbers (in which case it is relatively small).

Simultaneous Within-Subjects Designs

So far, we have discussed an approach to within-subjects designs in which participants are tested in one condition at a time. There is another approach, however, that is often used when participants make multiple responses in each condition. Imagine, for example, that participants judge the guilt of 10 attractive defendants and 10 unattractive defendants. Instead of having people make judgments about all 10 defendants of one type followed by all 10 defendants of the other type, the researcher could present all 20 defendants in a sequence that mixed the two types. The researcher could then compute each participant’s mean rating for each type of defendant. Or imagine an experiment designed to see whether people with social anxiety disorder remember negative adjectives (e.g., “stupid,” “incompetent”) better than positive ones (e.g., “happy,” “productive”). The researcher could have participants study a single list that includes both kinds of words and then have them try to recall as many words as possible. The researcher could then count the number of each type of word that was recalled.

Between-Subjects or Within-Subjects?

Almost every experiment can be conducted using either a between-subjects design or a within-subjects design. This possibility means that researchers must choose between the two approaches based on their relative merits for the particular situation.

Between-subjects experiments have the advantage of being conceptually simpler and requiring less testing time per participant. They also avoid carryover effects without the need for counterbalancing. Within-subjects experiments have the advantage of controlling extraneous participant variables, which generally reduces noise in the data and makes it easier to detect any effect of the independent variable upon the dependent variable. Within-subjects experiments also require fewer participants than between-subjects experiments to detect an effect of the same size.

A good rule of thumb, then, is that if it is possible to conduct a within-subjects experiment (with proper counterbalancing) in the time that is available per participant—and you have no serious concerns about carryover effects—this design is probably the best option. If a within-subjects design would be difficult or impossible to carry out, then you should consider a between-subjects design instead. For example, if you were testing participants in a doctor’s waiting room or shoppers in line at a grocery store, you might not have enough time to test each participant in all conditions and therefore would opt for a between-subjects design. Or imagine you were trying to reduce people’s level of prejudice by having them interact with someone of another race. A within-subjects design with counterbalancing would require testing some participants in the treatment condition first and then in a control condition. But if the treatment works and reduces people’s level of prejudice, then they would no longer be suitable for testing in the control condition. This difficulty is true for many designs that involve a treatment meant to produce long-term change in participants’ behavior (e.g., studies testing the effectiveness of psychotherapy). Clearly, a between-subjects design would be necessary here.

Remember also that using one type of design does not preclude using the other type in a different study. There is no reason that a researcher could not use both a between-subjects design and a within-subjects design to answer the same research question. In fact, professional researchers often take exactly this type of mixed methods approach.

Research methods is my favorite course to teach. It is somewhat less popular with students, but I’m working on that issue. Part of the excitement of teaching this class comes from the uniquely open framework—students get to design a research study about a topic that interests them. By reading my students’ papers every semester, I learn about a wide range of topics relevant to social work that I otherwise would not have known about. But what topic should you choose?

Chapter outline

- 2.1 Getting started

- 2.2 Sources of information

- 2.3 Finding literature

Content advisory

This chapter discusses or mentions the following topics: racism and hate groups, police violence, substance abuse, and mental health.

Learning Objectives

- Differentiate among exploratory, descriptive, and explanatory research studies

A recent news story on college students’ addictions to electronic gadgets (Lisk, 2011) [2] describes current research findings from Professor Susan Moeller and colleagues from the University of Maryland (http://withoutmedia.wordpress.com). The story raises a number of interesting questions. Just what sorts of gadgets are students addicted to? How do these addictions work? Why do they exist, and who is most likely to experience them?

Social science research is great for answering these types of questions, but to answer them thoroughly, we must take care in designing our research projects. In this chapter, we’ll discuss what aspects of a research project should be considered at the beginning, including specifying the goals of the research, the components that are common across most research projects, and a few other considerations.

When designing a research project, you should first consider what you hope to accomplish by conducting the research. What do you hope to be able to say about your topic? Do you hope to gain a deep understanding of the phenomenon you’re studying, or would you rather have a broad, but perhaps less deep, understanding? Do you want your research to be used by policymakers or others to shape social life, or is this project more about exploring your curiosities? Your answers to each of these questions will shape your research design.

Exploration, description, and explanation

In the beginning phases, you’ll need to decide whether your research will be exploratory, descriptive, or explanatory. Each has a different purpose, so how you design your research project will be determined in part by this decision.

Researchers conducting exploratory research are typically in the early stages of examining their topics. These sorts of projects are usually conducted when a researcher wants to test the feasibility of conducting a more extensive study and to figure out the “lay of the land” with respect to the particular topic. Perhaps very little prior research has been conducted on this subject. If this is the case, a researcher may wish to do some exploratory work to learn what method to use in collecting data, how best to approach research subjects, or even what sorts of questions are reasonable to ask. A researcher wanting to simply satisfy their curiosity about a topic could also conduct exploratory research. For example, an exploratory study may be a suitable step toward understanding the relatively new phenomenon of college students' addictions to their electronic gadgets.

It is important to note that exploratory designs do not make sense for topic areas with a lot of existing research. For example, it would not make much sense to conduct an exploratory study on common interventions for parents who neglect their children because the topic has already been studied extensively. Exploratory questions are best suited to topics that have not been studied. Students may justify an exploratory approach to their project by claiming that there is very little literature on their topic. Most of the time, the student simply needs more direction on where to search; however, each semester a few topics are chosen for which there actually is a lack of literature. Perhaps there would be less available literature if a student set out to study child neglect interventions for parents who identify as transgender or parents who are refugees from the Syrian civil war. In that case, an exploratory design would make sense as there is less literature to guide your study.

Another purpose of research is to describe or define a particular phenomenon, termed descriptive research. For example, a social work researcher may want to understand what it means to be a first-generation college student or a resident in a psychiatric group home. In this case, descriptive research would be an appropriate strategy. A descriptive study of college students’ addictions to their electronic gadgets, for example, might aim to describe patterns in how many hours students use gadgets or which sorts of gadgets students tend to use most regularly.

Researchers at the Princeton Review conduct descriptive research each year when they set out to provide students and their parents with information about colleges and universities around the United States. They describe the social life at a school, the cost of admission, and student-to-faculty ratios among other defining aspects. Although students and parents may be able to obtain much of this information on their own, having access to the data gathered by a team of researchers is much more convenient and less time consuming.

Social workers often rely on descriptive research to tell them about their service area. Keeping track of the number of children receiving foster care services, their demographic makeup (e.g., race, gender), and length of time in care are excellent examples of descriptive research. On a macro-level, the Centers for Disease Control provides a remarkable amount of descriptive research on mental and physical health conditions. In fact, descriptive research has many useful applications, and you probably rely on findings from descriptive research without even being aware.

Finally, social work researchers often aim to explain why particular phenomena work in the way that they do. Research that answers “why” questions is referred to as explanatory research. In this case, the researcher is trying to identify the causes and effects of whatever phenomenon they are studying. An explanatory study of college students’ addictions to their electronic gadgets might aim to understand why students become addicted. Does the addiction have anything to do with their family histories, extracurricular hobbies and activities, or with whom they spend their time? An explanatory study could answer these kinds of questions.

There are numerous examples of explanatory social scientific investigations. For example, the recent work of Dominique Simons and Sandy Wurtele (2010) [3] sought to discover whether receiving corporal punishment from parents led children to turn to violence in solving their interpersonal conflicts with other children. In their study of 102 families with children between the ages of 3 and 7, the researchers found that experiencing frequent spanking did, in fact, result in children being more likely to accept aggressive problem-solving techniques. Another example of explanatory research can be seen in Robert Faris and Diane Felmlee’s (2011) [4] research study on the connections between popularity and bullying. From their study of 8th, 9th, and 10th graders in 19 North Carolina schools, they found that aggression increased as adolescents’ popularity increased. [5]

The choice between descriptive, exploratory, and explanatory research should be made with your research question in mind. What does your question ask? Are you trying to learn the basics about a new area, establish a clear “why” relationship, or define or describe an activity or concept? In the next section, we will explore how each type of research is associated with different methods, paradigms, and forms of logic.

Key Takeaways

- Exploratory research is usually conducted when a researcher has just begun an investigation and wishes to understand the topic generally.

- Descriptive research aims to describe or define the topic at hand.

- Explanatory research aims to explain why particular phenomena work in the way that they do.

Glossary

Descriptive research- describes or defines a particular phenomenon

Explanatory research- explains why particular phenomena work in the way that they do, answers “why” questions

Exploratory research- conducted during the early stages of a project, usually when a researcher wants to test the feasibility of conducting a more extensive study

Learning Objectives

- Develop and revise questions that focus your inquiry

- Create a concept map that demonstrates the relationships between concepts

Once you have selected your topic area and reviewed literature related to it, you may need to narrow it down to something that can be realistically researched and answered. In the last section, we learned about asking who, what, when, where, why, and how questions. As you read more about your topic area, the focus of your inquiry should become more specific and clearer. As a result, you might begin to ask questions that describe a phenomenon, compare one phenomenon with another, or probe the relationship between two concepts.

You might begin by asking a series of PICO questions. Although the PICO method is used primarily in the health sciences, it can also be useful for narrowing/refining a research question in the social sciences. A way to formulate an answerable question using the PICO model could look something like this:

- Patient, population or problem: What are the characteristics of the patient or population? (e.g., gender, age, other demographics) What is the social problem or diagnosis you are interested in? (e.g., poverty or substance use disorder)

- Intervention or exposure: What do you want to do with the patient, person, or population (e.g., treat, diagnose, observe)? For example, you may want to observe a client’s behavior or a reaction to a specific type of treatment.

- Comparison: What is the alternative to the intervention? (e.g., other therapeutic interventions, programs, or policies) For example, how does a sample group that is assigned to mandatory rehabilitation compare to a sample group assigned to an intervention that builds motivation to enter treatment voluntarily?

- Outcome: What are the relevant outcomes? (e.g., academic achievement, healthy relationships, shame) For example, how does recognizing triggers for trauma flashbacks impact the target population?

Some examples of how the PICO method is used to refine a research question include:

- “Can music therapy improve communication skills in students diagnosed with autism spectrum disorder?”

  - Population (autistic students)

  - Intervention (music therapy)

- “How effective are antidepressant medications in reducing symptoms of anxiety and depression?”

  - Population (clients with anxiety and depression)

  - Intervention (antidepressants)

- “How does race impact help-seeking behaviors for students with mental health diagnoses?”

  - Population (students with mental health diagnoses, students of minority races)

  - Comparison (students of different races)

  - Outcome (seeking help for mental health issues)

Another mnemonic technique used in the social sciences for narrowing a topic is SPICE. An example of how SPICE factors can be used to develop a research question is given below:

Setting – for example, a college campus

Perspective – for example, college students

Intervention – for example, text message reminders

Comparisons – for example, telephone message reminders

Evaluation – for example, the number of cigarettes used after a text message reminder compared with the number used after a telephone reminder

Developing a concept map

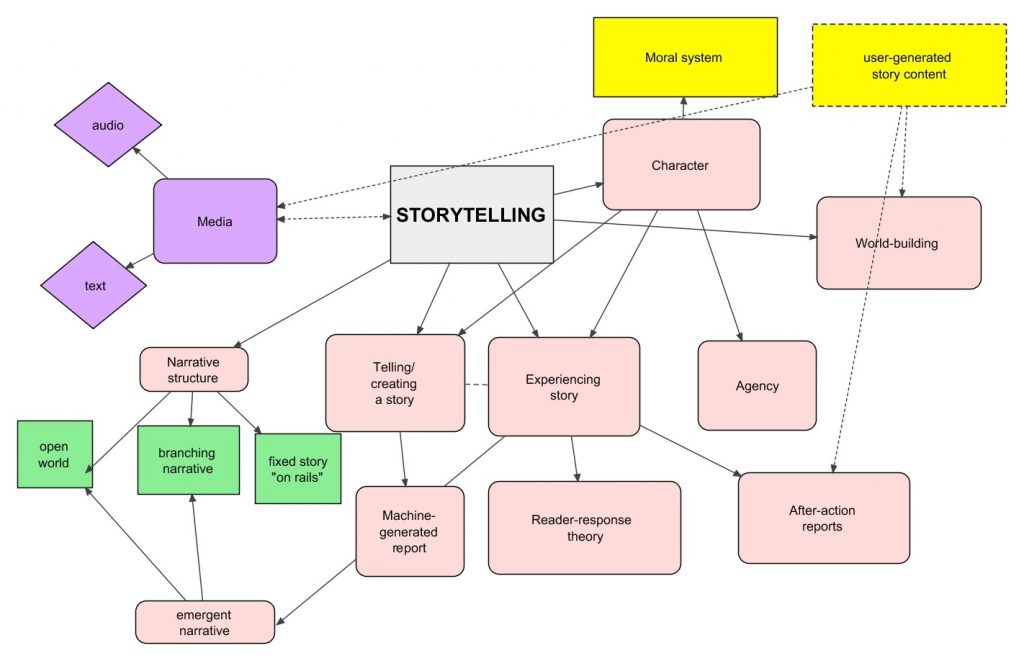

Likewise, developing a concept map or mind map around your topic may help you analyze your question and determine more precisely what you want to research. Using this technique, start with the broad topic, issue, or problem, and begin writing down all the words, phrases and ideas related to that topic that come to mind and then ‘map’ them to the original idea. This technique is illustrated in Figure 3.2.

Concept mapping aims to improve the “description of the breadth and depth of literature in a domain of inquiry. It also facilitates identification of the number and nature of studies underpinning mapped relationships among concepts, thus laying the groundwork for systematic research reviews and meta-analyses” (Lesley, Floyd, & Oermann, 2002, p. 229). [7] Its purpose, like the other methods of question refining, is to help you organize, prioritize, and integrate material into a workable research area; one that is interesting, answerable, feasible, objective, scholarly, original, and clear.

The process of concept mapping is beneficial when you begin your own literature review, as it will help you to come up with keywords and concepts related to your topic. Concept mapping can also be helpful when creating a topical outline or drafting your literature review, as it demonstrates the importance of each concept and sub-concept as well as the relationships among them.

For example, perhaps your initial idea or interest is how to prevent obesity. After an initial search of the relevant literature, you realize the topic of obesity is too broad to adequately cover in the time you have to do your literature review. You decide to narrow your focus to causes of childhood obesity. Using PICO factors, you further narrow your search to the influence of family factors on overweight children. A potential research question might then be “What maternal factors are associated with toddler obesity in the United States?” You’re now ready to begin searching the literature for studies, reports, cases, and other information sources that relate to this question.

Similarly, for a broad topic like school performance or grades, and after an initial literature search that provides some variables, examples of a narrow research question might be:

- “To what extent does parental involvement in children’s education relate to school performance over the course of the early grades?”

- “Do parental involvement levels differ by family, social, demographic, and contextual characteristics?”

- “What forms of parent involvement are most highly correlated with children’s outcomes? What factors might influence the extent of parental involvement?” (Early Childhood Longitudinal Program, 2011). [8]

In either case, your literature search, working question, and understanding of the topic are constantly changing as your knowledge of the topic deepens. Conducting a literature review is an iterative process, as it stops, starts, and loops back on itself multiple times before completion. As research is a practice behavior of social workers, you should apply the same type of critical reflection to your inquiry as you would to your clinical or macro practice.

Key Takeaways

- As you read more articles, you should revise your original question to make it more focused and clear.

- You can further develop the important concepts and relationships for your project by using concept maps and the PICO/SPICE frameworks.

Whether you plan to engage in clinical, administrative, or policy practice, all social workers must be able to look at the available literature on a topic and synthesize the relevant facts into a coherent review. Literature reviews can have powerful effects. For example, a concise literature review can provide the factual basis for a new program or policy in an agency or government entity. In your own research proposal, conducting a thorough literature review will help you build strong arguments for why your topic is important and why your research question must be answered.

Chapter outline

- 4.1 What is a literature review?

- 4.2 Synthesizing literature

- 4.3 Writing the literature review

Content advisory

This chapter discusses or mentions the following topics: homelessness, suicide, depression, LGBTQ oppression, drug use, and psychotic disorders.

Learning Objectives

- Find a topic to investigate

- Create a working question

Choosing a social work research topic

According to the Action Network for Social Work Education and Research (ANSWER), social work research is conducted to benefit “consumers, practitioners, policymakers, educators, and the general public through the examination of societal issues” (ANSWER, n.d., para. 2). [9] Common social issues that are studied include “health care, substance abuse, community violence, family issues, child welfare, aging, well-being and resiliency, and the strengths and needs of underserved populations” (ANSWER, n.d., para. 2). This list is certainly not exhaustive. Social workers may study any area that impacts their practice. However, the unifying feature of social work research is its focus on promoting the well-being of target populations.

If you are an undergraduate social work student who is not yet practicing social work, then how do you identify a researchable topic? Part of the joy in being a social work student is figuring out what areas of social work are appealing to you. Perhaps there are certain theories that speak to you, based on your values or experiences. Perhaps there are social issues you wish to change. Perhaps there are certain groups of people you want to help. Perhaps there are clinical interventions that interest you. Any one of these areas is a good place to start. At the beginning of a research project, your focus should be finding a social work topic that is interesting enough to spend a semester reading and writing about.

A good topic selection plan begins with a general orientation into the subject you are interested in pursuing in more depth. Here are some suggestions when choosing a topic area:

- Pick an area of interest or experience, or an area where you know there is a need for more research.

- It may be easier to start with “what” and “why” questions and expand on those. For example, what are the best methods of treating severe depression? Or why are people receiving SNAP more likely to be obese?

- If you already have practice experience in social work through employment, an internship, or volunteer work, think about practice issues you noticed in the placement.

- Ask a professor, preferably one active in research, about possible topics.

- Read departmental information on research interests of the faculty. Faculty research interests vary widely, and it might surprise you what they’ve published on in the past. Most departmental websites post the curriculum vitae, or CV, of faculty which lists their publications, credentials, and interests.

- Read a research paper that interests you. The paper’s literature review or background section will provide insight into the research question the author was seeking to address with their study. Is the research incomplete, imprecise, biased, or inconsistent? As you’re reading the paper, look for what’s missing. These may be “gaps in the literature” that you might explore in your own study. The conclusion or discussion section at the end may also offer some questions for future exploration. A recent blog posting in Science (Pain, 2016) [10] provides several tips from researchers and graduate students on how to effectively read these papers.

- Think about papers you enjoyed researching and writing in other classes. A research class is unique in that it asks you to use the tools of social science to think in more depth about a topic. It will bring a new perspective that will deepen your knowledge of the topic.

- Identify and browse journals related to your research interests. Faculty and librarians can help you identify relevant journals in your field and specific areas of interest.

How do you feel about your topic?

Perhaps you have started with a specific population in mind, such as youth who identify as LGBTQ or visitors of a local health clinic. Perhaps you choose to start with a specific social problem, such as gang violence, or social policy or program, such as zero-tolerance policies in schools. Alternately, maybe there are interventions that you are interested in learning more about, such as dialectical behavioral therapy or applied behavior analysis. Your motivation for choosing a topic does not have to be objective. Because social work is a values-based profession, social work researchers often find themselves motivated to conduct research that furthers social justice or fights oppression. Just because you think a policy is wrong or a group is being marginalized, for example, does not mean that your research will be biased. Instead, it means that you must understand how you feel, why you feel that way, and what would cause you to feel differently about your topic.

Start by asking yourself how you feel about your topic. Be totally honest, and ask yourself whether you believe your perspective is the only valid one. Perhaps yours isn’t the only perspective, but do you believe it is the wisest one? The most practical one? How do you feel about other perspectives on this topic? If you are concerned that you may design a project to only achieve answers that you like and/or cover up findings that you do not like, then you must choose a different topic. For example, a researcher may want to find out whether there is a relationship between intelligence and political affiliation, while holding the personal bias that members of her political party are the most intelligent. Her strong opinion would not be a problem by itself; however, if she feels rage when considering the possibility that the opposing party’s members are more intelligent than those of her party, then the topic is probably too conflicting to use for unbiased research.

It is important to note that strong feelings about a topic are not always problematic. In fact, some of the best topics to research are those that are important to us. What better way to stay motivated than to study something that you care about? You must be able to accept that people will have a different perspective than you do, and try to represent their viewpoints fairly in your research. If you feel prepared to accept all findings, even those that may be unflattering to or distinct from your personal perspective, then perhaps you should intentionally study a topic about which you have strong feelings.

Kathleen Blee (2002) [11] has taken this route in her research. Blee studies hate movement participants, people whose racist ideologies she studies but does not share. You can read her accounts of this research in two of her most well-known publications, Inside Organized Racism and Women of the Klan. Blee’s research is successful because she was willing to report her findings and observations honestly, even those about which she may have strong feelings. Unlike Blee, if you conclude that you cannot accept or share findings that you disagree with, then you should study a different topic. Knowing your own hot-button issues is an important part of self-knowledge and reflection in social work.