3 Conducting Psychology Research in the Real World

Original chapter by Matthias R. Mehl adapted by the Queen’s University Psychology Department

This Open Access chapter was originally written for the NOBA project. Information on the NOBA project can be found below.

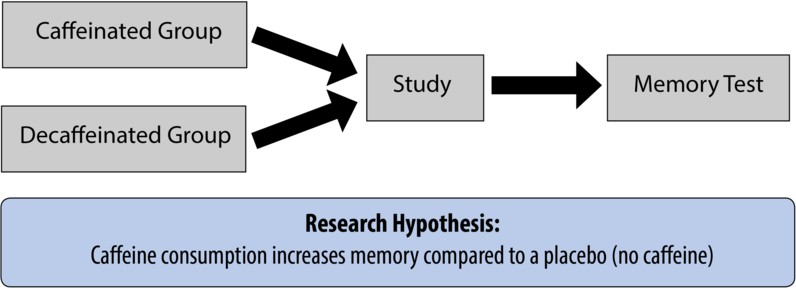

Because of its ability to determine cause-and-effect relationships, the laboratory experiment is traditionally considered the method of choice for psychological science. One downside, however, is that because it carefully controls conditions and their effects, it can yield findings that are out of touch with reality and of limited use for understanding real-world behavior. This module highlights the importance of also conducting research outside the psychology laboratory, within participants’ natural, everyday environments, and reviews existing methodologies for studying daily life.

Learning Objectives

- Identify limitations of the traditional laboratory experiment.

- Explain ways in which daily life research can further psychological science.

- Know what methods exist for conducting psychological research in the real world.

Introduction

The laboratory experiment is traditionally considered the “gold standard” in psychology research. This is because only laboratory experiments can clearly separate cause from effect and therefore establish causality. Despite this unique strength, it is also clear that a scientific field that is mainly based on controlled laboratory studies ends up lopsided. Specifically, it accumulates a lot of knowledge on what can happen—under carefully isolated and controlled circumstances—but it has little to say about what actually does happen under the circumstances that people actually encounter in their daily lives.

For example, imagine you are a participant in an experiment that looks at the effect of being in a good mood on generosity, a topic that may have a good deal of practical application. Researchers create an internally valid, carefully controlled experiment in which they randomly assign you to watch either a happy movie or a neutral movie, and then give you the opportunity to help the researcher out by staying longer and participating in another study. If people in a good mood are more willing to stay and help out, the researchers can feel confident that, since everything else was held constant, your positive mood led you to be more helpful. However, what does this tell us about helping behaviors in the real world? Does it generalize to other kinds of helping, such as donating money to a charitable cause? Would all kinds of happy movies produce this behavior, or only this one? What about other positive experiences that might boost mood, like receiving a compliment or a good grade? And what if you were watching the movie with friends, in a crowded theatre, rather than in a sterile research lab? Taking research out into the real world can help answer some of these sorts of important questions.

As one of the founding fathers of social psychology remarked, “Experimentation in the laboratory occurs, socially speaking, on an island quite isolated from the life of society” (Lewin, 1944, p. 286). This module highlights the importance of going beyond experimentation and also conducting research outside the laboratory (Reis & Gosling, 2010), directly within participants’ natural environments, and reviews existing methodologies for studying daily life.

Rationale for Conducting Psychology Research in the Real World

One important challenge researchers face when designing a study is to find the right balance between ensuring internal validity, or the degree to which a study allows unambiguous causal inferences, and external validity, or the degree to which a study ensures that potential findings apply to settings and samples other than the ones being studied (Brewer, 2000). Unfortunately, these two kinds of validity tend to be difficult to achieve at the same time, in one study. This is because creating a controlled setting, in which all potentially influential factors (other than the experimentally manipulated variable) are controlled, is bound to create an environment that is quite different from what people naturally encounter (e.g., using a happy movie clip to promote helpful behavior). However, it is the degree to which an experimental situation is comparable to the corresponding real-world situation of interest that determines how generalizable potential findings will be. In other words, if an experiment is very far removed from what a person might normally experience in everyday life, you might reasonably question just how useful its findings are.

Because of the incompatibility of the two types of validity, one is often—by design—prioritized over the other. Due to the importance of identifying true causal relationships, psychology has traditionally emphasized internal over external validity. However, in order to make claims about human behavior that apply across populations and environments, researchers complement traditional laboratory research, where participants are brought into the lab, with field research where, in essence, the psychological laboratory is brought to participants. Field studies allow for the important test of how psychological variables and processes of interest “behave” under real-world circumstances (i.e., what actually does happen rather than what can happen). They can also facilitate “downstream” operationalizations of constructs that measure life outcomes of interest directly rather than indirectly.

Take, for example, the fascinating field of psychoneuroimmunology, where the goal is to understand the interplay of psychological factors – such as personality traits or one’s stress level – and the immune system. Highly sophisticated and carefully controlled experiments offer ways to isolate the variety of neural, hormonal, and cellular mechanisms that link psychological variables such as chronic stress to biological outcomes such as immunosuppression (a state of impaired immune functioning; Sapolsky, 2004). Although these studies demonstrate impressively how psychological factors can affect health-relevant biological processes, their research design leaves them mute about the degree to which these factors actually do undermine people’s everyday health in real life. It is certainly important to show that laboratory stress can alter the number of natural killer cells in the blood. But it is equally important to test to what extent the levels of stress that people experience on a day-to-day basis result in them catching a cold more often or taking longer to recover from one. The goal for researchers, therefore, must be to complement traditional laboratory experiments with less controlled studies under real-world circumstances. The term ecological validity is used to refer to the degree to which an effect has been obtained under conditions that are typical for what happens in everyday life (Brewer, 2000). In this example, then, people might keep a careful daily log of how much stress they are under and of physical symptoms such as headaches or nausea. Although many factors beyond stress level may be responsible for these symptoms, this more correlational approach can shed light on how the relationship between stress and health plays out outside of the laboratory.

An Overview of Research Methods for Studying Daily Life

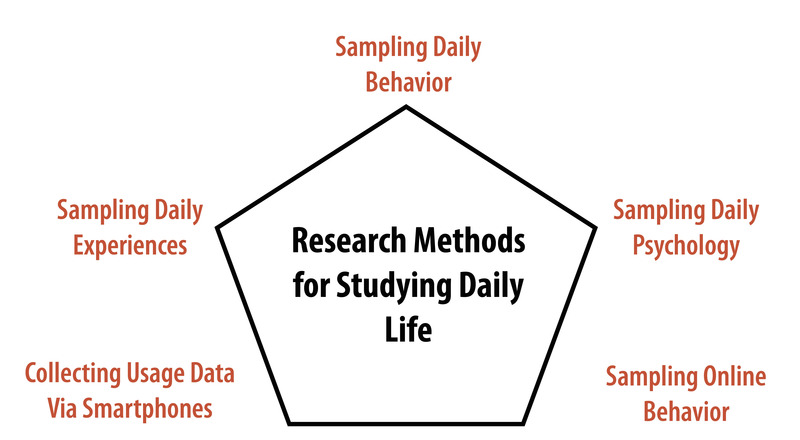

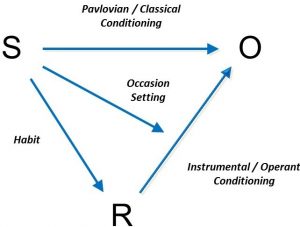

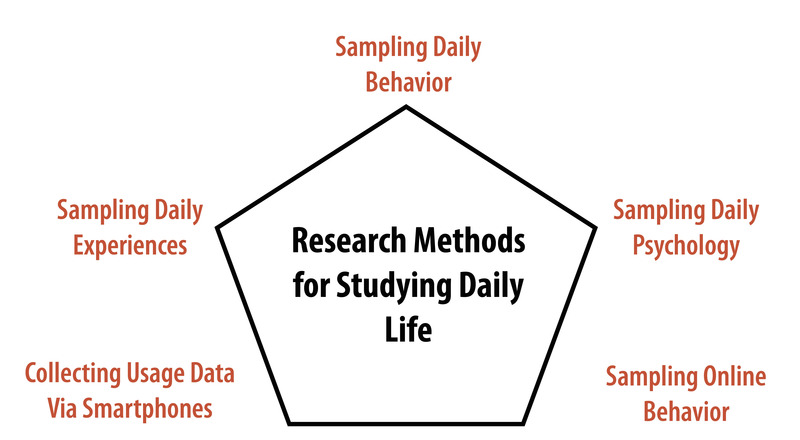

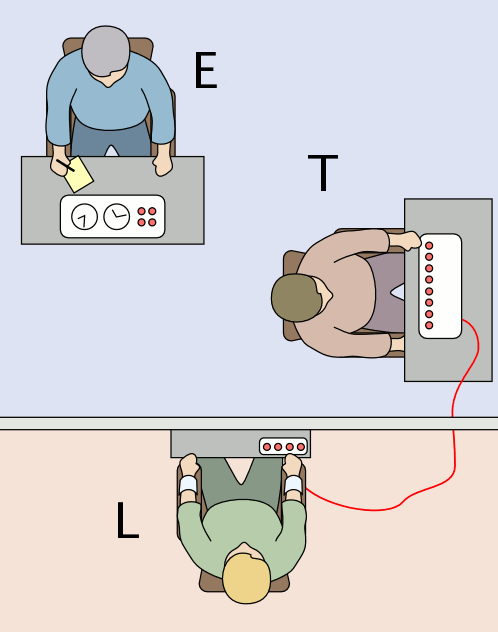

Capturing “life as it is lived” has long been a goal for some researchers. Wilhelm and his colleagues recently published a comprehensive review of early attempts to systematically document daily life (Wilhelm, Perrez, & Pawlik, 2012). Building on these original methods, researchers have, over the past decades, developed a broad toolbox for measuring experiences, behavior, and physiology directly in participants’ daily lives (Mehl & Conner, 2012). Figure 1 provides a schematic overview of the methodologies described below.

Studying Daily Experiences

Starting in the mid-1970s, motivated by a growing skepticism toward highly controlled laboratory studies, a few groups of researchers developed a set of new methods that are now commonly known as the experience-sampling method (Hektner, Schmidt, & Csikszentmihalyi, 2007), ecological momentary assessment (Stone & Shiffman, 1994), or the diary method (Bolger, Davis, & Rafaeli, 2003). Although variations within this set of methods exist, the basic idea behind all of them is to collect in-the-moment (or close-to-the-moment) self-report data directly from people as they go about their daily lives. This is typically accomplished by asking participants repeatedly (e.g., five times per day) over a period of time (e.g., a week) to report on their current thoughts and feelings. The momentary questionnaires often ask about their location (e.g., “Where are you now?”), social environment (e.g., “With whom are you now?”), activity (e.g., “What are you currently doing?”), and experiences (e.g., “How are you feeling?”). That way, researchers get a snapshot of what was going on in participants’ lives at the time at which they were asked to report.

Technology has made this sort of research possible, and recent technological advances have changed the tools researchers can readily use. Initially, participants wore electronic wristwatches that beeped at preprogrammed but seemingly random times, at which point they completed one of a stack of paper questionnaires provided to them. With the mobile computing revolution, both the prompting and the questionnaire completion were gradually taken over by handheld devices such as smartphones. Being able to collect the momentary questionnaires digitally and with time stamps (i.e., having a record of exactly when participants responded) had major methodological and practical advantages and contributed to experience sampling going mainstream (Conner, Tennen, Fleeson, & Barrett, 2009).
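As an illustration of the preprogrammed but seemingly random prompting described above, the following Python sketch generates a week-long schedule of five prompts per day. It is a minimal sketch under stated assumptions, not the procedure of any particular study: the 9:00 to 21:00 waking window, the hypothetical `make_schedule` function, and the stratified-random design (one random prompt inside each equal block of the waking day) are illustrative choices.

```python
import random
from datetime import date, datetime, time, timedelta


def make_schedule(start, days=7, prompts_per_day=5,
                  wake_hour=9, sleep_hour=21, seed=None):
    """Return a sorted list of prompt times (hypothetical helper).

    The waking window is split into `prompts_per_day` equal blocks and one
    prompt is drawn at a random minute inside each block, so prompts are
    unpredictable but still spread across the whole day.
    """
    rng = random.Random(seed)                    # seeded for reproducibility
    window = (sleep_hour - wake_hour) * 60       # waking minutes per day
    block = window // prompts_per_day            # minutes per block
    schedule = []
    for d in range(days):
        day_start = datetime.combine(start + timedelta(days=d), time(wake_hour))
        for b in range(prompts_per_day):
            offset = b * block + rng.randrange(block)  # random minute in block b
            schedule.append(day_start + timedelta(minutes=offset))
    return schedule


if __name__ == "__main__":
    for prompt in make_schedule(date(2024, 3, 4), days=1, seed=1):
        print(prompt.strftime("%a %H:%M"))
```

Splitting the day into blocks keeps prompts unpredictable for the participant while guaranteeing coverage of the morning, afternoon, and evening; a purely random draw could cluster several prompts within one hour. Two prompts near a block boundary can still fall close together, so a real study might add a minimum-gap rule.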

Over time, experience sampling and related momentary self-report methods have become very popular, and by now they are effectively the gold standard for studying daily life. They have helped make progress in almost all areas of psychology (Mehl & Conner, 2012). Because these methods yield many measurements from many participants, they have also inspired the development of novel statistical methods (Bolger & Laurenceau, 2013). Finally, and maybe most importantly, they accomplished what they set out to accomplish: to bring attention to what psychology ultimately wants and needs to know about, namely “what people actually do, think, and feel in the various contexts of their lives” (Funder, 2001, p. 213). In short, these approaches have allowed researchers to do research that is more externally valid, or more generalizable to real life, than the traditional laboratory experiment.

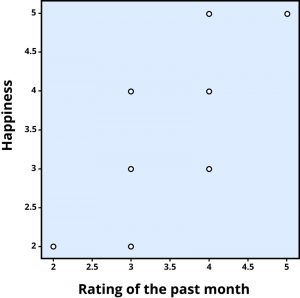

To illustrate these techniques, consider a classic study by Stone, Reed, and Neale (1987), who tracked positive and negative experiences surrounding a respiratory infection using daily experience sampling. They found that undesirable experiences peaked and desirable ones dipped about four to five days before participants came down with a cold. More recently, Killingsworth and Gilbert (2010) collected momentary self-reports from more than 2,000 participants via a smartphone app. They found that participants were less happy when their minds were in an idling, mind-wandering state, such as surfing the Internet or multitasking at work, than when they were in an engaged, task-focused one, such as working diligently on a paper. These are just two examples that illustrate how experience-sampling studies have yielded findings that could not be obtained with traditional laboratory methods.

Recently, the day reconstruction method (DRM; Kahneman, Krueger, Schkade, Schwarz, & Stone, 2004) has been developed to obtain information about a person’s daily experiences without the burden of collecting momentary experience-sampling data. In the DRM, participants first systematically reconstruct the previous day and then report retrospectively on their experiences during it. As a participant in this type of study, you might look back on yesterday and divide it into a series of episodes such as “made breakfast,” “drove to work,” “had a meeting,” and so on. You might then report who you were with in each episode and how you felt in each. This approach has shed light on what situations lead to moments of positive and negative mood throughout the course of a normal day.
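To make the structure of DRM data concrete, the Python sketch below represents each reconstructed episode as a record and summarizes a day by weighting each episode's feelings by how long it lasted. This is a hedged illustration, not the published DRM scoring procedure: the `Episode` fields, the 0 to 6 rating scales, the duration weighting, and the three example episodes are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """One reconstructed episode of the previous day (hypothetical fields)."""
    label: str            # participant's own name for the episode
    start_min: int        # start time, in minutes after midnight
    end_min: int          # end time, in minutes after midnight
    companions: tuple     # who the participant was with, e.g. ("coworkers",)
    positive: int         # assumed 0-6 rating of positive feelings
    negative: int         # assumed 0-6 rating of negative feelings

    @property
    def minutes(self) -> int:
        return self.end_min - self.start_min


def duration_weighted_affect(episodes):
    """Average positive and negative ratings, weighting each episode by its length."""
    total = sum(e.minutes for e in episodes)
    if total == 0:
        raise ValueError("episodes must have positive total duration")
    pos = sum(e.positive * e.minutes for e in episodes) / total
    neg = sum(e.negative * e.minutes for e in episodes) / total
    return pos, neg


yesterday = [
    Episode("made breakfast", 420, 450, ("partner",), positive=5, negative=1),
    Episode("drove to work", 450, 480, (), positive=2, negative=3),
    Episode("had a meeting", 540, 600, ("coworkers",), positive=3, negative=2),
]
print(duration_weighted_affect(yesterday))  # (3.25, 2.0)
```

Weighting by duration means the hour-long meeting counts twice as much as the half-hour breakfast; an unweighted average of the same three episodes would treat them equally.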

Studying Daily Behavior

Experience sampling is often used to study everyday behavior (i.e., daily social interactions and activities). In the laboratory, behavior is best studied using direct behavioral observation (e.g., video recordings). In the real world, this is, of course, much more difficult. As Funder put it, it seems it would require a “detective’s report [that] would specify in exact detail everything the participant said and did, and with whom, in all of the contexts of the participant’s life” (Funder, 2007, p. 41).

As difficult as this may seem, Mehl and colleagues have developed a naturalistic observation methodology that is similar in spirit. Rather than following participants—like a detective—with a video camera (see Craik, 2000), they equip participants with a portable audio recorder that is programmed to periodically record brief snippets of ambient sounds (e.g., 30 seconds every 12 minutes). Participants carry the recorder (originally a microcassette recorder, now a smartphone app) on them as they go about their days and return it at the end of the study. The recorder provides researchers with a series of sound bites that, together, amount to an acoustic diary of participants’ days as they naturally unfold—and that constitute a representative sample of their daily activities and social encounters. Because it is somewhat similar to having the researcher’s ear at the participant’s lapel, they called their method the electronically activated recorder, or EAR (Mehl, Pennebaker, Crow, Dabbs, & Price, 2001). The ambient sound recordings can be coded for many things, including participants’ locations (e.g., at school, in a coffee shop), activities (e.g., watching TV, eating), interactions (e.g., in a group, on the phone), and emotional expressions (e.g., laughing, sighing). As unnatural or intrusive as it might seem, participants report that they quickly grow accustomed to the EAR and say they soon find themselves behaving as they normally would.
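The example sampling rate above (30 seconds every 12 minutes) determines how much of a participant's day the EAR actually captures. The short Python sketch below works through that arithmetic; the 16-hour waking day is an assumption for illustration, and `ear_coverage` is a hypothetical helper name.

```python
def ear_coverage(snippet_s=30, interval_min=12, waking_hours=16):
    """Return (snippets per day, minutes of audio per day, fraction of time recorded)."""
    per_hour = 60 / interval_min                  # recordings started per hour
    snippets = int(waking_hours * per_hour)       # snippets per waking day
    audio_min = snippets * snippet_s / 60         # total minutes of audio per day
    fraction = snippet_s / (interval_min * 60)    # share of each interval recorded
    return snippets, audio_min, fraction


n, minutes, share = ear_coverage()
print(f"{n} snippets, {minutes:.0f} min of audio, {share:.1%} of waking time")
```

Under these assumptions the recorder yields 80 snippets, or 40 minutes of audio, per waking day while capturing about 4.2% of the time, so the result is a sample of the day rather than a continuous recording.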

In a cross-cultural study, Ramírez-Esparza and her colleagues used the EAR method to study sociability in the United States and Mexico. Interestingly, they found that although American participants rated themselves significantly higher than Mexicans on the question, “I see myself as a person who is talkative,” they actually spent almost 10 percent less time talking than Mexicans did (Ramírez-Esparza, Mehl, Álvarez Bermúdez, & Pennebaker, 2009). In a similar way, Mehl and his colleagues used the EAR method to debunk the long-standing myth that women are considerably more talkative than men. Using data from six different studies, they showed that both sexes use on average about 16,000 words per day. The estimated sex difference of 546 words was trivial compared to the immense range of more than 46,000 words between the least and most talkative individual (695 versus 47,016 words; Mehl, Vazire, Ramírez-Esparza, Slatcher, & Pennebaker, 2007). Together, these studies demonstrate how naturalistic observation can be used to study objective aspects of daily behavior and how it can yield findings quite different from what other methods yield (Mehl, Robbins, & Deters, 2012).
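The claim that a 546-word sex difference is trivial can be made concrete with a little arithmetic. The Python snippet below uses only the figures reported in the paragraph above (the 16,000-word typical day is the rounded average stated there) to express the difference relative to a typical day and to the range between the least and most talkative individuals.

```python
# Figures as summarized in the text above (Mehl et al., 2007).
sex_difference = 546          # estimated sex difference in words per day
typical_day = 16_000          # approximate daily word count for both sexes
least, most = 695, 47_016     # least and most talkative individuals

spread = most - least         # words separating the two extremes

print(f"range between individuals: {spread:,} words")
print(f"sex difference vs. a typical day: {sex_difference / typical_day:.1%}")
print(f"sex difference vs. the range: {sex_difference / spread:.1%}")
```

The range works out to 46,321 words, and the sex difference amounts to about 3.4% of a typical day's words and about 1.2% of the range between individuals.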

A series of other methods and creative ways for assessing behavior directly and unobtrusively in the real world are described in a seminal book on real-world, subtle measures (Webb, Campbell, Schwartz, Sechrest, & Grove, 1981). For example, researchers have used time-lapse photography to study the flow of people and the use of space in urban public places (Whyte, 1980). More recently, they have observed people’s personal (e.g., dorm rooms) and professional (e.g., offices) spaces to understand how personality is expressed and detected in everyday environments (Gosling, Ko, Mannarelli, & Morris, 2002). They have even systematically collected and analyzed people’s garbage to measure what people actually consume (e.g., empty alcohol bottles or cigarette boxes) rather than what they say they consume (Rathje & Murphy, 2001). Because people often cannot and sometimes may not want to accurately report what they do, the direct—and ideally nonreactive—assessment of real-world behavior is of high importance for psychological research (Baumeister, Vohs, & Funder, 2007).

Studying Daily Physiology

In addition to studying how people think, feel, and behave in the real world, researchers are also interested in how our bodies respond to the fluctuating demands of our lives. What are the daily experiences that make our “blood boil”? How do our neurotransmitters and hormones respond to the stressors we encounter in our lives? What physiological reactions do we show to being loved, or to being ostracized? You can see how studying these powerful experiences in real life, as they actually happen, may provide richer and more informative data than one might obtain in an artificial laboratory setting that merely mimics these experiences.

Also, in pursuing these questions, it is important to keep in mind that what is stressful, engaging, or boring for one person might not be so for another. It is, in part, for this reason that researchers have found only limited correspondence between how people respond physiologically to a standardized laboratory stressor (e.g., giving a speech) and how they respond to stressful experiences in their lives. To give an example, Wilhelm and Grossman (2010) describe a participant who showed rather minimal heart rate increases in response to a laboratory stressor (about five to 10 beats per minute) but quite dramatic increases (almost 50 beats per minute) later in the afternoon while watching a soccer game. Of course, the reverse pattern can happen as well, such as when patients have high blood pressure in the doctor’s office but not in their home environment—the so-called white coat hypertension (White, Schulman, McCabe, & Dey, 1989).

Ambulatory physiological monitoring – that is, monitoring physiological reactions as people go about their daily lives – has a long history in biomedical research, and an array of monitoring devices exists (Fahrenberg & Myrtek, 1996). Among the biological signals that can now be measured in daily life with portable signal recording devices are the electrocardiogram (ECG), blood pressure, electrodermal activity (or “sweat response”), body temperature, and even the electroencephalogram (EEG) (Wilhelm & Grossman, 2010). Most recently, researchers have added ambulatory assessment of hormones (e.g., cortisol) and other biomarkers (e.g., immune markers) to the list (Schlotz, 2012). The development of ever more sophisticated ways to track what goes on under our skin as we go about our lives is a fascinating and rapidly advancing field.

In a recent study, Lane, Zareba, Reis, Peterson, and Moss (2011) used experience sampling combined with ambulatory electrocardiography (a so-called Holter monitor) to study how emotional experiences can alter cardiac function in patients with a congenital heart abnormality (long QT syndrome). Consistent with the idea that emotions may, in some cases, be able to trigger a cardiac event, they found that typical daily emotions, in most cases of relatively low intensity, had a measurable effect on ventricular repolarization, an important cardiac indicator that, in these patients, is linked to risk of a cardiac event. In another study, Smyth and colleagues (1998) combined experience sampling with momentary assessment of cortisol, a stress hormone. They found that momentary reports of current or even anticipated stress predicted increased cortisol secretion 20 minutes later. Further, and independent of that, the experience of other kinds of negative affect (e.g., anger, frustration) also predicted higher levels of cortisol, and the experience of positive affect (e.g., happy, joyful) predicted lower levels of this important stress hormone. Taken together, these studies illustrate how researchers can use ambulatory physiological monitoring to study how the little, seemingly trivial or inconsequential experiences in our lives leave objective, measurable traces in our bodily systems.
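Linking a momentary report to a physiological measure taken some minutes later, as in the 20-minute cortisol lag described above, requires aligning two streams of time-stamped data. The Python sketch below shows one simple way to do that alignment; it is an assumption-laden illustration, not the analysis Smyth and colleagues used. The `pair_with_lag` function, the 5-minute matching tolerance, and the example times are all hypothetical.

```python
from bisect import bisect_left


def pair_with_lag(report_times, sample_times, lag=20, tolerance=5):
    """Pair each momentary stress report with the saliva sample taken closest
    to `lag` minutes after it (hypothetical helper).

    Times are minutes since waking; `sample_times` must be sorted. Reports with
    no sample within `tolerance` minutes of the target time are skipped.
    """
    pairs = []
    for report in report_times:
        target = report + lag
        i = bisect_left(sample_times, target)
        # The nearest sample is either the last one before the target
        # or the first one at or after it.
        candidates = [sample_times[j] for j in (i - 1, i) if 0 <= j < len(sample_times)]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda s: abs(s - target))
        if abs(nearest - target) <= tolerance:
            pairs.append((report, nearest))
    return pairs


reports = [0, 60, 180, 300]      # hypothetical stress-report times
samples = [18, 85, 202]          # hypothetical cortisol-sample times
print(pair_with_lag(reports, samples))  # [(0, 18), (60, 85), (180, 202)]
```

The report at minute 300 is dropped because no sample falls near minute 320; discarding unmatched reports, rather than pairing them with a distant sample, keeps the lag close to the one the hypothesis specifies.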

Studying Online Behavior

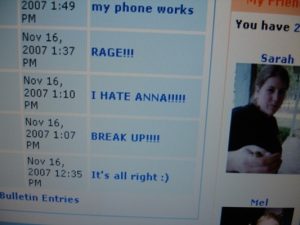

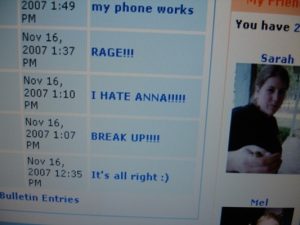

Another domain of daily life that has only recently emerged is virtual daily behavior, or how people act and interact with others on the Internet. Irrespective of whether social media will turn out to be humanity’s blessing or curse (both scientists and laypeople are currently divided over this question), the fact is that people are spending an ever-increasing amount of time online. In light of that, researchers are beginning to treat virtual behavior as being as serious as “actual” behavior and seek to make it a legitimate target of their investigations (Gosling & Johnson, 2010).

One way to study virtual behavior is to make use of the fact that most of what people do on the Web (emailing, chatting, tweeting, blogging, posting) leaves direct and permanent verbal traces. For example, differences in the ways in which people use words (e.g., subtle preferences in word choice) have been found to carry a lot of psychological information (Pennebaker, Mehl, & Niederhoffer, 2003). Therefore, a good way to study virtual social behavior is to study virtual language behavior. Researchers can download people’s (often public) verbal expressions and communications and analyze them using modern text analysis programs (e.g., Pennebaker, Booth, & Francis, 2007).
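Text analysis programs of the kind cited above, such as Linguistic Inquiry and Word Count, work at their core by counting how often words from predefined categories appear in a text. The Python sketch below illustrates that counting idea only. The category names and word lists are hypothetical and far smaller than any real dictionary, `category_percentages` is a hypothetical helper, and the sketch matches whole words only.

```python
import re
from collections import Counter

# Hypothetical mini-dictionary for illustration only; real text-analysis
# dictionaries contain far more words per category.
CATEGORIES = {
    "negative_emotion": {"sad", "afraid", "angry", "hurt", "cry"},
    "social": {"we", "us", "friend", "friends", "family", "talk"},
    "cognitive": {"think", "question", "because", "know", "why"},
}


def category_percentages(text, categories=CATEGORIES):
    """Return, for each category, the percentage of words in `text` it matches."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return {name: 0.0 for name in categories}
    counts = Counter(words)
    return {
        name: 100 * sum(counts[w] for w in vocab) / len(words)
        for name, vocab in categories.items()
    }


post = "We are sad and afraid. I think we need to talk."
for name, pct in category_percentages(post).items():
    print(f"{name}: {pct:.1f}%")
```

Expressing counts as percentages of all words, rather than raw counts, lets short and long posts be compared on the same scale, which matters when tracking the same writers' language before and after an event.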

For example, Cohn, Mehl, and Pennebaker (2004) downloaded blogs of more than a thousand users of LiveJournal.com, one of the first Internet blogging sites, to study how people responded socially and emotionally to the attacks of September 11, 2001. In going “the online route,” they could bypass a critical limitation of coping research: the inability to obtain baseline information, that is, how people were doing before the traumatic event occurred. Through access to the database of public blogs, they downloaded entries from two months before to two months after the attacks. Their linguistic analyses revealed that in the first days after the attacks, participants, as expected, expressed more negative emotions and were more cognitively and socially engaged, asking questions and sending messages of support. Within two weeks, though, their moods and social engagement had returned to baseline, and, interestingly, their use of cognitive-analytic words (e.g., “think,” “question”) even dropped below their normal level. Over the next six weeks, their mood hovered around their pre-9/11 baseline, but both their social engagement and cognitive-analytic processing stayed remarkably low. This suggests a social and cognitive weariness in the aftermath of the attacks. In using virtual verbal behavior as a marker of psychological functioning, this study was able to draw a fine-grained timeline of how humans cope with disasters.

Reflecting their rapidly growing real-world importance, researchers are now beginning to investigate behavior on social networking sites such as Facebook (Wilson, Gosling, & Graham, 2012). Most research looks at psychological correlates of online behavior, such as personality traits and the quality of one’s social life, but, importantly, there are also first attempts to export traditional experimental research designs into an online setting. In a pioneering study of online social influence, Bond and colleagues (2012) experimentally tested the effects that peer feedback has on voting behavior. Remarkably, their sample consisted of 61 million (!) Facebook users. They found that online political-mobilization messages (e.g., “I voted” accompanied by selected pictures of their Facebook friends) influenced real-world voting behavior. This was true not just for users who saw the messages but also for their friends and friends of their friends. Although the intervention effect on a single user was very small, the enormous number of users and indirect social contagion effects together produced an estimated 340,000 additional votes, enough to tilt a close election. In short, although still in its infancy, research on virtual daily behavior is bound to change social science, and it has already helped us better understand both virtual and “actual” behavior.

“Smartphone Psychology”?

A review of research methods for studying daily life would not be complete without a vision of “what’s next.” Given how common they have become, it is safe to predict that smartphones will not just remain devices for everyday online communication but will also become devices for scientific data collection and intervention (Kaplan & Stone, 2013; Yarkoni, 2012). These devices automatically store vast amounts of real-world user interaction data, and, in addition, they are equipped with sensors to track the physical (e.g., location, position) and social (e.g., wireless connections around the phone) context of these interactions. Miller (2012, p. 234) states, “The question is not whether smartphones will revolutionize psychology but how, when, and where the revolution will happen.” Obviously, their immense potential for data collection also brings with it big new challenges for researchers (e.g., privacy protection, data analysis, and synthesis). Yet it is clear that many of the methods described in this module, along with many ways of collecting real-world data that are still to be developed, will in the future become integrated into the devices that people naturally and happily carry with them from the moment they get up in the morning to the moment they go to bed.

Conclusion

This module sought to make a case for psychology research conducted outside the lab. If the ultimate goal of the social and behavioral sciences is to explain human behavior, then researchers must also—in addition to conducting carefully controlled lab studies—deal with the “messy” real world and find ways to capture life as it naturally happens.

Mortensen and Cialdini (2010) refer to the dynamic give-and-take between laboratory and field research as “full-cycle psychology”. Going full cycle, they suggest, means that “researchers use naturalistic observation to determine an effect’s presence in the real world, theory to determine what processes underlie the effect, experimentation to verify the effect and its underlying processes, and a return to the natural environment to corroborate the experimental findings” (Mortensen & Cialdini, 2010, p. 53). To accomplish this, researchers have access to a toolbox of research methods for studying daily life that is now more diverse and more versatile than it has ever been before. So, all it takes is to go ahead and—literally—bring science to life.

Check Your Knowledge

To help you with your studying, we’ve included some practice questions for this module. These questions do not necessarily address all content in this module. They are intended as practice, and you are responsible for all of the content in this module even if there is no associated practice question. To promote deeper engagement with the material, we encourage you to create some questions of your own for your practice. You can then also return to these self-generated questions later in the course to test yourself.

Vocabulary

Ambulatory assessment

An overarching term to describe methodologies that assess the behavior, physiology, experience, and environments of humans in naturalistic settings.

Daily diary method

A methodology where participants complete a questionnaire about their thoughts, feelings, and behavior of the day at the end of the day.

Day reconstruction method (DRM)

A methodology where participants describe their experiences and behavior of a given day retrospectively upon a systematic reconstruction on the following day.

Ecological momentary assessment

An overarching term to describe methodologies that repeatedly sample participants’ real-world experiences, behavior, and physiology in real time.

Ecological validity

The degree to which a study finding has been obtained under conditions that are typical for what happens in everyday life.

Electronically activated recorder, or EAR

A methodology where participants wear a small, portable audio recorder that intermittently records snippets of ambient sounds around them.

Experience-sampling method

A methodology where participants report on their momentary thoughts, feelings, and behaviors at different points in time over the course of a day.

External validity

The degree to which a finding generalizes from the specific sample and context of a study to some larger population and broader settings.

Full-cycle psychology

A scientific approach whereby researchers start with an observational field study to identify an effect in the real world, follow up with laboratory experimentation to verify the effect and isolate the causal mechanisms, and return to field research to corroborate their experimental findings.

Generalize

Generalizing, in science, refers to the ability to arrive at broad conclusions based on a smaller sample of observations. For these conclusions to be true the sample should accurately represent the larger population from which it is drawn.

Internal validity

The degree to which a cause-effect relationship between two variables has been unambiguously established.

Linguistic inquiry and word count

A quantitative text analysis methodology that automatically extracts grammatical and psychological information from a text by counting word frequencies.

Lived day analysis

A methodology where a research team follows an individual around with a video camera to objectively document a person’s daily life as it is lived.

White coat hypertension

A phenomenon in which patients exhibit elevated blood pressure in the hospital or doctor’s office but not in their everyday lives.

References

- Baumeister, R. F., Vohs, K. D., & Funder, D. C. (2007). Psychology as the science of self-reports and finger movements: Whatever happened to actual behavior? Perspectives on Psychological Science, 2, 396–403.

- Bolger, N., & Laurenceau, J.-P. (2013). Intensive longitudinal methods: An introduction to diary and experience sampling research. New York, NY: Guilford Press.

- Bolger, N., Davis, A., & Rafaeli, E. (2003). Diary methods: Capturing life as it is lived. Annual Review of Psychology, 54, 579–616.

- Bond, R. M., Fariss, C. J., Jones, J. J., Kramer, A. D. I., Marlow, C., Settle, J. E., & Fowler, J. H. (2012). A 61-million-person experiment in social influence and political mobilization. Nature, 489, 295–298.

- Brewer, M. B. (2000). Research design and issues of validity. In H. T. Reis & C. M. Judd (Eds.), Handbook of research methods in social psychology (pp. 3–16). New York, NY: Cambridge University Press.

- Cohn, M. A., Mehl, M. R., & Pennebaker, J. W. (2004). Linguistic indicators of psychological change after September 11, 2001. Psychological Science, 15, 687–693.

- Conner, T. S., Tennen, H., Fleeson, W., & Barrett, L. F. (2009). Experience sampling methods: A modern idiographic approach to personality research. Social and Personality Psychology Compass, 3, 292–313.

- Craik, K. H. (2000). The lived day of an individual: A person-environment perspective. In W. B. Walsh, K. H. Craik, & R. H. Price (Eds.), Person-environment psychology: New directions and perspectives (pp. 233–266). Mahwah, NJ: Lawrence Erlbaum Associates.

- Fahrenberg, J., & Myrtek, M. (Eds.) (1996). Ambulatory assessment: Computer-assisted psychological and psychophysiological methods in monitoring and field studies. Seattle, WA: Hogrefe & Huber.

- Funder, D. C. (2001). Personality. Annual Review of Psychology, 52, 197–221.

- Funder, D. C. (2007). The personality puzzle. New York, NY: W. W. Norton & Co.

- Gosling, S. D., & Johnson, J. A. (2010). Advanced methods for conducting online behavioral research. Washington, DC: American Psychological Association.

- Gosling, S. D., Ko, S. J., Mannarelli, T., & Morris, M. E. (2002). A room with a cue: Personality judgments based on offices and bedrooms. Journal of Personality and Social Psychology, 82, 379–398.

- Hektner, J. M., Schmidt, J. A., & Csikszentmihalyi, M. (2007). Experience sampling method: Measuring the quality of everyday life. Thousand Oaks, CA: Sage.

- Kahneman, D., Krueger, A., Schkade, D., Schwarz, N., & Stone, A. (2004). A survey method for characterizing daily life experience: The Day Reconstruction Method. Science, 306, 1776–1780.

- Kaplan, R. M., & Stone, A. A. (2013). Bringing the laboratory and clinic to the community: Mobile technologies for health promotion and disease prevention. Annual Review of Psychology, 64, 471–498.

- Killingsworth, M. A., & Gilbert, D. T. (2010). A wandering mind is an unhappy mind. Science, 330, 932.

- Lane, R. D., Zareba, W., Reis, H., Peterson, D., & Moss, A. (2011). Changes in ventricular repolarization duration during typical daily emotion in patients with Long QT Syndrome. Psychosomatic Medicine, 73, 98–105.

- Lewin, K. (1944). Constructs in psychology and psychological ecology. University of Iowa Studies in Child Welfare, 20, 23–27.

- Mehl, M. R., & Conner, T. S. (Eds.) (2012). Handbook of research methods for studying daily life. New York, NY: Guilford Press.

- Mehl, M. R., Pennebaker, J. W., Crow, M., Dabbs, J., & Price, J. (2001). The electronically activated recorder (EAR): A device for sampling naturalistic daily activities and conversations. Behavior Research Methods, Instruments, and Computers, 33, 517–523.

- Mehl, M. R., Robbins, M. L., & Deters, G. F. (2012). Naturalistic observation of health-relevant social processes: The electronically activated recorder (EAR) methodology in psychosomatics. Psychosomatic Medicine, 74, 410–417.

- Mehl, M. R., Vazire, S., Ramírez-Esparza, N., Slatcher, R. B., & Pennebaker, J. W. (2007). Are women really more talkative than men? Science, 317, 82.

- Miller, G. (2012). The smartphone psychology manifesto. Perspectives on Psychological Science, 7, 221–237.

- Mortenson, C. R., & Cialdini, R. B. (2010). Full-cycle social psychology for theory and application. Social and Personality Psychology Compass, 4, 53–63.

- Pennebaker, J. W., Mehl, M. R., & Niederhoffer, K. (2003). Psychological aspects of natural language use: Our words, our selves. Annual Review of Psychology, 54, 547–577.

- Ramírez-Esparza, N., Mehl, M. R., Álvarez Bermúdez, J., & Pennebaker, J. W. (2009). Are Mexicans more or less sociable than Americans? Insights from a naturalistic observation study. Journal of Research in Personality, 43, 1–7.

- Rathje, W., & Murphy, C. (2001). Rubbish! The archaeology of garbage. New York, NY: Harper Collins.

- Reis, H. T., & Gosling, S. D. (2010). Social psychological methods outside the laboratory. In S. T. Fiske, D. T. Gilbert, & G. Lindzey (Eds.), Handbook of social psychology (5th ed., Vol. 1, pp. 82–114). New York, NY: Wiley.

- Sapolsky, R. (2004). Why zebras don’t get ulcers: A guide to stress, stress-related diseases and coping. New York, NY: Henry Holt and Co.

- Schlotz, W. (2012). Ambulatory psychoneuroendocrinology: Assessing salivary cortisol and other hormones in daily life. In M. R. Mehl & T. S. Conner (Eds.), Handbook of research methods for studying daily life (pp. 193–209). New York, NY: Guilford Press.

- Smyth, J., Ockenfels, M. C., Porter, L., Kirschbaum, C., Hellhammer, D. H., & Stone, A. A. (1998). Stressors and mood measured on a momentary basis are associated with salivary cortisol secretion. Psychoneuroendocrinology, 23, 353–370.

- Stone, A. A., & Shiffman, S. (1994). Ecological momentary assessment (EMA) in behavioral medicine. Annals of Behavioral Medicine, 16, 199–202.

- Stone, A. A., Reed, B. R., & Neale, J. M. (1987). Changes in daily event frequency precede episodes of physical symptoms. Journal of Human Stress, 13, 70–74.

- Webb, E. J., Campbell, D. T., Schwartz, R. D., Sechrest, L., & Grove, J. B. (1981). Nonreactive measures in the social sciences. Boston, MA: Houghton Mifflin Co.

- White, W. B., Schulman, P., McCabe, E. J., & Dey, H. M. (1989). Average daily blood pressure, not office blood pressure, determines cardiac function in patients with hypertension. Journal of the American Medical Association, 261, 873–877.

- Whyte, W. H. (1980). The social life of small urban spaces. Washington, DC: The Conservation Foundation.

- Wilhelm, F. H., & Grossman, P. (2010). Emotions beyond the laboratory: Theoretical fundaments, study design, and analytic strategies for advanced ambulatory assessment. Biological Psychology, 84, 552–569.

- Wilhelm, P., Perrez, M., & Pawlik, K. (2012). Conducting research in daily life: A historical review. In M. R. Mehl & T. S. Conner (Eds.), Handbook of research methods for studying daily life. New York, NY: Guilford Press.

- Wilson, R., Gosling, S. D., & Graham, L. (2012). A review of Facebook research in the social sciences. Perspectives on Psychological Science, 7, 203–220.

- Yarkoni, T. (2012). Psychoinformatics: New horizons at the interface of the psychological and computing sciences. Current Directions in Psychological Science, 21, 391–397.

How to cite this Chapter using APA Style:

Mehl, M. R. (2019). Conducting psychology research in the real world. Adapted for use by Queen’s University. Original chapter in R. Biswas-Diener & E. Diener (Eds.), Noba textbook series: Psychology. Champaign, IL: DEF publishers. Retrieved from http://noba.to/hsfe5k3d

Copyright and Acknowledgment:

This material is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. To view a copy of this license, visit: http://creativecommons.org/licenses/by-nc-sa/4.0/deed.en_US.

This material is attributed to the Diener Education Fund (copyright © 2018) and can be accessed via this link: http://noba.to/hsfe5k3d.

Additional information about the Diener Education Fund (DEF) can be accessed here.

The Nature–Nurture Question

Original chapter by Eric Turkheimer adapted by the Queen's University Psychology Department

This Open Access chapter was originally written for the NOBA project. Information on the NOBA project can be found below.

People have a deep intuition about what has been called the “nature–nurture question.” Some aspects of our behavior feel as though they originate in our genetic makeup, while others feel like the result of our upbringing or our own hard work. The scientific field of behavior genetics attempts to study these differences empirically, either by examining similarities among family members with different degrees of genetic relatedness, or, more recently, by studying differences in the DNA of people with different behavioral traits. The scientific methods that have been developed are ingenious, but often inconclusive. Many of the difficulties encountered in the empirical science of behavior genetics turn out to be conceptual, and our intuitions about nature and nurture get more complicated the harder we think about them. In the end, it is an oversimplification to ask how “genetic” some particular behavior is. Genes and environments always combine to produce behavior, and the real science is in the discovery of how they combine for a given behavior.

Learning Objectives

- Understand what the nature–nurture debate is and why the problem fascinates us.

- Understand why nature–nurture questions are difficult to study empirically.

- Know the major research designs that can be used to study nature–nurture questions.

- Appreciate the complexities of nature–nurture and why questions that seem simple turn out not to have simple answers.

Introduction

There are three related problems at the intersection of philosophy and science that are fundamental to our understanding of our relationship to the natural world: the mind–body problem, the free will problem, and the nature–nurture problem. These great questions have a lot in common. Everyone, even those without much knowledge of science or philosophy, has opinions about the answers to these questions that come simply from observing the world we live in. Our feelings about our relationship with the physical and biological world often seem incomplete. We are in control of our actions in some ways, but at the mercy of our bodies in others; it feels obvious that our consciousness is some kind of creation of our physical brains, yet at the same time we sense that our awareness must go beyond just the physical. This incomplete knowledge of our relationship with nature leaves us fascinated and a little obsessed, like a cat that climbs into a paper bag and then out again, over and over, mystified every time by a relationship between inner and outer that it can see but can’t quite understand.

It may seem obvious that we are born with certain characteristics while others are acquired, and yet of the three great questions about humans’ relationship with the natural world, only nature–nurture gets referred to as a “debate.” In the history of psychology, no other question has caused so much controversy and offense: We are so concerned with nature–nurture because our very sense of moral character seems to depend on it. While we may admire the athletic skills of a great basketball player, we think of his height as simply a gift, a payoff in the “genetic lottery.” For the same reason, no one blames a short person for his height, or blames someone’s congenital disability on poor decisions: To state the obvious, it’s “not their fault.” But we do praise the concert violinist (and perhaps her parents and teachers as well) for her dedication, just as we condemn cheaters, slackers, and bullies for their bad behavior.

The problem is that most human characteristics aren’t as clear-cut as height or instrument mastery, which confirm our nature–nurture expectations strongly one way or the other. In fact, even the great violinist might have some inborn qualities—perfect pitch, or long, nimble fingers—that support and reward her hard work. And the basketball player might have eaten a diet while growing up that promoted his genetic tendency toward being tall. When we think about our own qualities, they seem under our control in some respects, yet beyond our control in others. And often the traits that don’t seem to have an obvious cause are the ones that concern us the most and are far more personally significant. What about how much we drink or worry? What about our honesty, or religiosity, or sexual orientation? They all come from that uncertain zone, neither fixed by nature nor totally under our own control.

One major problem with answering nature–nurture questions about people is, how do you set up an experiment? In nonhuman animals, there are relatively straightforward experiments for tackling nature–nurture questions. Say, for example, you are interested in aggressiveness in dogs. You want to test for the more important determinant of aggression: being born to aggressive dogs or being raised by them. You could mate two aggressive dogs—angry Chihuahuas—together, and mate two nonaggressive dogs—happy beagles—together, then switch half the puppies from each litter between the different sets of parents to raise. You would then have puppies born to aggressive parents (the Chihuahuas) but being raised by nonaggressive parents (the beagles), and vice versa, in litters that mirror each other in puppy distribution. The big questions are: Would the Chihuahua parents raise aggressive beagle puppies? Would the beagle parents raise nonaggressive Chihuahua puppies? Would the puppies’ nature win out, regardless of who raised them? Or would the result be a combination of nature and nurture? Much of the most significant nature–nurture research has been done in this way (Scott & Fuller, 1998), and animal breeders have been doing it successfully for thousands of years. In fact, it is fairly easy to breed animals for behavioral traits.
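The logic of this cross-fostering design can be made concrete with a small calculation. The sketch below uses invented mean aggression scores for the four groups of puppies (hypothetical numbers, not data from Scott and Fuller). It estimates the "nature" effect by comparing birth breeds while holding the rearing parents fixed, and the "nurture" effect by comparing rearing parents while holding the birth breed fixed; the function names are illustrative only.

```python
# Hypothetical mean aggression scores (0-10 scale) for the four groups in a
# cross-fostering design. These numbers are invented for illustration only.
# Keys are (birth_parents, rearing_parents).
MEAN_AGGRESSION = {
    ("chihuahua", "chihuahua"): 8.0,
    ("chihuahua", "beagle"): 6.0,
    ("beagle", "chihuahua"): 4.0,
    ("beagle", "beagle"): 2.0,
}
BREEDS = ("chihuahua", "beagle")


def nature_effect(means: dict) -> float:
    """Average difference between birth breeds, holding rearing parents fixed."""
    diffs = [means[("chihuahua", r)] - means[("beagle", r)] for r in BREEDS]
    return sum(diffs) / len(diffs)


def nurture_effect(means: dict) -> float:
    """Average difference between rearing parents, holding birth breed fixed."""
    diffs = [means[(b, "chihuahua")] - means[(b, "beagle")] for b in BREEDS]
    return sum(diffs) / len(diffs)


print(nature_effect(MEAN_AGGRESSION))   # 4.0
print(nurture_effect(MEAN_AGGRESSION))  # 2.0
```

With these made-up numbers both effects are nonzero, which corresponds to the "combination of nature and nurture" outcome. If either comparison came out to zero, the same two calculations would point toward a purely genetic or purely environmental pattern.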

With people, however, we can’t assign babies to parents at random, or select parents with certain behavioral characteristics to mate, merely in the interest of science (though history does include horrific examples of such practices, in misguided attempts at “eugenics,” the shaping of human characteristics through intentional breeding). In typical human families, children’s biological parents raise them, so it is very difficult to know whether children act like their parents due to genetic (nature) or environmental (nurture) reasons. Despite these restrictions on human experiments, we do see real-world examples of nature–nurture at work in the human sphere, though they provide only partial answers to our many questions.

The science of how genes and environments work together to influence behavior is called behavioral genetics. The easiest opportunity we have to observe this is the adoption study. When children are put up for adoption, the parents who give birth to them are no longer the parents who raise them. This setup isn’t quite the same as the experiments with dogs (children aren’t assigned to random adoptive parents in order to suit the particular interests of a scientist), but adoption still tells us some interesting things, or at least confirms some basic expectations. For instance, if the biological child of tall parents were adopted into a family of short people, do you suppose the child’s growth would be affected? What about the biological child of a Spanish-speaking family adopted at birth into an English-speaking family? What language would you expect the child to speak? And what might these outcomes tell you about the difference between height and language in terms of nature–nurture?

Another option for observing nature–nurture in humans involves twin studies. There are two types of twins: monozygotic (MZ) and dizygotic (DZ). Monozygotic twins, also called “identical” twins, result from a single zygote (fertilized egg) and have the same DNA. They are essentially clones. Dizygotic twins, also known as “fraternal” twins, develop from two zygotes and share, on average, 50% of the genetic variants that differ among people. Fraternal twins are ordinary siblings who happen to have been born at the same time. To analyze nature–nurture using twins, we compare the similarity of MZ and DZ pairs. Sticking with the features of height and spoken language, let’s take a look at how nature and nurture apply: Identical twins, unsurprisingly, are almost perfectly similar for height. The heights of fraternal twins, however, are like any other sibling pairs: more similar to each other than to people from other families, but hardly identical. This contrast between twin types gives us a clue about the role genetics plays in determining height. Now consider spoken language. If one identical twin speaks Spanish at home, the co-twin with whom she is raised almost certainly does too. But the same would be true for a pair of fraternal twins raised together. In terms of spoken language, fraternal twins are just as similar as identical twins, so it appears that the genetic match of identical twins doesn’t make much difference.

Twin and adoption studies are two instances of a much broader class of methods for observing nature–nurture called quantitative genetics, the scientific discipline in which similarities among individuals are analyzed based on how biologically related they are. We can do these studies with siblings and half-siblings, cousins, twins who have been separated at birth and raised separately (Bouchard, Lykken, McGue, & Segal, 1990; such twins are very rare and play a smaller role than is commonly believed in the science of nature–nurture), or with entire extended families (see Plomin, DeFries, Knopik, & Neiderhiser, 2012, for a complete introduction to research methods relevant to nature–nurture).

For better or for worse, contentions about nature–nurture have intensified because quantitative genetics produces a number called a heritability coefficient, varying from 0 to 1, that is meant to provide a single measure of genetics’ influence on a trait. In a general way, a heritability coefficient measures how strongly differences among individuals are related to differences among their genes. But beware: Heritability coefficients, although simple to compute, are deceptively difficult to interpret. Nevertheless, numbers that provide simple answers to complicated questions tend to have a strong influence on the human imagination, and a great deal of time has been spent discussing whether the heritability of intelligence or personality or depression is equal to one number or another.
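The chapter does not give a formula, but one classic textbook approximation for turning twin similarity into a heritability coefficient is Falconer's formula, h² ≈ 2(r_MZ − r_DZ), where r_MZ and r_DZ are the trait correlations within identical and fraternal pairs. It assumes, among other things, that genetic effects are additive and that identical and fraternal pairs share their environments to the same degree, which is one reason such numbers are easy to misread. The correlations below are invented, chosen only to mirror the height and spoken-language contrast described in the twin-study paragraph.

```python
def falconer_heritability(r_mz: float, r_dz: float) -> float:
    """Falconer's approximation of heritability from twin correlations.

    h^2 = 2 * (r_MZ - r_DZ). Identical twins share all their segregating
    genetic variants and fraternal twins about half, so doubling the gap
    between the two correlations estimates the genetic share of the variance.
    Valid only under strong assumptions (additivity, equal environments);
    the raw value can even fall outside 0..1 when those assumptions fail.
    """
    return 2.0 * (r_mz - r_dz)


# Hypothetical twin correlations, invented for illustration.
height_h2 = falconer_heritability(r_mz=0.90, r_dz=0.45)
language_h2 = falconer_heritability(r_mz=0.95, r_dz=0.95)

print(round(height_h2, 2))    # 0.9
print(round(language_h2, 2))  # 0.0
```

The height-like pattern yields a high coefficient and the language-like pattern yields zero, even though learning any language obviously depends on having a human genome.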

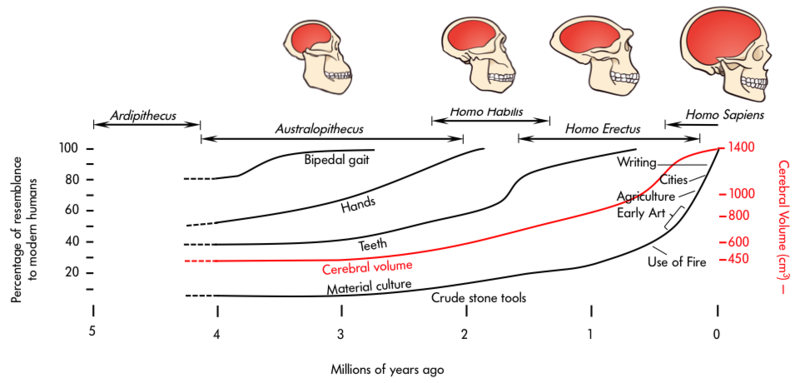

One reason nature–nurture continues to fascinate us so much is that we live in an era of great scientific discovery in genetics, comparable to the times of Copernicus, Galileo, and Newton, with regard to astronomy and physics. Every day, it seems, new discoveries are made, new possibilities proposed. When Francis Galton first started thinking about nature–nurture in the late 19th century, he was strongly influenced by his cousin, Charles Darwin, but genetics per se was unknown. Mendel’s famous work with peas, conducted at about the same time, went unrecognized for more than 30 years; quantitative genetics was developed in the 1920s; the double-helix structure of DNA was described by Watson and Crick in the 1950s; the human genome was sequenced at the turn of the 21st century; and we are now on the verge of being able to obtain the specific DNA sequence of anyone at a relatively low cost. No one knows what this new genetic knowledge will mean for the study of nature–nurture, but as we will see in the next section, answers to nature–nurture questions have turned out to be far more difficult and mysterious than anyone imagined.

What Have We Learned About Nature–Nurture?

It would be satisfying to be able to say that nature–nurture studies have given us conclusive and complete evidence about where traits come from, with some traits clearly resulting from genetics and others almost entirely from environmental factors, such as childrearing practices and personal will; but that is not the case. Instead, everything has turned out to have some footing in genetics. The more genetically related people are, the more similar they are—for everything: height, weight, intelligence, personality, mental illness, etc. Sure, it seems like common sense that some traits have a genetic basis. For example, adopted children resemble their biological parents even if they have never met them, and identical twins are more similar to each other than are fraternal twins. And while certain psychological traits, such as personality or mental illness (e.g., schizophrenia), seem reasonably influenced by genetics, it turns out that the same is true for political attitudes, how much television people watch (Plomin, Corley, DeFries, & Fulker, 1990), and whether or not they get divorced (McGue & Lykken, 1992).

It may seem surprising, but genetic influence on behavior is a relatively recent discovery. In the middle of the 20th century, psychology was dominated by the doctrine of behaviorism, which held that behavior could only be explained in terms of environmental factors. Psychiatry concentrated on psychoanalysis, which probed for roots of behavior in individuals’ early life-histories. The truth is, neither behaviorism nor psychoanalysis is incompatible with genetic influences on behavior, and neither Freud nor Skinner was naive about the importance of organic processes in behavior. Nevertheless, in their day it was widely thought that children’s personalities were shaped entirely by imitating their parents’ behavior, and that schizophrenia was caused by certain kinds of “pathological mothering.” Whatever the outcome of our broader discussion of nature–nurture, the basic fact that the best predictors of an adopted child’s personality or mental health are found in the biological parents he or she has never met, rather than in the adoptive parents who raised him or her, presents a significant challenge to purely environmental explanations of personality or psychopathology. The message is clear: You can’t leave genes out of the equation. But keep in mind, no behavioral traits are completely inherited, so you can’t leave the environment out altogether, either.

Trying to untangle the various ways nature–nurture influences human behavior can be messy, and often common-sense notions can get in the way of good science. One very significant contribution of behavioral genetics that has changed psychology for good can be very helpful to keep in mind: When your subjects are biologically related, no matter how clearly a situation may seem to point to environmental influence, it is never safe to interpret a behavior as wholly the result of nurture without further evidence. For example, when presented with data showing that children whose mothers read to them often are likely to have better reading scores in third grade, it is tempting to conclude that reading to your kids out loud is important to success in school; this may well be true, but the study as described is inconclusive, because there are genetic as well as environmental pathways between the parenting practices of mothers and the abilities of their children. This is a case where “correlation does not imply causation,” as they say. To establish that reading aloud causes success, a scientist can either study the problem in adoptive families (in which the genetic pathway is absent) or find a way to randomly assign children to oral reading conditions.

The outcomes of nature–nurture studies have fallen short of our expectations (of establishing clear-cut bases for traits) in many ways. The most disappointing outcome has been the inability to organize traits from more- to less-genetic. As noted earlier, everything has turned out to be at least somewhat heritable (passed down), yet nothing has turned out to be absolutely heritable, and there hasn’t been much consistency as to which traits are more heritable and which are less heritable once other considerations (such as how accurately the trait can be measured) are taken into account (Turkheimer, 2000). The problem is conceptual: The heritability coefficient, and, in fact, the whole quantitative structure that underlies it, does not match up with our nature–nurture intuitions. We want to know how “important” the roles of genes and environment are to the development of a trait, but in focusing on “important” maybe we’re emphasizing the wrong thing. First of all, genes and environment are both crucial to every trait; without genes the environment would have nothing to work on, and genes, in turn, cannot develop in a vacuum. Even more important, because nature–nurture questions look at the differences among people, the cause of a given trait depends not only on the trait itself, but also on the differences in that trait between members of the group being studied.

The classic example of the heritability coefficient defying intuition is the trait of having two arms. No one would argue against the development of arms being a biological, genetic process. But fraternal twins are just as similar for “two-armedness” as identical twins, resulting in a heritability coefficient of zero for the trait of having two arms. Normally, according to the heritability model, this result (coefficient of zero) would suggest all nurture, no nature, but we know that’s not the case. This result is not a sign that arm development is less genetic than we imagine; it arises because people do not vary in the genes related to arm development, which essentially upends the heritability formula. In fact, in this instance, the opposite is likely true: to the extent that people differ in arm number, the differences are likely the result of accidents and, therefore, environmental. For reasons like these, we always have to be very careful when asking nature–nurture questions, especially when we try to express the answer in terms of a single number. The heritability of a trait is not simply a property of that trait, but a property of the trait in a particular context of relevant genes and environmental factors.
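Behind the twin-based estimate is a definition of heritability as a ratio of variances within a population: the genetic variance divided by the total variance of the trait, Var(G)/Var(P). The sketch below applies that ratio to a hypothetical population of ten people to show why two-armedness comes out at zero: everyone carries the same arm-development genes, so Var(G) is zero, and the only variation in arm number comes from one accident. The function name and the data are illustrative assumptions.

```python
import statistics
from typing import Optional, Sequence


def heritability(genetic_values: Sequence[float], phenotypes: Sequence[float]) -> Optional[float]:
    """Heritability as a variance ratio, Var(G) / Var(P), over one population.

    Returns None when the trait does not vary at all, since the ratio is then
    undefined (0 / 0).
    """
    var_p = statistics.pvariance(phenotypes)
    if var_p == 0:
        return None
    return statistics.pvariance(genetic_values) / var_p


# Hypothetical population of ten people. Everyone's genes specify two arms
# (no genetic variance); one person lost an arm in an accident.
arm_genes = [2] * 10
arm_counts = [2] * 9 + [1]

print(heritability(arm_genes, arm_counts))  # 0.0
print(heritability(arm_genes, [2] * 10))    # None
```

The zero says nothing about whether genes build arms; it only says that, in this population, the genes that build arms do not differ from person to person.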

Another issue with the heritability coefficient is that it divides traits’ determinants into two portions, genes and environment, which are then added together to account for the total variability. This is a little like asking how much of the experience of a symphony comes from the horns and how much from the strings; the way instruments, or genes, integrate is more complex than that. It turns out to be the case that, for many traits, genetic differences affect behavior under some environmental circumstances but not others—a phenomenon called gene-environment interaction, or G x E. In one well-known example, Caspi et al. (2002) found that among maltreated children, those who carried a particular allele of the MAOA gene showed a predisposition to violence and antisocial behavior, while those with other alleles did not. In children who had not been maltreated, by contrast, the gene had no effect. Making matters even more complicated are very recent studies of what is known as epigenetics (see module, “Epigenetics”), a process in which environmental events alter how genes are expressed without changing the DNA sequence itself, and some of those changes may be passed on to children.
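Gene-environment interaction can be pictured as a 2 x 2 table of group averages. The numbers below are invented to show the shape of the pattern Caspi et al. reported (a genotype difference among maltreated children and none among the others); they are not the study's data, and the genotype labels and function name are placeholders. When the effect of genotype depends on the environment like this, there is no single fixed "genetic share" to split off.

```python
# Hypothetical mean antisocial-behavior scores (arbitrary units), invented to
# illustrate the *shape* of a gene-environment interaction. Not real data.
# Keys are (environment, genotype); the genotype labels are placeholders.
MEANS = {
    ("maltreated", "genotype_1"): 7.0,
    ("maltreated", "genotype_2"): 4.0,
    ("not_maltreated", "genotype_1"): 2.0,
    ("not_maltreated", "genotype_2"): 2.0,
}


def genotype_effect(means: dict, environment: str) -> float:
    """Difference between the two genotypes within a single environment."""
    return means[(environment, "genotype_1")] - means[(environment, "genotype_2")]


effect_if_maltreated = genotype_effect(MEANS, "maltreated")
effect_if_not = genotype_effect(MEANS, "not_maltreated")
# Interaction contrast: how much the genotype effect changes across environments.
interaction = effect_if_maltreated - effect_if_not

print(effect_if_maltreated, effect_if_not, interaction)  # 3.0 0.0 3.0
```

A nonzero interaction contrast is the numerical signature of G x E: asking "how much is due to the gene?" has a different answer in each environment.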

Some common questions about nature–nurture are: How susceptible is a trait to change, how malleable is it, and do we “have a choice” about it? These questions are much more complex than they may seem at first glance. For example, phenylketonuria is an inborn error of metabolism caused by a single gene; it prevents the body from metabolizing phenylalanine. Untreated, it causes intellectual disability and death. But it can be treated effectively by a straightforward environmental intervention: avoiding foods containing phenylalanine. Height seems like a trait firmly rooted in our nature and unchangeable, but the average height of many populations in Asia and Europe has increased significantly in the past 100 years, due to changes in diet and the alleviation of poverty. Even the most modern genetics has not provided definitive answers to nature–nurture questions. When it was first becoming possible to measure the DNA sequences of individual people, it was widely thought that we would quickly progress to finding the specific genes that account for behavioral characteristics, but that hasn’t happened. There are a few rare genes that have been found to have significant (almost always negative) effects, such as the single gene that causes Huntington’s disease, or the rare mutations that cause early-onset dementia in a small percentage of Alzheimer’s cases. Aside from these rare genes of great effect, however, the genetic impact on behavior is broken up over many genes, each with very small effects. For most behavioral traits, the effects are so small and distributed across so many genes that we have not been able to catalog them in a meaningful way. In fact, the same is true of environmental effects. We know that extreme environmental hardship causes catastrophic effects for many behavioral outcomes, but fortunately extreme environmental hardship is very rare. Within the normal range of environmental events, those responsible for differences (e.g., why some children in a suburban third-grade classroom perform better than others) are much more difficult to grasp.

The difficulties with finding clear-cut solutions to nature–nurture problems bring us back to the other great questions about our relationship with the natural world: the mind–body problem and free will. Investigations into what we mean when we say we are aware of something reveal that consciousness is not simply the product of a particular area of the brain, nor does choice turn out to be an orderly activity that we can apply to some behaviors but not others. So it is with nature and nurture: What at first may seem to be a straightforward matter, able to be indexed with a single number, becomes more and more complicated the closer we look. The many questions we can ask about the intersection among genes, environments, and human traits—how sensitive are traits to environmental change, and how common are those influential environments; are parents or culture more relevant; how sensitive are traits to differences in genes, and how much do the relevant genes vary in a particular population; does the trait involve a single gene or a great many genes; is the trait more easily described in genetic or more-complex behavioral terms?—may have different answers, and the answer to one tells us little about the answers to the others.

It is tempting to predict that the more we understand the wide-ranging effects of genetic differences on all human characteristics—especially behavioral ones—the more our cultural, ethical, legal, and personal ways of thinking about ourselves will have to change in response. Perhaps criminal proceedings will consider genetic background. Parents, presented with the genetic sequence of their children, will be faced with difficult decisions about reproduction. These hopes or fears are often exaggerated. In some ways, our thinking may need to change—for example, when we consider the meaning behind the fundamental American principle that all men are created equal. Human beings differ, and like all evolved organisms they differ genetically. The Declaration of Independence predates Darwin and Mendel, but it is hard to imagine that Jefferson—whose genius encompassed botany as well as moral philosophy—would have been alarmed to learn about the genetic diversity of organisms. One of the most important things modern genetics has taught us is that almost all human behavior is too complex to be nailed down, even from the most complete genetic information, unless we’re looking at identical twins. The science of nature and nurture has demonstrated that genetic differences among people are vital to human moral equality, freedom, and self-determination, not opposed to them. Mordecai Kaplan said that in Jewish theology the past gets a vote, not a veto; in the same way, genetics gets a vote, not a veto, in the determination of human behavior. We should indulge our fascination with nature–nurture while resisting the temptation to oversimplify it.

Check Your Knowledge

To help you with your studying, we’ve included some practice questions for this module. These questions do not necessarily address all content in this module. They are intended as practice, and you are responsible for all of the content in this module even if there is no associated practice question. To promote deeper engagement with the material, we encourage you to create some questions of your own for your practice. You can then also return to these self-generated questions later in the course to test yourself.

Vocabulary

Adoption study

A behavior genetic research method that involves comparison of adopted children to their adoptive and biological parents.

Behavioral genetics

The empirical science of how genes and environments combine to generate behavior.

Heritability coefficient

An easily misinterpreted statistical construct that purports to measure the role of genetics in the explanation of differences among individuals.

Quantitative genetics

Scientific and mathematical methods for inferring genetic and environmental processes based on the degree of genetic and environmental similarity among organisms.

Twin studies

A behavior genetic research method that involves comparison of the similarity of identical (monozygotic; MZ) and fraternal (dizygotic; DZ) twins.

References

- Bouchard, T. J., Lykken, D. T., McGue, M., & Segal, N. L. (1990). Sources of human psychological differences: The Minnesota study of twins reared apart. Science, 250(4978), 223–228.

- Caspi, A., McClay, J., Moffitt, T. E., Mill, J., Martin, J., Craig, I. W., Taylor, A., & Poulton, R. (2002). Role of genotype in the cycle of violence in maltreated children. Science, 297(5582), 851–854.

- McGue, M., & Lykken, D. T. (1992). Genetic influence on risk of divorce. Psychological Science, 3(6), 368–373.

- Plomin, R., Corley, R., DeFries, J. C., & Fulker, D. W. (1990). Individual differences in television viewing in early childhood: Nature as well as nurture. Psychological Science, 1(6), 371–377.

- Plomin, R., DeFries, J. C., Knopik, V. S., & Neiderhiser, J. M. (2012). Behavioral genetics. New York, NY: Worth Publishers.

- Scott, J. P., & Fuller, J. L. (1998). Genetics and the social behavior of the dog. Chicago, IL: University of Chicago Press.

- Turkheimer, E. (2000). Three laws of behavior genetics and what they mean. Current Directions in Psychological Science, 9(5), 160–164.

How to cite this Chapter using APA Style:

Turkheimer, E. (2019). The nature-nurture question. Adapted for use by Queen's University. Original chapter in R. Biswas-Diener & E. Diener (Eds.), Noba textbook series: Psychology. Champaign, IL: DEF publishers. Retrieved from http://noba.to/tvz92edh

Copyright and Acknowledgment:

This material is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. To view a copy of this license, visit: http://creativecommons.org/licenses/by-nc-sa/4.0/deed.en_US.

This material is attributed to the Diener Education Fund (copyright © 2018) and can be accessed via this link: http://noba.to/tvz92edh.

Additional information about the Diener Education Fund (DEF) can be accessed here.

Preoperational reasoning stage

Period within Piagetian theory from age 2 to 7 years, in which children can represent objects through drawing and language but cannot solve logical reasoning problems, such as the conservation problems.

Concrete operations stage

Piagetian stage between ages 7 and 12 when children can think logically about concrete situations but not engage in systematic scientific reasoning.

Original chapter by David M. Buss adapted by the Queen's Psychology Department

This Open Access chapter was originally written for the NOBA project. Information on the NOBA project can be found below.

Evolution, or change over time, occurs through the processes of natural and sexual selection. In response to problems in our environment, we adapt both physically and psychologically to ensure our survival and reproduction. Sexual selection theory describes how evolution has shaped us to gain a mating advantage rather than just a survival advantage; it operates through two distinct pathways: intrasexual competition and intersexual selection. Gene selection theory, the modern explanation behind evolutionary biology, holds that evolution works through the differential replication of genes. Evolutionary psychology connects evolutionary principles with modern psychology and focuses primarily on psychological adaptations: changes in the way we think that improved our ancestors' survival and reproduction. Two major evolutionary psychological theories are described. Sexual strategies theory describes the psychology of human mating strategies and the ways in which women and men differ in those strategies. Error management theory describes how biases in the way we think evolved when different kinds of errors carried unequal costs.

Learning Objectives

- Learn what “evolution” means.

- Define the primary mechanisms by which evolution takes place.

- Identify the two major classes of adaptations.

- Define sexual selection and its two primary processes.

- Define gene selection theory.

- Understand psychological adaptations.

- Identify the core premises of sexual strategies theory.

- Identify the core premises of error management theory, and provide two empirical examples of adaptive cognitive biases.

Introduction

If you have ever been on a first date, you’re probably familiar with the anxiety of trying to figure out what clothes to wear or what perfume or cologne to put on. In fact, you may even consider flossing your teeth for the first time all year. When considering why you put in all this work, you probably recognize that you’re doing it to impress the other person. But how did you learn these particular behaviors? Where did you get the idea that a first date should be at a nice restaurant or someplace unique? It is possible that we have been taught these behaviors by observing others. It is also possible, however, that these behaviors (the fancy clothes, the expensive restaurant) are biologically programmed into us. That is, just as peacocks display their feathers to show how attractive they are, or some lizards do push-ups to show how strong they are, when we style our hair or bring a gift to a date, we’re trying to communicate to the other person: “Hey, I’m a good mate! Choose me! Choose me!”

However, we all know that our ancestors hundreds of thousands of years ago weren’t driving sports cars or wearing designer clothes to attract mates. So how could someone ever say that such behaviors are “biologically programmed” into us? Well, even though our ancestors might not have been doing these specific actions, these behaviors are the result of the same driving force: the powerful influence of evolution. Yes, evolution: certain traits and behaviors developing over time because they are advantageous to our survival and reproduction. In the case of dating, doing something like offering a gift might represent more than a nice gesture. Just as chimpanzees will give food to mates to show they can provide for them, when you offer gifts to your dates, you are communicating that you have the money or “resources” to help take care of them. And even though the person receiving the gift may not realize it, the same evolutionary forces are influencing his or her behavior as well. The receiver of the gift evaluates not only the gift but also the gift-giver’s clothes, physical appearance, and many other qualities to determine whether the individual is a suitable mate. But because these evolutionary processes are hardwired into us, it is easy to overlook their influence.

To broaden your understanding of evolutionary processes, this module will present some of the most important elements of evolution as they impact psychology. Evolutionary theory helps us piece together the story of how we humans have prospered. It also helps to explain why we behave as we do on a daily basis in our modern world: why we bring gifts on dates, why we get jealous, why we crave our favorite foods, why we protect our children, and so on. Evolution may seem like a historical concept that applies only to our ancient ancestors but, in truth, it is still very much a part of our modern daily lives.

Basics of Evolutionary Theory

Evolution simply means change over time. Many think of evolution as the development of traits and behaviors that allow us to survive this “dog-eat-dog” world, like strong leg muscles to run fast, or fists to punch and defend ourselves. However, physical survival is only important if it eventually contributes to successful reproduction. That is, even if you live to be 100 years old, if you fail to mate and produce children, your genes will die with your body. Thus, reproductive success, not survival success, is the engine of evolution by natural selection. Every mating success by one person means the loss of a mating opportunity for another. Yet every living human being is an evolutionary success story. Each of us is descended from a long and unbroken line of ancestors who triumphed over others in the struggle to survive (at least long enough to mate) and reproduce. Because our ancestors’ genes endured over time, through harsh climates and against predators, we have inherited the adaptive psychological processes that helped them succeed.

At the broadest level, we can think of organisms, including humans, as having two large classes of adaptations, or traits and behaviors that evolved over time to increase our reproductive success. The first class of adaptations is called survival adaptations: mechanisms that helped our ancestors handle the “hostile forces of nature.” For example, in order to survive very hot temperatures, we developed sweat glands to cool ourselves. In order to survive very cold temperatures, we developed shivering mechanisms (the rapid contraction and relaxation of muscles to produce warmth). Another survival adaptation is a craving for fats and sugars, which encourages us to seek out foods rich in them that keep us going longer during food shortages. Some threats, such as snakes, spiders, darkness, heights, and strangers, often produce fear in us, which encourages us to avoid them and thereby stay safe. These are also examples of survival adaptations. However, all of these adaptations are for physical survival, whereas the second class of adaptations is for reproduction and helps us compete for mates. These adaptations are described in an evolutionary theory proposed by Charles Darwin, called sexual selection theory.

Sexual Selection Theory

Darwin noticed that there were many traits and behaviors of organisms that could not be explained by “survival selection.” For example, the brilliant plumage of peacocks should actually lower their rates of survival. That is, the peacocks’ feathers act like a neon sign to predators, advertising “Easy, delicious dinner here!” But if these bright feathers only lower peacocks’ chances at survival, why do they have them? The same can be asked of similar characteristics of other animals, such as the large antlers of male stags or the wattles of roosters, which also seem to be unfavorable to survival. Again, if these traits only make the animals less likely to survive, why did they develop in the first place? And how have these animals continued to survive with these traits over thousands and thousands of years? Darwin’s answer to this conundrum was the theory of sexual selection: the evolution of characteristics, not because of survival advantage, but because of mating advantage.

Sexual selection occurs through two processes. The first, intrasexual competition, occurs when members of one sex compete against each other, and the winner gets to mate with a member of the opposite sex. Male stags, for example, battle with their antlers, and the winner (often the stronger one with larger antlers) gains mating access to the females. That is, even though large antlers make it harder for the stags to run through the forest and evade predators (which lowers their survival success), they provide the stags with a better chance of attracting a mate (which increases their reproductive success). Similarly, human males sometimes also compete against each other in physical contests: boxing, wrestling, karate, or group-on-group sports, such as football. Even though engaging in these activities poses a risk to their survival, as with the stag, the victors are often more attractive to potential mates, increasing their reproductive success. Thus, whatever qualities lead to success in intrasexual competition are passed on with greater frequency because of their association with greater mating success.

The second process of sexual selection is preferential mate choice, also called intersexual selection. In this process, if members of one sex are attracted to certain qualities in mates, such as brilliant plumage, signs of good health, or even intelligence, those desired qualities get passed on in greater numbers, simply because their possessors mate more often. For example, the colorful plumage of peacocks exists due to a long evolutionary history of peahens’ (female peafowl) attraction to males with brilliantly colored feathers.

In all sexually reproducing species, adaptations in both sexes (males and females) exist due to survival selection and sexual selection. However, unlike many other species, in which one sex has dominant control over mate choice, humans have “mutual mate choice.” That is, both women and men typically have a say in choosing their mates. And both sexes value qualities such as kindness, intelligence, and dependability that are beneficial to long-term relationships: qualities that make good partners and good parents.

Gene Selection Theory

In modern evolutionary theory, all evolutionary processes boil down to an organism’s genes. Genes are the basic “units of heredity,” the information passed along in DNA that tells cells how to “build” the organism and, in part, how that organism should behave. Genes that are better able to encourage the organism to reproduce, and thus replicate themselves in the organism’s offspring, have an advantage over competing genes that are less able. For example, take female sloths: in order to attract a mate, they will scream as loudly as they can, to let potential mates know where they are in the thick jungle. Now, consider two variants of a gene in female sloths: one that allows them to scream extremely loudly, and another that only allows them to scream moderately loudly. In this case, the sloth with the variant that allows her to scream louder will attract more mates, increasing her reproductive success, which ensures that her genes are more readily passed on than those of the quieter sloth.
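The sloth example rests on a small reproductive edge compounding over many generations. The sketch below illustrates that arithmetic under a simplified textbook model of one-gene, haploid selection. It assumes no sexual recombination, no random drift, no mutation, and a constant advantage. The “loud” and “quiet” types, the 1% starting share, and the 5% advantage are hypothetical numbers chosen for illustration. They are not data about real sloths.

```python
def next_frequency(p: float, advantage: float) -> float:
    """Share of females carrying the hypothetical 'loud' gene after one
    generation, given its current share p and a proportional advantage."""
    loud = p * (1.0 + advantage)   # offspring left by loud screamers (relative units)
    quiet = (1.0 - p) * 1.0        # offspring left by quieter screamers (baseline)
    return loud / (loud + quiet)   # loud gene's share of the next generation


def simulate(p0: float, advantage: float, generations: int) -> list[float]:
    """Track the loud gene's share across generations, starting from p0."""
    freqs = [p0]
    for _ in range(generations):
        freqs.append(next_frequency(freqs[-1], advantage))
    return freqs


if __name__ == "__main__":
    # Hypothetical start: 1% of females carry the loud gene,
    # and each carrier leaves 5% more offspring than a non-carrier.
    history = simulate(p0=0.01, advantage=0.05, generations=200)
    for gen in (0, 50, 100, 150, 200):
        print(f"generation {gen:3d}: {history[gen]:.3f}")
```

In this model, the odds that a given female carries the loud gene are multiplied by 1.05 every generation. With these made-up numbers, the gene starts out rare and spreads slowly at first. It reaches a little over half of the population around generation 100 and exceeds 99% by generation 200. If the advantage is set to 0, the share stays exactly where it started.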

Essentially, genes can boost their own replicative success in two basic ways. First, they can influence the odds for survival and reproduction of the organism they are in (individual reproductive success or fitness, as in the example with the sloths). Second, genes can also influence the organism to help other organisms that are likely to carry copies of those genes, known as “genetic relatives,” to survive and reproduce (which is called inclusive fitness). For example, why do human parents tend to help their own kids with the financial burdens of a college education and not the kids next door? Well, having a college education increases one’s attractiveness to potential mates, which increases one’s likelihood of reproducing and passing on genes. And because parents’ genes are in their own children (and not the neighborhood children), funding their children’s educations increases the likelihood that the parents’ genes will be passed on.