Humanities Computing

Until the early years of the 21st century, the digital humanities field was also known as humanities computing. The term “humanities computing” is therefore used here when discussing the history of the discipline up to 2004. In the period discussed, the focus is on the computer-assisted analysis and interpretation of textual material.

The historical development presented here is based mostly on the 2004 article by Susan Hockey, a British computer scientist and Emeritus Professor of Library and Information Studies at University College London. It begins in 1966, with the establishment of Computers and the Humanities (CHum), mentioned above. However, a large part of the foundation of the discipline was laid in the 1940s, when Roberto Busa, in collaboration with IBM, began work on the Index Thomisticus, a computer-generated concordance to the writings of Thomas Aquinas. A full “pre-history” of the digital humanities/humanities computing can be found in Hockey’s article (Hockey, 2004).

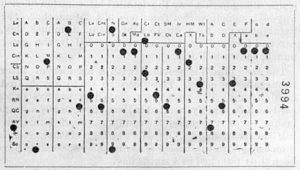

One of the main concerns that humanities scholars faced when adopting computational methods was that data had to be analyzed as either text or numerical values. From the 1950s to the early 1970s, programs and data were entered on punch cards (Hollerith cards). This was a cumbersome undertaking, given that each card held only 80 characters, or one line of upper-case text. Representing characters was a major challenge because of the wide variety of characters present in the manuscripts under investigation. Consequently, lower-case, accented, and non-standard characters were often represented by placing an asterisk or another flag character in front of the upper-case character, while non-Roman alphabets could only be represented in transliteration.
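The flagging convention described above can be illustrated with a minimal Python sketch. It is not a reconstruction of any particular historical scheme: the function names, the choice of `*` as case flag and `#` as accent flag, and the `flagged_case` switch are all assumptions made for illustration, and actual projects differed in which characters they flagged and how.

```python
import unicodedata

CARD_WIDTH = 80  # a punch card held up to 80 characters


def encode_for_card(text, case_flag="*", accent_flag="#", flagged_case="lower"):
    """Fold text to upper-case, prefixing flagged letters with marker characters.

    Hypothetical convention: the flag characters and the rule for which
    case is flagged are assumptions, not a documented historical standard.
    Note that the accent flag records only that an accent was present,
    not which one, so the encoding is lossy.
    """
    out = []
    for ch in text:
        # Decompose an accented letter into base letter + combining marks.
        decomposed = unicodedata.normalize("NFD", ch)
        base = decomposed[0]
        has_accent = any(unicodedata.combining(c) for c in decomposed[1:])
        if not base.isalpha():
            out.append(base)  # digits, spaces, punctuation pass through
            continue
        flags = accent_flag if has_accent else ""
        if flagged_case == "lower":
            needs_case_flag = base.islower()
        else:
            needs_case_flag = base.isupper()
        if needs_case_flag:
            flags += case_flag
        out.append(flags + base.upper())
    return "".join(out)


def split_into_cards(encoded, width=CARD_WIDTH):
    """Cut an encoded string into card-sized chunks of at most `width` characters."""
    return [encoded[i:i + width] for i in range(0, len(encoded), width)]


if __name__ == "__main__":
    line = encode_for_card("Thomas")
    print(line)
    print(len(split_into_cards(line * 20)), "cards needed")
```

Because every flagged letter occupies two or more card columns, a scheme like this reduces the amount of text that fits on each 80-column card, which hints at why character representation was felt to be such a burden.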

Although the limited computer resources of this period meant that little groundbreaking work was done, several humanities computing centers were established in the 1960s, including the Centre for Literary and Linguistic Computing in Cambridge, England (1963), which supported work on Early Middle High German texts. In Tübingen, Germany, a group was formed to develop text-analysis software for the production of critical editions, and some modules of the original software suite, TuStep, are still in use. Advances were nevertheless slow. Most computation was performed in batch processing mode, in which a program and its data were submitted to a queue and processed only when scarce computer resources became available for the job. Users received their results only after the job had waited in the queue, been executed, and been output in a readable format, generally a printout. Input and output devices were also quite slow, which hampered the text- and character-intensive work characteristic of humanities computing. This period also brought to the fore problems that still affect the digital humanities, such as how to represent words as more than simple character strings so that spelling differences and other interpretive issues can be accommodated.