Chapter 20

Enhancing Rationality: Heuristics, Biases, and The Critical Thinking Project

Mark Battersby

1. Introduction

My intention today is to critically explore the implications for the critical thinking movement of the work by cognitive psychologists and behavioral economists commonly known as the heuristics and biases research.

But first I wish to position the critical thinking movement in the long historical tradition of philosophy that has been devoted to the development and spread of rationality. From Socrates to John Dewey, from 5th century BCE Athens to 21st century Windsor, the promotion of rationality has been recognized as a core philosophical project.

It is a project not always adequately respected and appreciated in contemporary professional philosophy. This is in part because critical thinking was seen as remedial, but in fact promoting rationality is a cross-curricular challenge and responsibility. Despite this lack of disciplinary support, the critical thinking movement has grown to the extent that practically everyone now wants students to learn to “think critically” and many post-secondary institutions identify critical thinking as their key learning outcome. Business also wants employees and especially management to think critically. This acceptance and recognition provides those of us in the critical thinking movement with an opportunity and responsibility not far different from that of the philosophers of the Enlightenment. Enlightenment philosophers virtually changed the course of history by advocating for scientific reasoning and rationality to replace the old deference to church and king. What is sometimes known derisively as the Enlightenment Project, for all its overreach, had a momentous and largely beneficial effect on the thinking and politics of western civilization. The critical thinking movement is the inheritor of this project, and I suggest that we now think of the critical thinking movement as the Critical Thinking Project. But for this analogy to be appropriate, critical thinking instruction must expand to include all of rationality.

2. Expanding the Focus of The Critical Thinking Project

The theory then was that the barrier to rationality was ignorance of the rules of rational argument and that with proper instruction in the rules of reasoning and argumentation, students would be able to identify and resist fallacious arguments — it was principally (well almost) “Logical self defense.”

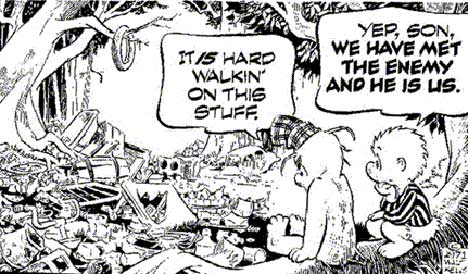

But as the heuristics and biases literature began to permeate the Critical Thinking Project, there was a realization that, as the famous Pogo cartoon reminds us, we are also the problem. Not that this was exactly a new idea. As Socrates admonished, “Know thyself” was a key prerequisite to rational thought.

The heuristics and biases literature focuses primarily on the inherent biases of our cognitive equipment. The identification of this source of erroneous reasoning adds significant insights useful to critical thinking instruction, insights which are now being recognized in the critical thinking/informal logic literature. But before we make use of this research we must subject it to a critical evaluation.

3. Expanding The Critical Thinking Project 2: Rational Decision Making

Despite Harvey Siegel’s claim that a critical thinker is someone “appropriately moved by reason” (Siegel 2013) and Bob Ennis’ definition of critical thinking as “reasonable reflective thinking focused on deciding what to believe or do” (Ennis 1987), critical thinking has historically limited itself to a subset of rationality primarily involving epistemological norms such as identifying and avoiding fallacies, argument analysis and evaluation, and, more recently, reasoned judgment. But rationality and critical thinking include not only deciding what to believe but also what to do, as both Ennis and Siegel indicate. As I and others have argued, critical thinking is not limited to applied epistemology but also includes applied rational decision making.

While the critical thinking movement has failed, by and large, to address rational decision making, neo-classical economics has dominated the concept of rationality as it applies to decision making and used it to promote a narrow-minded, individualistic and self-interested view of rationality known as rational choice theory. The Critical Thinking Project must recover the concept of rationality from the neo-classical economists.

Many of the insights emerging from the heuristic and bias literature are of great use to the Critical Thinking Project. However, the research on decision making biases is undermined by use of the norms of rationality embedded in rational choice theory. I will focus here on the heuristics and biases research on decision making rationality both because it has received less attention than the research on epistemic biases, and more importantly, because this model, which describes rationality as the efficient pursuit of individual self-interest, legitimates an ideological position as if that were rationality itself.

Let me start with the concept of bias.

4. What Is a Bias?

To claim that a person has a bias or is biased in a particular area of judgment is to claim that the person has a tendency to make judgments or engage in actions that violate the appropriate and relevant norms of that area.

Here are a few examples: referees favouring the home team, scientists only attending to supportive information, people believing their experiences to be representative of human experience, favouring male candidates in hiring.

It is obvious that the Achilles heel of this definition is “appropriate and relevant norms.” Short of infinite regress, the norms themselves need rational justification.

The norms of reasoning that are used in the bias and heuristic literature are not limited to the traditional norms of rationality, or the norms of deductive logic. The norms also include the laws of probability theory and norms used in rational choice theory (particularly expected utility). The norms of probability are not contentious, but as indicated, the norms that assume that people should make decisions in accord with expected utility theory, i.e., in line with their long term self-interest, are contentious.

5. Tversky and Kahneman

Two Israeli psychologists, Amos Tversky and Daniel Kahneman, did much of the initial research and created the heuristics and bias nomenclature for this enterprise. Tversky and Kahneman set out to demonstrate the descriptive inaccuracies of the model of human behavior built into neo-classical economics.

As Kahneman recollects:

One day in the early 1970s, Amos handed me a mimeographed essay by a Swiss economist named Bruno Frey, which discussed the psychological assumptions of economic theory. I vividly remember the color of the cover: dark red. Bruno Frey barely recalls writing the piece, but I can still recite its first sentence: “The agent of economic theory is rational, selfish, and his tastes do not change” (Kahneman 2011).

Tversky and Kahneman created a series of ingenious experiments which demonstrated the descriptive inaccuracy of the rational economic agent used in the neo-classical mathematical models of the economy. Their research did not call into question the notion that selfishness was the sole motivation of human behavior, but their research did call into question the extent to which people reasoned in accord with the model of rationality used by economists. In the process, they spawned the vast heuristics and bias research. Their work led to the development of a now widely accepted model of human judgment known as the dual process model. The model, as suggested by the title of Daniel Kahneman’s best-selling review of this literature, Thinking, Fast and Slow (Kahneman 2011), states that we have two modes of judgment: an algorithmic/intuitive mode that is quick and a slower, more reflective mode, the latter being the kind of thinking encouraged in critical thinking courses.

The dominant “fast process” usually serves us well enough and apparently served our ancestors well enough to become genetically embedded in our thinking processes. Of course, not all fast and intuitive processes are “natural.” When we learn to drive a car, we acquire all sorts of quick intuitive processes necessary for effective driving: assessing speed, appropriate following distance, etc. Experts also often learn quick intuitive responses that are reliable, e.g., chess masters. But on some occasions, and especially in probabilistic reasoning, this fast intuitive process tends to lead to erroneous or biased judgments. These biases have been identified in a wide range of experiments by cognitive psychologists.

6. The Great Rationality Debate

As many of you probably know, there was considerable negative reaction to the early work of Tversky and Kahneman, especially to the inference that their studies showed that people were irrational in their probabilistic judgments. There were basically two arguments: 1. that subjects misunderstood the questions about likelihood and therefore their judgments were reasonable given their understanding of the questions, and 2. that the way that people reasoned must by definition be rational so that their answers did not violate relevant norms of rationality. Without going into all the replies, both objections were credibly addressed by the fact that subjects, once they were shown the relevant calculus, understood why their responses were incorrect. In addition, people who were statistically sophisticated and understood the normatively correct answers still felt the pull of their intuitive answers while conceding that the intuitive judgment was incorrect. Similar objections can arise in relation to people’s deviations from the norms of rational choice theory but, as I will show, those objections are more cogent (Stanovich 2011).

7. Epistemic Biases

I shall turn first to the research on epistemic biases. There are two excellent introductions to this material: the best-selling Thinking, Fast and Slow by Daniel Kahneman (2011) and a more academic and comprehensive text, Thinking and Deciding by Jonathan Baron (2000).

Many of the classic experiments are no doubt known to most of you. But let me quickly review the most famous initial results which are also quite relevant to critical thinking. Basically we tend to intuitively judge the likelihood of an event based on a number of factors:

Representativeness: An event that looks like a stereotype is judged to be more likely.

Availability: If the event is easy to imagine, it is judged to be more likely. This ease of imagining can be a function of remembering it happening or remembering hearing about it (the power of the media), or because a description of its happening is plausible (a good story) and easy to imagine.

Vividness: If the event is emotionally powerful, it is judged to be more likely.

Tversky and Kahneman demonstrated that these psychological factors lead to the violation of a basic and quite simple principle of probability, the principle of conjunctive probability: the conjunction of two events is never more probable than either of the events.
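Stated symbolically, the principle can be written as follows. The event labels $A$ and $B$ are introduced here only for illustration and do not appear in the original experiments.

$$
P(A \cap B) \;=\; P(A)\,P(B \mid A) \;\le\; \min\{P(A),\, P(B)\}
$$

The inequality holds because a conditional probability such as $P(B \mid A)$ can never exceed 1, so adding a further condition to an event can only leave its probability unchanged or lower it.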

This tendency is not just common to the statistically naive. For example, when the following problem of choosing which of two events was more likely was given to graduate students, a majority of them committed the classic fallacy of rating the more complex (but easily imagined) event as more likely.

- A massive flood somewhere in North America next year, in which more than 1,000 people drown

- An earthquake in California sometime next year, causing a flood in which more than 1,000 people drown (Kahneman 2011, p.131).

Choosing the second event over the first involves violating the conjunctive rule of probability. But when making most judgments of likelihood, we don’t “do the math.” We make an intuitive judgment on the basis of one or more of the heuristics identified above. Availability and vividness can work together to make an event seem even more likely. All these factors (representativeness, narrative plausibility, availability, and even vividness) come into play to empower what critical thinkers know as the fallacy of appeal to anecdotal evidence.

While philosophy has a long tradition of identifying this fallacy, the experiments of Tversky and Kahneman provide experimental illustrations demonstrating just how ubiquitous and powerful is our natural tendency to believe that our experience is and will be “representative” of such experiences generally. Availability is also a function of plausibility: making a plausible causal story, as in the above example, makes it easier to imagine an event and increases our sense of its likelihood. Ironically, the assumption of representativeness tempts even researchers to overgeneralize from their research to the population in general.

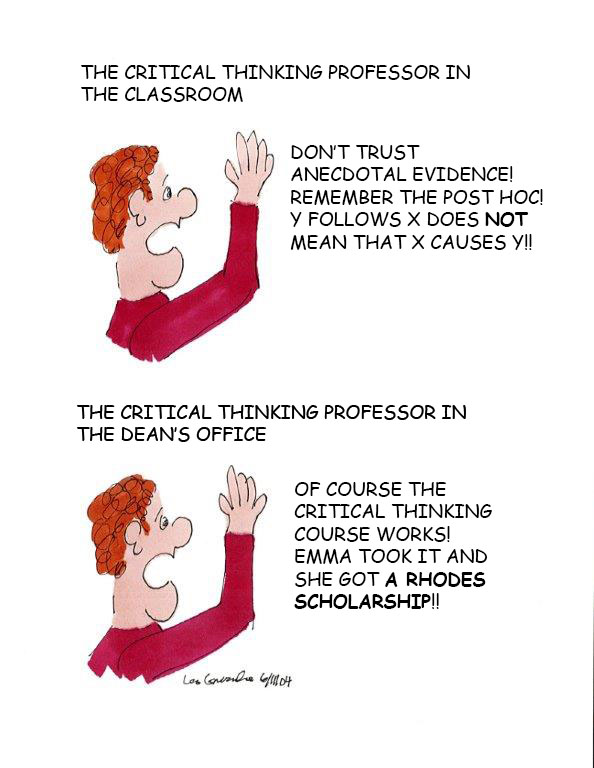

Nor are professors of critical thinking immune from the siren call of anecdotal evidence, as this cartoon by Leo Groake reminds us:

The literature on cognitive biases contains a large number of other epistemic biases relevant to critical thinking, such as:

- base rate neglect,

- anchoring,

- confirmation bias,

- hindsight bias,

- myside bias, etc.

But in this paper I wish to focus on the biases of instrumental rationality that are identified mainly in the research produced by behavioral economists.

8. Instrumental Rationality: Rational Choice Theory and Biases

The norms of rational choice theory, the mathematically elegant theory developed in the early 1950s, provide the theoretic base for most neo-classical economic models. The theory assumes that humans fit (and ought to fit) the model of “homo economicus” or “econs” as they are called in the behavioral economics literature. For econs, all decisions are self-interested, well informed, based on unchanging tastes, and in conformity with expected utility theory. This is the model that horrified Kahneman when he first read of it. Unfortunately, it is these norms that provide the basis for identifying decision making errors and biases.

While economists admit that rational choice theory is an idealization of actual behavior, they have argued that it is no worse an idealization than Newton’s frictionless plane and is equally theoretically useful. Starting in the late 1970s, the claim that rational choice theory was an appropriate way to build a supposedly empirical economic theory was called into question not only by the research of Tversky and Kahneman but also by the emerging field of behavioral economics. The crash of 2008 may well have been the coup de grâce to the view that real-world financial actors such as bankers act rationally. But it is important for our purposes to understand that, while behavioral economists have demonstrated the descriptive inaccuracy of the assumption that humans are “econs,” they still accept the associated norms of rationality. As a result, the biases identified in the heuristics and bias literature as decision making irrationalities presume that the description of humans as econs is the normatively correct description of the “rational person.”

The critiques of econs as appropriate models of human beings and rational choice theory as an appropriate descriptive model of human behavior are long standing. Indeed, the idea that all actions are motivated by self-interest was effectively critiqued by Bishop Butler in the 18th century. Behavioral economists argue that this view of human nature is factually incorrect, but generally fail to criticize the associated norms: their goal is to identify the descriptive inaccuracy of rational choice theory, not to criticize its norms.

For example, the entertaining and insightful behavioral economist, Dan Ariely, states in the introduction to his book, The Upside of Irrationality:

. . . there is a flip side to irrationality, one that is actually quite positive. Sometimes we are fortunate in our irrational abilities because, among other things, they allow us to adapt to new environments, to trust other people, to enjoy expending effort and to love our kids (Ariely 2010, p.12).

How very odd that the abilities described by Ariely should be characterized as irrational. But not odd if you realize the definition of rationality that he is using. As he says: “From a rational perspective, we should make only decisions that are in our best interest (“should” is the operative word here)” (Ariely 2010, p.5).

Kahneman is sensitive to this criticism. He states:

I often cringe when my work with Amos is credited with demonstrating that human choices are irrational, when in fact our research only showed that Humans are not well described by the rational-agent model (Kahneman 2011, p.333).

But as can be seen from this quotation, he does not go as far as to say that the norms of the rational-agent model are faulty.

Before dealing with the obvious moral failures of the “econ” norms of rational behavior, I wish to look at some of the tendencies (so-called biases) identified in the behavioral economic literature that are supposed examples of common human irrationality.

The norms of rational choice are purely “product” norms. They provide criteria for assessing a decision, but not for assessing the decision making process. This is different from many of the norms of rationality used to identify epistemic biases which reference procedural norms e.g., confirmation bias. This focus places significant limitations on the usefulness of rational choice theory as a guide for rational decision making. But first the theory.

The fundamental principle of rational choice theory is that, to be rational, people must be consistent in their preferences. If they prefer A over B and B over C, then they should prefer A over C and should do so over time and in all situations. The principle sounds reasonable enough but its emphasis on unchanging preferences turns out to have significant and dubious implications because it requires our decision making to be indifferent to context. The other key aspect of rational choice theory is the theory of expected utility—a theory based on the notion of a good bet.
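The consistency requirement described above (preferring A over B and B over C implies preferring A over C) is what is usually called transitivity. A minimal sketch of how such a check might look follows; the function name `is_transitive` and the sample preference sets are hypothetical and introduced only for illustration.

```python
from itertools import permutations


def is_transitive(prefers):
    """Return True if a strict preference relation is transitive.

    `prefers` is a set of (x, y) pairs, each meaning "x is preferred over y".
    """
    # Collect every option that appears anywhere in the relation.
    items = {option for pair in prefers for option in pair}
    # Look for a triple where a > b and b > c hold but a > c is missing.
    for a, b, c in permutations(items, 3):
        if (a, b) in prefers and (b, c) in prefers and (a, c) not in prefers:
            return False
    return True


# Hypothetical preference sets: one consistent, one cyclic.
consistent = {("A", "B"), ("B", "C"), ("A", "C")}
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}
```

On this sketch the `consistent` set passes and the `cyclic` set fails. Note that the check is silent about context: it has no way to represent a preference that reasonably changes with circumstances, which is the feature of the theory questioned here.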

9. Expected Utility Theory

While expected utility theory is, in principle, applicable to any outcome, most of the discussion focuses on financial gambles. A good gamble is one which if played in the long run will result in your being ahead of the game, i.e., winning more than losing. The best gamble is the option that will yield the most financial return in the long run. In more mathematical terms: the expected utility of a gamble is equal to the probability of the outcome multiplied by the amount of the outcome minus any cost of the gamble.
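The verbal definition above can be sketched in a few lines of code. This is only an illustration under stated assumptions: it treats the dollar payoff as the utility (as the discussion of financial gambles does), sums over all possible outcomes of the gamble, and uses a hypothetical function name `expected_value` and a made-up example gamble.

```python
def expected_value(outcomes, cost=0.0):
    """Long-run average payoff of a gamble, net of the cost of playing.

    `outcomes` is a list of (probability, payoff) pairs whose
    probabilities sum to 1; `cost` is the price of taking the gamble.
    """
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Weight each payoff by its probability, then subtract the cost.
    return sum(p * payoff for p, payoff in outcomes) - cost


# Hypothetical gamble: pay $2 for a 50% chance of $10, otherwise nothing.
coin_flip = [(0.5, 10.0), (0.5, 0.0)]
net = expected_value(coin_flip, cost=2.0)
```

A gamble with a positive result on this measure is a "good gamble" in the sense described: played repeatedly, it leaves the player ahead on average.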

There are a number of obvious practical difficulties in acting in accord with rational choice theory. One obvious difficulty is that we are often confronted with decisions without knowing the probability of the various outcomes. The next obvious problem is that the utility of an outcome is subjective. This has led theorists to redefine outcomes in terms of preferences rather than utility. As a result, economists generally talk about preference maximizing, not utility maximizing. But since they mainly talk about money, they assume that individual preferences will be to attain the maximum financial benefit.

But even when people know the probabilities and payoffs involved, there are many situations in which most people do not adhere to the norm of expected utility—and quite reasonably so. For example, in most situations the majority of people prefer an outcome that is certain rather than an iffy bet even if the iffy bet would provide a greater payoff in the long run.

10. Certainty Bias — Or a Reasonable Preference?

Tversky and Kahneman used the following question as one of the ways to elicit the certainty effect.

Which of the following options do you prefer?

A. a sure gain of $30

B. 80% chance to win $45 and 20% chance to win nothing

In this case, 78% of participants chose option A while only 22% chose option B (expected value $36). This illustrates most people’s tendency to favour the more certain bet over the less certain bet despite the latter’s greater “expected utility” (the expected value of B exceeds that of A by 20%) (Kahneman 2011, pp.364-365).
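The figures quoted above can be checked directly. Here $EV$ is shorthand, introduced for this illustration, for expected value in dollars.

$$
EV(A) = 1.0 \times 30 = 30, \qquad
EV(B) = 0.8 \times 45 + 0.2 \times 0 = 36, \qquad
\frac{EV(B)}{EV(A)} = \frac{36}{30} = 1.2
$$

So option B is worth 20% more than option A on average, yet it carries a one-in-five chance of winning nothing.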

The fact that people violate the norms of expected utility theory does not, of course, prove them irrational. For example, consider the purchase of insurance which, in theory, violates expected utility theory.

11. Loss Aversion: Context Counts

Even before the work of Tversky and Kahneman, it was noted that people favoured certainty over the promise of long term gain. It was thought that this was because people were risk averse. This analysis of people’s decision making was derived in large part from the work of Daniel Bernoulli (1738) who devised a model of risk aversion which used the declining utility of the dollar to also explain apparent deviations from choosing the “best bet.”

But Tversky and Kahneman noted that people were influenced in their assessment of the utility of a financial outcome by considerations other than their current state of wealth. Tversky and Kahneman’s research showed people tended to be loss averse not risk averse. Loss aversion has two implications:

- People are only tempted by a bet in which the gain is much greater than the possible loss.

- If a person sees their situation as a loss, e.g., they have already lost a bet or suffered a financial reversal, they are now willing to take a greater risk to return to a “no loss situation” than they would if they were not already in a loss situation.

For example, consider the following problems:

Problem 1: Which do you choose?

(a) Get $900 for sure OR (b) 90% chance to get $1,000

Problem 2: Which do you choose?

(a) Lose $900 for sure OR (b) 90% chance to lose $1,000

If you are like most people, you will choose (a) in the first problem but (b) in the second. This tendency can lead to all sorts of risky efforts to make up for losses, as is widely seen in compulsive gamblers, for example, but also in stock brokers (Kahneman 2011, p.224).
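It may help to note that, in expected-value terms, the two options in each problem are equivalent. In the sketch below, $EV$ is shorthand introduced here for expected value in dollars, and it is assumed (the problems leave this implicit) that the remaining 10% chance yields \$0.

$$
\begin{aligned}
\text{Problem 1:}\quad & EV(a) = +900, & EV(b) &= 0.9 \times (+1000) + 0.1 \times 0 = +900 \\
\text{Problem 2:}\quad & EV(a) = -900, & EV(b) &= 0.9 \times (-1000) + 0.1 \times 0 = -900
\end{aligned}
$$

A pure expected-value maximizer would therefore be indifferent in both problems, so the typical switch from (a) to (b) reflects whether the outcomes are framed as gains or as losses rather than any difference in the payoffs.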

The inclinations to accept or reject a gamble are mostly intuitive system 1 choices. And they clearly do not accord with the norm of expected utility theory which would ignore the framing of the gamble as loss or gain, i.e., ignore the context in which a decision is being made.

As mentioned, rational choice theory treats context (e.g., history, financial situation, social situation, cultural context) as irrelevant. Decisions that take these types of considerations into account and result in changing preferences will be judged as inconsistent and “biased” by the theory.

12. Percentage Framing

Tversky and Kahneman have also shown other ways that contexts influence our decision making. For example:

Imagine that you are about to purchase a calculator for $15. Another customer tells you that the calculator you wish to buy is on sale for $10 at another store, located 20 minutes’ drive away. Would you make a trip to the other store?

In contrast, imagine this time that you are buying a jacket for $125 and you learn that you can save $5 on the jacket by driving to another store. Would you drive 20 minutes to save the $5?

In one typical experiment, 68% of the respondents were willing to drive to the other branch to save $5 on a $15 calculator, but only 29% of respondents were willing to make the same trip to save $5 on a $125 jacket (Kahneman 2011, p.367).

Irrational? From the economists’ point of view, 5 dollars is 5 dollars and the context (or frame) of the purchase is irrelevant. But not to most humans. Can our tendency to assess a saving in light of the context lead to irrationality? Yes, but is it fundamentally irrational?—only if you are an econ.

13. Mental Accounting: Budget Categories

- Imagine that you have decided to see a play and paid the admission price of $50 per ticket. As you enter the theater, you discover that you have lost the ticket. The seat was not marked, and the ticket cannot be recovered. Would you pay $50 for another ticket? (Yes 46%; No 54%)

- In the alternative, imagine that you have decided to see a play where admission is $50 per ticket. As you enter the theater, you discover that you have lost a $50 bill. Would you still pay $50 for a ticket for the play? (Yes 88%; No 12%) (Kahneman 2011, p.368).

Why are so many people unwilling to spend $50 after having lost a ticket, if they would readily spend that sum after losing an equivalent amount of cash? The difference is our mental accounting. The $50 for the ticket was spent from the play “account”—that money is already spent; the loss of the cash is not posted to the play “account” and it affects the purchase of a ticket only by making the individual feel slightly less affluent.

As Kahneman admits, while this framing violates the economic rationality principle that only the amount of money counts not the context, most people do it.

The normative status of the effects of mental accounting is questionable. It can be argued that the alternative versions of the calculator and ticket problems differ also in substance. In particular, it may be more pleasurable to save $5 on a $15 purchase than on a larger purchase, and it may be more annoying to pay twice for the same ticket than to lose $50 in cash. Regret, frustration, and self-satisfaction can also be affected by framing (Kahneman and Tversky 1982).

So the theory is saved by considerations such as “If such secondary consequences are considered legitimate, then the observed preferences do not violate the criterion of invariance and cannot readily be ruled out as inconsistent or erroneous.” As long as you posit subjective utilities as explanations (and these utilities can be “rationally” influenced by frames), you can save the normative theory. But why not just say that the theory is an inadequate account of the norms of rational decision making?

For econs, all money is money and this sort of mental accounting incorrectly allows the influence of budget category framing. But for those of us who try to keep on budget, or for any bureaucratic institution, budget categories serve a very important and rational purpose.

14. Endowment Effect

The endowment effect is the tendency to value something we have more than we would pay to get it. An example from Thaler:

One case came from Richard Rosett, the chairman of the economics department and a long time wine collector. He told me that he had bottles in his cellar that he had purchased long ago for $10 that were now worth over $100. In fact, a local wine merchant named Woody was willing to buy some of Rosett’s older bottles at current prices. Rosett said he occasionally drank one of those bottles on a special occasion, but would never dream of paying $100 to acquire one. He also did not sell any of his bottles to Woody. This is illogical. If he is willing to drink a bottle that he could sell for $100, then drinking it has to be worth more than $100. But then, why wouldn’t he also be willing to buy such a bottle? In fact, why did he refuse to buy any bottle that cost anything close to $100? As an economist, Rosett knew such behavior was not rational, but he couldn’t help himself (Thaler 2015, p.17).

While Rosett couldn’t help himself, is it really irrational to value what you have more than what you would currently pay for it? The emotionally and intellectually rational heuristic of sticking with (loving?) what you have seems an eminently sane inclination and supportive of happiness. Irrational?

15. Summary

The model of rationality used by neo-classical economists has a key limit which is the insistence on the irrelevance of context e.g., loss, commitment, ownership, frame, etc. While only a brief review of the research, these examples support the view that the “biases” identified in the research on instrumental rationality do not have the same status as those identified in the studies of epistemic biases. The results of the study of instrumental rationality are best described as common tendencies not biases in the pejorative sense. It may well be that these intuitions which “violate” econ rationality contribute to our long run well-being.

An even more troubling implication of the rational choice approach to decision making is the lack of consideration of moral norms relevant to decision making, for example, fairness.

16. Fairness: The Ultimatum Game

To illustrate this point, take the interesting economic experimental paradigm called the Ultimatum Game. In the Ultimatum Game, there is a sender and a receiver. The sender is given some money, typically $20, and can make any split of the money with a receiver with whom they have no direct contact. The sender decides how to split the money and then offers a share to the receiver. If the receiver accepts the offer, they both get their share of the split, but if the receiver rejects the offer, neither gets any money.

If you are an econ, you take any offer: a buck is a buck. But contrary to economic thinking, most receivers refuse offers of anything less than about 40% because of the unfairness.
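The payoff rule of the game, and the contrast between the two kinds of receiver, can be sketched as follows. All names here (`ultimatum_payoffs`, `econ_receiver`, `fairness_receiver`) are hypothetical, and the 40% rejection threshold is simply the rough figure mentioned above turned into a fixed rule for illustration; real receivers do not follow a sharp cutoff.

```python
def ultimatum_payoffs(pot, offer, accepts):
    """Return (sender_payoff, receiver_payoff) for one round of the game.

    `accepts` is a function taking (offer, pot) and returning True or False.
    """
    if not 0 <= offer <= pot:
        raise ValueError("offer must be between 0 and the pot")
    if accepts(offer, pot):
        return pot - offer, offer
    # A rejected offer leaves both players with nothing.
    return 0, 0


def econ_receiver(offer, pot):
    # An "econ" takes any positive amount: a buck is a buck.
    return offer > 0


def fairness_receiver(offer, pot, threshold=0.4):
    # Assumed rule of thumb: reject offers below about 40% of the pot.
    return offer >= threshold * pot


# With a $20 pot and a $1 offer, the econ accepts and the other refuses.
econ_result = ultimatum_payoffs(20, 1, econ_receiver)
fair_result = ultimatum_payoffs(20, 1, fairness_receiver)
```

The sketch makes the cost of the refusal explicit: the fairness-minded receiver gives up the dollar in order to deny the sender the other nineteen.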

17. The Snow Shovel Price

Here is another example that illustrates people’s concern with fairness and rejection of supposedly rational economic behavior. Markets obtain equilibrium between supply and demand because people raise prices when demand goes up—at least until new supplies arrive. This is the much extolled method by which a free market economy is supposed to stay in equilibrium between supply and demand. But consider this scenario:

A hardware store has been selling snow shovels for $15. The morning after a large snowstorm, the store raises the price to $20. Rate this action as: Completely fair, acceptable, somewhat unfair, or very unfair.

When a couple of hundred Canadians were given this scenario, 18% judged it acceptable while 82% found this basic economic strategy to be unfair. On the other hand, when the same problem was put to MBAs, 76% judged it acceptable and only 24% unfair (Thaler 2015, pp.127-128). It appears that instruction in economics (including the norms of rational choice theory) can turn students into fairness-indifferent econs, with a significantly negative influence on their moral sense (see Frank et al. 1993).

18. Evaluative Rationality

The lack of fairness as a criterion of rational decision making reflects a more general problem with the rational choice approach to decision making. Not only does the econ notion of rationality have no place for moral considerations such as fairness, it also has no place for reflection on the goals or preferences of actors. Clearly one can have reasonable and unreasonable goals and desires, and one can deliberate about goals rationally or irrationally; most importantly, one can have concerns about collective outcomes that are not reducible to an aggregate of individual preferences (e.g., the environment).

Basically what the theory leaves out is evaluative rationality. Evaluative rationality focuses not on how to efficiently realize chosen ends but rather on the process for rational choice of ends, involving not only a rational assessment of one’s self-interest but also relevant moral considerations.

There are two related issues here: rational choice of individual ends and rational choice of collective ends. Neither is well treated in rational decision theory, although there is work by Kahneman and others on people’s unreliable assessment of how they will feel when they experience certain outcomes (affective forecasting as it is known). In general, people overrate how happy they will be when achieving desired outcomes (cf. lottery winners studies) but also how unhappy they will still be when experiencing misfortunes or disability (Kahneman 2011).

The complexity and subtlety of hedonic experience make it difficult for the decision maker to anticipate the actual experience that outcomes will produce. Many a person who ordered a meal when ravenously hungry has admitted to a big mistake when the fifth course arrived on the table. The common mismatch of decision values and experience values introduces an additional element of uncertainty in many decision problems.

The last chapters of Thinking, Fast and Slow document the extent to which people are generally poor at predicting how they will feel when they achieve or fail to achieve chosen objectives. Numerous studies detail how poor humans are at affective forecasting. For students faced with a wide range of life and career choices, this research can be very helpful in informing reflection on individual choices.

19. Collective Rationality and Citizenship

A more egregious problem with rational choice theory is its lack of concern for the common good. Mapped onto collective decision making, rational choice theory entails a commitment to seeing the common good as maximizing the aggregate satisfaction of individual (selfish) preferences. It is an essential part of the myth of the free market that “rational” econs pursuing their private interests will result in the best possible outcome for all.

But as we are all aware, the pursuit of individual preferences (rational or not) can lead to collective defeat. Examples range from traffic jams to the collapse of the east coast fisheries to, most troublingly, global climate change. Everyone prefers to utilize fossil fuels, and while no one intends to degrade the environment, the pursuit of individual preferences results in conditions that are harmful to everyone.

Thinking that the only consideration in rational decision making is your preferences implies either that those concerned about the environment are irrational or that environmentalists simply have different “preferences” from those whose preferences are self-interested.

There is work in cognitive psychology that addresses effective deliberative processes, which I will briefly review, but that literature does not address questions of fairness, intrinsic values, collective goods, etc. There is, however, a discipline that does: moral and political philosophy. Recent philosophical work on deliberative democracy treats deliberation about the common good as the fundamental rational element of democracy (Elster 1998).

I propose therefore that the study of evaluative rationality be explicitly added to the corpus of rational reflection addressed by the Critical Thinking Project.

While this is not the place to attempt to articulate the concept of applied rational decision making, it seems clear that it would differ from rational choice theory in rejecting maximizing utility as the only norm and in being a truly usable guide to rational decision making. It would be a set of guidelines to ensure that the process of decision making took into account all relevant considerations: factual, moral, political and personal.

20. Group Decision Making

There is research on group decision making, but the notion of collective or political rationality (how we in fact make, and how we should make, decisions about the collective good) is poorly developed. This is because the research tends to assume that the issue facing groups is either epistemological or only to identify the effective means to a given end, not to deliberate about the choice of ends. For example, the studies of the decision making process of juries focus only on questions of epistemic, not evaluative, rationality (whereas in actual jury deliberations, concerns about the justice of the law may trump factual concerns).

Collective rationality also involves the norms of argumentation. The proper conduct of such discourse is crucial to coming to a reasoned judgment about what to do or believe. To some extent, the issue of collective rationality is addressed in informal logic through the study of argumentation and pragma-dialectics, but there is also work in psychology on the study of group dynamics. Again there is psychological and sociological literature that is useful but needs to be critically evaluated. The Critical Thinking Project should address both the norms of rational discourse and procedures for facilitating group rationality. Perhaps surprisingly, there is research which supports the notion that groups can often be more epistemologically rational when making decisions than individuals. The reason for this is that group discussion can involve participants putting forward differing points of view. The research on individual rationality underlines that the most useful heuristic for rational evaluation is to consider counter evidence and counter arguments. A properly constituted group should have people with alternative points of view or, if necessary, have people assigned as devil’s advocates to make counter arguments and argue for alternative views (Lunenburg 2012).

The problems of confirmation bias, myside bias, and even sunk costs can often be addressed effectively in group discussion. In addition, the research suggests that people make the best decisions when they are required to justify them in the process, subjecting them not only to their own critical reflection, but also to that of others. Presumably this is as true, or perhaps truer, of moral and political reflections.

There are, of course, well known ways in which group decision making can go awry—e.g., the notorious problem of “groupthink.” The research literature provides helpful information on how this can be avoided (Kerr and Tindale 2004; Kerr, MacCoun and Kramer 1996).

Based on the best research on collective decision making, the Critical Thinking Project needs to develop and teach practical and inclusive guidelines for collective rational decision making.

21. The Dialectical Tier: Some Possible Objections

Many of the criticisms of the norms of economic rationality are long standing and widely accepted outside the discipline of economics, but one may question the appropriateness of introducing concern for the common good or criticisms of economics into the Critical Thinking Project. Conservative critics of critical thinking already suspect it is a covert means for teaching liberal ideology.

I have two responses to this anticipated objection:

- Neo-classical economics and rational choice theory are covert ways of introducing ideology under the guise of simple logical principles and need to be countered. As Thomas Piketty comments: “To put it bluntly, the discipline of economics has yet to get over its childish passion for mathematics and for purely theoretical and often highly ideological speculation.”

- The push from behavioural economics to revise the behavioural assumptions of economics is an attempt to save economics from its obsessive mathematical idealizations, but not from normative ideology. To teach rationality we will need principles of reasonable decision making, and we cannot rely on the econs’ view simply because it is the one used in the heuristics and bias literature.

Addressing rational decision making as it applies to evaluating ends and to collective decision making requires a broader and less ideological approach to making rational decisions than provided by rational choice theory norms.

Another objection to increasing the ambit of critical thinking to include evaluative and collective decision making is that these areas are highly controversial and do not lend themselves to Critical Thinking Project instruction the way that other norms of reasoning do. Rational choice theory ignores the decision making process, but critical thinking has always focused on deliberative processes for assessing claims, and the same approach is appropriate for decision making. In its simplest form, a checklist of relevant considerations about ends and means when making a decision could go a long way to making most people’s decisions more rational. In the same way, decisions about collective goals can be subject to widely accepted considerations, e.g., respect for minority rights, considerations of fairness and justice, collective well-being, etc.

22. The Critical Thinking Project

The inadequacy of the model of rationality used in economics and now widely popularized in books about human decision making requires that those concerned about rationality and critical thinking expand their efforts and promote a corrective view of rationality.

I propose, therefore, that those in critical thinking adopt what I have called the Critical Thinking Project, to improve people’s reasoning by:

- Expanding the concept of critical thinking to include evaluative rationality and rational decision making in its most inclusive sense.

- Developing an alternative model of rational decision making with usable guidelines for a rational decision making process.

- Making critical use of research coming out of cognitive psychology and behavioral economics to help identify tendencies in human judgment that can lead to irrationality.

- Developing interdisciplinary research projects with researchers who are concerned with the application of reason to judgment and decision making, in particular cognitive psychologists, behavioural economists and applied decision theorists in business faculties.

- Teaching for evaluative rationality and rational decision making as well as argument evaluation, reasonable discourse and reasoned judgment.

Before concluding, let me return to the point I made at the beginning. The increasing acceptance of critical thinking as a central educational concept positions those of us involved in critical thinking to significantly affect the intellectual landscape. The skepticism towards economics caused by the 2008 crash has also created a more receptive public environment for critiques of economics. The popular interest in the heuristics and bias literature also provides an opportunity to discuss and explore standards of rationality. Because many of the cognitive psychology researchers in this area are interested in the application of their research, often under the rubric of “de-biasing,” it should be feasible to find appropriate colleagues for this effort (Fischhoff 1981).

In addition, because critical thinking is fundamentally a discipline focused on application, the development of a broad concept of applied rationality should not become mired in the theoretical minutiae that characterize so much of philosophical theorizing.

The Critical Thinking Project, with the addition of a focus on rational decision making, has the potential to make a crucial contribution to individual and collective well-being and even the future of the world.

References

Ariely, D. 2010. The Upside of Irrationality. New York: HarperCollins.

Baron, J. 2000. Thinking and Deciding. Cambridge: Cambridge University Press.

Elster, J. 1998. Deliberative Democracy (vol. 1). Cambridge: Cambridge University Press.

Ennis, R H. 1987. “A Taxonomy of Critical Thinking Dispositions and Abilities.” In Teaching Thinking Skills: Theory and Practice, edited by J.B. Baron and R.J. Sternberg. New York: W.H. Freeman.

Fischhoff, B. 1981. Debiasing (No. PTR-1092-81-3). Eugene, OR: Decision Research.

Frank, R.H., T. Gilovich and D.T. Regan. 1993. “Does Studying Economics Inhibit Cooperation?” The Journal of Economic Perspectives 7, 2: 159–171.

Kahneman, D. 2011. Thinking, Fast and Slow. Macmillan.

Kahneman, D. and A. Tversky. 1984. “Choices, Values, and Frames.” American Psychologist 39, 4: 341.

Kerr, N.L., R.J. MacCoun and G.P. Kramer. 1996. “Bias in Judgment: Comparing Individuals and Groups.” Psychological Review 103, 4: 687.

Kerr, N.L. and R.S. Tindale. 2004. “Group Performance and Decision Making.” Annual Review of Psychology 55: 623–655.

Kuhn, D. 2005. Education for Thinking. Cambridge, Mass.: Harvard University Press.

Lunenburg, F. 2012. “Devil’s Advocacy and Dialectical Inquiry: Antidotes to Groupthink.” International Journal of Scholarly Academic Intellectual Diversity 14: 1–9.

Schweiger, D.M., W.R. Sandberg and J.W. Ragan. 1986. “Group Approaches for Improving Strategic Decision Making: A Comparative Analysis of Dialectical Inquiry, Devil’s Advocacy, and Consensus.” Academy of Management Journal 29, 1: 51–71.

Siegel, H. 2013. Educating Reason: Rationality, Critical Thinking, and Education. New York: Routledge.

Stanovich, K.E. 1999. Who is Rational? Studies of Individual Differences in Reasoning. Psychology Press.

_______. 2009. What Intelligence Tests Miss: The Psychology of Rational Thought. Yale University Press.

_______. 2011. Rationality and the Reflective Mind. Oxford: Oxford University Press.

Stanovich, K.E. and R.F. West. 2000. “Advancing the Rationality Debate.” Behavioral and Brain Sciences 23, 5: 701–717.

Thaler, R.H. 2015. Misbehaving: The Making of Behavioral Economics. WW Norton & Company.

Toplak, M.E., R.F. West and K.E. Stanovich. 2012. “Education for Rational Thought.” In Enhancing the Quality of Learning: Dispositions, Instruction, and Learning Processes, 51–92.

Tversky, A. and D. Kahneman. 1971. “Belief in the Law of Small Numbers.” Psychological Bulletin 76, 2: 105.

_______. 1981. “The Framing of Decisions and the Psychology of Choice.” Science 211, 4481: 453–458.