5.5 Mitigating Backdoor Attacks

The literature on mitigating backdoor attacks in machine learning models is more extensive than that on mitigating other poisoning attacks. This section discusses several categories of defences (training data sanitization, trigger reconstruction, model inspection, and model sanitization) along with their limitations.

Training Data Sanitization

Training data sanitization techniques, like those used to mitigate poisoning attacks that target availability, can also be effective against backdoor poisoning. For example, outlier detection in the latent feature space has been shown to identify backdoor poisoning samples, particularly for convolutional neural networks in computer vision tasks. One such technique, Activation Clustering, clusters the training data of each class in representation space (the network's internal activations) with the aim of isolating poisoned samples in a distinct cluster.
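A simplified sketch of the Activation Clustering idea follows. It assumes that per-class activation vectors (for example, from the last hidden layer) have already been extracted from the trained network. It runs plain 2-means clustering on each class and flags the smaller cluster when it holds less than a fixed fraction of that class. The published method also reduces dimensionality before clustering; the helper names and the 0.35 threshold used here are illustrative assumptions, not part of the original technique.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def _sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _mean(points: List[Vector]) -> List[float]:
    return [sum(p[i] for p in points) / len(points) for i in range(len(points[0]))]


def two_means(points: List[Vector], iters: int = 20) -> List[int]:
    """Plain 2-means clustering; returns a 0/1 cluster label for each point."""
    # Deterministic farthest-point initialization: the first point, then the
    # point farthest from it.
    first = points[0]
    second = max(points, key=lambda p: _sq_dist(p, first))
    centers = [list(first), list(second)]
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [0 if _sq_dist(p, centers[0]) <= _sq_dist(p, centers[1]) else 1
                  for p in points]
        for k in (0, 1):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # keep the previous center if a cluster becomes empty
                centers[k] = _mean(members)
    return labels


def flag_suspicious(activations: Dict[str, List[Vector]],
                    max_fraction: float = 0.35) -> Dict[str, List[int]]:
    """Per class, split the activations into two clusters and flag the indices
    in the smaller cluster if it holds less than max_fraction of the class.
    (Hypothetical helper; max_fraction is an illustrative threshold.)"""
    flagged: Dict[str, List[int]] = {}
    for cls, points in activations.items():
        labels = two_means(points)
        small = 0 if labels.count(0) < labels.count(1) else 1
        frac = labels.count(small) / len(points)
        flagged[cls] = ([i for i, lab in enumerate(labels) if lab == small]
                        if 0 < frac < max_fraction else [])
    return flagged
```

For instance, if a class contains eight activation vectors near the origin and two far away (the poisoned samples), `flag_suspicious` returns the indices of those two samples for that class. A class whose activations split into two clusters of equal size is not flagged.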

Limitation:

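Data sanitization works best when a significant portion of the training data is poisoned, but it struggles with stealthy attacks that poison only a small fraction of the samples. This creates a trade-off for the attacker between the success of the attack and the detectability of the malicious samples: poisoning more samples strengthens the backdoor but also makes the poisoned samples easier to detect.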

Trigger Reconstruction

This mitigation strategy aims to identify and reconstruct the backdoor trigger, assuming that the trigger appears in a fixed position within the poisoned training samples. One of the pioneering techniques in this area, Neural Cleanse, uses optimization to find, for each candidate target class, the smallest trigger pattern that causes test samples to be misclassified into that class; a class whose reconstructed trigger is unusually small is likely to be the backdoor target. Later improvements have reduced the computation time and made it possible to handle multiple triggers within the same model. Another notable system, Artificial Brain Stimulation (ABS), stimulates individual neurons and observes the effect on the output in order to identify compromised neurons and reconstruct trigger patterns. TABOR also formalizes trigger detection as an optimization problem: it searches for the reconstructed trigger that, when added to test samples, minimizes the classification loss with respect to the target class.
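Neural Cleanse first reverse-engineers a trigger for every label by minimizing the loss of classifying the stamped input (1 − m) ⊙ x + m ⊙ Δ as that label, plus a penalty λ‖m‖₁ on the mask m, where Δ is the trigger pattern. That step requires a trained model and gradient-based optimization, which is omitted here. The sketch below covers only the second step, the outlier test on the resulting mask norms. It assumes those L1 norms have already been computed and uses the median absolute deviation (MAD) with the consistency constant 1.4826 and an anomaly-index threshold of 2. The function names are hypothetical.

```python
from statistics import median
from typing import Dict, List


def anomaly_indices(mask_norms: Dict[int, float]) -> Dict[int, float]:
    """MAD-based anomaly index of each label's reversed-trigger L1 norm."""
    values = list(mask_norms.values())
    med = median(values)
    # 1.4826 scales the MAD into an estimate of the standard deviation
    # under a normal distribution.
    mad = 1.4826 * median(abs(v - med) for v in values)
    if mad == 0:
        return {label: 0.0 for label in mask_norms}
    return {label: abs(v - med) / mad for label, v in mask_norms.items()}


def suspected_targets(mask_norms: Dict[int, float],
                      threshold: float = 2.0) -> List[int]:
    """Labels whose reversed trigger is anomalous AND smaller than the median,
    i.e. labels that are unusually easy to reach with a small trigger."""
    med = median(mask_norms.values())
    scores = anomaly_indices(mask_norms)
    return sorted(label for label, s in scores.items()
                  if s > threshold and mask_norms[label] < med)
```

Only labels whose trigger is *smaller* than the median are flagged, because a backdoor target class needs a much smaller perturbation to be reached than a clean class does. An unusually large trigger is not evidence of a backdoor.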

Limitation:

Most of these techniques work only for triggers that appear in a fixed position within the poisoned training samples.

Model Inspection

Model inspection analyzes the trained machine learning model before deployment to detect potential poisoning. One such approach, NeuronInspect, uses explainability techniques to extract features that distinguish clean models from backdoored ones and then applies outlier detection to those features. DeepInspect uses a conditional generative model to learn the distribution of trigger patterns and applies model patching to remove them. Another family of approaches trains a meta-classifier on many clean and backdoored shadow models to predict whether a given model contains a backdoor.

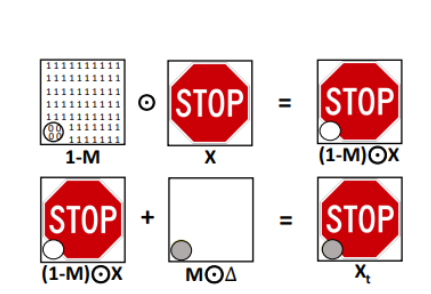

Model Sanitization

Once a backdoor is detected, the next question is how to repair the model. Model sanitization addresses this through techniques such as pruning, retraining, or fine-tuning, which aim to remove the backdoor while preserving the model's accuracy on clean inputs. Fine-Pruning combines pruning and fine-tuning: it prunes the neurons that are most dormant (least activated) on clean samples and then fine-tunes the pruned model on clean data.
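The pruning half of Fine-Pruning can be sketched as follows. The sketch assumes that the activations of one layer's neurons on a batch of clean inputs are already available as a list of rows. It ranks neurons by mean clean activation and zeroes out the least active ones. For simplicity, it prunes a fixed fraction of neurons. The published method instead prunes in increasing order of activation until accuracy on clean validation data drops below a chosen threshold, and then fine-tunes; that loop and the fine-tuning step are omitted. All function names here are hypothetical.

```python
from typing import List, Sequence


def mean_activations(clean_activations: Sequence[Sequence[float]]) -> List[float]:
    """Average activation of each neuron over a batch of clean inputs.
    clean_activations[i][j] is neuron j's activation on clean input i."""
    n = len(clean_activations)
    return [sum(row[j] for row in clean_activations) / n
            for j in range(len(clean_activations[0]))]


def dormant_prune_mask(clean_activations: Sequence[Sequence[float]],
                       prune_fraction: float) -> List[int]:
    """0/1 mask that removes the prune_fraction most dormant neurons."""
    means = mean_activations(clean_activations)
    n_prune = int(len(means) * prune_fraction)
    # Neuron indices ordered from least to most active on clean data.
    order = sorted(range(len(means)), key=lambda j: means[j])
    mask = [1] * len(means)
    for j in order[:n_prune]:
        mask[j] = 0
    return mask


def apply_mask(activations: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Zero the activations of pruned neurons for a single input."""
    return [a * m for a, m in zip(activations, mask)]
```

The intuition is that neurons which stay dormant on clean inputs may be the ones a backdoor relies on. A trigger that drives such a neuron strongly, as in `apply_mask([2.0, 9.0, 1.0, 3.0], mask)`, loses that signal once the neuron is pruned.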

Limitations:

While these techniques work well for convolutional neural networks in computer vision, they are less effective against more complex backdoor patterns and in other domains such as malware classification. Recent semantic and functional backdoor triggers also challenge traditional methods that assume a fixed trigger pattern. Additionally, meta-classifier approaches can be computationally expensive because they require training numerous shadow models.