5.2 How Backdoor Poisoning Works

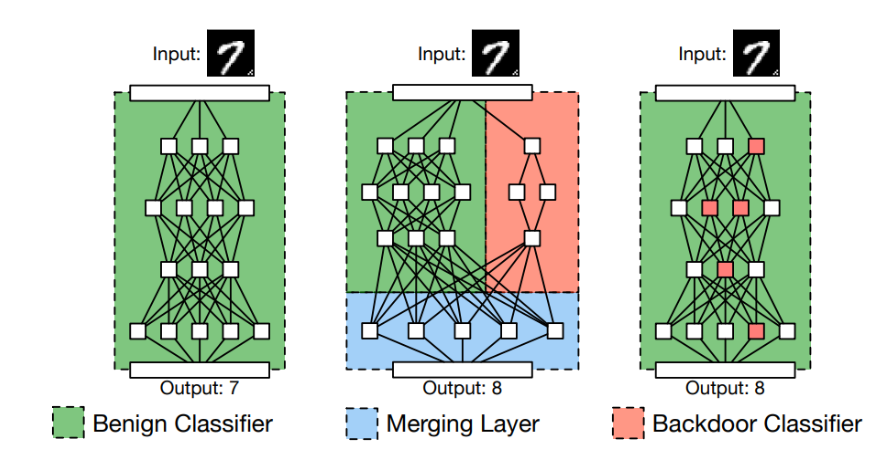

During a backdoor poisoning attack, the adversary follows these steps:

- Trigger Embedding: The attacker selects a trigger (e.g., a small patch, a specific pattern, or a noise pattern) and embeds it into a subset of the training data.

- Label Manipulation: The labels of the poisoned samples are changed to the target class. In some attacks the labels are left unchanged, and a feature collision strategy is used instead.

- Model Training: The model is trained on the poisoned dataset, learning to associate the trigger with the target class.

- Attack Execution: During inference, the attacker adds the trigger to an input, which causes the model to misclassify it as the target class.
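
The first two steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation of any particular attack: the function names `add_trigger` and `poison_dataset`, the bottom-right square patch, the 10% poison fraction, and the toy 8x8 grayscale data are all assumptions chosen for the example.

```python
import numpy as np

def add_trigger(image, patch_size=3, patch_value=1.0):
    """Return a copy of `image` with a square patch in the bottom-right corner.

    The patch location, size, and value are hypothetical choices for this sketch.
    """
    poisoned = image.copy()
    poisoned[-patch_size:, -patch_size:] = patch_value
    return poisoned

def poison_dataset(images, labels, target_class, poison_fraction=0.1, seed=0):
    """Embed the trigger in a random subset and relabel it to `target_class`."""
    rng = np.random.default_rng(seed)
    n_poison = int(len(images) * poison_fraction)
    # Pick distinct sample indices to poison.
    idx = rng.choice(len(images), size=n_poison, replace=False)

    poisoned_images = images.copy()
    poisoned_labels = labels.copy()
    for i in idx:
        poisoned_images[i] = add_trigger(images[i])  # trigger embedding
        poisoned_labels[i] = target_class            # label manipulation
    return poisoned_images, poisoned_labels, idx

# Toy stand-in for a training set: 100 grayscale 8x8 images, 10 classes.
rng = np.random.default_rng(42)
images = rng.random((100, 8, 8)) * 0.5
labels = rng.integers(0, 10, size=100)

x_p, y_p, idx = poison_dataset(images, labels, target_class=7)
print(f"poisoned {len(idx)} of {len(images)} samples")
```

A model trained on `x_p` and `y_p` would see the patch co-occur with class 7 in every poisoned sample, which is what lets it learn the association described in the Model Training step. At inference, the attacker would apply the same `add_trigger` function to a clean input. For the label-preserving variant, the relabeling line would be dropped and the poisoned samples crafted differently, which this sketch does not cover.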