2.5 Attack Scenarios

The threat model and attack scenarios define the conditions under which an adversary is assumed to be able to carry out an attack. In the context of data poisoning, three common training scenarios make models vulnerable:

- Training-from-Scratch (TS). The model is trained from scratch, starting from randomly initialized weights. The attacker can add harmful (poisoned) data to mislead the training process.

- Fine-Tuning (FT). A pre-trained model from an untrusted source is refined on new data to adjust its classification function. If this new data also comes from an untrusted source, it could introduce hidden manipulations.

- Model Training by a Third Party (MT). Users with limited computing power provide the training dataset and outsource the training process to a third party. The final trained model is either exposed as an online service accessible via queries or handed directly to the user. Because the feature mapping and classification function are trained by the attacker (the third-party trainer), there is a risk that the model has been manipulated. However, users can assess the model’s reliability by validating its accuracy on a separate test dataset before deploying it for real-world use.
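The validation step mentioned in the MT scenario can be sketched as follows. This is a minimal illustration, not a prescribed procedure: the names `holdout_accuracy` and `accept_model`, the `predict` callable, and the `min_accuracy` threshold of 0.9 are all assumptions introduced here.

```python
from typing import Callable, Hashable, Sequence


def holdout_accuracy(
    predict: Callable[[object], Hashable],
    inputs: Sequence[object],
    labels: Sequence[Hashable],
) -> float:
    """Fraction of held-out samples that the delivered model labels correctly."""
    if len(inputs) != len(labels):
        raise ValueError("inputs and labels must have the same length")
    if len(labels) == 0:
        raise ValueError("the held-out test set must not be empty")
    correct = sum(1 for x, y in zip(inputs, labels) if predict(x) == y)
    return correct / len(labels)


def accept_model(
    predict: Callable[[object], Hashable],
    inputs: Sequence[object],
    labels: Sequence[Hashable],
    min_accuracy: float = 0.9,  # hypothetical acceptance threshold
) -> bool:
    """Accept the outsourced model only if it reaches the required accuracy."""
    return holdout_accuracy(predict, inputs, labels) >= min_accuracy


if __name__ == "__main__":
    # Toy stand-in for a model returned by a third party (assumption).
    toy_predict = lambda x: x % 2
    test_inputs = [0, 1, 2, 3]
    test_labels = [0, 1, 0, 0]
    print(holdout_accuracy(toy_predict, test_inputs, test_labels))
    print(accept_model(toy_predict, test_inputs, test_labels, min_accuracy=0.7))
```

A check of this kind only measures accuracy on the samples the user actually holds; it gives no information about how the model behaves on inputs unlike those in the test set.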

Image Description

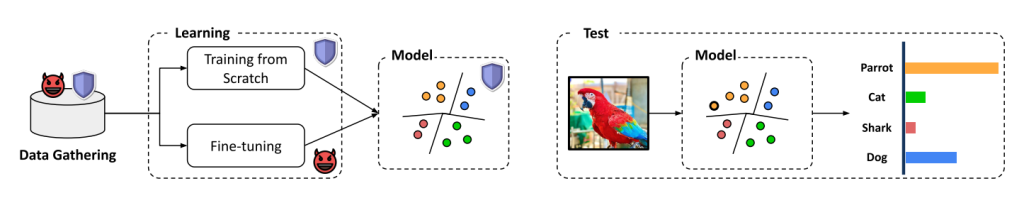

A machine learning pipeline is divided into three main sections: Data Gathering, Learning, and Testing.

- Data Gathering: A database icon is shown with a devil emoji (representing potentially malicious or biased data) and a shield (representing protective measures). This suggests that the dataset may contain both clean and potentially harmful or biased data.

- Learning: The data is used to train a machine learning model using two approaches:

  - Training from Scratch: Represented by a shield, indicating a more controlled and secure approach.

  - Fine-tuning: Shown with a devil emoji, suggesting potential vulnerabilities.

  The output is a trained model that separates data points into different categories, with some parts secured (shield icon) and others possibly compromised (devil emoji).

- Testing: A new image (a parrot) is input into the trained model. The model processes the image and classifies it into different categories (Parrot, Cat, Shark, Dog), displaying a bar graph that indicates the model’s confidence in each category. The highest probability corresponds to “Parrot.”

The figure represents the risks and security measures in machine learning, emphasizing the impact of data integrity and training methodology on model performance.

In the training-from-scratch and fine-tuning scenarios, users control the training process but rely on externally sourced datasets, which may be compromised. This reliance is common when collecting the data in-house is too costly. The attacker can manipulate training samples to achieve a malicious goal but may lack full knowledge of the original dataset or the model structure, which can reduce the attack’s impact.
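To make the idea of manipulating training samples concrete, the sketch below flips the labels of a fraction of a dataset. The text does not name a specific manipulation, so label flipping is used here only as one simple illustrative choice. The function name `flip_labels` and the parameters `poison_fraction`, `num_classes`, and `seed` are hypothetical. The attacker in this sketch needs no knowledge of the model, only write access to part of the data.

```python
import random
from typing import List, Tuple

Sample = Tuple[object, int]  # (features, integer class label)


def flip_labels(
    dataset: List[Sample],
    poison_fraction: float,
    num_classes: int,
    seed: int = 0,
) -> Tuple[List[Sample], List[int]]:
    """Return a poisoned copy of the dataset and the indices that were altered.

    A random subset of samples gets its label replaced by a different class.
    Labels are assumed to be integers in range(num_classes).
    """
    if not 0.0 <= poison_fraction <= 1.0:
        raise ValueError("poison_fraction must lie in [0, 1]")
    if num_classes < 2:
        raise ValueError("label flipping needs at least two classes")

    rng = random.Random(seed)
    n_poison = int(len(dataset) * poison_fraction)
    poisoned_idx = sorted(rng.sample(range(len(dataset)), n_poison))

    poisoned = list(dataset)  # shallow copy; the original list is not modified
    for i in poisoned_idx:
        features, label = poisoned[i]
        # Choose any class other than the true one.
        wrong = rng.choice([c for c in range(num_classes) if c != label])
        poisoned[i] = (features, wrong)
    return poisoned, poisoned_idx


if __name__ == "__main__":
    clean = [(i, i % 4) for i in range(100)]
    dirty, changed = flip_labels(clean, poison_fraction=0.25, num_classes=4)
    print(len(changed), "of", len(clean), "labels were flipped")
```

Keeping `poison_fraction` small reflects the limited access described above: the attacker alters only the samples they can reach, and the rest of the dataset stays clean.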