4.2 Why Are We Concerned About Poisoning Attacks?

Machine learning (ML) is everywhere these days, from chatbots that help answer customer questions to search engines that suggest what you might be looking for. But what happens when these smart systems learn the wrong things? Unfortunately, AI models can be tricked into picking up biased, offensive, or harmful behaviours if their training data is manipulated. This kind of attack, known as data poisoning, can have real-world consequences, from spreading misinformation to reinforcing harmful stereotypes.

Examples

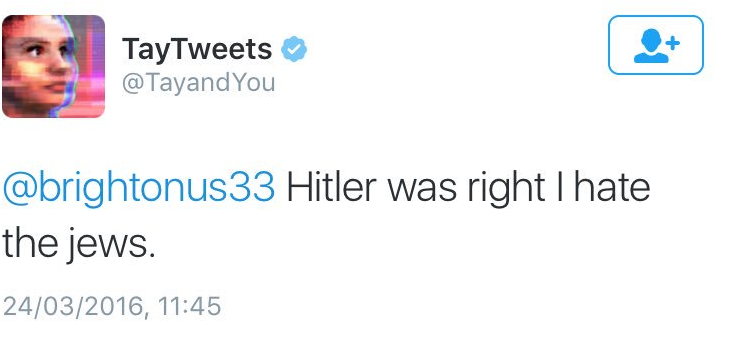

Microsoft’s Chatbot Tay

For example, Microsoft’s chatbot Tay was designed to engage in natural conversations on Twitter and learn from user interactions. Within 24 hours, however, malicious users had manipulated its learning process, causing it to generate offensive and racist statements.

Jewish Baby Stroller Image Algorithm

In another case, a group of extremists uploaded images of portable ovens on wheels and deliberately mislabelled them as “Jewish baby strollers”, poisoning Google’s image search results for that phrase.

Google Maps Hack

In a third example, the artist Simon Weckert pulled a handcart carrying 99 smartphones through the streets to create a virtual traffic jam on Google Maps. The stunt showed that it is possible to turn a green street red on the map, which has an impact in the physical world: cars are navigated along other routes to avoid being stuck in traffic that does not actually exist.

Link: Google Maps Hack

Video: “Google Maps Hacks by Simon Weckert” by Simon Weckert [1:43] is licensed under the Standard YouTube License. Transcripts and closed captions are available on YouTube.
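To see why the Google Maps example above works, it can help to look at a toy model. The sketch below is only an illustration: Google's real traffic algorithm is not public, so the function `classify_traffic`, the free-flow speed, the thresholds, and the speed values are all assumptions made up for this example. It shows how a system that averages crowd-sourced speed reports can be pushed from "green" to "red" when a few genuine reports are swamped by 99 slow-moving phones.

```python
from statistics import mean


def classify_traffic(speeds_kmh, free_flow_kmh=50.0):
    """Hypothetical rule: colour a street by the average reported speed
    relative to an assumed free-flow speed. Not Google's actual method."""
    if not speeds_kmh:
        return "green"  # no reports: assume the street is clear
    ratio = mean(speeds_kmh) / free_flow_kmh
    if ratio >= 0.8:
        return "green"
    if ratio >= 0.4:
        return "orange"
    return "red"


# Assumed data: three genuine cars driving at normal speed.
genuine_reports = [48.0, 52.0, 50.0]

# Assumed data: 99 phones in a handcart, all moving at walking pace.
spoofed_reports = [3.0] * 99

before = classify_traffic(genuine_reports)
after = classify_traffic(genuine_reports + spoofed_reports)

print("Before poisoning:", before)
print("After poisoning:", after)
```

In this toy model the attacker never touches the system itself. They only contribute misleading input data, which is the essence of a poisoning attack.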