3.1 Introduction

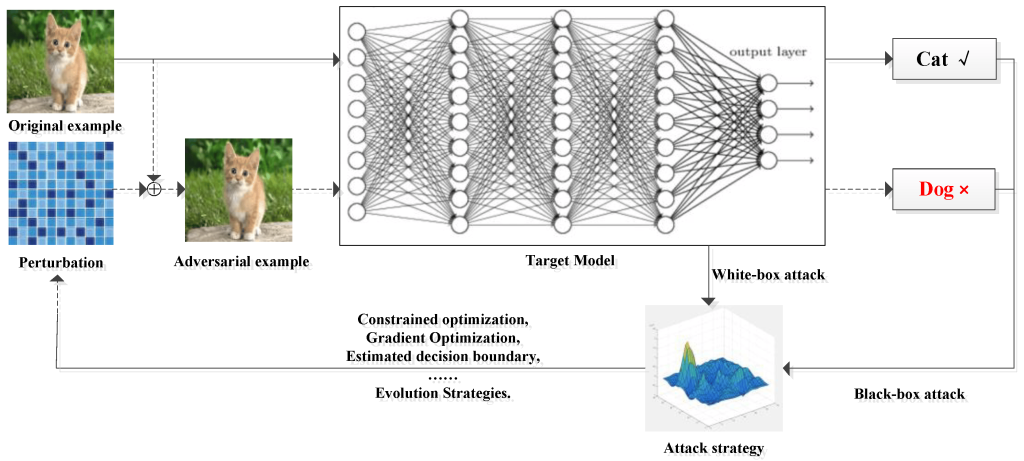

An evasion attack is a test-time attack in which the adversary’s goal is to generate adversarial examples: testing samples whose classification can be changed at deployment time to an arbitrary class of the attacker’s choice with only a minimal perturbation. In image classification, the perturbation of the original sample must be small enough that a human cannot perceive the change to the input. Thus, while the ML model can be tricked into classifying the adversarial example as the target class the attacker selects, humans still recognize it as belonging to the original class (Figure 3.1.1).
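To make the idea of a small, attacker-chosen perturbation concrete, the sketch below applies a targeted variant of the Fast Gradient Sign Method (FGSM), one well-known gradient-based evasion technique that this section does not itself describe, to a toy logistic-regression classifier. The weights, the four-"pixel" input, the "cat"/"dog" labels, and the budget `eps = 0.15` are hypothetical values chosen only for illustration; a real attack would target a deep network and compute gradients with an autodiff framework.

```python
import math

# Hypothetical toy model: logistic regression over a 4-"pixel" input in [0, 1].
# Class 0 stands in for "cat" and class 1 for "dog"; all values are illustrative.
WEIGHTS = [1.0, -2.0, 0.5, 1.5]
BIAS = -0.2
LABELS = {0: "cat", 1: "dog"}


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def prob_class1(x: list[float]) -> float:
    """Probability the toy model assigns to class 1 ("dog")."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return sigmoid(z)


def predict(x: list[float]) -> int:
    return 1 if prob_class1(x) >= 0.5 else 0


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def targeted_fgsm(x: list[float], target: int, eps: float) -> list[float]:
    """One targeted FGSM step under an L-infinity budget eps.

    For logistic regression with cross-entropy loss L(x, t), the input gradient
    is dL/dx = (p - t) * w, where p = P(class 1 | x). Stepping *against* the
    sign of this gradient pushes the prediction toward the target class t.
    """
    p = prob_class1(x)
    grad = [(p - target) * w for w in WEIGHTS]
    # Move every pixel by eps, then clip back to the valid pixel range [0, 1].
    return [min(1.0, max(0.0, xi - eps * sign(g))) for xi, g in zip(x, grad)]


# Usage: a "cat" input becomes "dog" while no pixel moves by more than eps.
x = [0.2, 0.6, 0.4, 0.3]
x_adv = targeted_fgsm(x, target=1, eps=0.15)
print(LABELS[predict(x)], LABELS[predict(x_adv)])  # cat dog
print(max(abs(a - b) for a, b in zip(x_adv, x)))   # at most eps, up to float rounding
```

Because the per-pixel changes all push the model's score in the same direction, their effects add up; in a real image with many thousands of pixels, this accumulation is why a much smaller per-pixel `eps`, one a human would not notice, can be enough to change the predicted class.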

Figure 3.1.1 Description

A diagram illustrating an adversarial attack on a neural-network-based image classification model. The process begins with an ‘Original example’ image of a kitten. A perturbation, represented as a tiled blue pattern, is added to the original image to create an ‘Adversarial example,’ which still visually appears to be a kitten. This adversarial example is then input to the ‘Target Model,’ a deep neural network, which incorrectly classifies it as ‘Dog’ instead of ‘Cat.’ The diagram also shows different attack strategies: a ‘White-box attack,’ associated with constrained optimization, gradient optimization, estimated decision boundary adjustments, and evolution strategies, and a ‘Black-box attack,’ depicted by an attack-strategy plot drawn as a 3D graph.