1.5 Key Concepts in Machine Learning Security

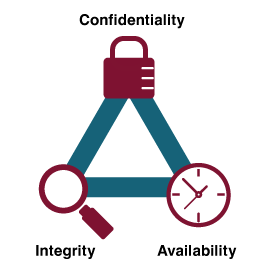

To build secure ML systems, it is essential to understand the foundational concepts that underpin ML security. This section uses the Information Security Triad, also known as the CIA Triad, a model that helps guide the development of security policies. It comprises three components: confidentiality, integrity, and availability.

Figure 1.5.1 “Security Triad”, D. Bourgeois, CC BY-NC 4.0. Mods: recoloured.

Security Goals

- Confidentiality: Prevent unauthorized access to sensitive data and models.

- Integrity: Ensure that ML models and data remain unaltered by malicious actors.

- Availability: Maintain uninterrupted access to ML systems and services.

Confidentiality

Protecting information means you want to be able to restrict access to those who are allowed to see it. This is sometimes referred to as the NTK (Need to Know) principle. Everyone else should be disallowed from learning anything about its contents. This is the essence of confidentiality.

In machine learning, confidentiality means protecting sensitive assets, such as training datasets and model parameters, from unauthorized access. Only individuals with proper authorization or a clear need-to-know basis should be able to access or analyze the data. For example, an ML model trained on patient records in healthcare must safeguard personal health information to comply with privacy laws like the Health Insurance Portability and Accountability Act (HIPAA) in the U.S. and the Personal Information Protection and Electronic Documents Act (PIPEDA) in Canada. Confidentiality can also be breached through the model itself: model inversion and membership inference attacks allow an attacker to infer sensitive details about the training data from a model's outputs.
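
To make the membership inference risk concrete, here is a minimal sketch of one simple form of the attack, assuming the attacker can query the model and read its confidence score. Overfitted models tend to be more confident on records they were trained on, so a plain threshold can already leak membership. The function name `infer_membership`, the threshold of 0.9, and all confidence values are hypothetical and chosen only for illustration.

```python
def infer_membership(confidence: float, threshold: float = 0.9) -> bool:
    """Guess that a record was in the training set when the model's
    confidence on it meets or exceeds the threshold."""
    return confidence >= threshold


# Hypothetical top-class confidences returned by an overfitted model.
# These numbers are invented for illustration; they are not measurements.
observed = {
    "record_a": 0.99,  # assumed to be a training record
    "record_b": 0.97,  # assumed to be a training record
    "record_c": 0.62,  # assumed to be unseen by the model
    "record_d": 0.55,  # assumed to be unseen by the model
}

# The attacker's guess for each record, based only on model output.
guesses = {name: infer_membership(conf) for name, conf in observed.items()}
```

In this toy setting the attacker never touches the training data, yet the guesses separate the assumed training records from the unseen ones. Real attacks are more involved, but the sketch shows why model outputs themselves can be confidential information.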

Integrity

Integrity is the assurance that the information being accessed has not been altered and truly represents what is intended. Just as a person with integrity means what he or she says and can be trusted to represent the truth consistently, information integrity means information truly represents its intended meaning. Information can lose its integrity through malicious intent, such as when someone who is not authorized makes a change to misrepresent something intentionally. An example of this would be when a hacker is hired to go into the university’s system and change a student’s grade. Integrity can also be lost unintentionally, such as when a computer power surge corrupts a file or someone authorized to make a change accidentally deletes a file or enters incorrect information.

Integrity in machine learning refers to maintaining the trustworthiness and accuracy of data, models, and predictions. It ensures that datasets and algorithms reflect their intended purpose and are not altered by unauthorized actors. For example, if an adversary poisons the training dataset by introducing malicious data, the model could produce biased or incorrect results. Integrity can also be lost unintentionally, for example when a system failure corrupts data. Ensuring integrity in ML systems involves robust validation methods, secure data storage, and techniques to detect and mitigate adversarial attacks.
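
One basic building block for secure data storage is a cryptographic checksum: record a hash of a dataset or model file when it is known to be good, then recompute and compare it before use. The sketch below uses Python's standard `hashlib` and `hmac` modules. The helper names `sha256_of_file` and `is_unaltered` are hypothetical, and the approach assumes the recorded hash is itself stored somewhere an attacker cannot modify.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks
    so that large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unaltered(path: Path, expected_hex: str) -> bool:
    """Compare the file's current digest with a previously recorded one.
    compare_digest performs the comparison in constant time."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex)
```

A check like this detects changes made after the hash was recorded, whether malicious or accidental. It does not detect poisoned records that were already present when the hash was taken, which is why validation of the data itself is still needed.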

Availability

Information availability is the third part of the CIA triad. Availability means information can be accessed and modified by anyone authorized to do so in an appropriate timeframe. Depending on the type of information, an appropriate timeframe can mean different things. For example, a stock trader needs information to be available immediately, while a salesperson may be happy to get sales numbers for the day in a report the next morning. Online retailers require their servers to be available twenty-four hours a day, seven days a week. Other companies may not suffer if their web servers are occasionally down for a few minutes.

Availability in ML security ensures that models, training processes, and predictions remain accessible to authorized users in a timely manner. For instance, if an ML-powered fraud detection system is rendered unavailable due to a denial-of-service (DoS) attack, it could lead to financial losses or security breaches. Similarly, cloud-based ML services must ensure continuous availability for real-time predictions or updates. In some scenarios, delays in accessing model predictions can lead to significant operational disruptions. Ensuring availability often involves redundancy, robust infrastructure, and defence mechanisms against attacks that disrupt ML services.
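
Redundancy can be illustrated with a small failover sketch: the caller tries each prediction replica in order and returns the first successful result. Everything here is hypothetical, including the `predict_with_failover` helper, the `AllReplicasFailed` exception, and the two stand-in replica functions. A production system would typically add timeouts, health checks, and load balancing on top of this idea.

```python
from typing import Callable, Sequence


class AllReplicasFailed(Exception):
    """Raised when no replica could serve the prediction."""


def predict_with_failover(
    replicas: Sequence[Callable[[dict], float]], features: dict
) -> float:
    """Try each replica in order and return the first successful score."""
    errors = []
    for replica in replicas:
        try:
            return replica(features)
        except Exception as exc:  # any failure moves on to the next replica
            errors.append(exc)
    raise AllReplicasFailed(f"{len(errors)} replica(s) failed")


def primary(features: dict) -> float:
    # Stand-in for a fraud-scoring service that is currently down.
    raise ConnectionError("primary unavailable")


def standby(features: dict) -> float:
    # Stand-in for a healthy replica; the score is a made-up constant.
    return 0.12


score = predict_with_failover([primary, standby], {"amount": 250.0})
```

Here the request still succeeds even though the first replica is unavailable, which is the property redundancy is meant to provide. If every replica fails, the caller gets an explicit error instead of waiting indefinitely.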

“8.3. The Information Security Triad” from Information Systems for Business and Beyond Copyright © 2022 by Shauna Roch; James Fowler; Barbara Smith; and David Bourgeois is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted. Modifications: added some additional content to each factor.