The Essential Neuroscience of Human Consciousness

Amedeo D’Angiulli

Chapter 1: Conceptual foundations

1.1. Introduction and Working Definitions

1.2. Philosophy you (really) need

1.3. Types of Theories

1.3.1. Dualist theories

1.3.2. Emergentism

1.3.3. Non-material monisms

1.3.4. Functionalism

1.4. Materialism

1.4.1. Challenges to materialism

1.5. The best of all worlds: Functional Emergent Materialism

1.6. From philosophy to science: The necessary and sufficient C-conditions

Box 1. Blind Positivism

Chapter 1: Conceptual Foundations

1.1. Introduction and working definitions

The study of consciousness is by its very essence inter-, multi- and trans-disciplinary. Long before the scientific disciplines known to us today were established, this area of inquiry was known as the mind-body problem and was the exclusive domain of philosophy. It was only with the advent of psychology that the word “consciousness” made its way into published scientific work and the related research practices. Shortly after, the word began to be used in many other scientific disciplines such as physiology, medicine, physics, biology, cognitive science and neuroscience. To be fair, the word “consciousness” was not defined or used very consistently (perhaps presaging one of the major issues of its study). Instead, it was used interchangeably, or confused, with other general terms like “mind”, “thought”, “feelings” or “faculty”, especially in older writings.

One of the reasons why words like consciousness, mind, feeling, etc., were so ubiquitous and interchangeable could be that no scientific discipline can really claim ownership of the subject. Everybody wants a piece of it, and who could blame them? The concept of consciousness is as central to being human as gravity is to the universe; no wonder so many scientists have been intrigued by the problem posed by its study. In general, when people (neighbours, scientists, you and me) get together and talk about consciousness in real-life settings, in spite of the variety and subtlety of the meanings we may assign to that term, we use it as a synonym of experience and/or awareness. This is also as far as scientists can go towards a universal definition of consciousness, since there is no formal agreement on what it is. We do, however, have an intuitive agreement on what consciousness is and how it functions. Because of all the different epistemic perspectives (i.e., views on the nature of our knowledge) on consciousness, which we will cover shortly, there is no common scientific framework or methodology for studying it. Without one, no one agrees on what progress is or how to bring it about. This lack of agreement on what would be the basic tenets of a scientific discipline, or even a “science” of its own, is one of the reasons why the topic can seem so intimidating to approach, and why some simply give up and resort to “mysterianism”, that is, the idea that consciousness is and will always remain a mystery.

For now, let us consider a few core definitions that will get us through at least this section and onto the next. One thing that is generally accepted is that we can distinguish between conscious and unconscious human states (sometimes called minimal contrast). Two aspects that are an important part of human consciousness are subjectivity and intentionality.

Subjectivity is generally called phenomenal consciousness (“what something appears to be like subjectively”). It is the ability to perceive and communicate to others what is going on “inside” you, i.e. what you are experiencing. For example, reading these words right now means seeing strings of symbols that, when interpreted, hopefully convey meaning to you.

Intentionality is generally interpreted in two ways. The first is the idea that our consciousness is about something: when conscious, we are conscious of specific things or objects, events and scenes which have an existence (or ontology). These objects give meaning to the reality around us. When reading, you are conscious of real and concrete things such as ink, letters and words, as well as the meanings, images and entities these words refer to in the real world. Compare this to what computers do: they constantly process 1s and 0s, but as symbols that do not represent something meaningful that exists in a reality for them. Then again, we do not really know if this is even true, because we do not know what it feels like to be a computer! Perhaps, as exceptionally exemplified in the movie Ex Machina, there can after all be a consciousness of 1s and 0s, but we, as humans, have no experiential access to it. All we can do is create thought experiments or “tests” (e.g., the Turing test) to make a judgement call using observable (third-person) behavior.

The second interpretation of intentionality is the fact of having intentions: plans, goals or aims towards which our actions are purposely directed. This is the most important one to use when defining consciousness and, for the rest of this book, “intentionality” will be used with this second interpretation in mind unless specified otherwise.

If at this point you are feeling discouraged, scared and/or confused, you should consider two crucial points before investing your time further (these two points may determine whether you continue reading, and may or may not help you cope with the learning challenges and uneasiness coming your way). Let us start with a statement that might be evident by now: the study of consciousness is an extremely messy business. For some of the reasons mentioned above (and for many others), a lot of what is written about consciousness belongs in Ripley’s museum rather than in science. The major reason why the study of consciousness appears so weird and fluffy is that it is not easily tractable. That is, consciousness poses issues, problems, puzzles and paradoxes that may have no real solution, and that certainly cannot even begin to be solved within a single book or university course, no matter how long.

For example, you could say that cancer is no less “mysterious” than consciousness. However, there is a relatively universal consensus that the best way to understand cancer is to get at its biological underpinnings. Even when researchers consider “alternative medicines”, they know that the answers are ultimately grounded in biology. You might finish a project or a thesis on cancer without finding its cure, but you will likely feel somewhat closer to it than before. Well, here is one thing we know for sure about consciousness: that is not going to happen. Consciousness is the kind of topic that can make you quit in anger. If you do not want to feel this way throughout a course, you can stop reading, drop the class and find another one with more definitions, certainty and structure. Alternatively, you can embrace curiosity, uncertainty and open-mindedness. Many very smart people, including Nobel laureates, have pursued this topic in all its intricacies. In the end, they learned about themselves and the world; you could say they reached a “higher state of consciousness”. Ultimately, it is up to you to make a conscious decision about whether this topic is worth pursuing.

1.2. Philosophy you (really) need

Speaking of frustration, perplexity is at the very core of studying consciousness, so much so that it has been a staple for centuries. In academic jargon, this frustrating perplexity, expressed in its various forms, is called philosophy: the discipline of posing questions and issues and discussing them more or less logically (according to true/false values and formal correctness). The study of consciousness has acted as a repository of some of the weirdest questions you can imagine. Questions like “How do you know you are not dreaming right now?”, “Are you conscious right now?”, “How do you know that you exist right now?”, “How do you know you are not a zombie?” and “Is my laptop conscious?” Whether or not these questions have proven useful, the underlying epistemic (used here as short for “epistemological”) perspectives and belief systems that have guided scholars in answering them have laid the groundwork for scientific approaches to consciousness.

The best way to appreciate why we need philosophy at this point is to consider the fundamental question: “What is consciousness?” Let us now use this question to do an exercise. Write down what you think consciousness is. You may have written down something to the effect that consciousness is a brain process. I think we can agree that what you might have written probably means that all aspects of consciousness can be explained by concrete things or physical-stuff. Before we discuss further, note that there is a subtle approach at work behind the words we use. When we say that “all aspects of X are explained by Y”, this is called reduction, because it is usually done to bring the explanation down to the simplest, most minimal terms. In the case of the reduction of consciousness to physical-stuff, the reduction is to one material “substance”. In other words, you would be using the philosophical assumption called materialist monism or materialism.

Now, let us consider the case in which you had written that consciousness is a spiritual thing like ideas, feelings and all non-physical things called mind-stuff. In this case, you would be reducing consciousness to one immaterial or ideal substance, i.e., you would be using the philosophical assumption traditionally called ideal monism or idealism.

Reductionist approaches pose several challenges for the study of consciousness in both philosophy and science. In their most radical versions, they end up excluding the parts that they do not explain (eliminativism). Supporters of this form of materialism, eliminative materialism, propose that subjectivity and the reportable experiential quality of the contents of consciousness (qualia), such as perception, imagination, dreams, thoughts, etc., do not have any causal role. For example, they do not cause neurobiological changes and have no validity as data. Therefore, they should be ignored. In contrast, the supporters of the extreme version of idealism, also known as mysterianism, deny that consciousness can ever be explained by physical processes, and advance that subjectivity and qualia (what it is like to be you, me, or a bat; the qualities of subjective conscious experiences) are the central things that science should be explaining (and not eliminating).

The battle between different philosophies is useful for understanding the major theoretical and methodological issues faced when studying consciousness. Before we consider how modern neuroscientific approaches are derived from all sorts of philosophical theories, we need to learn about the main debates among researchers. These form the foundation of the scientific method followed throughout this book, and they will also clarify the different conditions we use to define consciousness at the end of this chapter.

The stronghold of idealism is that natural laws, biology and physics ultimately cannot explain consciousness because they cannot explain subjectivity in consciousness. In other words, they cannot explain what it is like to be you or me. Only you and I have private and privileged knowledge of ourselves (knowledge argument). This is generally known as the first-person perspective: knowledge acquired through careful observation of our immediate, naïve, unfiltered subjective experience, or what appears to us (phenomenon), across time and place (phenomenology). From this perspective, having a brain may be necessary to be conscious but it is not sufficient, since consciousness cannot be known from a third-person perspective using objective data. If simply having a brain were sufficient, entities like rats and pigeons would have consciousness by virtue of their brain and objectively observable behaviour. Important note: by this reasoning, Classical (Pavlov’s) and Operant (Skinner’s) Conditioning do not explain consciousness. That an organism shows a behavior, observable from a third-person view, is not sufficient to conclude that the organism is conscious; it may well be a zombie!

Conversely, materialists contend that the first-person perspective is based on unreliable knowledge and on an unreliable methodology for extracting it. The first-person perspective is basically a type of report of subjective experience or phenomenology. Such reports are known to be unreliable in multiple circumstances, such as eyewitness reports in legal cases. Therefore, these critics argue, they cannot be used to study consciousness seriously. Materialists contend that the first-person perspective is, in a way, similar to the third-person perspective. According to them, the goal of science is to reduce the unreliable first-person perspective to the physical-stuff: the brain is necessary and sufficient for consciousness. Without a brain there is no consciousness, so phenomenology is unneeded.
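The disagreement over “necessary” and “sufficient” in the last two paragraphs can be written compactly in logical notation. This is only a sketch: the predicates Brain(s) and Conscious(s), read as “system s has a brain” and “system s is conscious”, are labels introduced here for illustration and are not part of either camp’s own vocabulary.

```latex
% Necessity: nothing is conscious without a brain.
\[ \forall s\,\bigl(\mathrm{Conscious}(s) \Rightarrow \mathrm{Brain}(s)\bigr) \]

% Sufficiency: whatever has a brain is conscious.
\[ \forall s\,\bigl(\mathrm{Brain}(s) \Rightarrow \mathrm{Conscious}(s)\bigr) \]

% Idealist position as described above: necessity may hold, sufficiency does not.
% Materialist position as described above: both hold, so
\[ \forall s\,\bigl(\mathrm{Conscious}(s) \Leftrightarrow \mathrm{Brain}(s)\bigr) \]
```

Read this way, the idealist’s rat-and-pigeon argument targets only the second formula, while the materialist claim amounts to asserting both directions at once.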

However, a third alternative exists. While completing the little activity I asked you to do, you might have written down that consciousness is made of both physical and mental stuff. This philosophical position is known as dualism. It has many versions, but the two main ones are substance dualism (positing consciousness as two different substances of different kinds simultaneously) and property or aspect dualism (positing consciousness made of one substance which manifests with two aspects).

Its two aspects are objective knowledge (which reflects the physical-stuff) and subjective knowledge (which reflects the mind-stuff). The main thing to consider about dualism is that it is so appealing at first glance because it doesn’t appear to force us into reductionism; both physical and mind stuff are kept in the definition of consciousness.

Any argument grounded on the origins and nature of knowledge, or on the method by which we interpret data of all kinds, belongs to the branch of philosophy called epistemology. This scientific-philosophical domain is responsible for explaining theories, i.e. it produces “theories about theories”. The major achievement of this discipline has been to highlight that all theories have what might be called a “hidden philosophical agenda”. When scientists devise experiments and interpret data, not everything can be taken as “objective”, since experimenters’ beliefs can affect the way in which they obtain, analyze and explain data. As an example, let us consider the vexed topic of Qualia (singular = Quale).

Qualia is a term in philosophical jargon used to describe the intrinsic quality of experiential mental states. Sensory qualia are the most obvious examples. The environment does not contain colours, sounds or smells; rather, it contains frequencies, amplitudes and certain types of molecules. As many scholars point out, reality would appear pretty much uniformly grey under a microscope. Colours, sounds and smells are the results of mental representations that “translate” the physical parameters of this energy. Therefore, these sensory qualities only exist as a result of our experiencing certain forms of energy at another category or class level, the mental. Other forms of mental events, such as thoughts, also have qualia. That is, the most fundamental and undisputed evidence about consciousness is that every conscious mental event comes with its quale (or that every quale correlates with a conscious mental event). However, the agreement stops here.

Idealists maintain that qualia are the crucial things that define consciousness. For example, Nagel (1974) devised a thought experiment that asks, “Can you know what it is like to be a bat?” This is, of course, a paradoxical tease. You cannot have the conscious experience of being another being, even using imagination and simulation. (Recall the example of not being sure about computers’ consciousness of 1s and 0s.) Only a bat knows what it is like to be a bat, and you would only know by really being one! Critics of idealism usually reject the type of data that idealists use. These critics advance that people’s phenomenological reports of conscious experience might seem, but are not, subjective, private, intrinsic and special. Notably, some cognitive scientists contend that phenomenological reports are a form of knowledge and that properly defining them, using supposed links with the workings of the brain, removes any surrounding qualia or mystery.

Dualists interpret the existence of qualia as evidence of the first-person perspective and of methods that are adequate to study it. Although dualism avoids reducing matter to mind or vice versa, it still cannot address the explanatory gap, that is, the relationship between physical phenomena and conscious experience. Many researchers, including monists in both camps, see this as the hard problem in the study of consciousness (as opposed to the easy problems, like studying attention, memory, etc., in which consciousness is implicitly assumed to be already embedded in the sensory, perceptual or cognitive processes under study). While easy problems can be quite hard, they can be solved using scientific methods.

Thus, much debate has been focused on what is acceptable data in the study of consciousness (objective or subjective) and what are the boundaries of science. In other words, what part of consciousness is the legitimate object of study from a scientific point of view, and what will never be explained by science? As you can imagine, there are all kinds of ideas, opinions and arguments on the topic.

As shown by the qualia debate, epistemology can help us understand and recognize the meta-theories, the big-picture theories, beneath our more specific scientific theories (models). Being able to categorize the type of explanation used to account for an object of study makes that explanation easier to understand.

Next, we will use a bit of “critical” epistemology to dissect all the major meta-theories of the moment. This exercise will help us understand if and how neuroscience can claim supremacy in the messy crucible that is the study of consciousness, plagued as it is by dangerous philosophical traps.

1.3. Types of Theories (or Theoretical Spectrum)

1.3.1. Dualist theories

Many classic theories of consciousness were initially based on substance dualism, the hypothesis that there are two types of substance (matter and mind, brain and thoughts). This type of dualism has since been rejected almost universally. One major difficulty is that substance dualism implies at some level that the brain is not necessary for consciousness. However, changes to the brain affect consciousness: disease, injury or drugs can alter your state of consciousness (neural dependence). This leads to another major problem, the lack of an explanation for the interaction between consciousness and body. Substance dualism cannot specify what the substances really are (it has no explanatory power) and, at some point, must invent more and more ad hoc assumptions to address the violation of neural dependence and the lack of specific details regarding the two substances. This is in flagrant disregard of one of the pillars of scientific reductionism, the principle of Occam’s razor or parsimony (“simpler is better”). Finally, substance dualism does not accommodate the theory of evolution, in that it does not explain where consciousness might come from: why do we have consciousness as it is in the first place? However, the main problem remains the lack of an explanation for the interaction between mind and matter. Substance dualism offers no explanation if, for example, the function of consciousness is to move the body. How is just mentally thinking of moving your arm connected with the collection of neural processes that ends with the physical outcome of actually moving the arm? The alternative is that consciousness has no function (consciousness is epiphenomenal and has no causal influence on matter), but this would be a little bit like cheating the rules of the game or, as we would say in philosophy and science, sidestepping the problem.

Many philosophers and scientists who share a dualist perspective developed a more sophisticated version of it called property dualism. The idea is that mind and matter are two sides of the same coin, two aspects of the same underlying reality that is “neutral”: neither mind nor matter. For example, pain can be described in terms of physical-stuff (neural processes and pathways) and of mind-stuff (what it feels like to be in pain). The phenomenon of pain cannot be reduced to one or the other; both are essential. In its most complex form, this view proposes that there is only one material substance that gives rise to both mental and physical aspects. However, the mental aspects are associated only with a certain type of physical aspects (not all), namely the brain.

Property dualism falls again into the same issues as substance dualism. It is important to consider more closely the structure of this theory and the issues that can arise from it, because doing so clarifies the logic of many explanations, not just in philosophy but also in psychology, cognitive science and neuroscience. The fact that there is one neutral substance that gives rise to mental and physical stuff is not a problem for function; there is no reason why there must be the same function for mind-stuff and matter-stuff. The functions could be separate, one for physical properties, another for mental aspects. However, the issue of interaction comes back, as there is still no explanation for the causal links between physical and mental properties if they are irreducible to each other.

1.3.2. Emergentism

Another version of property dualism is based on the idea that consciousness emerges from the physical stuff (emergentism). Emergent properties arise from the combination of elements in structures or systems of relationships, generally complex systems such as networks. They do not exist in the single parts but as a result of entanglement in the networks. At the turn of the 20th century, in the early days of psychology, the concept of networks was first introduced with the German word Gestalt (“The whole is other than the sum of the parts”). For example, wetness (or liquidity) in water is not a property of either oxygen or hydrogen; it is a property emerging from their union. Similarly, consciousness emerges from the interconnections of billions of nerve cells, a neural network. This interesting position implies a causal or deterministic role of the brain, which would “produce” consciousness (as in, consciousness = the effect of something else being its cause). However, once consciousness has emerged it becomes different from the physical-stuff it derives from, and it cannot be reduced to or explained in its terms or at the same level. Therefore, the feedback by which consciousness, as an emergent property, would influence the physical-stuff is unclear: how is it supposed to occur? Emergentism thus falls into epiphenomenalism and the problems of dualism. However, if you look closely, a lot of current computational neuroscience uses this position (perhaps unwittingly).

1.3.3. Non-material monisms

As we mentioned earlier, monism attempts to reduce mind to matter (materialism) or matter to mind (non-material monism or idealism). Idealism questions the existence of physical-stuff: if objects are not in the mind, they cease to exist. Since this position ultimately leads to postulating that consciousness derives from a metaphysical entity, it is best dealt with in philosophy or religious studies. I do not know of any reputable neuroscience research program that is based on a purely idealist epistemology. Though I will occasionally talk about contents of consciousness that are borderline spiritual, such as belief in God and the like, these are objects of study to be explained by neuroscience and not neuroscientific explanations.

1.3.4. Functionalism

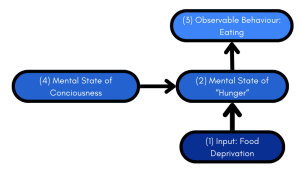

An important position that may be labelled as monist or dualist is functionalism. According to this view, consciousness can be defined in terms of its functional roles. Each mental event is specified by its causal relations to three states or processes: stimulus input, other mental events, and behavioural output. Consider the following case:

(1) Input: food deprivation → (2) Mental state of “hunger” → (3) Eating

Consciousness could have a role as a mental state (4) in relation to (2), for example conscious feelings of depression or mood changes (insofar as mood affects eating). For functionalism, this network of relationships determines functional roles of mental states that are stable and consistent over time, such that a mental state in (2) will always correspond to hunger (see Figure 1 below).

Figure 1. Example of functionalist logic.

Contrast the above to behaviourism. For the behaviourist, it is not-eating-for-a-while or (1) that causes both (2) being hungry and (3) eating. Being hungry is epiphenomenal; it does not cause eating and cannot be observed. The consciousness of feeling hungry is a dispositional state; saying “I am hungry” is to say that, given the opportunity, I am likely to seek food and eat it. Functionalists would actually object that other mental states in a causal relationship with (2) will determine whether the individual eats or not. The individual in question may be depressed, on a diet or manic, which could all determine if the individual eats or not.
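The contrast between the two accounts can be sketched as two small prediction functions. This is a toy illustration under stated assumptions, not a model from the literature: the function names, the `MentalStates` fields and the six-hour threshold are all hypothetical choices made here. The point is only that the behaviourist function predicts eating from the stimulus alone, while the functionalist function routes the stimulus through a mental state (2) that interacts with other mental states before producing behaviour (3).

```python
from dataclasses import dataclass


@dataclass
class MentalStates:
    """Hypothetical internal states; the field names are illustrative only."""
    hungry: bool
    on_diet: bool = False
    depressed: bool = False


def behaviourist_eats(hours_without_food: float, threshold: float = 6.0) -> bool:
    # Behaviour is predicted from the stimulus (1) alone.
    # "Being hungry" plays no causal role in the prediction.
    return hours_without_food >= threshold


def functionalist_eats(
    hours_without_food: float,
    on_diet: bool = False,
    depressed: bool = False,
    threshold: float = 6.0,
) -> bool:
    # (1) input -> (2) mental state of hunger
    states = MentalStates(
        hungry=hours_without_food >= threshold,
        on_diet=on_diet,
        depressed=depressed,
    )
    # (2) together with other mental states -> (3) behavioural output
    return states.hungry and not (states.on_diet or states.depressed)
```

With the same food deprivation, `behaviourist_eats(8)` and `functionalist_eats(8)` agree, but `functionalist_eats(8, on_diet=True)` predicts no eating, which is the objection functionalists raise in the paragraph above.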

It is not clear whether functionalism can be classified as dualist or monist. The reason is that functionalism is not bound to assuming that the brain is the physical stuff that implements the mind. In principle, any system that has the same functional organization has a mind. Computers, robots and machines could have a mind. The analogy used to describe functionalism is the distinction between role and occupant or, in the most popular version, software (computer program) and hardware (physical substratum that executes the program). Consciousness could arise in any system as long as it has the same computational structure as the human brain, with the same causal relationships among input, internal computations and output. A common analogy is that human consciousness is a program that runs on brains.
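The role vs occupant distinction can be sketched in code as one functional specification with two different implementations. Everything named here is hypothetical: the `ROLE` table, the two occupant classes and the added thirst example are illustrative labels, and the sketch says nothing about whether either occupant would actually be conscious. It only shows what “same functional organization” means operationally.

```python
# The "role": a specification of stimulus -> (internal state, behaviour) relations.
# The second entry is an invented example added for illustration.
ROLE = {
    "food deprivation": ("hunger", "eat"),
    "water deprivation": ("thirst", "drink"),
}


class LookupOccupant:
    """One occupant: realizes the role with a stored table."""

    def __init__(self) -> None:
        self._table = dict(ROLE)

    def respond(self, stimulus: str) -> tuple:
        return self._table[stimulus]


class BranchingOccupant:
    """Another occupant: realizes the same role with explicit branching."""

    def respond(self, stimulus: str) -> tuple:
        if stimulus == "food deprivation":
            return ("hunger", "eat")
        if stimulus == "water deprivation":
            return ("thirst", "drink")
        raise KeyError(stimulus)


def same_functional_organization(a, b, stimuli) -> bool:
    # Two occupants fill the same role if every stimulus leads to the
    # same internal state and the same behavioural output in both.
    return all(a.respond(s) == b.respond(s) for s in stimuli)
```

For a functionalist, `same_functional_organization(LookupOccupant(), BranchingOccupant(), ROLE)` being true is all that matters; how each occupant is built internally is irrelevant, which is exactly the assumption the criticisms below take aim at.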

There are three major criticisms of functionalism. The first is that functionalism essentially implies that the brain is irrelevant. This assumption of neural autonomy only stands up if one can clearly distinguish the level of software in the mind from the level of hardware in the brain. However, brain and mind have multiple, complex interacting levels, so the analogy is either false or too weak and coarse to work. Engineering has demonstrated that the physical medium in which operations are executed limits how and which operations can be carried out. That is, some types of computation supported by neural structure cannot be performed on another physical substratum. The second criticism is that functionalism endorses sentient computers. So far this has been a significant failure; in over half a century of research there has been no clear demonstration that computers or machines can come close to sentience. Until functionalists commit to a research program that can deliver what it promises in a realistic timeline, computers with emotions or a taste for poetry will stay in the realm of science fiction. Lastly, functionalism can be “accused” of the worst forms of epiphenomenalism, since qualia are irrelevant to it and their absence (along with that of the first-person perspective) does not allow us to establish with certainty whether consciousness is present or not. A functionalist who embraces eliminativism can avoid the latter by saying that qualia are not real; however, we will see that eliminativism can be falsified on logical and empirical bases. The details of eliminativism’s logical fallacy are discussed in section 2 of this chapter. (Note: the empirical evidence against eliminativism will be discussed everywhere in the book as it is one of the underlying methodological themes).

1.4. Materialism

The main hypothesis underlying materialism is that mind is matter, consciousness = brain. This is the main position in science and neuroscience today. It comes in two forms: identity theory and eliminative materialism. Identity theory holds that consciousness and qualia exist and that research will discover neural causes and correlates of consciousness and qualia, i.e. they would be brain states. In contrast, the most radical version of materialism, eliminativism, holds that qualia and consciousness are not real and that both will be replaced by neurobiological descriptions and concepts. Both types of materialism aim for the reduction of macrophenomena to microstructures and dynamics, making them a result of element interactions in a system. Thus, consciousness should ultimately be reduced to chemistry, physics and mathematics, eventually progressing (degenerating?) to a few equations one can wear on a T-shirt.

Now, in identity theory the central claim is the following:

(i) Consciousness = Brain

Statement (i) corresponds more precisely to the statement that mental states (or processes) and brain states (or processes) are the same thing. That is, for every state of consciousness there is a brain state that corresponds exactly to it. The statement in (i) does not posit causality, it does not state that the brain causes consciousness. Rather, it states that brain and consciousness are one and the same. To illustrate the point, let’s use a strategy favored by materialists, using analogies from other “hard” sciences like physics. Saying that molecular kinetic energy causes heat is wrong because molecular kinetic energy is heat (temperature). Similarly, light is not caused by electromagnetic radiation; by definition, that’s what light is. In the same fashion, it is absurd to state that the brain causes consciousness, because that would create a causal relationship between the brain at one level and consciousness at another.

In addition to analogies, materialism offers one elegant, simple explanation; it uses parsimony, eliminating many of the issues raised by dualism, idealism, qualia, interactionism, the explanatory gap and causal role. Materialism also capitalizes on the overwhelming evidence of neural dependency, and it respects and follows evolutionary principles. Finally, it can give rise to realistic research programs, with falsifiable and accountable goals.

The point that eliminativism adds to regular materialism is that the folk knowledge and terminology we use to refer to consciousness will change as materialistic research programs come to be successfully completed. The folk terminology and knowledge will be eliminated by new, more precise and more appropriate knowledge, in turn changing our perception of consciousness. We will not talk about qualia anymore because these will be understood in a totally different way; our own experience of consciousness will be changed by the removal of inappropriate concepts and terms.

1.4.1. Challenges to materialism

Materialism poses several logical and methodological challenges which define the main problems in studying consciousness and its derivative research programs. Consider a typical scenario when studying one aspect of consciousness, in this case visual mental imagery. Suppose I ask you to generate a visual mental image (just like the ones in dreams) of your mother, father, or anything with a physical form. (Note: 2% of people, like me, cannot summon up such images, a condition called aphantasia. If you are like me and cannot do the task, never mind; just “think” about the loved entity. This example applies to thinking as well.) Make it as vivid as possible, as it might appear in a dream.

Now suppose we formulate this process of consciousness you had in terms of a typical materialistic neuroscientific hypothesis like the one in (i):

(ii) The visual mental image X is the brain state/process Y

In practice, this means that our research program will have to show that Y is a cause of X or a correlate of X. With time, these two relationships (causality or correlation) will be replaced by “=”, so that:

(iii) X = Y

Statement (iii) implies symmetry, known in logico-mathematical terms as Leibniz’s law. For X to be identical to Y, every property of X must be a property of Y, and vice versa. However, this is where the problems start, because correlation and causation do not obey Leibniz’s law. Even if the correlation is symmetrical, reciprocal, and perfect (X correlates with Y and vice versa), X and Y can have very different properties. For example, height correlates with weight, but it does not follow that they are identical. Worse, causation is not symmetrical, since there is an order of determination: if Y causes X, then X cannot cause Y, and the two do not have to obey Leibniz’s law to fulfill the relation of cause and effect.
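The contrast between perfect correlation and identity can be made concrete with a small sketch. The height and weight figures below are made up for illustration, and the property dictionaries and the function names `pearson` and `identical_by_leibniz` are assumptions introduced here, not part of any established method. The point is only that two quantities can correlate perfectly while failing the property-by-property test that Leibniz’s law requires.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def identical_by_leibniz(props_a, props_b):
    """Leibniz's law used as a test: two things count as identical only if
    every property of one is a property of the other, and vice versa."""
    return props_a == props_b


# Hypothetical data: weight is an exact linear function of height,
# so the correlation is perfect by construction.
heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
weights_kg = [0.5 * h - 20.0 for h in heights_cm]

# Hypothetical property lists for the two quantities.
height_props = {"dimension": "length", "unit": "cm"}
weight_props = {"dimension": "mass", "unit": "kg"}

r = pearson(heights_cm, weights_kg)
perfectly_correlated = abs(r - 1.0) < 1e-9
identical = identical_by_leibniz(height_props, weight_props)

print(perfectly_correlated, identical)
```

Even with a correlation of 1, the two quantities differ in their properties, so the identity claim fails. A research program that delivers only correlations, however strong, has therefore not yet delivered “=”.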

Two difficulties derive from the challenge summarized above. First, identity implies numerical identity (which is what Leibniz’s law establishes), a one-to-one correspondence between brain activity and experience. However, there has never been direct evidence of that in centuries of research in any discipline, and the future success of reductionist efforts seems unlikely. Second, a function or process can be implemented in many ways (multiple realizability). Individual differences and neural plasticity mean that a given mental activity can be implemented in very different brain processes and states. This is particularly true for complex, high-level cognitive functions such as mathematical and arithmetic reasoning. It is then difficult to see how identity can be demonstrated.
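Why multiple realizability undermines a one-to-one correspondence can be sketched in a few lines. The brain-state and function labels below are hypothetical placeholders, not real measurements; the sketch only shows that once two different states realize the same function, the mapping from brain states to mental functions is no longer one-to-one.

```python
# Hypothetical labels: states A and B stand for two different brain
# states (say, in two different people) that realize the same function.
realizations = {
    "brain_state_A": "mental_arithmetic",
    "brain_state_B": "mental_arithmetic",
    "brain_state_C": "visual_imagery",
}


def is_one_to_one(mapping):
    """True only if no two keys share the same value."""
    values = list(mapping.values())
    return len(values) == len(set(values))


def realizers_of(mapping, function_name):
    """All states that realize the given function, in sorted order."""
    return sorted(state for state, fn in mapping.items() if fn == function_name)


one_to_one = is_one_to_one(realizations)
arithmetic_realizers = realizers_of(realizations, "mental_arithmetic")

print(one_to_one)
print(arithmetic_realizers)
```

Because “mental_arithmetic” has more than one realizer, asking which single brain state it is identical to has no unique answer.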

Another major weakness of eliminativism is that it has to assume or refer to subjective reports of qualia to define the conscious phenomenon under study, the very reports that are supposed to be replaced by evidence-based knowledge derived from research. This is circular, because what is explained is explained by referring to something that was assumed to be true at the very start. Therefore, it is not possible to eliminate the qualia that define conscious phenomena, because the final explanation will refer to the qualia themselves. In cognitive science, a problem that has originated from this circularity is the debate surrounding the category error. That is, any part of a studied conscious phenomenon that has been defined within a first-person framework would have to be reduced to a third-person perspective, but the first- and third-person perspectives are two incommensurable categories of explanation. You cannot reduce even a simple experience to an action potential. The category “mental” is something different from the category “physical”.

Finally, eliminativism holds something that is absurd. What does it mean that scientific knowledge would “replace” consciousness? Would scientific knowledge be a replacement or improvement for how you are feeling now? Maybe scientific knowledge could give insight and improve our understanding of the experience, but it cannot be a substitute for it. Experience is not a hypothesis; it is evident to you and me, for we have the perception of accessing it now. Neuroscience is not needed to tell you how to interpret your experience. In addition, science should not be something for the elite but for everyone, including those who pay taxes or donate money to research and institutions. The burden is on science to address people’s convictions and beliefs about their phenomenological experience, not just to tell them that these are “wrong” and should be discarded.

1.5. The best of all worlds: Functional Emergent Materialism

There is yet another possible epistemological view, which I propose and follow in the remainder of this book. It is based on two things. The first is scientific pluralism, according to which we cannot get at aspects of reality with one single view, representation of knowledge, or method; we need multiple ideas and tools. The logical consequence is that there can be multiple “truths” at the same time, and they are equally valid. This perspective is very popular among some physicists who follow the many-worlds interpretation of quantum reality. Translating this idea to the study of consciousness, we might say that there are no insurmountable disagreements between the epistemologies of consciousness. Rather, each focuses on a single, different “real” aspect of consciousness at a time and ignores others that occur at the same or at different times! If we carefully fit the different pieces of the puzzle together, the apparent inconsistencies vanish.

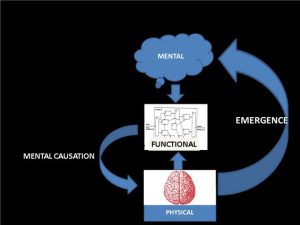

The second, which supports the first, is to base claims of correspondence between consciousness and the brain on a principle other than identity: supervenience. Supervenience is the idea that a reality at one level (call it L2) depends on another (call it L1), which evolved first and is primary. L2 exists because of L1, since it evolved from L1. However, L2 evolved into something different, which can no longer be completely explained by L1.

To explain consciousness, we could postulate two forms of supervenience, which can be combined in the same system or set of relationships. According to one type of supervenience (emergent materialism), basic neural structures are primary and exist without consciousness; they are sufficient for physical functions. In addition, they are necessary for the existence of conscious structures. However, the neural structures are sufficient only to explain that conscious structures exist; they cannot explain how they work.

To explain how conscious structures might work, we can introduce another form of supervenience, functional emergentism. According to this view, conscious structures, once they exist (through evolution and in dependence on neural structures), have the ability to create functions that can be imposed on neural structures to organize them and that can interact with their physical functions at a middle level. So, the conscious structures are primary and sufficient for mental functions such as organizing the sequence of thoughts needed to move an arm. Once that thought has been generated, it simultaneously creates a function that has mental causation, that is, one that interacts with physical structure by organizing physical function in the brain and the body.

We may dub this perspective (represented in Figure 2) Functional Emergent Materialism (FEM). This is a form of materialist epistemology that bypasses many of the issues posed by dualism while combining the antireductionist advantages of dualism with those of functionalism.

Figure 2. Functional Emergent Materialism.

1.6. From philosophy to science: The necessary and sufficient C-conditions

Far from feeling discouraged right now, we should use what we learned from philosophy and epistemology to formulate the most acceptable and reasonable approach we possibly can. Are definitions really needed to get an inquiry on consciousness going? The answer is NO! Some (Crick & Koch, 2003, among many others) even suggest that definitions may lead us astray. Definitions are not needed nearly as much as a good framework for making progress in neuroscience. The one used here will be called minimal materialism, or the 2M framework.

My strategy is to use what we learned from the logical points of philosophical debates and from other disciplines to construct the best possible framework. I propose that such a framework should be parsimonious in making axiomatic (self-evident or unquestionable) starting assumptions, flexible enough to incorporate many aspects of new evidence, and as complete as possible (i.e., radical and complete eliminativism are not viable), and that it should follow pessimistic positivism (see Figure 1; some aspects of consciousness should not be the focus of science and are best explained by other disciplines such as art, music, religion, or ethics). This apparently mundane proposition has a very important implication for this text which, in the end, is paradoxically similar to accepting some aspects of eliminativism. Esoteric topics will not be dealt with unless this can be done following scientific rules, because otherwise we commit a category error. Re-describing subjective spiritual experience in terms of action potentials cannot explain that subjective experience, only its neural correlates.

The starting point of 2M is to establish the C-conditions, the necessary and sufficient conditions under which we can say a human being is conscious. One main necessary condition for saying that you are conscious right now is that you are awake, as in not sleeping. For example, you are currently reading words. Is that sufficient to define all of consciousness? Sometimes I surprise myself, especially when I am bored, by staring at words without really reading them or being aware of it. Does it also happen to you? If it does, then you know that simply being awake is not a sufficient C-condition. However, why would wakefulness even be a necessary C-condition? Well, you would see nothing were you currently in dreamless sleep, neither ink on the page nor pixels on the screen. Thus, there must be a switched-on state, assumed to be wakefulness.

Still, that is not sufficient for you and an observer of you to say that you are conscious. A second important C-condition that can be behaviorally observed from the third-person perspective is the presence of background emotions: continuous preverbal emotional signals (body movements, facial expressions) that show fatigue/boredom/energy, discouragement/enthusiasm, malaise/well-being, or anxiety/relaxation. These allow us to presume a subject’s state of mind. For example, if I were looking at you and saw you slouching on the chair, sighing, and rolling your eyes, I would presume that what you are reading is boring you.

A third related C-condition is sustained attention, that is, being focused for minutes on a situation-appropriate object or event, for example, trying to understand what the words you are reading mean. Finally, a fourth C-condition is purpose, the fact that you have a plan in mind to do something (an action) and you are following that plan. At this point, that can be finishing this chapter to see the key concepts you will need to learn to pass the test.

All these conditions are indeed necessary to say someone is conscious. However, these alone are not sufficient. Only you can say what it is like to be you while reading. This is where the first-person perspective comes into play. The observer would need you to communicate that you are conscious, thereby confirming that you are in the observable C-conditions mentioned earlier. Your communication, however expressed, is your subjective report of phenomenal awareness of: 1) aspects of the external environment (stimuli, objects, events, and scenes); and 2) changes of the organism in response to the environmental input. You have direct access to both environmental and somatic input through mental images or 1st-order maps: these are neural patterns representing objects/events and changes of the organism or proto-self (Damasio, 2000). These images are modality-specific and sensorimotor (visual, auditory, somatic, etc.), multiple, and associated in an integrated system (associative memory). Although they are reported subjectively, they can be objectified, or made observable by proxy, when they are identified by a behavior or response correlate that occurs with them.
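The logic of necessary versus sufficient conditions described so far can be sketched as a simple check. The class `Observation` and both function names are hypothetical, and treating the four observable conditions plus a subjective report as jointly required is a simplifying assumption for illustration, not a complete account of consciousness.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical record of what an observer can establish about a person."""
    awake: bool
    background_emotions: bool
    sustained_attention: bool
    purpose: bool
    subjective_report: bool  # first-person confirmation given to the observer


def observable_conditions_met(obs):
    """The four third-person conditions: each is necessary, so all must hold."""
    return (obs.awake and obs.background_emotions
            and obs.sustained_attention and obs.purpose)


def report_confirms_conditions(obs):
    """Observable conditions alone are not sufficient; a report is also needed."""
    return observable_conditions_met(obs) and obs.subjective_report


engaged_reader = Observation(True, True, True, True, True)
# Awake, but staring at the words without attending to them.
bored_starer = Observation(True, True, False, True, False)
# All four observable conditions hold, but no subjective report was given.
silent_reader = Observation(True, True, True, True, False)

print(report_confirms_conditions(engaged_reader))
print(observable_conditions_met(bored_starer))
print(observable_conditions_met(silent_reader),
      report_confirms_conditions(silent_reader))
```

The bored starer shows that wakefulness alone is not sufficient, and the silent reader shows that even all four observable conditions leave the question open until the first-person report is added.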

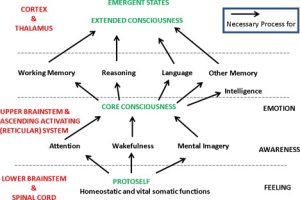

The next step in conscious awareness is that you have a Self. This aspect of consciousness is achieved through the generation of sensorimotor neural and self-neural patterns describing the relationship between objects and the organism’s changes in response to those objects. These higher-order descriptions are 2nd-order neural patterns (2nd-order maps) representing the knowledge that mental images of objects and mental images of the organism’s changes are OWNED by you, yourself. According to Damasio (2000), we can then distinguish two main levels of consciousness plus a more basic pre-conscious level:

- Proto-Self: Rudimentary, unconscious or preconscious sense of self based on simpler functions of the body, such as vital and homeostatic functions.

- Core Consciousness: Consciousness of here and now, biologically simple, not dependent on types of memory or language.

- Extended Consciousness: Biologically complex sense of self in the lifespan, including memory of the past and anticipation of the future; depends on memory, develops ontogenetically and is augmented by language.

Figure 3. Damasio’s model of consciousness.

There are four important corollary aspects that define the relationships observed in Figure 3:

- Core self is transient, limited to here and now interactions with objects and events

- Core consciousness depends on wakefulness and attention (at least low-level)

- Extended self (the traditional idea of self) is autobiographical

- Extended consciousness depends on core consciousness and the basic proto-self
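The dependency relationships in the list above can be sketched as a small graph. The dictionary `DEPENDS_ON` encodes only the two dependencies stated in the list; the level names and the function `is_available` are hypothetical, and treating impairment as all-or-none is a simplifying assumption.

```python
# Only the dependencies stated in the list are encoded here.
DEPENDS_ON = {
    "wakefulness": [],
    "attention": [],
    "proto_self": [],
    "core_consciousness": ["wakefulness", "attention"],
    "extended_consciousness": ["core_consciousness", "proto_self"],
}


def is_available(level, impaired):
    """A level is available only if it is not impaired itself and
    everything it depends on is available."""
    if level in impaired:
        return False
    return all(is_available(dep, impaired) for dep in DEPENDS_ON[level])


# Impairing core consciousness also removes extended consciousness.
core_impaired = {"core_consciousness"}
extended_after_core_loss = is_available("extended_consciousness", core_impaired)

# Impairing extended consciousness leaves core consciousness intact.
extended_impaired = {"extended_consciousness"}
core_after_extended_loss = is_available("core_consciousness", extended_impaired)

print(extended_after_core_loss, core_after_extended_loss)
```

The asymmetry is the point of the sketch: losing a lower level takes the higher level with it, while losing the higher level leaves the lower one in place.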

Finally, there is an important connection between emotions and consciousness. Patients with impairment of Core Consciousness do not reveal emotions through nonverbal expressions or vocalization. They are missing the entire range of emotions. Patients with normal Core Consciousness but impaired Extended Consciousness have normal basic emotions. Therefore, there is an apparent co-location of the neural structures for emotion and Core Consciousness, which can lead to the following theory: Consciousness started with the evolution of 2nd-order maps/images and the development of a central hub-network in the brain. It then increased in complexity and richness (Extended Consciousness) as the central hub-network became more and more interconnected with all other structures. There seems to be a clear evolutionary advantage: given the overlap, or close neural proximity, between consciousness and homeostatic functions (attention, emotion, and body-state regulation), consciousness may be an extension of basic automatic homeostasis that adds flexibility and planning in response to changes in the environment.

In the remainder of this book, I will explore the idea of the large-scale hub-network. I will describe functions and anatomy that may fit the profile of this putative centre of consciousness. Now that we have a working definition of the limits of what will be studied, we need to know more about how to study it. To that end, the next chapter will consider the available tools and methods by which we can form a scientific idea of this thing called consciousness.

Figure 4. Blind Positivism. “A near total faith in the inevitability of scientific progress. Western science worships the God of causality and materialists fall prey to the overly optimistic hope that there are no limits to this endeavor; that eventually the phenomena of consciousness will succumb to the omnipotent methods of empirical research and be admitted into the enlightened kingdom of science.” (Dietrich, 2007, p.57)

Readings

Dietrich, A. (2007). Introduction to Consciousness. New York, NY: Palgrave Macmillan.

Damasio, A., & Meyer, K. (2009). Consciousness: An overview of the phenomenon and its possible neural basis. In S. Laureys & G. Tononi (Eds.), The neurology of consciousness. New York, NY: Academic Press.

Crick, F., & Koch, C. (2003). A framework for consciousness. Nature Neuroscience, 6, 119-126.

Nagel, T. (1974). What is it like to be a bat? Philosophical Review, 83, 435-450.