Chapter 6: Science and Non-Science

Intro

So far, we have discussed scientific knowledge, scientific methods, scientific change, and scientific progress. Despite all these philosophical investigations of science, we haven’t yet had a focused discussion on what makes science what it is, or what differentiates it from other human endeavours. We have taken for granted that science is something different – something unique. But what actually makes science different? What makes it unique?

In philosophy this question has been called the demarcation problem. To demarcate something is to set its boundaries or limits, to draw a line between one thing and another. For instance, a white wooden fence demarcates my backyard from the backyard of my neighbour, and a border demarcates the end of one country’s territory and the beginning of another’s. The demarcation problem in the philosophy of science asks:

What is the difference between science and non-science?

In other words, what line or “fence” – if any – separates science from non-science, and where exactly does science begin and non-science end?

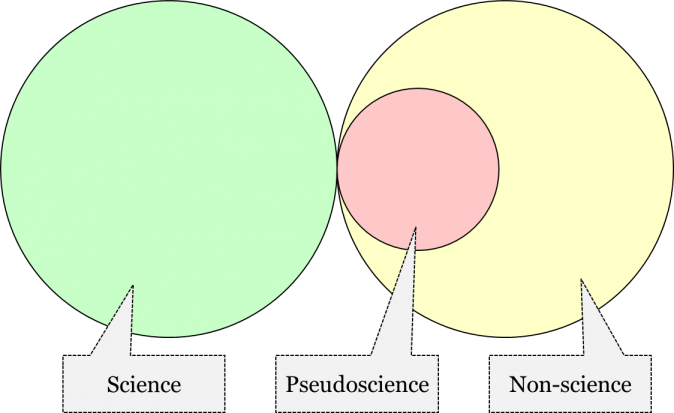

Historically, many philosophers have sought to demarcate science from non-science. Often, however, their specific focus has been on the demarcation between science and pseudoscience. Now, what is pseudoscience and how is it different from non-science in general? Pseudoscience is a very specific subspecies of non-science which masquerades as science. Consider, for instance, the champions of intelligent design, who essentially present their argument for the existence of God as a properly scientific theory. The theory is purportedly based on scientific studies in fields such as molecular biology and evolutionary biology, but it incorporates both blatant and subtle misconceptions about evolutionary biology. Not only is the theory of intelligent design unscientific, but it is also pseudoscientific, as it presents itself as legitimate science. In short, while not all non-science is pseudoscience, all pseudoscience is non-science.

While pseudoscience is the most dangerous subspecies of non-science, philosophical discussions of the problem of demarcation aim to extract those features that make science what it is. Thus, they concern the distinction between science and non-science in general, not only that between science and pseudoscience. So, our focus in this chapter is not on pseudoscience exclusively, but on the general demarcation between science and non-science.

Practical Implications

As with most philosophical questions concerning science, this question too has far-reaching practical implications. The question of demarcation is of great importance to policy-making, courts, healthcare, education, and journalism, as well as to the proper functioning of grant agencies. To appreciate the practical importance of the problem of demarcation, let’s imagine what would happen if there were no way of telling science from non-science. Let’s consider some of these practical implications in turn.

Suppose a certain epistemic community argues that we are facing a potential environmental disaster: say, an upcoming massive earthquake, an approaching asteroid, or slow but steady global warming. How seriously should we take such a claim? Naturally, our reaction would depend on how trustworthy we think the position of this community is. We would probably not be very concerned if this were a claim championed exclusively by an unscientific – or worse, pseudoscientific – community. However, if the claim about looming disaster was accepted by a scientific community, it would likely have a serious effect on our environmental policy and our decisions going forward. But this means that we need to have a way of telling what’s science and what’s not.

The ability to demarcate science from non-science and pseudoscience is equally important in courts, which customarily rely on the testimony of experts from different fields of science. Since litigating sides have a vested interest in the outcome of the litigation, they might be inclined towards using any available “evidence” in their favour, including “evidence” that has no scientific foundation whatsoever. Thus, knowing what’s science and what’s not is very important for the proper function of courts. Consider, for example, the ability to distinguish between claimed evidence obtained by psychic channelling, and evidence obtained by the analysis of DNA found in blood at the scene of the crime.

The demarcation of science from non-science is also crucial for healthcare. It is an unfortunate fact that, in medicine, the promise of an easy profit often attracts those who are quick to offer “treatments” whose therapeutic efficacy hasn’t been properly established. Such “treatments” can have health- and even life-threatening effects. Thus, any proper healthcare system should use only those treatments whose therapeutic efficacy has been scientifically established. But this assumes a clear understanding as to what’s science and what merely masquerades as such.

A solid educational system is one of the hallmarks of a contemporary civilized society. It is commonly understood that we shouldn’t teach our children any pseudoscience but should build our curricula around knowledge accepted by our scientific community. For that reason, we don’t think astrology, divination, or creation science have any place in school or university curricula. Of course, sometimes we discuss these subjects in history and philosophy of science courses, where they are studied as examples of non-science or as examples of what was once considered scientific but is currently deemed unscientific. Importantly, however, we don’t present them as accepted science. Therefore, as teachers, we must be able to tell pseudoscience from science proper.

In recent years, there have been several organized campaigns to portray pseudoscientific theories as bearing the same level of authority as the theories accepted by proper science. With the advent of social media, such as YouTube or Facebook, this has become increasingly easy to orchestrate. Consider, for instance, the deniers of climate change or deniers of the efficacy of vaccination who have managed – through orchestrated journalism – to portray their claims as a legitimate stance in a scientific debate. Journalists should be properly educated to know the difference between science and pseudoscience, for otherwise they risk misleading public opinion and dangerously influencing policy-makers. Once again, this requires a philosophical understanding of how to demarcate science from non-science.

Finally, scientific grant agencies rely heavily on certain demarcation criteria when determining what types of research to fund and what types of research not to fund. For instance, these days we clearly wouldn’t fund an astrological project on the specific effect of, say, Jupiter’s moons on a person’s emotional makeup, while we would consider funding a psychological project on the effect of school-related stress on the emotional makeup of a student. Such decisions assume an ability to demarcate a scientific project from an unscientific one.

In brief, the philosophical problem of demarcation between science and non-science is of great practical importance for a contemporary civilized society and its solution is a task of utmost urgency. While hopefully science’s general boundaries have started to come into view as we’ve surveyed it over the last five chapters, in this final philosophical chapter we will attempt to bring them into sharper focus.

What are the Characteristics of a Scientific Theory?

Traditionally, the problem of demarcation has dealt mainly with determining whether certain theories are scientific or not. That is, in order to answer the more general question of distinguishing science and non-science, philosophers have focused on answering the more specific question of identifying features that distinguish scientific theories from unscientific theories. Thus, they have been concerned with the question:

What are the characteristics of a scientific theory?

This more specific question treats the main distinction between science and non-science as a distinction between two different kinds of theories. Philosophers have therefore been trying to determine what features scientific theories have which unscientific theories lack. Consider, for instance, the following questions:

Why is the theory of evolution scientific and creationism unscientific?

Is the multiverse theory scientific?

Are homeopathic theories pseudoscience?

Our contemporary scientific community answers questions like these on a regular basis, assessing theories and determining whether those theories fall within the limits of science or sit outside those limits. That is, the scientific community seems to have an implicit set of demarcation criteria that it employs to make these decisions.

You may recall that we mentioned demarcation criteria back in chapter 4 as one of the three components of a scientific method, along with acceptance criteria and compatibility criteria. A scientific method consists of all criteria actually employed in theory assessment. Demarcation criteria are a specific subset of those criteria which are employed to assess whether a theory is scientific or not.

So, what are the demarcation criteria that scientists employ to evaluate whether a theory is scientific? First, let’s look at our implicit expectations for what counts as a science and what doesn’t. What are our current demarcation criteria? What criteria does the contemporary scientific community employ to determine which theories are scientific and which are not? Can we discover what they are and make them explicit? We can, but it will take a little bit of work. We’ll discuss a number of different characteristics of scientific theories and see whether those characteristics meet our contemporary implicit demarcation criteria. By considering each of these characteristics individually, one step at a time, hopefully we can refine our initially proposed criteria and build a clearer picture of what our implicit demarcation criteria actually are.

Note that, for the purposes of this exercise, we will focus on attempting to explicate our contemporary demarcation criteria for empirical science (as opposed to formal science). As we have learned in chapter 2, empirical theories consist not merely of analytic propositions (i.e. definitions of terms and everything that follows from them), but also of synthetic propositions (i.e. claims about the world). This is true by definition: a theory is said to be empirical if it contains at least one synthetic proposition. Therefore, empirical theories are not true by definition; they can either be confirmed by our experiences or contradicted by them. So, propositions like “the Moon orbits the Earth at an average distance of 384,400 km”, or “a woodchuck could chuck 500 kg of wood per day”, or “aliens created humanity and manufactured the fossil record to deceive us” are all empirical theories because they could be confirmed or contradicted by experiments and observations. We will therefore aim to find out what our criteria are for determining whether an empirical theory is scientific or not.

First, let us appreciate that not all empirical theories are scientific. Consider the following example:

Theory A: You are currently in Horseheads, New York, USA.

That’s right: we, the authors, are making a claim about you, the reader. Right now. Theory A has all the hallmarks of an empirical theory: It’s not an analytic proposition because it’s not true by definition; depending on your personal circumstances, it might be correct or incorrect. But it’s not based on experience because we, the authors, have no reason to think that you are, in fact, in Horseheads, NY: we’ve never seen you near Hanover Square, and there is no way you’d choose reading your textbook over a day at the Arnot Mall. Theory A is a genuine claim about the world, but it is a claim that is in a sense “cooked-up” and based on no experience whatsoever. Here are two other examples of empirical theories not based on experience:

Theory B: The ancient Romans moved their civilization to an underground location on the far side of the moon.

Theory C: A planet 3 billion light years from Earth also has a company called Netflix.

Therefore, we can safely conclude that not every empirical theory can be said to be scientific. If that is so, then what makes a particular empirical theory scientific? Let’s start out by suggesting something simple.

Suggestion 1: An empirical theory is scientific if it is based on experience.

This seems obvious, or maybe not even worth mentioning. After all, don’t all empirical theories have to be based on experience? Suggestion 1 is based on the fact that we don’t want to consider empirical theories like A, B, and C to be scientific theories. Theories that we can come up with on a whim, grounded in no experience whatsoever, do not strike us as scientific. Rather, we expect that even simple scientific theories must be somehow grounded in our experience of the world.

This basic contemporary criterion that empirical theories be grounded in our experience has deep historical roots but was perhaps most famously articulated by the British philosopher John Locke (1632–1704) in his text An Essay Concerning Human Understanding. In this work, Locke attempted to lay out the limits of human understanding, ultimately espousing a philosophical position known today as empiricism. Empiricism is the belief that all synthetic propositions (and consequently, all empirical theories) are justified by our sensory experiences of the world, i.e. by our experiments and observations. Empiricism stands against the position of apriorism (also often referred to as “rationalism”) – another classical conception that was advocated by the likes of René Descartes and Gottfried Wilhelm Leibniz. According to apriorists, there are at least some fundamental synthetic propositions which are knowable independently of experiments and observations, i.e. a priori (in philosophical discussions, “a priori” means “knowable independently of experience”). It is this idea of a priori synthetic propositions that apriorists accept and empiricists deny. Thus, the criterion that all physical, chemical, biological, sociological, and economic theories must be justified by experiments and observations only can be traced back to empiricism.

But is this basic criterion sufficient to properly demarcate scientific empirical theories from unscientific ones? If an empirical theory is based on experience, does that automatically make it scientific?

Perhaps the main problem with Suggestion 1 can best be illustrated with an example. Consider the contemporary opponents of the use of vaccination, called “anti-vaxxers”. Many anti-vaxxers today accept the following theory:

Theory D: Vaccinations are a major contributing cause of autism.

This theory results from sorting through incredible amounts of medical literature and gathering patient testimonials. Theory D is clearly an empirical theory, and – interestingly – it’s also in some sense based on experience. As such, it seems to satisfy the criterion we came up with in Suggestion 1: Theory D is both empirical and is based on experience.

However, while being based on experience, Theory D also results from wilfully ignoring some of the known data on that topic. A small study by Andrew Wakefield, published in The Lancet in 1998, became infamous around 2000-2002 when the UK media caught hold of it. In that article, the author hypothesized a link between the measles, mumps, and rubella (MMR) vaccine and autism despite a sample of only 12 children. Theory D fails to take into account the sea of evidence suggesting both that no such link (between vaccines and autism) exists, and that vaccines are essential to societal health.

In short, Suggestion 1 allows for theories that have “cherry-picked” their data to be considered scientific, since it allows scientific theories to be based on any arbitrarily selected experiences whatsoever. This doesn’t seem to jibe with our implicit demarcation criteria. Theories like Theory D, while based on experience, aren’t generally considered to be scientific. As such, we need to refine the criterion from Suggestion 1 to see if we can avoid the problems illustrated by the anti-vaxxer Theory D. Consider the following alternative:

Suggestion 2: An empirical theory is considered scientific if it explains all the known facts of its domain.

This new suggestion has several interesting features that are worth highlighting.

First, note that it requires a theory to explain all the known facts of its domain, and not only a selected – “cherry-picked” – subset of the known facts. By ensuring that a scientific empirical theory explains the “known facts,” Suggestion 2 is clearly committed to being “based on experience”. In this it is similar to Suggestion 1. However, Suggestion 2 also stipulates that a theory must be able to explain all of the known facts of its domain precisely to avoid the cherry-picking exemplified by the anti-vaxxer Theory D. As such, Suggestion 2 excludes theories that clearly cherry-pick their evidence and disqualifies such fabricated theories as unscientific. Therefore, theories which choose to ignore great swathes of relevant data, such as decades of research on the causes of autism, can be deemed unscientific by Suggestion 2.

Also, Suggestion 2 explicitly talks about the facts within a certain domain. A domain is an area (field) of scientific study. For instance, life and living processes are the domain of biology, whereas the Earth’s crust, processes in it, and its history are the domain of geology. By specifying that an empirical theory has to explain the known facts of its domain, Suggestion 2 simply imposes more realistic expectations: it doesn’t expect theories to explain all the known facts from all fields of inquiry. In other words, it doesn’t stipulate that, in order to be scientific, a theory should explain everything. For instance, if an empirical theory is about the causes of autism (like Theory D), then the theory should merely account for the known facts regarding the causes of autism, not the known facts concerning black holes, evolution of species, or inflation.

Does Suggestion 2 hold water? Can we say that it correctly explicates the criteria of demarcation currently employed in empirical science? The short answer is: not quite.

When we look at general empirical theories that we unproblematically consider scientific, like the theory of general relativity or the theory of evolution by natural selection, we notice that even they may fail to meet the stringent requirements of Suggestion 2. Indeed, do our best scientific theories explain all the known facts of their respective domains? Can we reasonably claim that the current biological theories explain all the known biological facts? Similarly, can we say that our accepted physical theories explain all the known physical phenomena?

It is easy to see that even our best accepted scientific theories today cannot account for absolutely every known piece of data in their respective domains. It is a known historical fact that scientific theories rarely succeed in explaining all the known phenomena of their domain. In that sense, our currently accepted theories are no exception.

Take, for example, the theory of evolution by natural selection. Our contemporary scientific community clearly considers evolutionary theory to be a proper scientific theory. However, it is generally accepted that evolution itself is a very slow process. For the first 3.5 billion years of life’s history on Earth, organisms evolved from simple single-celled bacterium-like organisms to simple multicellular organisms like sponges. About 500 million years ago, however, there was a major – relatively sudden (on a geological timescale of millions of years) – diversification of life on Earth which scientists call the Cambrian explosion, wherein we see the beginnings of many of the forms of animal life we are familiar with today, such as arthropods, molluscs, chordates, etc. Nowadays, biologists accept both the existence of the Cambrian explosion and the theory of evolution by natural selection. Nevertheless, the theory doesn’t currently explain the phenomenon of the Cambrian explosion. In short, what we are dealing with here is a well-known fact in the domain of biology, which our accepted biological theory doesn’t explain.

What this example demonstrates is that scientific theories do not always explain all the known facts of their domains. Thus, if we were to apply Suggestion 2 in actual scientific practice, we would have to exclude virtually all of the currently accepted scientific empirical theories. This means that Suggestion 2 cannot possibly be the correct explication of our current implicit demarcation criterion. So, let’s make a minor adjustment:

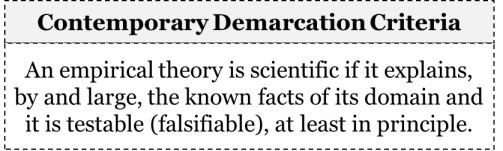

Suggestion 3: An empirical theory is scientific if it explains, by and large, the known facts of its domain.

Like Suggestion 2, this formulation of our contemporary demarcation criterion also ensures that scientific theories are based on experience and can’t merely cherry-pick their data. However, it introduces an important clause – “by and large” – and thus clarifies that an empirical theory simply has to account for the great majority of the known facts of its domain. Note that this new clause is not quantitative: it doesn’t stipulate what percentage of the known facts must be explained. The clause is qualitative, as it requires a theory to explain virtually all but not necessarily all the known facts of its domain. This simple adjustment accomplishes an important task of imposing more realistic requirements. Specifically, unlike Suggestion 2, Suggestion 3 avoids excluding theories like the theory of evolution from science.

How well does Suggestion 3 square with the actual practice of science today? Does it come close to correctly explicating the actual demarcation criteria employed nowadays in empirical science?

To the best of our knowledge, Suggestion 3 seems to be a necessary condition for an empirical theory to be considered scientific today. That is, any theory that the contemporary scientific community deems scientific must explain, by and large, the known facts of its domain. Yet, while the requirement to explain most facts of a certain domain seems to be a necessary condition for being considered a scientific theory today, it is not a sufficient condition. Indeed, while every scientific theory today seems to satisfy the criterion outlined in Suggestion 3, that criterion on its own doesn’t seem to be sufficient to demarcate scientific theories from unscientific theories. The key problem here is that some famous unscientific theories also manage to meet this criterion.

Take, for example, the theory of astrology. Among many other things, astrology claims that processes on the Earth are at least partially due to the influence of the stars and planets. Specifically, astrology suggests that a person’s personality and traits depend crucially on the specific arrangement of planets at the moment of their birth. As the existence of such a connection is far from trivial, astrology can be said to contain synthetic propositions, by virtue of which it can be considered an empirical theory. Now, it is clear that astrology is notoriously successful at explaining the known facts of its domain. If the Sun was in the constellation of Taurus at the moment of a person’s birth, and this person happened to be even somewhat persistent, then this would be in perfect accord with what astrology says about Tauruses. But even if this person was not persistent at all, a trained astrologer could still explain the person’s personality traits by referring to the subtle influences of other celestial bodies. No matter how much a person’s personality diverges from the description of their “sign”, astrology somehow always finds a way to explain it. As such, astrology can be considered an empirical theory that explains, by and large, the known facts of its domain, as per Suggestion 3.

This means that while Suggestion 3 seems to faithfully explicate a necessary part of our contemporary demarcation criteria, there must be at least one other necessary condition. It should be a condition that the theory of evolution and other scientific theories satisfy, while astrology and other unscientific theories do not. What can this additional condition be?

One idea that comes to mind is that of testability. Indeed, it seems customary in contemporary empirical science to expect a theory to be testable. Thus, it seems that in addition to explaining, by and large, the known facts of their domains, scientific empirical theories are also expected to be testable, at least in principle.

It is important to appreciate that what matters here is not whether we as a scientific community currently have the technical means and financial resources to test the theory, but whether the theory is testable in principle. In other words, we seem to require that there be a conceivable way of testing a theory, regardless of whether it is or isn’t possible to conduct that testing in practice. Suppose there is a theory that makes some bold claims about the structure and mechanism of a certain subatomic process. Suppose also that the only way of testing the theory that we could think of is by constructing a gigantic particle accelerator the size of the solar system. Clearly, we are not in a position to actually construct such an enormous accelerator for obvious technological and financial reasons. Such issues actually arise in string theory, a pursued attempt to combine quantum mechanics with general relativity theory into a single consistent theory. They are a matter of strong controversy among physicists and philosophers. What seems to matter to scientists is merely the ability of a theory to be tested in principle. In other words, even if we have no way of testing a theory currently, we should at least be able to conceive of a means of testing it. If there is no conceivable way of comparing the predictions of a theory to the results of experiments or observations, then it would be considered untestable, and therefore unscientific.

But what exactly does the requirement of testability imply? How should testability itself be understood? In the philosophy of science, there have been many attempts to clarify the notion of testability. Two opposing notions of testability are particularly notable – verifiability and falsifiability. Let’s consider these in turn.

Among others, Rudolf Carnap suggested that an empirical theory is scientific if it has the possibility of being verified in experiments and observations. For Carnap, a theory was considered verified if predictions of the theory could be confirmed through experience. Take the simple empirical theory:

Theory E: The light in my refrigerator turns off when I close the door.

If I set up a video camera inside the fridge so that I could see that the light does, indeed, turn off whenever I close the door, Carnap would consider the theory to be verified by my experiment. According to Carnap, every scientific theory is like this: we can, in principle, find a way to test and confirm its predictions. This position is called verificationism. According to verificationism, an empirical theory is scientific if it is possible to confirm (verify) the theory through experiments and observations.

Alternatively, Karl Popper suggested that an empirical theory is scientific if it has the possibility of being falsified by experiments and observations. Whereas Carnap focused on the ability of theories to become verified by experience, Popper held that what truly makes a theory scientific is its potential ability to be disconfirmed by experiments and observations. Science, according to Popper, is all about bold conjectures which are tested and tentatively accepted until they are falsified by counterexamples. The ability to withstand any conceivable test, for Popper, is not a virtue but a vice that characterizes all unscientific theories. What makes Theory E scientific, for Popper, is the fact that we can imagine the possibility that what I see on the video camera when I close my fridge might not match my theory. If the light, in fact, does not turn off when I close the door a few times, then Theory E would be considered falsified. What matters here is not whether a theory has or has not actually been falsified, or even whether we have the technical means to falsify the theory, but whether its falsification is conceivable, i.e. whether there can, in principle, be an observational outcome that would falsify the theory. According to falsificationism, a theory is scientific if it can conceivably be shown to conflict with the results of experiments and observations.

Falsifiability and verifiability are two distinct interpretations of what it means to be testable. While both verifiability and falsifiability have their issues, the requirement of falsifiability seems to be closer to the current expectations of empirical scientists. Let’s look briefly at the theory of young-Earth creationism to illustrate why.

Young-Earth creationists hold that the Earth, and all life on it, was directly created by God less than 10,000 years ago. While fossils and the layers of the Earth’s crust appear to be millions or billions of years old according to today’s accepted scientific theories, young-Earth creationists believe that they are not. In particular, young-Earth creationists subscribe to:

Theory F: Fossils and rocks were created by God within the last 10,000 years but were made by God to appear like they are over 10,000 years old.

Now, is this theory testable? The answer depends on whether we understand testability as verifiability or falsifiability. Let’s first see if Theory F is verifiable.

By the standards of verificationism, Theory F is verifiable, since it can be tested and confirmed by the data from experiments and/or observations. This is so because any fossil, rock, or core sample that is measured to be older than 10,000 years will actually confirm the theory, since Theory F states that such objects were created to seem that way. Every ancient object further confirms Theory F, and – from the perspective of verificationism – these confirmations would be evidence of the theory’s testability. Therefore, if we were to apply the requirement of verifiability, young-Earth creationism would likely turn out scientific.

In contrast, by the standards of falsificationism, Theory F is not falsifiable; we can try and test it as much as we please, but we can never show that Theory F contradicts the results of experiments and observations. This is so because even if we were to find a trillion-year-old rock, it would not in any way contradict Theory F, since proponents of Theory F would simply respond that God made the rock to seem one trillion years old. Theory F is formulated in such a way that no new data, no new evidence, could ever possibly contradict it. As such, from the perspective of falsificationism, Theory F is untestable and, thus, unscientific.
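
The contrast between the two readings of testability can be made concrete with a small sketch. It is only an illustration under simplifying assumptions: we pretend that the conceivable outcomes of dating a rock form a short list of measured ages, and we model each theory as a function that says whether a given outcome is compatible with it. The names `CONCEIVABLE_AGES`, `plain_young_earth`, `theory_f`, `is_falsifiable`, and `confirming_outcomes` are hypothetical and introduced purely for this example; `plain_young_earth` is a comparison claim of our own, not one discussed above.

```python
from typing import Callable, Iterable, List

# Hypothetical stand-in for "every conceivable measurement outcome":
# a handful of measured rock ages, in years. A real test space is far richer.
CONCEIVABLE_AGES = [5_000, 9_999, 1_000_000, 4_500_000_000, 10**12]

# A theory is modelled as: measured age -> is this outcome compatible with it?
Theory = Callable[[int], bool]


def plain_young_earth(measured_age: int) -> bool:
    """Comparison claim (our assumption): 'every rock measures under 10,000 years'.
    It forbids any older reading."""
    return measured_age < 10_000


def theory_f(measured_age: int) -> bool:
    """Theory F: rocks are young but were made to appear old.
    Any measured age is compatible, so the theory forbids nothing."""
    return True


def is_falsifiable(theory: Theory, outcomes: Iterable[int]) -> bool:
    """Falsifiable if at least one conceivable outcome would contradict the theory."""
    return any(not theory(age) for age in outcomes)


def confirming_outcomes(theory: Theory, outcomes: Iterable[int]) -> List[int]:
    """Outcomes that a verificationist reading would count as confirmations."""
    return [age for age in outcomes if theory(age)]
```

On this toy model every listed outcome counts as a confirmation of Theory F, yet `is_falsifiable(theory_f, CONCEIVABLE_AGES)` evaluates to `False`, which mirrors the point made above. The comparison claim is falsifiable because it rules some outcomes out; whether a theory has actually been falsified is a separate matter.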

Understanding a theory’s testability as its falsifiability seems to be the best way to explicate this second condition of our contemporary demarcation criteria: for the contemporary scientific community, to say that a theory is testable is to say that it’s falsifiable, i.e. that it can, in principle, contradict the results of experiments and observations. With this understanding of testability clarified, it seems we have our second necessary condition for a theory to be considered scientific. To sum up, in our contemporary empirical science, we seem to consider a theory scientific if it explains, by and large, the known facts of its domain, and it is testable (falsifiable), at least in principle.
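
As a rough sketch, this two-part criterion can be written as a conjunction of two conditions. The judgments fed into it are entered by hand and mostly restate verdicts reached in the discussion so far; “by and large” is kept as a yes/no judgment because the clause is qualitative rather than a percentage. The `TheoryAssessment` class and `is_scientific` function are hypothetical names used only for illustration, and the falsifiability value given for Theory D is our own assumption, since the discussion faults it only for cherry-picking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TheoryAssessment:
    name: str
    explains_known_facts_by_and_large: bool  # condition from Suggestion 3
    falsifiable_in_principle: bool           # testability read as falsifiability


def is_scientific(t: TheoryAssessment) -> bool:
    """Conjunction of the two conditions in the first-approximation criterion."""
    return t.explains_known_facts_by_and_large and t.falsifiable_in_principle


# Hand-entered verdicts. Theory D's falsifiability is an assumption made here.
assessments = [
    TheoryAssessment("Evolution by natural selection", True, True),
    TheoryAssessment("Theory D (vaccines cause autism)", False, True),
    TheoryAssessment("Astrology", True, False),
]

verdicts = {t.name: is_scientific(t) for t in assessments}
```

With these inputs only the theory of evolution comes out as scientific: Theory D fails the first condition and astrology fails the second, which is why neither condition is sufficient on its own.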

We began this exercise as an attempt to explicate our contemporary criteria for demarcation, and we’ve done a lot of work to distil the contemporary demarcation criteria above. It is important to note that this is merely our attempt at explicating the contemporary demarcation criteria. Just as with any other attempt to explicate a community’s method, our attempt may or may not be successful. Since we were trying to make explicit those implicit criteria employed to demarcate science from non-science, even this two-part criterion might still need to be refined further. It is quite possible that the actual demarcation criteria employed by empirical scientists are much more nuanced and contain many additional clauses and sub-clauses. That being said, we can take our explication as an acceptable first approximation of the contemporary demarcation criteria employed in empirical science.

This brings us to one of the central questions of this chapter. Suppose, for the sake of argument, that the contemporary demarcation criteria are along the lines of our explication above, i.e. that our contemporary empirical science indeed expects a scientific theory to explain, by and large, the known facts of its domain, and to be falsifiable in principle. Now, can we legitimately claim that these same demarcation criteria have been employed in all time periods? That is, could these criteria be the universal and transhistorical criteria of demarcation between scientific and unscientific theories? More generally:

Are there universal and transhistorical criteria for demarcating scientific theories from unscientific theories?

The short answer to this question is no. There are both theoretical and historical reasons to believe that the criteria that scientists employ to demarcate scientific from unscientific theories are neither fixed nor universal. Both the history of science and the laws of scientific change suggest that the criteria of demarcation can differ drastically across time periods and fields of inquiry. Let’s consider the historical and theoretical reasons in turn.

For our theoretical reason, let’s look at the laws of scientific change. Recall the third law of scientific change, the law of method employment, which states that newly employed methods are the deductive consequences of some subset of other accepted theories and employed methods. As such, when theories change, methods change with them. This holds equally for the criteria of acceptance, criteria of compatibility, and criteria of demarcation. Indeed, since the demarcation criteria are part of the method, demarcation criteria change in the same way that all other criteria do: they become employed when they follow deductively from accepted theories and other employed methods. As such, the demarcation criteria are not immune to change, and therefore our contemporary demarcation criteria – whatever they are – cannot be universal or unchangeable.

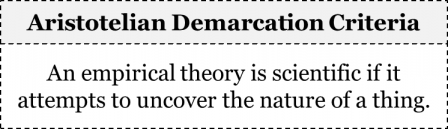

This is also confirmed by historical examples. The historical reason to believe that our contemporary demarcation criteria are neither universal nor unchangeable is that there have been other demarcation criteria employed in the past. Consider, for instance, the criteria of demarcation that were employed by many Aristotelian-Medieval communities. As we already know, one of the essential elements of the Aristotelian-Medieval mosaic was the idea that all things not crafted by humans have a nature, an indispensable quality that makes a thing what it is. It was also accepted that an experienced person can grasp this nature through intuition schooled by experience. We’ve already seen in chapter 4 how the Aristotelian-Medieval method of intuition was a deductive consequence of these two accepted ideas. According to their acceptance criteria, a theory was expected to successfully grasp the nature of a thing in order to become accepted. In their demarcation criteria, they stipulated that a theory should at least attempt to grasp the nature of a thing under study, regardless of whether it actually succeeded in doing so. We can therefore explicate the Aristotelian-Medieval demarcation criterion as follows: a theory is considered scientific if it attempts to grasp the nature of the thing under study.

Thus, both natural philosophy and natural history were thought to be scientific: while natural philosophy was considered scientific because it attempted to uncover the nature of physical reality, natural history was scientific for attempting to uncover the nature of each creature in the world. Mechanics, however, was not considered scientific precisely because it dealt with things crafted by humans. As opposed to natural things, artificial things were thought to have no intrinsic nature, but were created by a craftsman for the sake of something else. Clocks, for instance, don’t exist for their own sake, but for the sake of timekeeping. Similarly, ships don’t have any nature, but are built to carry people from place to place. Thus, according to the Aristotelians, the study of these artefacts, mechanics, is not scientific, since there is no nature for it to grasp. We will revisit this distinction between artificial and natural in chapter 7.

It should be clear by now that the same theory could be considered scientific in one mosaic and unscientific in a different mosaic depending on the respective demarcation criteria employed in the two mosaics. For instance, astrology satisfied the Aristotelian-Medieval demarcation criteria, as it clearly attempted to grasp the nature of celestial bodies by studying their effects on the terrestrial realm. It was therefore considered scientific. As we know, astrology is not currently considered scientific since it does not satisfy our current demarcation criteria. What this tells us is that demarcation criteria change through time.

Not only do they change through time, but they can also differ from one field of inquiry to another. For instance, while some fields seem to take the requirement of falsifiability seriously, there are other fields where the very notion of empirical falsification is problematic. This applies not only to formal sciences, such as logic and mathematics, but also to some fields of the social sciences and humanities.

In short, we have both theoretical and historical reasons to believe that there can be no universal demarcation criteria. Because demarcation criteria are part of the method of the time, theories are appraised by different scientific communities at different periods of history using different criteria, and it follows that their appraisals of whether theories are scientific or not may differ.

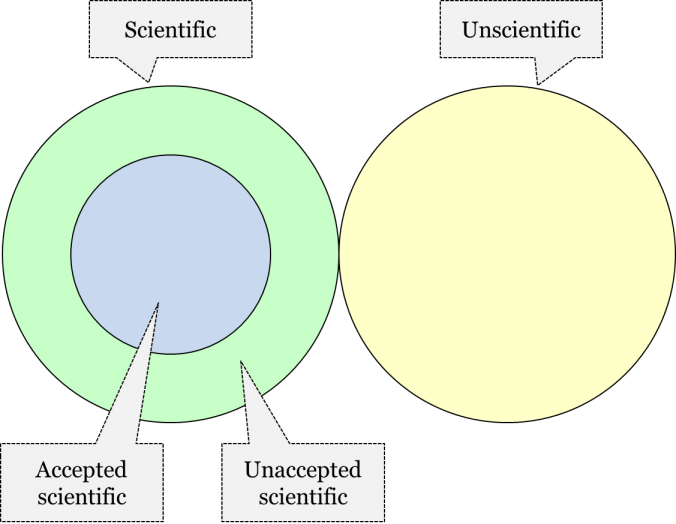

Scientific vs. Unscientific and Accepted vs. Unaccepted

Before we proceed, it is important to restate that demarcation criteria and acceptance criteria are not the same thing, as they play different roles. While demarcation criteria are employed to determine whether a theory is scientific or not, acceptance criteria are employed to determine whether a theory ought to be accepted as the best available description of its object. Importantly, therefore, it is possible for a community to consider a theory to be scientific and to nevertheless leave the theory unaccepted. Here is a Venn diagram illustrating the relations between the categories of unscientific, scientific, accepted, and unaccepted:

A few examples will help to clarify the distinction. General relativity is considered to be both scientific and accepted, because it passed the strong test of predicting the degree to which starlight would be bent by the gravitational field of the sun, as well as other subsequent tests. In contrast, string theory is considered by the contemporary scientific community to be a scientific theory, but it is not yet accepted as the best available physical theory. Alchemy had a status similar to that of string theory in the Aristotelian-Medieval mosaic: the Aristotelian-Medieval community never accepted alchemy but considered it to be a legitimate science.

Changing the Question: From Theories to Changes

So, to recap, how do we tell if an empirical theory is a scientific theory or not? We do so by consulting the demarcation criteria of that particular community, at that particular time in history. If a theory satisfies the demarcation criteria employed by the community, it is considered scientific; if it doesn’t satisfy the demarcation criteria, it is considered unscientific. What about pseudoscience? A theory is considered pseudoscientific if it is assessed to be unscientific by the scientific community’s employed demarcation criteria but is nevertheless presented as though it were scientific. Just as with any other method, all of these demarcation criteria are changeable; they change in accord with the third law of scientific change.
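The recap above can be read as a simple decision procedure, and a small sketch may make its community-relative character easier to see. The Python below is only an illustration under stated assumptions: the class `Theory`, its boolean fields, and the function names are hypothetical, and the attribute values assigned to astrology and to Theory F are simplifications chosen for the example rather than historical findings.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Theory:
    """A theory reduced to a few yes/no features (a deliberate simplification)."""
    name: str
    explains_known_facts: bool
    falsifiable_in_principle: bool
    attempts_to_grasp_nature: bool
    presented_as_scientific: bool


# A demarcation criterion is modelled as a function from a theory to True/False.
Criterion = Callable[[Theory], bool]


def contemporary_criterion(t: Theory) -> bool:
    # Our first-approximation explication: explains the known facts AND is falsifiable.
    return t.explains_known_facts and t.falsifiable_in_principle


def aristotelian_medieval_criterion(t: Theory) -> bool:
    # Explicated criterion: the theory attempts to grasp the nature of its object.
    return t.attempts_to_grasp_nature


def status(t: Theory, criterion: Criterion) -> str:
    """Classify a theory relative to the criterion employed by a given community."""
    if criterion(t):
        return "scientific"
    # Pseudoscience is the subspecies of non-science that is presented as science.
    if t.presented_as_scientific:
        return "pseudoscientific"
    return "unscientific"


# Attribute values below are illustrative assumptions, not established facts.
astrology = Theory("astrology", explains_known_facts=False,
                   falsifiable_in_principle=True,
                   attempts_to_grasp_nature=True,
                   presented_as_scientific=False)
theory_f = Theory("Theory F", explains_known_facts=True,
                  falsifiable_in_principle=False,
                  attempts_to_grasp_nature=False,
                  presented_as_scientific=True)

if __name__ == "__main__":
    for theory in (astrology, theory_f):
        for label, crit in (("Aristotelian-Medieval", aristotelian_medieval_criterion),
                            ("contemporary", contemporary_criterion)):
            print(f"{theory.name} under {label} criteria: {status(theory, crit)}")
```

The point of the sketch is that `status` takes the criterion as an argument: the same theory can receive different verdicts depending on which community's criterion is passed in, and nothing in the procedure singles out one criterion as the universal one.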

Now, it is clear that if we look for transhistorical and universal criteria for demarcating theories, we most likely won’t find any, since these criteria – just as any other method – change through time. Thus, if we are to draw a meaningful demarcation line between science and non-science, we need to change our focus from individual theories to the process of scientific change itself.

As we mentioned earlier, most of the discussion of the demarcation problem in the philosophical literature has centred on theories, and we have just summarized some aspects of that grand debate. However, there have been some philosophers who suggested that the real distinction between science and non-science is to be located in the way science modifies its theories (and methods). Imre Lakatos, a Hungarian-born British philosopher of science, famously argued that it is the transitions from one scientific theory to another that can be qualified as scientific or unscientific, not individual theories themselves. According to Lakatos, what we evaluate as scientific are the steps in the process of scientific change, such as the transition from Descartes’ theory to that of Newton in France ca. 1740, or from that of Newton to that of Einstein ca. 1920. This Lakatosian idea can be extended to apply also to the methods of theory evaluation. How exactly can this be accomplished?

Once we switch our focus from evaluating the scientific status of individual scientific theories to evaluating the transitions in a mosaic, we can pose a number of interesting questions: Can the acceptance of a new theory, or the employment of a new method, happen in a way that strikes us as unscientific? What are the features of scientific changes in a mosaic? What are examples of unscientific changes? In the most general form, our question becomes:

What are the characteristics of the process of scientific change?

So how can we evaluate whether a certain transition in a mosaic was indeed scientific? Let us appreciate that while the criteria of demarcation of scientific theories change through time, the mechanism underlying those changes is universal and transhistorical. As we learned in chapter 4, the laws of scientific change attempt to describe and explain that universal and transhistorical mechanism, to capture the general patterns exhibited by the process of scientific change. Thus, we can look at any episode of scientific change and, using the laws and our knowledge of the intricacies of the respective mosaic at that historical period, determine whether that change was scientific or not. How exactly can we do this?

Violations of the Laws of Scientific Change

We suggest that a modification of the mosaic is scientific if that modification takes place in accord with the laws of scientific change, and unscientific if it violates at least one of the laws. If, for instance, a new theory is accepted in accord with the second law, that change would be considered a scientific change. If, however, a theory’s acceptance happened in violation of the second law, then the acceptance itself was an unscientific step. The same applies to both actual and potential changes in the mosaic.

But how can the laws of scientific change be violated? Indeed, the laws of scientific change are meant to be descriptions of general patterns at the organizational level of the scientific mosaic, in the same way that any chemical law is a description of general patterns that hold at the atomic and molecular levels. What, then, do we mean by a “violation” of the laws of scientific change?

When scientists talk about “the laws of nature,” they are generally referring to the regular, law-like patterns that emerge at any specific level of organization, be it the physical level, the chemical, the biological, the psychological, or the social. As you might recall, we made the claim that the laws of scientific change describe the general, law-like patterns that emerge at the level of the scientific mosaic, one level “above” that of the social. The scientific community generally accepts that at the most basic, fundamental level (i.e. that of physical processes) the laws of nature are inviolable. That is, all fundamental physical processes always obey, without exception, the same set of physical laws, and nothing can ever prevent them from doing so. However, for any “higher” level law – such as those of biology, psychology, economics, or sociology – there’s always a chance that they can be violated, because the regularities they describe can have exceptions, usually due to the interference of another (usually “lower”) level process. Laws at levels above the fundamental physical level are therefore considered local: they hold only under very specific conditions.

Consider an example. The dinosaurs dominated the global biosphere for well over a hundred million years, all the while slowly adapting, developing, and evolving according to the general mechanism of evolution… that is, until one large rock (an asteroid) collided with another, larger rock (planet Earth) about 66 million years ago. Suddenly, the very conditions for dinosaur evolution were wiped away. We could say that the slow gradual process of evolutionary change through natural selection was interrupted and replaced by sudden and extraordinary catastrophic change. Alternatively, we could say that the “laws” of evolution were “violated”. This violation only demonstrates that the general patterns of biological evolution emerge and hold only under very specific physical and chemical conditions. This makes the laws of biology, as well as any other “higher” level laws, local.

A similar hypothetical example can be used to illustrate what a violation of the first law of scientific change, scientific inertia, might consist of. As we know, the law states that any element in the mosaic remains in the mosaic unless it is replaced by other elements. Let’s imagine that, sometime before the creation of the Internet, every palaeontologist in the world had gathered together at DinoCon to discuss the extinction of the dinosaurs. In their enthusiasm, this scientific community brought all of their books and all of their data with them. Then, in an ironic twist of fate, a much smaller asteroid struck the DinoCon convention centre, wiping all of the world’s palaeontologists, and all their data and books, off the face of the Earth. What would happen to their theories? It would seem that palaeontological theories would simply be erased from the mosaic and would be replaced by nothing at all. But this removal of palaeontological theories is a clear violation of the first law of scientific change. This sudden loss of theories would intuitively strike us as an unscientific change in the mosaic even if we didn’t know anything about the first law. However, with the first law at hand, we can now appreciate exactly why it would strike us as an unscientific change.

How might the other laws of scientific change be violated? Let us consider some hypothetical scenarios illustrating potential violations of the remaining three laws.

The second law can be violated if a new theory is accepted without satisfying the acceptance criteria employed at the time. Let’s consider a theory like that of sound healing, which proposes that the sound vibrations of certain percussive instruments, like gongs and bells, can bring health benefits by rearranging the ions of one’s cell membranes. Today, these claims would have to be assessed by our current acceptance criterion, namely the HD method, and would thus require a confirmed novel prediction, since the relation of sound vibrations to cell ion arrangement is a new causal relation. Suppose that a brutal dictator with a penchant for torturing and executing anyone who disagreed with his beliefs rose to power, and that among his most treasured beliefs was a belief in the efficacy of sound healing. If the scientific community were to suddenly accept sound healing with no confirmed novel predictions whatsoever, due to such an influence, we would say that this change had violated the second law. Such a transition – one that did not satisfy the requirements of the employed method due to the action of an unscientific influence, such as fear of the dictator – would clearly strike us as an unscientific step.

The third law can be violated by employing a method that simply does not deductively follow from our other accepted theories and employed methods. As we’ve seen in chapter 4, the method currently employed in drug testing is the so-called double-blind trial method. The method is currently employed, since it is a deductive consequence of our accepted theories of unaccounted effects, the placebo effect, and experimenter’s bias (as well as a number of other relevant theories). Scientists employ this method on a daily basis to determine whether a certain drug is therapeutically effective. Now, imagine a hypothetical situation in which a biomedical community suddenly begins relying solely on patient testimonials instead of performing double-blind trials. Also imagine that they decide to rely on patient testimonials while still accepting the existence of the placebo effect. Such a drastic transition to a new employed method strikes us as an unscientific step, and rightly so: if scientists were to employ a new method which is not a deductive consequence of their accepted theories, it would violate the third law of scientific change and would, thus, be an unscientific step.

Finally, the zeroth law can be violated if a pair of theories doesn’t satisfy the compatibility criteria of the mosaic and yet manages to simultaneously persist within a mosaic. It’s important to realize that, whenever a new theory is accepted, the conjunction of the zeroth and first laws dictates that any incompatible theories be immediately rejected. For example, suppose a community accepts the theory “drug X is the single best drug for treating headaches”. If, the next day, the community accepts a new theory, “drug Y is the single best drug for treating headaches”, the drug X theory will be immediately rejected, thanks to the zeroth law. But if two incompatible theories were simultaneously accepted without bringing about any mosaic splits, then the zeroth law would clearly be violated. Say a small community accepts the theory “Zhi’s absolute favourite colour is blue”, and their compatibility criterion says “the same person can’t have two absolute favourite colours”. Then, a day later – in accord with the second law – they accept “Zhi’s absolute favourite colour is yellow” but, for whatever reason, they fail to reject “Zhi’s absolute favourite colour is blue”. This hypothetical scenario – however absurd – would be a violation of the zeroth law, since it involves a persistent incompatibility between two theories in the same mosaic. We would, of course, require an explanation invoking non-scientific influences to account for the violation of the law.

Hopefully, even without knowing the laws of scientific change, many of these examples would have seemed unscientific, or at least odd. But knowing the laws of scientific change, and how they might be violated, helps us to articulate exactly what it is about each of these changes that makes it unscientific. In short, a change in a mosaic strikes us as unscientific if it violates one or more of the laws of scientific change. A change in the mosaic can be considered pseudoscientific if, while violating at least one of the laws, it is also presented as though it has followed them all.
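The classification just summarized can also be written out as a short sketch. It is a toy model under stated assumptions: the names `Law`, `Change`, and `classify_change` are hypothetical, and deciding which laws a given historical change actually violated is taken as an input here, since that judgment requires knowledge of the respective mosaic and cannot be computed.

```python
from dataclasses import dataclass
from enum import Enum


class Law(Enum):
    """The four laws of scientific change, identified only by their number."""
    ZEROTH = 0
    FIRST = 1
    SECOND = 2
    THIRD = 3


@dataclass(frozen=True)
class Change:
    """A change in a mosaic plus the laws it is judged to violate (an input)."""
    description: str
    violated_laws: frozenset = frozenset()
    presented_as_lawful: bool = False  # presented as if it followed all the laws


def classify_change(change: Change) -> str:
    """Scientific if no law is violated; otherwise unscientific or pseudoscientific."""
    if not change.violated_laws:
        return "scientific"
    if change.presented_as_lawful:
        return "pseudoscientific"
    return "unscientific"


# The hypothetical scenarios discussed in this section.
dinocon = Change("palaeontological theories vanish without replacement",
                 frozenset({Law.FIRST}))
sound_healing = Change("sound healing accepted out of fear of a dictator",
                       frozenset({Law.SECOND}))
testimonials = Change("patient testimonials replace double-blind trials",
                      frozenset({Law.THIRD}))
favourite_colour = Change("two incompatible theories about Zhi both persist",
                          frozenset({Law.ZEROTH}))
lawful = Change("a theory is accepted in accord with the second law")

if __name__ == "__main__":
    for c in (dinocon, sound_healing, testimonials, favourite_colour, lawful):
        print(f"{c.description}: {classify_change(c)}")
```

Note that the sketch treats a single violated law as sufficient for a change to count as unscientific, mirroring the "one or more" wording above.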

Summary

There are two distinct questions of demarcation. While discussions concerning the demarcation of science and non-science have typically centred on figuring out what makes theories scientific, we have suggested that the most fruitful way forward is to switch the discussion from theories to changes in a mosaic. As such, the problem of demarcation can be specified by two distinct questions. The first question concerns scientific theories:

What are the characteristics of a scientific theory?

As we have explained, this question doesn’t have a universal and transhistorical answer, as the criteria of demarcation are changeable. The second question, the one we suggest focusing on, concerns changes in a mosaic:

What are the characteristics of the process of scientific change?

We have argued that in order to determine whether a certain change was or wasn’t scientific, we should check to see if it violated the laws of scientific change.

Now that we have outlined some of the central problems in the philosophy of science, it is time to proceed to the history of science and consider snapshots of some of the major scientific worldviews.