6.1 Teaching About (the Risks of) AI

This section explores ideas and activities for building student AI literacy: teaching about AI, including risks and concerns about AI use in their learning, work, and lives.

Teaching About AI

Begin by reflecting on what aspects of AI matter most in your course.

Reflection questions for teaching about AI

- What prior knowledge and experience do students have (or need to have) with college-licensed tools?

- What do students know about prompt engineering and how AI works?

- What questions might students ask?

- What are the standards or ground rules you expect for using AI? What is the permitted range of use?

- What do students know about academic integrity and AI use?

- What are the most important risks and concerns of using AI for your students? For your industry?

Ask Students About their AI Knowledge and Experiences

Invite students to share their prior knowledge of using assistive or generative AI. This information can give you a sense of what students know (and do not know) about AI.

- Why might you want to use AI as a student?

- When has AI helped you?

- When has AI not helped you?

- When might it be a good idea to use AI? When is it a bad idea?

You may also wish to invite students to ask questions they have about AI. An anonymous question collection tool may lead to more submissions, because it reduces the risk that students feel you are trying to catch them using AI.

Download This!

Download and customize these AI literacy starter slide decks to bring the conversation about AI into the classroom. The topics include:

- Introduction to Generative AI Starter Deck: This deck provides a basic overview of generative AI, including videos, information, and activities for reflection on AI use for learning.

- Introduction to GenAI Literacy Skills Starter Deck: This deck aims to build foundational AI literacy skills, including basic prompting skills and risks and considerations of AI use.

- Introduction to Responsible and Safe Use of AI Starter Deck: This deck focuses on the ethical use of AI in academic work, emphasizing the importance of transparency and academic integrity.

Use an Explainer Video

Use a simple video to start a class discussion about how AI works and its capabilities and risks.

See the Faculty Learning Hub post, Videos to Teach and Talk About AI. Choose one, screen as a class, then provide comprehension and/or discussion questions to follow.

Teach About the “AI Sandwich”

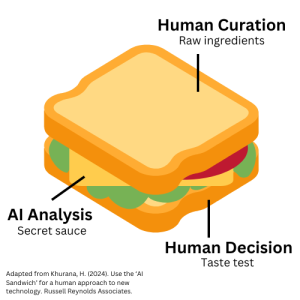

The article “Use the ‘AI Sandwich’ for a Human Approach to New Technology” emphasizes integrating AI with human insight and decision-making so that work with AI stays human-centred (Russell Reynolds Associates, 2023). Emphasizing the importance of humans driving AI use helps to avoid what Perkins, Roe, and Furze (2024) describe as “the illusion of finality” (p. 12), the tendency to accept AI outputs without any critique of their completeness or authority.

The AI sandwich suggests starting with human insights, such as establishing the criteria for a quality output. AI is then used for efficient creation or analysis. Finally, human judgment is used to finalize decisions: to accept, revise, or reject the AI output. This approach ensures that AI enhances rather than replaces human creativity and critical thinking.

Try a Large Language Model Tutorial

See the LLM Tutorial from the AI Pedagogy Project, which provides a guided demonstration of ChatGPT.

Try going through this seven-step tutorial with the class to learn many dos and don’ts of using AI, debunk common myths, and be prepared to use large language models responsibly.

Note that this tutorial explores OpenAI’s ChatGPT. While the information in the tutorial also applies to Conestoga’s LLM, Copilot, students are not encouraged to use ChatGPT for school work. See the AI Evolving Guidelines for details.

AI As Teammate

Try This!

When you ask AI how to ask a question, it can teach you how to use it better. Watch How Stanford Teaches AI-Powered Creativity in Just 13 Minutes by Jeremy Utley EO (2025) with your students. Try these prompts with your students, then reflect on the AI responses.

- Don’t ask AI, let it ask you

  - “I would like your help and a consult on the topic of…”

  - “As an AI expert would you please ask me questions one at a time about …”

  - “As an AI, what are some obvious and non-obvious recommendations you can make based on…”

- Do not ‘use’ AI, treat it as a teammate

  - “What are questions I should ask?”

  - “What do you need to know from me to get the best response?”

  - “What could we practice before I engage in this activity, and what feedback can you give me?”

- Go beyond ‘good enough’ ideas (‘satisficing’)

- What is the difference between “using” AI and “working with” AI?

Share “Do’s and Don’ts” Guidelines

See sample dos and don’ts guidelines below for permitted AI use for learning tasks and/or assignments. Customize these statements to explain your expectations during class. Pair them with written guidelines that are available at the start of class. Or, consider co-creating your own “do’s and don’ts” guidelines together as a class!

Explain the Benefits and Limitations of Using AI

Consider sharing with students some of the benefits and limitations of using AI for learning in your course. You may even ask students what they believe about how generative AI can help or harm their learning!

| Some Benefits of AI Use for Learning | Some Limitations of AI Use for Learning |

| | |

A table with some benefits and limitations of using AI in assignments

Learn more

For more ideas on talking to students about AI and course assignments, see section 4.4, Explain AI Use for Assignments.

Also, see this student-friendly resource, Elon University’s AI-U: A student guide to navigating college in the artificial intelligence era 1.0 (2024).

Teaching About the Risks of AI Use

AI use has many pitfalls, or hidden dangers, that students should know about. The risks of AI use are broad (economic, environmental, social) and narrow (academic integrity violations, lack of skills development, surface learning).

Reflection questions for teaching about the risks of AI

What risks and “pitfalls” (hidden risks) matter most

- for my course?

- for my industry or field?

- for what I care about?

- for what students care about?

How do we help students avoid AI risks and pitfalls? See 8 ideas and associated activities below.

1. Provide clear and transparent AI Use Guidelines

Give a verbal and written set of clear AI use guidelines when the course starts and then again when introducing specific assignments. This strategy assists students who need clarity about appropriate and inappropriate use, including what constitutes an academic integrity violation. It can also help adult learners understand the “why” of the permitted or restricted level of AI use.

You can also create an accompanying video to support student understanding. This option may be a good fit for asynchronous or hybrid courses. Select this link to view an example.

2. Engage students in active learning to recognize AI’s limitations, myths, and risks

The Downsides of AI: IMPACT RISK

The IMPACT RISK acronym was developed by John Ippolito (2024). It is a mnemonic for remembering the potential downsides of generative AI, covering the broad economic, environmental, and social risks associated with AI. You may wish to focus on ideas that relate specifically to your course or industry.

See the IMPACT/RISK website for an explainer video and infographic (CC 1.0 license).

The Downsides of AI in Learning and Research: LEARN AI

The LEARN acronym was developed by Fatima Zohra (2024). It focuses on elements of risk and potential harm with respect to the use or misuse of AI in the context of higher education.

Use the accordion below to learn more about the potential problems of using AI for learning and for research, as well as some suggested solutions to address the downsides.

Explore the “Misinformation, Security and More” Section of the GenAI Toolkit

AI can produce mistakes, biased or stereotyped outputs, and omissions. AI use also puts information and data privacy at risk. The GenAI Toolkit for Students contains a section with information, examples, and activities relating to the risks of GenAI. Consider exploring with your students some of the activities provided:

- H5P Some Considerations of the Harms of LLMs

- Examples of AI hallucinations

- Examples of bias and discrimination

- “AI Can Make Mistakes Too” Video

- The Most Likely Machine algorithm activity

Exploring Bias, Stereotypes, and Differences in AI Outputs

Many myths and misunderstandings exist about how AI works with respect to saving time, creativity, and learning (Salvaggio, 2024). By analyzing AI outputs and comparing AI-generated and human-written content, students can begin to recognize differences in AI outputs with respect to quality, reliability, accuracy, and diversity (Kentucky Chamber, 2024).

See this list of ideas for critiquing AI outputs (text and image).

| Critiquing Text-Based Outputs | Critiquing Image-Based Outputs |

| | |

Try This!

The AI Pedagogy Project provides an LLM Comparison page where users can enter a prompt to compare Claude and ChatGPT’s responses. Use this page to compare answers with Copilot as well. Give it a try!

It is essential to guide and debrief these activities so that students are aware of the harm of stereotypes and bias, including psychological distress, anxiety, depression, and internalized bias, where people adopt the negative messages they hear about their group.

3. Explore Case Studies as Cautionary Tales

Use real-world industry or workplace examples of AI failures to illustrate potential harms. For instance, discuss cases where AI systems have exhibited bias or spread misinformation in your industry or field. Or, discuss the impact of AI errors, mistakes, and fabrications not caught by employees and the ethical, legal, economic, or reputational repercussions. These discussions can help students understand the real-world implications of AI risks.

See the website and sample repository, AI Gone Wrong: An Updated List of AI Errors, Mistakes, and Failures (Drapkin, 2024). See also the AI Incidents Database. More examples may be available in your particular field or industry.

4. Provide Decision-Making Practice Time Using Mini-Scenarios

Create or customize mini-scenarios related to common situations that could arise when students use AI for learning tasks, then discuss them with your students. These can anticipate likely situations in which AI could be used, misused, or overused and the repercussions.

For each scenario, consider asking students to reflect on and discuss the following questions:

- What is the problem in this situation? Who is being negatively impacted or harmed?

- Can AI be used without breaking academic rules?

- How might AI be used without doing all the work?

- How can work be checked to ensure AI-generated content is accurate?

- How can you show you understand the material, not just the answers?

Learn more

See the Faculty Learning Hub post, Use Mini-Scenarios to Talk About AI, for a number of customizable mini-scenarios and associated discussion questions.

5. Reflect on the Negative Consequences of AI (Mis)Use

AI use may negatively affect how others perceive a person and their work. Ask students to consider the broader consequences of AI use on their reputation and relationships with employers, clients, colleagues, and other stakeholders.

Create a Reflection Discussion or Debate

Do people still expect “human” responses? What happens when people receive information, communications, advice, or research from a “robot” or AI? Is AI doing people more harm than good?

You may wish to facilitate discussions or reflections on the social and ethical implications of AI, exploring scenarios where AI decisions may conflict with human values. Students can debate the ethical considerations of using AI in various contexts, such as hiring or law enforcement. See examples of statements that might be debated:

- “AI does its research in a bubble.”

- “AI erodes human creativity.”

- “AI undermines trust and human relationships.”

- “AI algorithms do more damage than good for society.”

Explore Risks of Using AI in Work and Life

Students can use available AI tools to learn about the risks of AI:

- Google’s Teachable Machine: Train a simple image recognition model using your own images and discuss potential applications and ethical considerations.

- AI Fairness 360 (AIF360): Experiment with fairness metrics and bias mitigation algorithms to see how biases can be identified and addressed in AI systems.

- Fairlearn: Explore how different fairness metrics can impact model performance and outcomes.

- What-If Tool: Investigate model performance on different subsets of data to understand how biases manifest in AI systems.

- FAT Forensics: Evaluate AI systems’ fairness, accountability, and transparency.

- Themis-ml: Understand how different fairness interventions can be applied to machine learning models.

- FairTest: Test models for bias and discrimination.

- TensorFlow Fairness Indicators: Evaluate and improve model performance for fairness criteria.
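For classes with some programming background, the idea of a "fairness metric" that several of these tools report can be illustrated without installing any of them. The sketch below is a minimal, hand-rolled illustration and not the API of any tool listed above: it computes each group's selection rate (the share of positive decisions) and the gap between the highest and lowest rate, a quantity often called the demographic parity difference. The function names and the toy hiring data are made up for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Return the gap between the highest and lowest group selection rate."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = "shortlisted" by a model, 0 = "not shortlisted".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)

print("Selection rate per group:", rates)
print("Demographic parity difference:", gap)
```

A gap of zero would mean both groups are shortlisted at the same rate. Students can change the toy data and discuss how large a gap would concern them, and why a single number cannot settle whether a system is fair.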

Try This

What’s the difference between having the technical capabilities of using AI and having “taste”: the ability to use strategy and experience to make good decisions? With students, read the article “Design Taste vs Technical Skills in the Era of AI” (2024) by Sarah Gibbons and Kate Moran. Then, do an in-class activity to discuss why human “taste” goes beyond the technical use of AI:

AI vs. Human Design Challenge

Students compare AI-generated designs with human-created designs to understand the elements of taste and technical skill.

Instructions:

- Divide the class into small groups.

- Assign each group a design task (e.g., creating a logo, writing a short story, composing music).

- Some groups should use AI tools to complete the task, while others should complete it without AI.

- Each group presents their work to the class.

- Facilitate a discussion comparing the designs’ originality, beauty, and interest versus their technical execution.

Critique and Feedback Session

Students develop discernment and strategic thinking by critiquing AI-generated and human-created works.

Instructions:

- Collect a variety of works created by AI and humans in the students’ field of study.

- Display these works anonymously.

- Have students critique each piece based on criteria such as originality, aesthetic appeal, and strategic execution.

- Facilitate a discussion on the role of taste in evaluating these works and how technical skills contribute to the final product.

6. Help Students Manage Stress, Time, and Workload

Helping students avoid AI’s pitfalls also means giving them resources and support for independent learning. Provide in-class time, completion templates, and recommendations to get help from the college so they are less tempted to use AI.

7. Show Students How To Be an “Active Operator” of AI In Class

Teach about the risks of AI by doing active demos in class and walking students through the work of accessing, prompting, and saving AI outputs. If you invite students to use AI, demo how to critique, describe, and document its use. Remind students that human oversight of AI is essential and that AI outputs should never be used without close critical review and verification.

In the appendix of this resource you will find a list of H5P activities that you can do with your students, or have students complete on their own and in groups, to practice thinking about being an active operator of AI:

- APA Reference: Drag the Words Activity

- Be an Active Operator of AI: If/Then Activity

- What Does an Active Operator of AI Do Activity (Drag the Words)

- Challenging the Myths of GenAI: Flip Card Reflection Activity

Learn More

See the Faculty Learning Hub post Describe and Document AI Use.

8. Foster a Supportive Classroom Environment

Help students know you value their authentic work by showing care and compassion, encouraging their curiosity, and giving them choices in how they can follow their interests. Invite students to share thoughts and questions about AI. This builds trust and encourages open communication, making students more likely to seek guidance and use AI responsibly.

Try This!

Use this Copilot prompt to get suggestions for implementing an idea on this page in your class. Replace all items in braces with your own information.

Copilot Prompt:

You are an expert in educational development, and I am seeking your guidance. Your task is to provide me with a concrete strategy for the following. I teach at an Ontario Polytechnic College about {topic} at the level of {credential}. My primary concern about AI use by students is {pitfall}. I want to use {teaching idea} to help students understand the pitfall. The maximum time I have for this activity is {# of minutes}. Please suggest how I can use this teaching idea in my classroom to address this pitfall with students. Ensure that the way I incorporate this idea in the classroom will be practical and learner-centred and will anticipate students’ temptations to rely on AI. Give me clear and specific instructions for facilitating the activity.
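If you reuse this prompt across several courses, filling the brace placeholders by script can help avoid leaving one blank. The following is a minimal sketch, not part of the original resource: the `fill_prompt` helper and the sample values are hypothetical, and the template text is copied from the prompt above.

```python
import re

PROMPT_TEMPLATE = (
    "You are an expert in educational development, and I am seeking your guidance. "
    "Your task is to provide me with a concrete strategy for the following. "
    "I teach at an Ontario Polytechnic College about {topic} at the level of {credential}. "
    "My primary concern about AI use by students is {pitfall}. "
    "I want to use {teaching idea} to help students understand the pitfall. "
    "The maximum time I have for this activity is {# of minutes}. "
    "Please suggest how I can use this teaching idea in my classroom to address this "
    "pitfall with students. Ensure that the way I incorporate this idea in the classroom "
    "will be practical and learner-centred and will anticipate students’ temptations to "
    "rely on AI. Give me clear and specific instructions for facilitating the activity."
)


def fill_prompt(template, values):
    """Replace each {placeholder} with its value; fail if any placeholder is left."""
    filled = template
    for name, value in values.items():
        filled = filled.replace("{" + name + "}", value)
    leftover = re.findall(r"\{[^{}]+\}", filled)
    if leftover:
        raise ValueError(f"Unfilled placeholders: {leftover}")
    return filled


# Hypothetical sample values; replace them with your own course details.
my_values = {
    "topic": "workplace communication",
    "credential": "a two-year diploma",
    "pitfall": "accepting AI output without verifying it",
    "teaching idea": "mini-scenarios",
    "# of minutes": "20 minutes",
}

prompt = fill_prompt(PROMPT_TEMPLATE, my_values)
print(prompt)
```

Plain string replacement is used here rather than `str.format` because two of the placeholder names contain spaces or symbols. The filled prompt can then be pasted into Copilot.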

Learn More

See the Faculty Learning Hub post, 8 Ways to Guide Students on the Potential Pitfalls of AI. Download the job aid: 8 Ways to Guide Students on the Potential Pitfalls of Gen AI.