3.3 Fundamental Values of Academic Integrity

One of the most pressing issues that generative AI raises for education is how it will reshape assessment and potentially redefine the meaning of original work and plagiarism.

The International Centre for Academic Integrity defines Academic Integrity as a mutual commitment to 6 fundamental values: honesty, trust, fairness, respect, responsibility, and courage (ICAI, 2021).

- Honesty: give credit; provide evidence; be truthful

- Trust: clearly state expectations; promote transparency; develop mutual understanding & trust

- Fairness: apply policies equitably; keep an open mind; take responsibility for actions/decisions

- Respect: accept difference; seek open communication; engage in reciprocal feedback

- Responsibility: create, understand, and respect boundaries; engage in difficult conversations

- Courage: take risks; be okay with discomfort; take a stand to address wrongdoings

If you would like to explore further, the full description of each of the fundamental values can be found here.

Who is responsible for Academic Integrity?

The student, the instructor, and the institution all play an important role in creating a culture of Academic Integrity.

The institution is responsible for

- providing clear guidance and support to instructors on how to establish a culture of academic integrity and

- establishing clear processes for when scholastic offenses are suspected.

The instructor is responsible for

- establishing clear guidelines and processes within their courses and

- creating an environment that builds mutual trust and responsibility among students and instructors.

The student is responsible for decisions and actions they take regarding academic integrity.

Awareness Reflection: An Instructor’s Role

Instructors have several choices when approaching generative AI through the lens of academic integrity. Should they:

- Ignore it?

- Prohibit it?

- Address it?

Ignoring AI Use

Ignoring generative AI and continuing on as usual may seem like the easiest option for instructors. However, doing so fails to address several fundamental values of Academic Integrity: trust, fairness, responsibility, and courage.

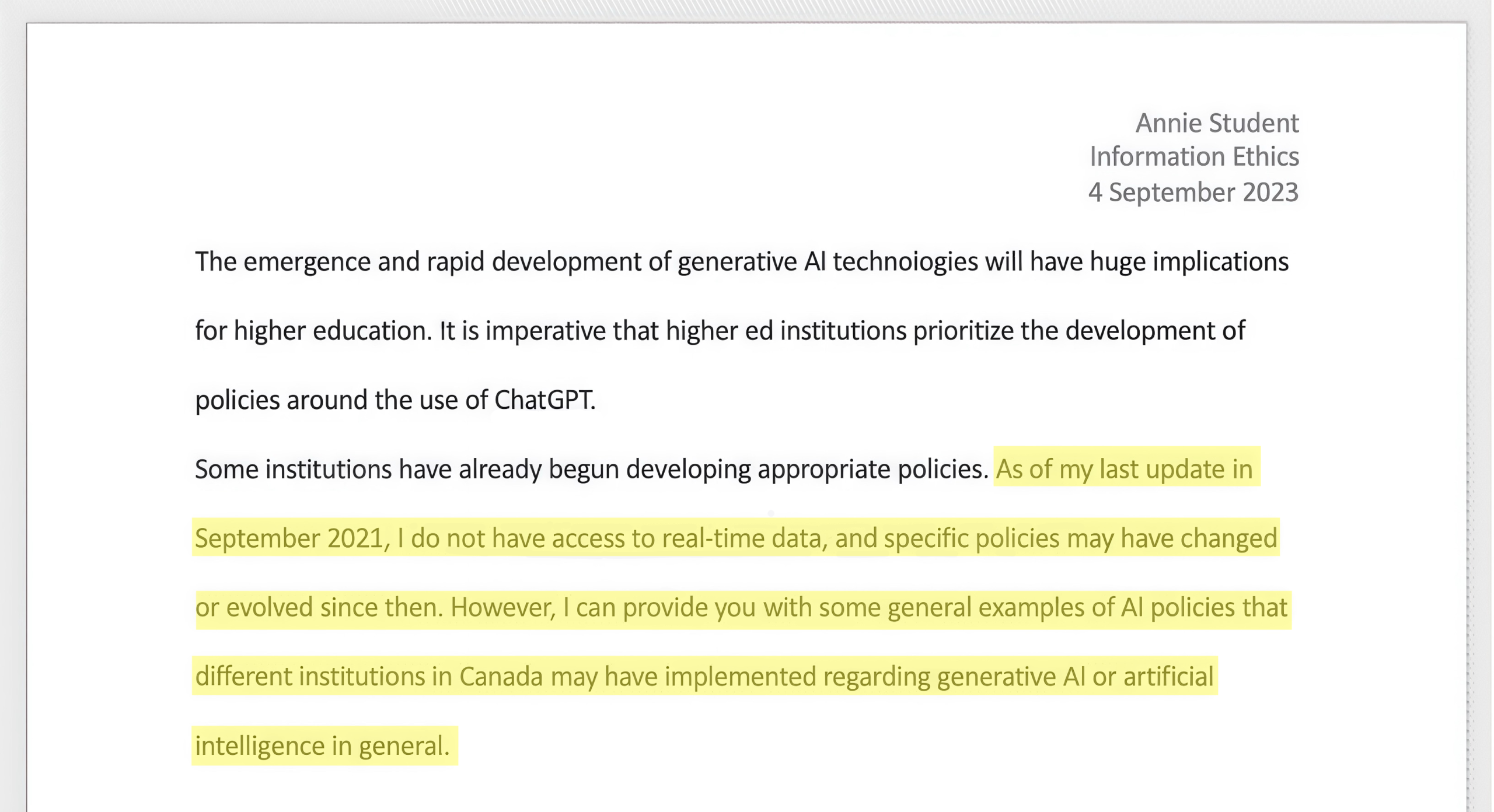

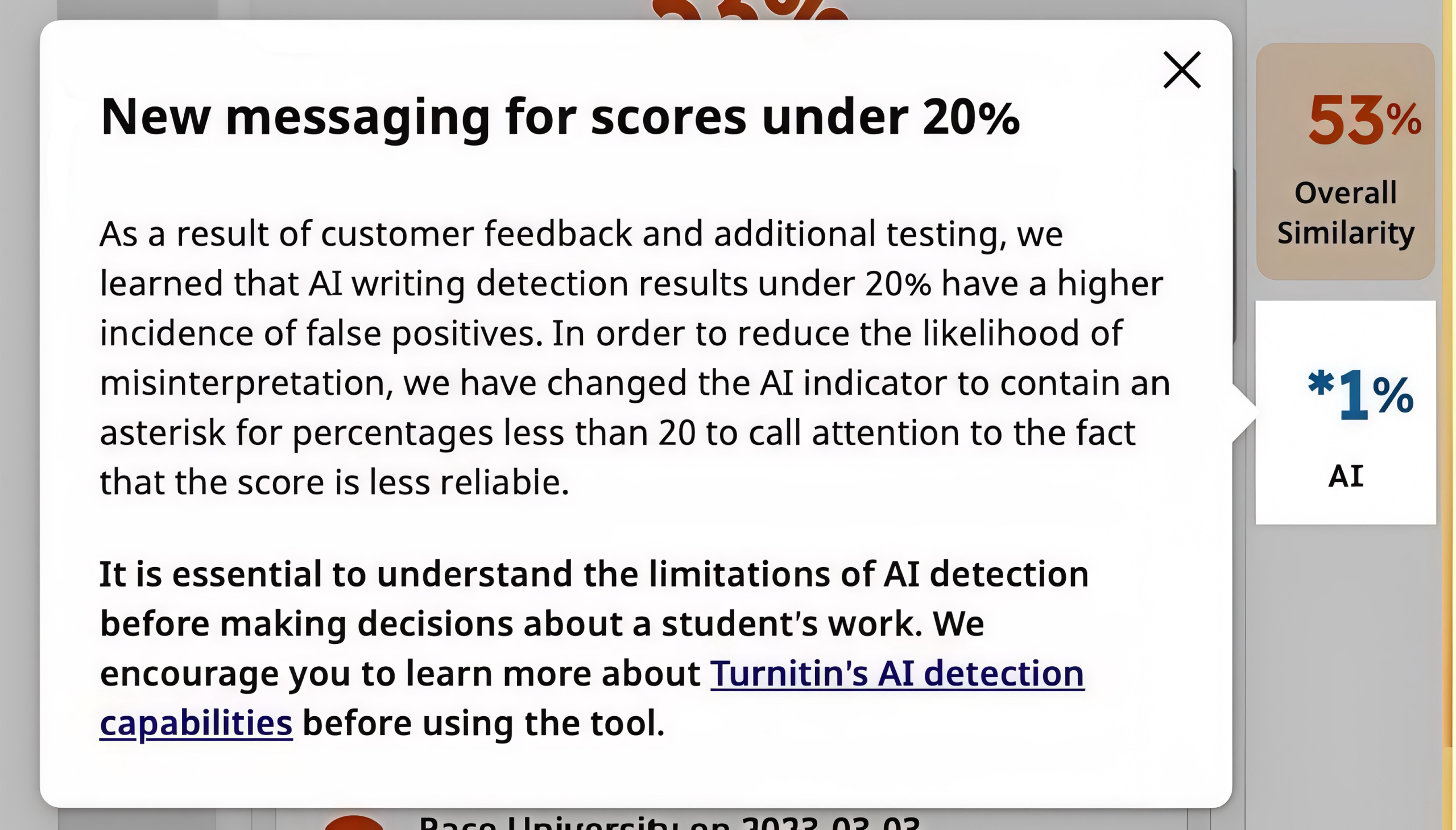

The absence of an AI policy or practices will not prevent AI use. You will still encounter student submissions that you suspect (or know) to be generated with the assistance of AI, as demonstrated in the accompanying image. When this happens, you will need to decide how to address it. Without clear expectations around AI use, this process lacks transparency (a core characteristic of trust) and impedes your ability to apply policies equitably (fairness).

Responsible faculty acknowledge the possibility of academic misconduct and create and enforce clear policies around it. Academic Integrity also requires a willingness to take risks and deal with discomfort (courage).

Prohibiting AI Use

It’s clear that institutions and instructors need to take some action in response to generative AI. The immediate reaction of many institutions and instructors may be to implement a blanket ban on AI use.

However, completely prohibiting the use of AI could also be seen to fail to address multiple fundamental values of Academic Integrity. It is also unrealistic, as generative AI tools are embedded in many other applications (such as Microsoft Word and web browsers).

This approach lacks respect, responsibility, trust, courage, honesty, and fairness.

A blanket ban will contribute to a culture of mistrust as it could be seen to be built on the assumption that students’ most likely use of generative AI tools would be to commit scholastic offenses. It also ignores the opportunities generative AI offers to support and deepen learning.

Some students will absolutely use generative AI in inappropriate ways. However, many other students may benefit from it as a tool to enhance and improve learning and provide supports for diverse learners.

Students will also encounter AI tools in their future careers and studies, so by choosing to not consider AI in our teaching, fields, and course designs, we may be failing to prepare students for the future.

Our goal as instructors is to demonstrate respect for the motivations and goals of all learners while still being able to hold individuals responsible for their actions.

A blanket ban of generative AI lacks trust in students’ ability to use generative AI responsibly and denies them the opportunity to develop important AI literacies. It also lacks the courage to explore whether and how AI can enhance our disciplines and our teaching and learning practices. Of course, there may be times when it is not appropriate for students to use generative AI tools, particularly if doing so interferes with students demonstrating the learning outcomes of the course. The key things to consider are whether it is necessary to prohibit these tools and, if it is, whether you can clearly explain to students why.

Detecting generative AI

Another limitation of blanket bans is that they require more time to be spent detecting student use of generative AI. Yet there is currently no reliable way to identify AI-generated content. Current tools for doing so are unreliable and biased.

For example, one study shows that AI detection tools flagged more than half of the submitted essays from non-native speakers as AI-generated (Liang et al., 2023).

Because of these limitations, AI detection tools cannot provide true evidence of academic misconduct (thus lacking honesty) and will not treat all students’ work equitably (thus lacking fairness).

So, what can I do? Address it!

Instructors should explicitly acknowledge the existence of generative AI and the potential impact on teaching and learning activities in their course policies, in conversation with students, and in the design of learning activities and assessment.

- Decide whether you will incorporate AI into your course

- Establish an AI policy for your courses that clearly explains expectations around AI use

- If you do provide opportunities for AI use, incorporate AI literacy into your curriculum

- Design activities and assessment deliberately to either resist AI use or to incorporate AI

Establish a Clear AI Policy for your Courses

- Identify if and how students are allowed or encouraged to use generative AI in your course

- Provide clear rationale as to why you made these decisions

- Provide clear guidelines on how to cite / reference AI-assisted work

By establishing clear guidelines, you establish a relationship of trust with your students, you have a clear policy that can be applied equitably to all students (fairness), you create clear boundaries and expectations (responsibility), and you provide students with the skills to use AI honestly.

See the following section for instructions on how to write an AI Policy.

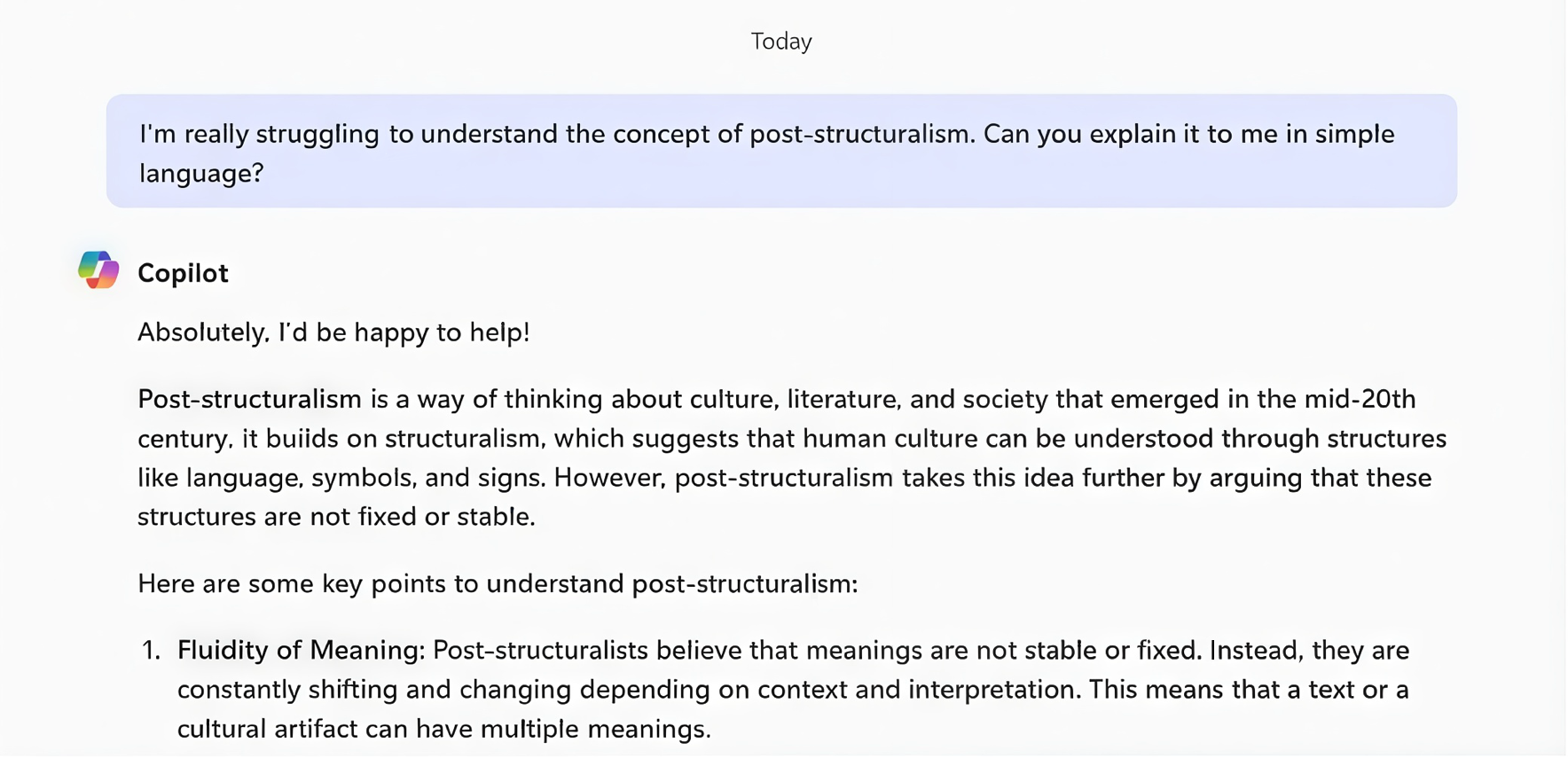

Incorporate AI Literacy into your Curriculum

- Teach students the potential benefits/uses of AI within the context of your course or discipline

- Teach students the ethical concerns and harms of AI

By incorporating AI into your curriculum, you provide students with an opportunity to develop important skills and knowledge related to the ethical use of AI. This takes courage, as it may create discomfort, but it also fosters responsibility and respect.

Design Activities and Assessment Deliberately

- Design AI-Resistant assignments

- Develop assignments that allow students to explore the power and applications of generative AI in their academic work

By designing assignments deliberately to resist the use of AI or to embrace the use of AI, you are fostering mutual trust and respect. You are also exhibiting and encouraging courage, as AI-enhanced assessments may require risk-taking and discomfort. This also gives learners a chance to act responsibly with regard to AI use.

See the section on pedagogy for more information on assessment design.

Below are some optional activities that will enable you to develop some tools and strategies related to generative AI and academic integrity in your courses.

Review this compilation of Classroom Policies for AI Generative Tools.

Choose 1 or 2 policies that stand out to you. Reflect on whether they adhere to the 6 values of academic integrity.

- Honesty: give credit; provide evidence; be truthful

- Trust: clearly state expectations; promote transparency; develop mutual understanding & trust

- Fairness: apply policies equitably; keep an open mind; take responsibility for actions/decisions

- Respect: accept difference; seek open communication; engage in reciprocal feedback

- Responsibility: create, understand, and respect boundaries; engage in difficult conversations

- Courage: take risks; be okay with discomfort; take a stand to address wrongdoings

Search for ways that AI is being used in your field or discipline. How will your students encounter AI in their future professions? What skills might they require to successfully engage with these tools and practices?

These may be good places to start when introducing students more broadly to the applications of AI.

Summary

- Although Academic Integrity is intricately linked to Academic Misconduct and Scholastic Offenses, establishing a culture of Academic Integrity is more complex than simply preventing cheating.

- Creating this culture is the mutual responsibility of the institution, the instructor, and the student. It requires attention to 6 values: honesty, trust, fairness, respect, responsibility, and courage.

- Whether or not you choose to use generative AI, it needs to be explicitly discussed and addressed in course policies, course content, and course design.

Generative AI is a type of Artificial Intelligence that creates new content, including text, images, videos, audio, and computer code.

Plagiarism is the act of copying the words or ideas of someone else without proper attribution or recognition.

Scholastic offenses are violations of academic policy that undermine the evaluation process.

Academic misconduct is an intentional action taken by an individual to deceive their instructor, peers, or institution, often in an attempt to achieve a higher grade, gain course credit, or otherwise be recognized for performance not achieved.