5.3 Critically Appraising AI Outputs

As with any source, it’s important to critically evaluate the output from generative AI tools. As discussed, output can contain inaccurate or misleading information or biased representations. Traditional tools for evaluating sources might be helpful, but the nature of generative AI output and the lack of transparency can make it more difficult to assess things like Currency, Authority or even Bias.

Fact-Checking AI Output

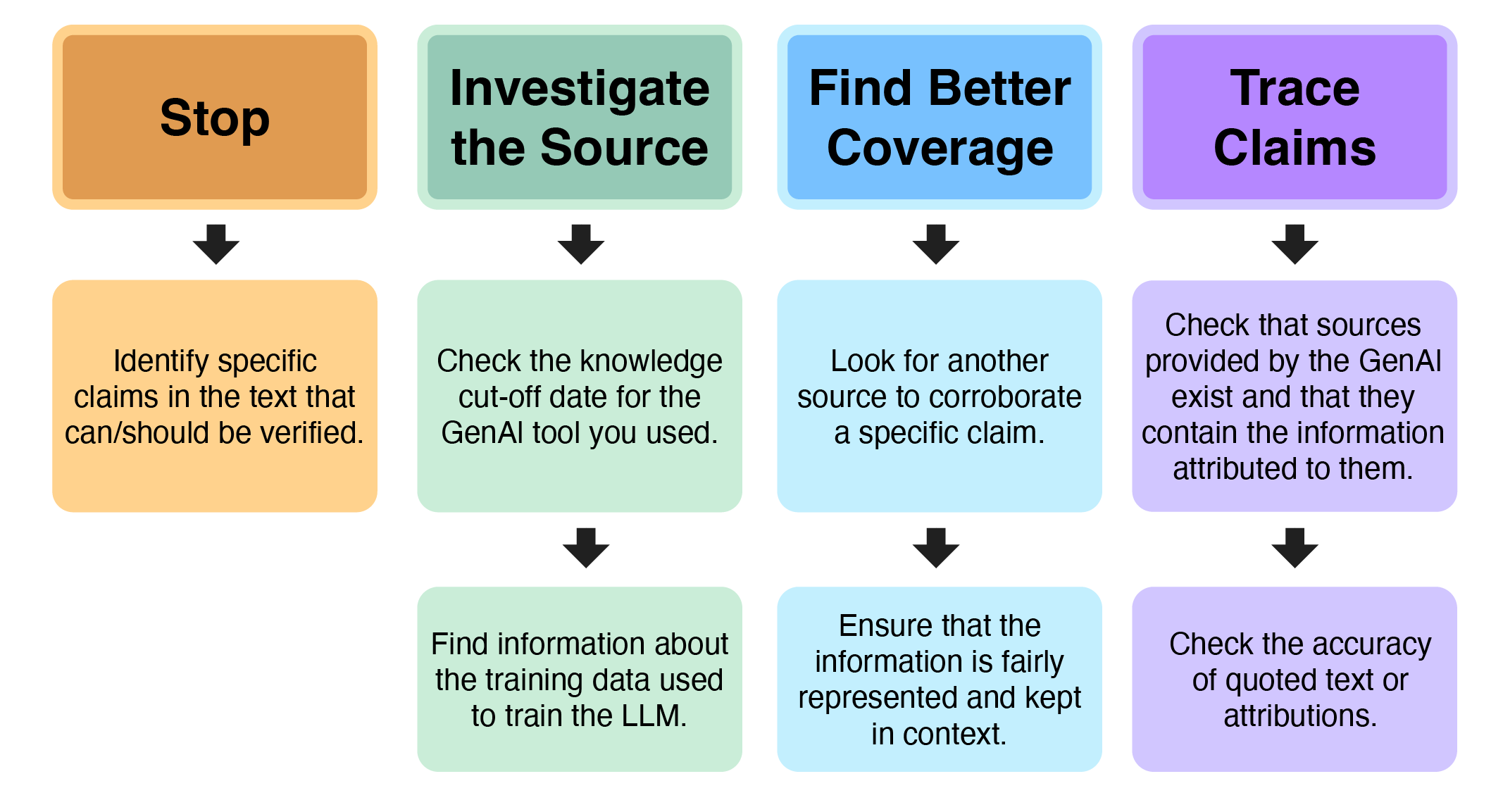

The SIFT method was developed by Michael Caulfield for verifying claims made in online sources (Caulfield, 2019). It can be adapted to assess the validity of AI output.

Modified SIFT method for assessing AI output.

| Stop | Investigate the Source | Find Better Coverage | Trace Claims |
| --- | --- | --- | --- |
| Identify specific claims in the text that can/should be verified. | Check the knowledge cut-off date for the GenAI tool you used. | Look for another source to corroborate a specific claim. | Check that sources provided by the GenAI exist and that they contain the information attributed to them. |
| | Find information about the training data used to train the LLM. | Ensure that the information is fairly represented and kept in context. | Check the accuracy of quoted text or attributions. |
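For longer AI responses, the "Trace Claims" step can start with a list of everything that needs checking. The following is a minimal Python sketch, not part of the SIFT method itself. The function name `list_sources_to_trace` and the three patterns are illustrative assumptions, and the sample DOI and URL are made up. The patterns are deliberately simple and will miss some citation styles, so the result is a starting checklist rather than a verification.

```python
import re

# Illustrative patterns (assumptions, not a standard); they will miss some formats.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[^\s\"<>]+")
URL_PATTERN = re.compile(r"https?://[^\s\"<>)]+")
# Matches simple parenthetical author-year citations such as (Caulfield, 2019).
CITATION_PATTERN = re.compile(r"\(([A-Z][^()\d]*?),? (\d{4})\)")


def list_sources_to_trace(ai_output: str) -> dict[str, list[str]]:
    """Collect DOIs, URLs and author-year citations found in AI output.

    This only lists what to check. Whether each source exists, and whether
    it says what the AI claims, still has to be confirmed by a person.
    """
    dois = [d.rstrip(".,;)") for d in DOI_PATTERN.findall(ai_output)]
    urls = [u.rstrip(".,;") for u in URL_PATTERN.findall(ai_output)]
    citations = [f"{author}, {year}"
                 for author, year in CITATION_PATTERN.findall(ai_output)]
    return {"dois": dois, "urls": urls, "citations": citations}


# Made-up sample text for demonstration only.
sample = ("SIFT was described by its author (Caulfield, 2019). "
          "See https://example.org/sift and doi:10.1234/abcd.5678.")
result = list_sources_to_trace(sample)
for kind, items in result.items():
    print(kind, items)
```

Each item in the resulting lists should then be looked up by hand, for example in a library catalogue or database, to confirm that the source exists and that it contains the information attributed to it.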

Identifying AI Bias

Bias in AI-generated content can take many forms and, if it goes unchecked, can have real-world consequences by reinforcing inequities, contributing to misinformation or misrepresentation, and excluding diverse perspectives and voices. To help you identify biases in AI-generated output, consider the following questions:

Perspectives

- Does the output include diverse perspectives and representation of diverse groups?

Stereotypes

- Does the content contain stereotypes or oversimplified generalizations?

Language

- Is the language in the output free from discriminatory, ableist or exclusionary terms?

Check out A Guide for Inclusive Language for more information.

Impact

- Could sharing this content cause harm or reinforce existing social biases?

Generative AI is a type of Artificial Intelligence that creates new content, including text, images, videos, audio, and computer code.
