Chapter 10: Contemporary Worldview

Intro

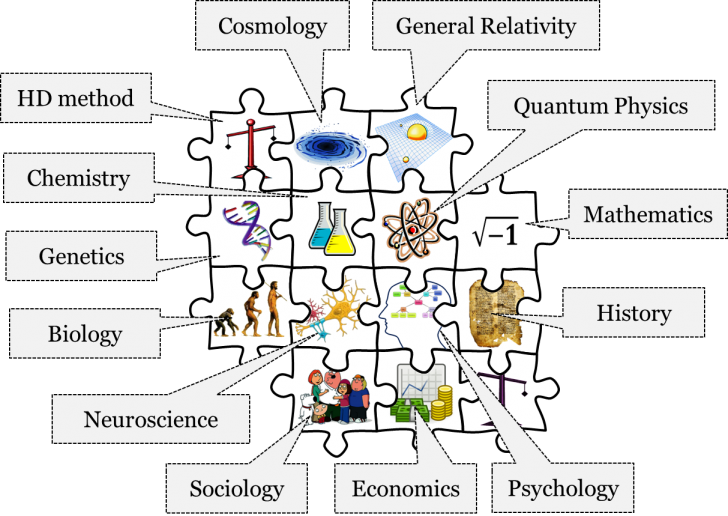

The Contemporary mosaic will probably look more familiar to us than its predecessors. It includes, after all, the theories and methods taught at the very universities you’re attending. The Contemporary mosaic has been in place since about the 1920s. It includes accepted theories such as those of neuroscience, quantum mechanics, special and general relativity, and cosmology, which we will consider in more depth below, in addition to the theories of evolutionary biology, genetics, chemistry, psychology, sociology, economics, and history.

It is important to remember that, as with all previous mosaics, the Contemporary mosaic is in a continuous state of flux. In other words, the mosaic of 1920 is not identical to the mosaic of today. Furthermore, as we stressed in chapter 2, the theories we currently accept and the methods we currently employ are in no way absolutely true. We do think that our accepted theories are the best available descriptions of their respective objects, but we are also prepared to replace them with even better ones. Consider, for instance, such relatively recent changes as the acceptance of the Higgs boson, the mapping of the human genome, or the discovery of new pyramids in Egypt. So, although we sometimes treat our Contemporary worldview as unchangeable and already crystallized, it is very much modifiable as we learn more about the world.

Let’s start our focused discussion of the Contemporary worldview with the field of cognitive neuroscience and the metaphysical principles implicit in this discipline.

Cognitive Neuroscience

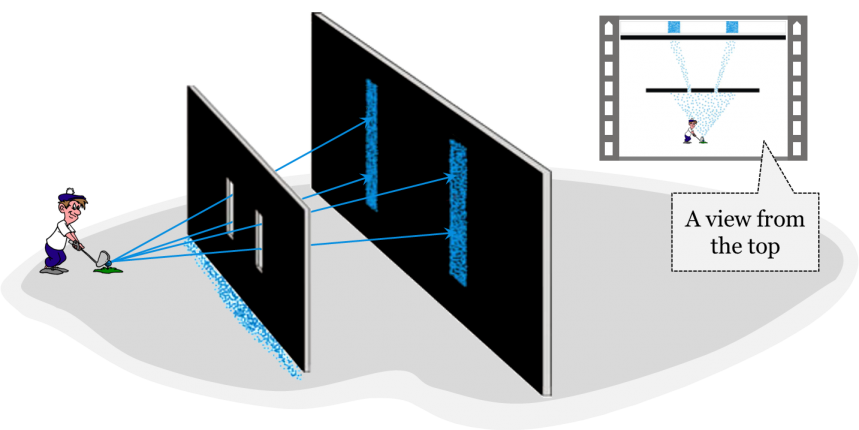

Neuroscience is the study of the nervous system and aims to better understand the functions of the brain. Cognitive science is the study of the mind and its processes. Cognitive neuroscience melds these two fields and seeks to understand the processes of the mind as functions of the brain. The central metaphysical question here is whether the mind can exist independently of the brain or anything material, or whether the mind requires the existence of a material substrate to produce it. In the previous historical chapters, we’ve seen that Cartesians and Newtonians alike accepted the metaphysical conception of dualism, which holds that mind and matter are independent substances. Recall, for instance, how Cartesian mechanistic physiologists couldn’t figure out an acceptable way for the mind to interact with matter. This problem grew more acute in the nineteenth century, when the law of conservation of energy became accepted. The law implies that the physical world is causally closed, and that it is therefore impossible for a non-physical mind to influence a physical brain. Given this constraint, together with the neuroscientific theories accepted within the Contemporary mosaic, substance dualism is no longer regarded as the best available description of the relationship between mind and matter.

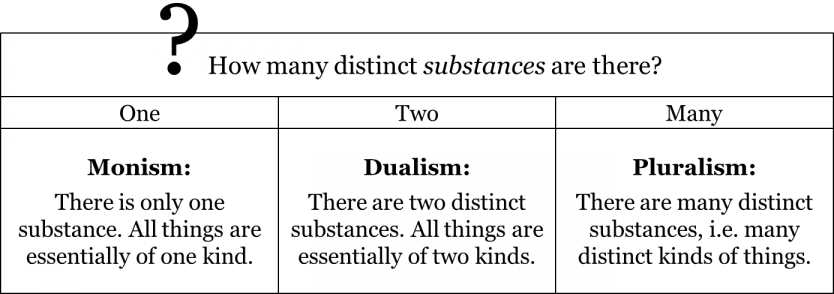

Dualism is a response to a question about the number of distinct independent substances that actually exist. It answers that there are two independent substances. Pluralism – the metaphysical principle implicit in the Aristotelian-Medieval worldview – answers that there are many independent substances. Another possible response is that of monism: that there is only one single independent substance.

Different varieties of monism have a different understanding of the nature of this single independent substance. Let’s consider the various types of monism.

One type of monism is called idealism. Idealism tells us that there is only one independent substance, the mind. In other words, all material things in the world are simply the products or manifestations of the mind. Idealism has two major subtypes: subjective and objective idealism. Subjective idealism suggests that the existence of material objects depends on the perception of those objects by an individual mind, i.e. to be is to be perceived.

Essentially, subjective idealists claim that objects can be said to exist only as perceptions within an individual mind. To bolster this claim, they ask how one imagines an unperceived material object. That is, they ask what something independent of the mind (i.e. something unperceived) looks like. Answering this question, however, is impossible, because as soon as you try to imagine something, you’re relying on a mind. Hence, according to subjective idealism, material objects cannot exist independently of the mind. Subjective idealism is most commonly tied to the Irish philosopher George Berkeley.

Subjective idealism is problematic because it’s not quite clear why different individual minds perceive the same things. For instance, if two people are looking at the same object, they will usually have similar perceptions of that object. Therefore, there must exist some sort of mechanism that guarantees the coherence of perceptions among different individual minds.

To solve this problem, some philosophers postulated the existence of a universal mind, such as that of God, which guarantees that the perceptions of different individual minds will cohere. This step, however, takes us to objective idealism, which holds that everything depends on and exists within the mind of a single, objective perceiver.

Importantly, objective idealism doesn’t deny that material objects exist outside of individual minds. But it does deny that the material world can exist outside of this single, universal mind. This subtype of idealism is associated with the German philosopher Georg Wilhelm Friedrich Hegel. Often, objective idealists understand the material world as a manifestation of this objective, universal mind. In modern terms, this is similar to a computer simulation, which has two notable features. First, anyone’s perception of the (simulated) material world would be similar to everyone else’s. Second, anyone’s experience of this material world depends on the program that runs the simulation, and not on the individual minds within the simulation.

To be clear, idealism does not imply that the material world does not exist. Instead, idealism states that the material world cannot exist without the mind – either subjective or objective. In other words, mind is the only independent substance.

In contrast with idealism, there is another monist position known as materialism. Materialism also tells us that there is only one independent substance, but that this substance is matter – not mind. For materialists, all mental states, thoughts, emotions, memories, and perceptions are the products of some material process. Before modern times, it was very difficult to understand how this might be possible.

A third variety of monism is called neutral monism. According to neutral monism, there is only one independent substance, which is neither purely material nor purely ideal, but a neutral substance which is both material and ideal at the same time. According to neutral monists, everything in the universe is both matter and mind: there is nothing purely material or purely ideal. In other words, everything material is said to have some mental capacities, albeit very minimal. Similarly, everything ideal is said to presuppose one material process or another. In this view, matter and mind are simply two sides of the same coin; nature itself is, in a sense, neutral. One traditional variety of neutral monism is the idea that nature (matter) and God (mind) are essentially one and the same: the divine mind doesn’t exist outside of nature, and nature doesn’t exist independently of the divine mind. One of the most notable champions of this version of neutral monism is the philosopher Baruch Spinoza. A similar view was endorsed by the physicist Albert Einstein. An even more contemporary version of neutral monism suggests that every bit of matter contains within itself information regarding its current state, as well as its past states and, probably, its possible future states. But information, in this view, is something ideal – it is the data “stored” in nature and “processed” by nature. All of nature, in this view, becomes a huge computer that processes vast amounts of information every second to decide what happens to every bit of matter.

So, dualism, idealism, materialism and neutral monism are our options regarding the relationship between mind and matter. The four conceptions are summed up in the following table:

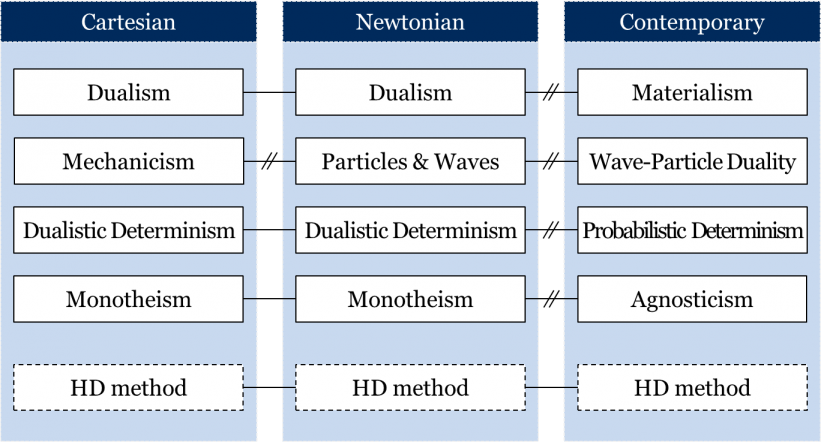

Cartesians and Newtonians both belonged to the dualist camp. This was not simply due to their acceptance of Christian theological beliefs about the immortality of the soul. For both Cartesians and Newtonians, material systems were in a sense machine-like, but it seemed inconceivable that our mind could be a machine. Descartes noted that human reason was a ‘universal instrument’ which could be flexibly used in all sorts of situations, whereas all the machines he knew of were rigidly disposed to perform one particular function.

What view of the relationship between mind and matter is part of the Contemporary worldview? What have we learned about the mind in the last century that might shed light on this issue?

Neuroscience began in the nineteenth century when major improvements in microscopes made it possible to understand the microscale structure of living things. The Spanish neuroanatomist Santiago Ramón y Cajal showed that the nervous system, like other bodily systems, was composed of distinct living cells, which he called neurons. Ramón y Cajal supposed that neurons, with their complex tree-like branches, signalled to one another and formed the working units of the brain. By a current estimate, the human brain contains 86 billion neurons. The substance of the brain clearly possessed the organized complexity that one might suppose a thinking machine would need. But how could a machine think?

In the early twentieth century, many neuroscientists worked to understand the electrical and chemical signalling mechanisms of neurons. But this work, by itself, provided little insight into how or whether the brain could be the physical basis of the mind. The needed insight came from another field. In 1936, the mathematician Alan Turing proved that it was theoretically possible for a simple machine, now called a Turing machine, to perform any mathematical computation whatsoever, so long as the computation could be clearly specified as a step-by-step procedure. Turing’s finding, in effect, refuted Descartes’ claim that a machine lacked the flexibility to be a universal instrument. Although Turing’s work was originally intended to address a problem in the philosophical foundations of mathematics, he and others soon realized that it also had practical significance. It led directly to the development of the digital electronic computer. By the early twenty-first century, this machine, and a rudimentary form of machine ‘thought’, had become an ever-present feature of daily life.
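To make the idea of a machine that does nothing but follow a table of rules more concrete, here is a minimal sketch of a one-tape Turing machine simulator in Python. The function `run_turing_machine` and the `INCREMENT` rule table (which adds one to a binary number) are our own illustrative names, not Turing’s notation. Note that this is a single special-purpose machine; Turing’s deeper result concerns a universal machine that reads another machine’s rule table from its tape and imitates it.

```python
from typing import Dict, Tuple

BLANK = "_"

# A rule maps (state, symbol read) -> (symbol to write, head move, next state).
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

# Hypothetical example machine: add 1 to a binary number written on the tape.
INCREMENT: Rules = {
    # Scan right until we run off the end of the number.
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    # Move left, turning 1s into 0s until a 0 (or a blank) absorbs the carry.
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", 0, "halt"),
    ("carry", BLANK): ("1", 0, "halt"),
}


def run_turing_machine(rules: Rules, tape: str, state: str = "start",
                       halt: str = "halt", max_steps: int = 10_000) -> str:
    """Run a one-tape Turing machine and return the non-blank tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, BLANK)
        write, move, state = rules[(state, symbol)]  # a purely mechanical lookup
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011"))  # prints 1100 (1011 + 1)
```

Every step is a lookup in the rule table followed by a write and a head movement; nothing in the machine “understands” binary arithmetic, yet it carries out the computation correctly.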

The ability to perform, in seconds, mathematical computations that would take an unaided human being years or centuries had a profound impact on many areas of science, including, especially, the attempt to understand the mind. Early cognitive scientists supposed that the mind literally worked like the computers of their day, functioning by manipulating symbols according to logical rules. This approach met with some successes, as computers could be programmed to perform tasks that would take a great deal of intellectual effort if performed by human beings, like beating grandmasters at chess or proving mathematical theorems. But researchers soon realized that the brain’s style of computation was very different from that of conventional computers, and that understanding the mind would require understanding how the neurons of the brain interact with one another to create it. By the end of the twentieth century, cognitive science and neuroscience had merged as cognitive neuroscience. Being universal instruments, digital computers could be programmed to simulate idealized networks of interacting neurons. These artificial neural networks proved capable of flexibly learning to perform tasks like recognizing and classifying patterns, tasks which are thought to be critical to the work of their biological counterparts. Rather than simply reacting to its inputs, the brain has been found to be in a state of constant, internally driven activity, forming a dynamical system that continually anticipates and predicts its inputs.
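The idea of a network that learns from examples rather than following hand-written rules can be illustrated, in drastically simplified form, with a single artificial neuron (a perceptron) trained by the classic perceptron learning rule. This is a minimal sketch, assuming two inputs and a step-shaped “firing” threshold; the data sets and function names are hypothetical, and the networks studied in cognitive neuroscience are vastly larger and more sophisticated.

```python
from typing import List, Tuple

# Each example: ((input1, input2), desired output 0 or 1).
Example = Tuple[Tuple[float, float], int]

# Illustrative training sets: the logical functions AND and OR.
AND_DATA: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR_DATA: List[Example] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def predict(weights: List[float], bias: float, x: Tuple[float, float]) -> int:
    """A single artificial 'neuron': fire (1) if the weighted input sum exceeds 0."""
    total = weights[0] * x[0] + weights[1] * x[1] + bias
    return 1 if total > 0 else 0


def train_perceptron(data: List[Example], epochs: int = 20, rate: float = 1.0):
    """Learn connection weights from examples with the perceptron update rule."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            # Strengthen or weaken each connection in proportion to its input.
            weights[0] += rate * error * x[0]
            weights[1] += rate * error * x[1]
            bias += rate * error
    return weights, bias


if __name__ == "__main__":
    for name, data in [("AND", AND_DATA), ("OR", OR_DATA)]:
        w, b = train_perceptron(data)
        print(name, [predict(w, b, x) for x, _ in data])
```

The program is never told the rule for AND or OR; it simply adjusts its connection strengths until its outputs match the examples it is shown.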

More evidence that the brain is the physical substrate of the mind comes from new technologies, such as functional magnetic resonance imaging (fMRI), which directly images functional processes in living human brains. In essence, fMRI measures changes in blood flow within the brain: an increase in blood flow to a particular area indicates increased neural activity there, and hence cognitive use of that area. fMRI enables neuroscientists to image which parts of the brain are activated in response to various stimuli. During a behavioural experiment, a neuroscientist might ask a test subject to perform a simple task or recall a memory that elicits certain emotions. Having mapped different brain processes onto different areas of the brain, neuroscientists are able to successfully predict and measure which parts of the subject’s brain will activate in response to their instructions. Thus, they reason that mental tasks, like feeling an emotion or reliving a memory, are the result of neural activity in the brain.

Cognitive neuroscientists accept that the brain is the physical substrate of the mind and pursue a theoretical account that seeks to explain cognitive processes like perception, reason, emotion, and decision making on that basis. The claim that mental states are produced by physical processes is incompatible with substance dualism. In fact, if mental states are produced by a physical process, this is a strong indication that the mind does not exist without underlying material processes. In other words, this supports materialism, the view that matter is the only independent substance.

Quantum Mechanics

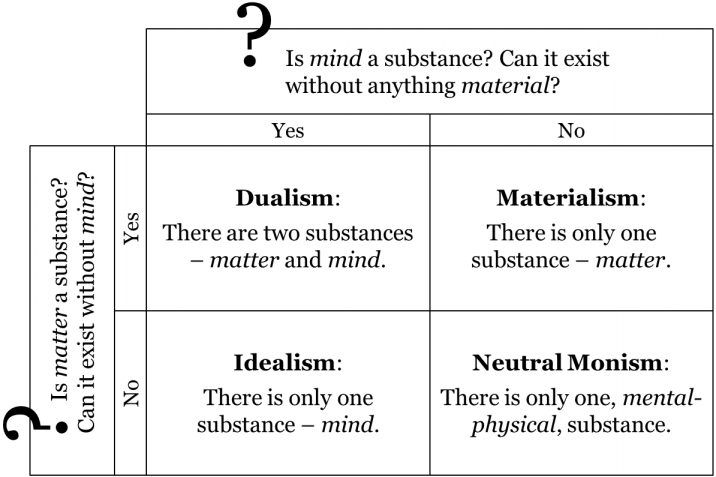

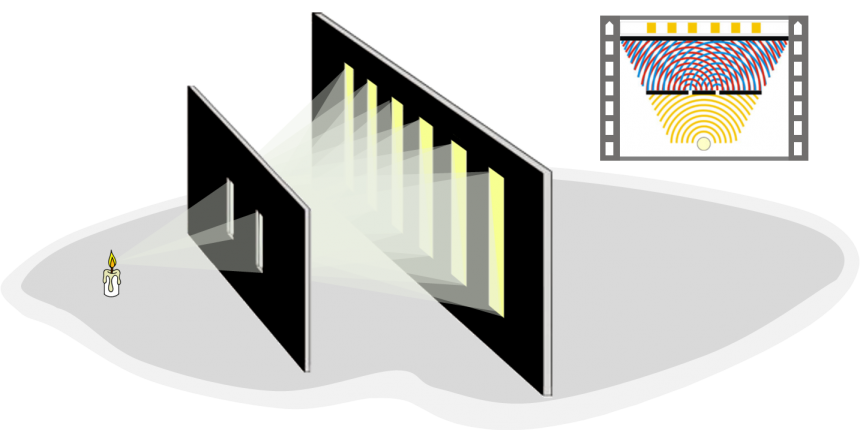

To appreciate that matter is the only substance is one thing; to have a clear understanding of what matter is and what properties it has is another. It is safe to say that the very notion of matter has changed over time. To illustrate these changes, we will consider different versions of the famous double-slit experiment. Imagine a wall with a slit in front of another solid wall. Now, consider a golfer hitting balls covered in paint in the direction of the two walls. While some of the balls will likely hit the first wall, others will pass through the slit and leave marks on the back wall. Gradually, a linear shape will emerge on the back wall. Now, let’s add another slit to the front wall. What sort of pattern would we expect in this set-up? We would expect two painted lines on the back wall, like so:

This picture is very much in tune with the predictions of Newtonian mechanics, where, by Newton’s first law, balls are supposed to travel along straight lines if unaffected by any other force.

Recall, however, the change in the Newtonian conception of matter discussed at the end of chapter 9. After the acceptance of the wave theory of light in 1819, the notion of matter was widened to include not only corpuscles but also waves in a fluid medium called the luminiferous ether. Imagine we replaced the golfer with a light source. If light consisted of corpuscles, then, as with the golf balls, one would expect an image of two straight lines on the back screen. However, if the slits are narrow enough and close enough together, the image that light shining through the two slits casts on the back screen is not two lines. Instead, there are multiple lines:

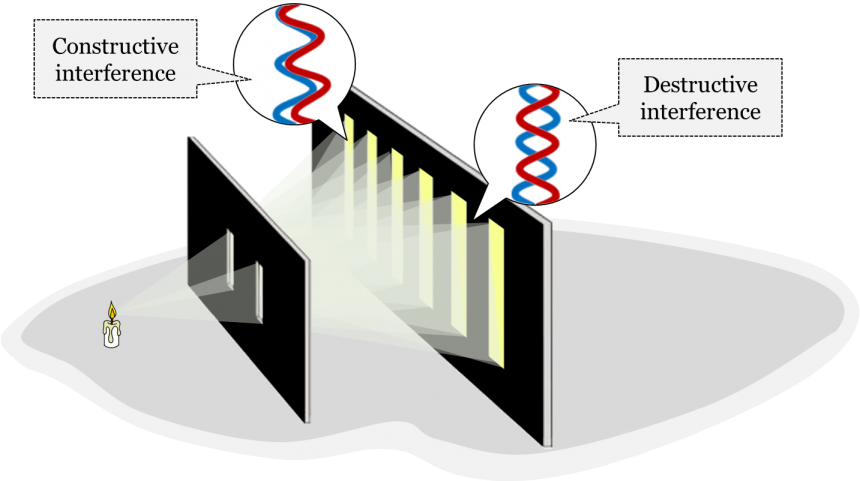

This strange phenomenon was nicely explained by the wave theory of light. According to this theory, light waves undergo a process called diffraction. Diffraction is the bending of a wave around an object or after passing through a hole that is narrow in relation to the wavelength of the light wave. So, rather than continuing in a straight line after passing through a single slit, the wave begins to spread out. In the experiment, however, we have two slits. As the light waves pass through both slits and begin to diffract on the other side of the first screen, they begin to interfere with one another. It’s similar to dropping two stones into a pool of calm water at the same time. The ripples will eventually hit one another and create bigger and smaller waves. This phenomenon is known as interference, which also happens with waves of light.

There are two types of interference. In destructive interference, the crests and troughs of the two waves are out of sync, so that the crest of one wave meets the trough of the other; the waves cancel each other out, forming darker regions on the screen behind the slits. In constructive interference, the crests and troughs of the two waves are in sync, and the waves reinforce each other, forming multiple bright regions on the screen.
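The alternation of bright and dark bands can be computed. A minimal sketch, assuming the standard far-field formula for two slits of width $a$ separated by a distance $d$ and lit by light of wavelength $\lambda$, in which the relative intensity at angle $\theta$ is

$$I(\theta) \propto \cos^2\!\left(\frac{\pi d \sin\theta}{\lambda}\right)\left(\frac{\sin\beta}{\beta}\right)^2, \qquad \beta = \frac{\pi a \sin\theta}{\lambda}.$$

The cosine factor is the interference between the two slits (bright where the path difference $d\sin\theta$ is a whole number of wavelengths, dark where it is an odd number of half wavelengths); the second factor is the diffraction envelope of each individual slit. The numbers used below (500 nm light, slits 10 μm wide and 50 μm apart) are illustrative assumptions.

```python
import math


def two_slit_intensity(theta: float, wavelength: float,
                       slit_sep: float, slit_width: float) -> float:
    """Relative intensity (1.0 at the centre) at angle theta behind two slits."""
    s = math.sin(theta)
    # Interference: the waves from the two slits differ in path by slit_sep * sin(theta).
    interference = math.cos(math.pi * slit_sep * s / wavelength) ** 2
    # Diffraction envelope caused by the finite width of each slit.
    beta = math.pi * slit_width * s / wavelength
    envelope = 1.0 if beta == 0 else (math.sin(beta) / beta) ** 2
    return interference * envelope


if __name__ == "__main__":
    lam, d, a = 500e-9, 50e-6, 10e-6  # metres (illustrative values)
    # Bright bands fall where sin(theta) = m * lam / d, i.e. roughly every 0.01 rad here.
    for step in range(31):
        theta = step * 0.001  # radians
        bar = "#" * round(40 * two_slit_intensity(theta, lam, d, a))
        print(f"{theta:.3f} rad |{bar}")
```

The printed bars rise and fall: full brightness where the waves arrive in sync, near-darkness halfway between, with the bright bands slowly fading under the single-slit envelope.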

The wave theory of light was accepted in the Newtonian worldview up until about the 1880s. After that, Newtonians accepted classical electrodynamics, which was a theory that gave a unified account of both electrical and magnetic phenomena. In this theory, light is still treated as a wave, but this time as electromagnetic radiation, i.e. a wave in an electromagnetic field that propagates through empty space without the need for a medium like the luminiferous ether.

By the 1920s, however, the Newtonian idea that fundamental physical entities were either particles or waves came to be replaced with another idea – the idea of wave-particle duality. To better understand this transition, we need to delve into an element of the Contemporary mosaic: quantum mechanics.

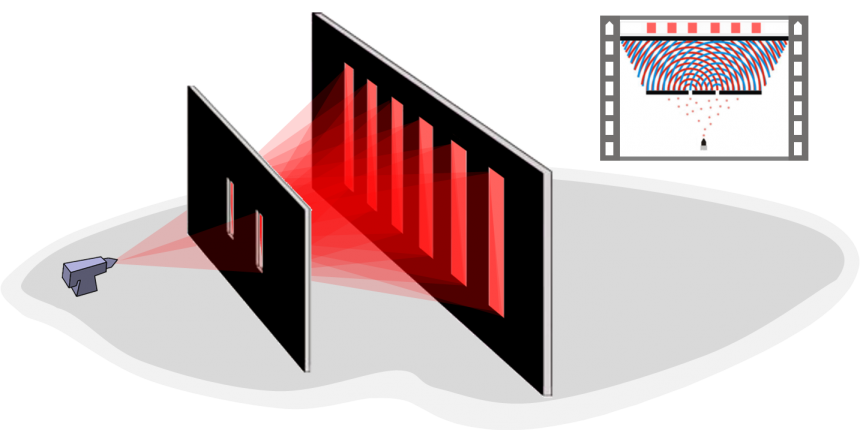

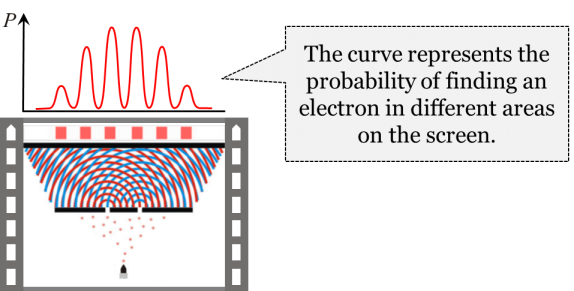

Quantum mechanics is the physical study of the quantum world – the physics of very tiny things. It tries to explain how matter behaves and interacts with energy at the scale of atoms and subatomic particles. The double-slit experiment was conducted again in the 1920s to gain new insight into the nature of quantum phenomena. The task was to find out whether elementary particles such as electrons are corpuscles or waves. The double-slit experiment, in this context, was slightly modified. Instead of a light source, a particle gun was used to fire elementary particles such as electrons. If electrons were corpuscular in nature, then one would expect two straight lines on the back screen. If, on the other hand, electrons were waves, one would expect diffraction patterns on the back screen. A diffraction pattern emerged on the back screen, suggesting that electrons are waves.

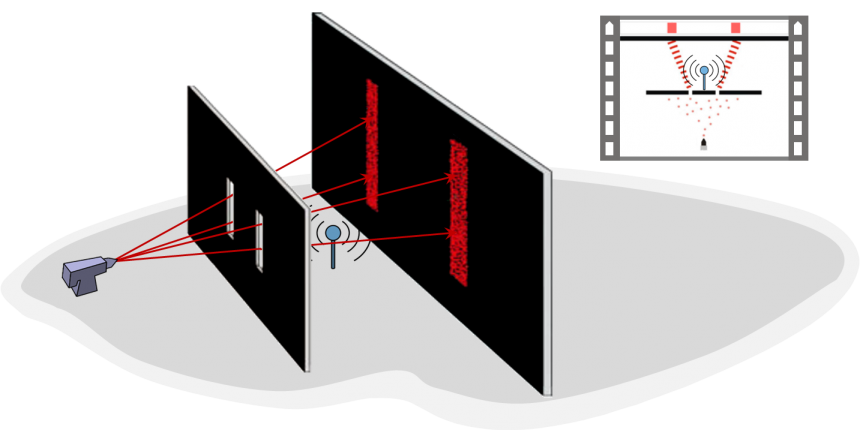

Even if electrons were fired at the screen one at a time, the pattern would still appear. This meant that each electron had to somehow pass through both slits, then diffract and interfere with itself in order to produce the diffraction pattern on the screen. To confirm that each electron passed through both slits, experimenters added a detector next to the slits that would tell us which of the two slits an electron passed through: the detector would indicate to the experimenters whether an electron passed through the left slit, the right slit, both slits, or neither slit. Because of the diffraction patterns they had observed, physicists expected the detector to indicate that an electron passed through both slits at once. However, the moment the detector was added to the setup, the diffraction pattern mysteriously disappeared; the screen now showed just two straight lines!

Each individual electron was detected to pass through only one of the two slits, but never through both. This was a surprising result, since electrons were expected to pass through both slits. It was a behaviour expected of corpuscles, not waves! What the experimenters realized, though, was that the electrons that were emitted behaved differently depending on the presence or absence of the detector. Whenever the detector was present, the electrons fired behaved like particles: only one detector would go off to indicate something had passed through its slit and no diffraction pattern would emerge. In contrast, whenever the detector was absent, the electrons fired behaved like waves and diffraction patterns would emerge.

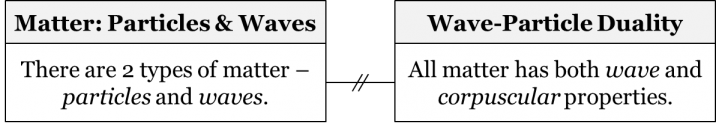

Physicists concluded from this experiment that all matter has both wave and corpuscular (i.e. particle-like) properties. All particles in the Standard Model of particle physics – the currently accepted theory of what basic building blocks of matter exist and how they interact – exhibit this dual behaviour. This includes electrons, photons (“light particles”), and even the (relatively) recently discovered Higgs boson. We consider this dual-behaviour of all matter a metaphysical principle of the Contemporary worldview, and call it wave-particle duality. The metaphysical principle of wave-particle duality came to replace the idea of separately existing waves and particles implicit in the Newtonian worldview in the early 20th century:

As another example of wave-particle duality, consider the so-called photoelectric effect. The photoelectric effect is a phenomenon whereby electrons are ejected from a metal surface when light is shone onto that surface. The question Einstein sought to answer was whether waves or particles of light were responsible for ejecting the electrons. To test this, one would vary both the frequency and the intensity of the light and observe the ejected electrons. Einstein hypothesized that light’s capacity to eject electrons with a certain energy depended solely on its frequency: no matter how high the light’s intensity, if its frequency didn’t pass a certain threshold, it would never eject any electrons from the metal surface. This fact was unaccounted for by the wave theory of light, which predicted that increasing the intensity of the light should eventually eject electrons at any frequency. Although Newtonians had shown that light behaved like waves, Einstein concluded that particles of light were knocking the electrons out of the metal surface. Einstein’s theory of the photoelectric effect did not do away with the earlier evidence supporting the wave theory of light. Light still exhibited the properties of a wave; only now, it also exhibited the properties of a particle.
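Einstein’s account can be summarized by the relation $K_{\max} = hf - \phi$: each particle of light carries energy $hf$ ($h$ is Planck’s constant, $f$ the frequency), and an electron escapes only if that energy exceeds the work function $\phi$, the minimum energy needed to free an electron from the metal. The sketch below assumes a work function of 2.3 eV, roughly the textbook value for sodium; the two frequencies are illustrative. Notice that intensity does not appear anywhere: on this account, brighter light means more particles of light and hence more ejected electrons, but not more energetic ones.

```python
PLANCK_H = 6.62607015e-34   # Planck constant, J*s (exact SI value)
EV = 1.602176634e-19        # joules per electronvolt (exact SI value)


def photon_energy_ev(frequency_hz: float) -> float:
    """Energy E = h * f of a single particle of light, in electronvolts."""
    return PLANCK_H * frequency_hz / EV


def max_kinetic_energy_ev(frequency_hz: float, work_function_ev: float) -> float:
    """K_max = h*f - phi; zero means no electrons are ejected, however bright the light."""
    return max(0.0, photon_energy_ev(frequency_hz) - work_function_ev)


def threshold_frequency_hz(work_function_ev: float) -> float:
    """Lowest frequency that can eject any electrons at all: f0 = phi / h."""
    return work_function_ev * EV / PLANCK_H


if __name__ == "__main__":
    phi = 2.3  # eV, assumed work function (roughly that of sodium)
    print(f"threshold: {threshold_frequency_hz(phi):.3e} Hz")
    for f in (4.3e14, 1.0e15):  # red light vs. ultraviolet light
        print(f"{f:.1e} Hz -> K_max = {max_kinetic_energy_ev(f, phi):.2f} eV")
```

Red light, below the threshold of roughly 5.6 × 10¹⁴ Hz, ejects nothing no matter how intense it is; ultraviolet light ejects electrons with up to about 1.8 eV of kinetic energy.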

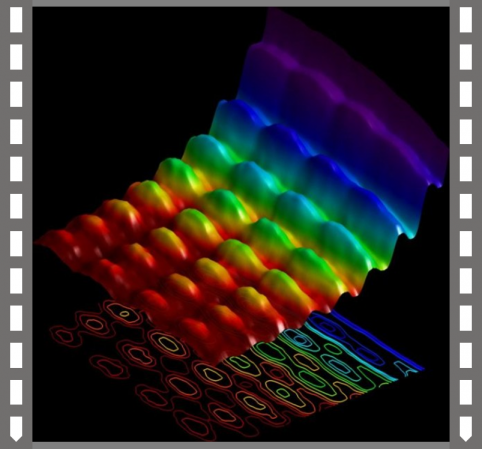

It is accepted in Contemporary quantum physics that light behaves sometimes as a particle and sometimes as a wave. In fact, in 2015, Nature Communications published the first photograph of the wave-particle duality of light, seen below.

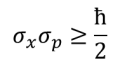

The duality of matter is also expressed in Heisenberg’s uncertainty principle, one of the fundamental principles of quantum physics. If formulated for a particle’s position and momentum, the uncertainty principle states:

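The more precisely the position is known (a spread of σx), the more uncertain the momentum (a spread of σp), and vice versa:

$$\sigma_x \, \sigma_p \geq \frac{\hbar}{2}$$

where ħ is the reduced Planck constant.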

The principle states that when we try to measure the position of an elementary particle, the uncertainty in the particle’s momentum increases, and conversely, when we try to measure the particle’s momentum, the uncertainty in its position increases. The two uncertainties are inversely related. Importantly, the principle is not about our inability to measure things precisely due to mere technological limitations. It has to do with the fact that elementary particles really are fuzzy entities that only become particle-like when they interact with an external system. This fundamental fuzziness is a consequence of the dual wave-particle nature of matter.
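To get a feel for the size of the effect, one can plug numbers into the position-momentum relation $\sigma_x \sigma_p \geq \hbar/2$, where $\hbar \approx 1.055 \times 10^{-34}$ J·s is the reduced Planck constant. The sketch below is a back-of-the-envelope calculation rather than a physical model: it compares an electron confined to a region about the size of an atom ($\sigma_x \approx 10^{-10}$ m, an assumed round figure) with a golf ball of about 46 grams whose position is pinned down to within a micrometre.

```python
HBAR = 1.054571817e-34            # reduced Planck constant, J*s (CODATA 2018)
ELECTRON_MASS = 9.1093837015e-31  # kg (CODATA 2018)
GOLF_BALL_MASS = 0.046            # kg, approximate


def min_momentum_spread(sigma_x: float) -> float:
    """Smallest sigma_p (kg*m/s) permitted by sigma_x * sigma_p >= hbar / 2."""
    return HBAR / (2 * sigma_x)


def min_velocity_spread(sigma_x: float, mass: float) -> float:
    """Corresponding minimum spread in velocity, sigma_v = sigma_p / m (m/s)."""
    return min_momentum_spread(sigma_x) / mass


if __name__ == "__main__":
    print(f"electron in an atom: {min_velocity_spread(1e-10, ELECTRON_MASS):.2e} m/s")
    print(f"golf ball to 1 um:   {min_velocity_spread(1e-6, GOLF_BALL_MASS):.2e} m/s")
```

For the electron, the unavoidable spread in velocity is several hundred kilometres per second; for the golf ball it is around 10⁻²⁷ m/s, far too small ever to notice, which is why the fuzziness of matter is irrelevant for golf balls but decisive for electrons.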

In quantum mechanics, the evolution of a quantum system is described by Schrödinger’s wave equation. According to Schrödinger’s wave equation, elementary particles are fuzzy, wavelike entities that nonetheless exhibit a definite particle-like state when they are observed. But, even in identical circumstances, this state is not always the same state. The new theories accepted as part of quantum mechanics meant that scientists of the Contemporary worldview adopted a new perspective on cause and effect relationships, modifying their earlier belief in determinism. So, let’s consider what determinism is all about and briefly highlight what scientists of previous worldviews had to say about the concept, before further delving into what the Contemporary view is on this issue.

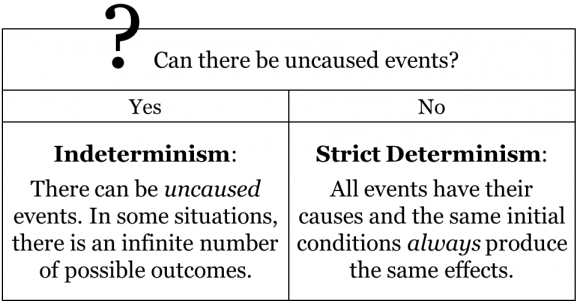

Quantum mechanics affected not only our views on the nature of matter but also our understanding of causation. The question of whether the future is completely determined by the past, or whether it is, in some sense, open, has been one of the central questions of metaphysics since the days of Aristotle. Different stances on this issue affect many aspects of one’s worldview, including one’s belief in free will, fate, and predetermination. The question also has serious moral repercussions. For instance, if everything in the universe turns out to be strictly determined by the past course of events, then how can we blame a criminal for their crimes? If their actions were indeed completely determined by the whole past of the universe, then, by some philosophical accounts, it is the universe that’s to be blamed, not the criminal! Just as with other metaphysical questions, the question of causation has had several different answers. The two basic views on causation are determinism and indeterminism.

Determinism (also known as causal determinism, or strict determinism) is essentially the idea that every event is determined by preceding events. More crudely, it means that for every effect, there is always a cause that brings it about. Thus, if we could know the present state of the universe (e.g. the positions, masses, and velocities of all the material particles in the universe), we could, in principle, calculate the future states of the universe. Of course, since we are not omniscient and since we don’t have unlimited measuring and calculating capacities, we may never actually be in a position to make such predictions and know the future with absolute precision. But this is beside the point; what is important here is that, according to strict determinists, nature itself “knows” where it is going: its future is determined by its past.

The opposite of determinism is indeterminism, which suggests that there can be uncaused events, i.e. events that are completely random and don’t follow from the preceding course of events. Note that indeterminists don’t necessarily claim that all events are uncaused. It’s sufficient to accept the existence of some uncaused events to qualify as an indeterminist.

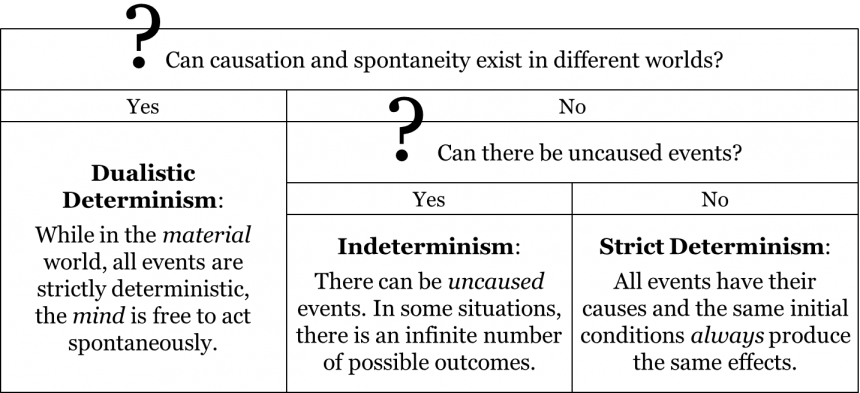

Determinism and indeterminism don’t exhaust the spectrum of views on the issue. One historically popular conception is that of dualistic determinism, which holds that while events in the material world are strictly determined, the human mind has free will and is capable of acting spontaneously. It was this view that was implicit in several worldviews.

In the Aristotelian-Medieval worldview, nearly all events were considered strictly determined, except for those events affected by human free will or divine acts. The Aristotelian-Medieval community believed that all celestial phenomena are strictly deterministic, as they knew that the future positions of stars and planets were predictable. They extended this deterministic view to terrestrial phenomena as well, because the celestial realm exerted influence on the natural processes of the terrestrial realm. That is, medieval scholars held that terrestrial processes unaffected by human free will were also deterministic. However, they also believed that a benevolent God had granted humans free will – an ability to act spontaneously and make decisions that do not always follow from the preceding courses of events. They accepted that many human actions and decisions were uncaused. In other words, the conception of dualistic determinism was implicit in the Aristotelian-Medieval worldview: in the material world all events are deterministic, but the mind is free to act spontaneously. Philosophically speaking, the conception of dualistic determinism differs from both strict determinism and indeterminism in that it assumes that causation and spontaneity can exist in different worlds:

While disagreeing with Aristotelian-Medieval scholars on many metaphysical issues, both Cartesians and Newtonians held a very similar perspective on the issues of causation: both communities accepted dualistic determinism, albeit for their own reasons. Their deterministic stances regarding the material world actually stemmed from the universality of their laws of physics. For instance, in Newton’s physics, if one knows the current arrangement of planets in the solar system, one can at least in principle predict the positions of planets for any given moment in the future. The same goes for any material system, no matter how complex. While it may be virtually impossible for humans to do the actual calculations and make these predictions, according to the laws of Newtonian physics the future of any material system strictly follows from its past. The same holds for Cartesian physics: the same initial conditions always produce the same effects. That said, Cartesians and Newtonians also accepted that the mind has free will to make uncaused decisions and, thus, disrupt the otherwise deterministic course of events. As a result, both Cartesians and Newtonians believed that the future of the world wasn’t strictly deterministic as long as it was affected by creatures with free will. Thus, dualistic determinism was implicit in not only the Aristotelian-Medieval worldview, but in the Cartesian and Newtonian worldviews as well.
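The claim that, under Newton’s laws, the future of a material system follows strictly from its present state can be illustrated with a toy calculation. The sketch below rests on simplifying assumptions of our own: a single planet orbiting a fixed Sun, units of astronomical units and years (so that the Sun’s GM equals 4π²), and a step-by-step “velocity Verlet” approximation to Newton’s laws. It predicts the planet’s position some time ahead; given the same initial position and velocity, it returns exactly the same answer every time, because nothing in the calculation leaves room for chance.

```python
import math
from typing import Tuple

# Units: astronomical units (AU) and years, in which the Sun's GM equals 4*pi^2.
GM_SUN = 4 * math.pi ** 2


def simulate_orbit(x: float, y: float, vx: float, vy: float,
                   years: float, dt: float = 0.001) -> Tuple[float, float]:
    """Predict a planet's position after `years`, given its present position and
    velocity, by stepping Newton's law of gravitation forward (velocity Verlet)."""

    def accel(px: float, py: float) -> Tuple[float, float]:
        r3 = (px * px + py * py) ** 1.5
        return -GM_SUN * px / r3, -GM_SUN * py / r3

    ax, ay = accel(x, y)
    for _ in range(round(years / dt)):
        # Half-step the velocity, full-step the position, then finish the velocity step.
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        x += dt * vx
        y += dt * vy
        ax, ay = accel(x, y)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
    return x, y


if __name__ == "__main__":
    start = (1.0, 0.0, 0.0, 2 * math.pi)  # 1 AU from the Sun, moving at circular-orbit speed
    print(simulate_orbit(*start, years=0.5))  # roughly (-1, 0): half an orbit later
    print(simulate_orbit(*start, years=1.0))  # roughly (1, 0): back where it started
```

Run it as often as you like: the same initial conditions always yield the same predicted positions, which is exactly what strict determinism about the material world asserts.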

Now we can consider how the theories of quantum mechanics and the metaphysical principle of wave-particle duality in the Contemporary worldview affect current scientists’ views on determinism. A quick hint: they’re not dualistic determinists.

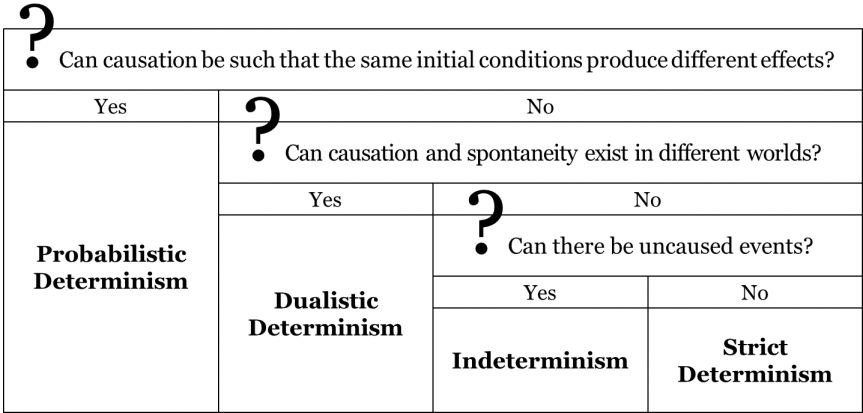

To better understand the stance of the Contemporary worldview on the question of causation, let’s consider the process of radioactive decay. Nowadays, physicists accept that there is a 50% probability that a given atom of radium will decay into radon and helium within 1600 years. This period of time is known as the half-life of radium. Say we could isolate one thousand atoms of radium, place them into a sealed container, and open the container again after 1600 years. Statistically speaking, we’re most likely to find about 500 atoms of radium left, while the other 500 atoms will have decayed into radon and helium. What we observe here is that all of the atoms of radium were placed into the same container, yet only half of them decayed while the rest remained intact. The fact that the same initial conditions can lead to two distinct outcomes suggests that the fundamental processes of the world are probably not strictly deterministic. Instead, quantum physics seems to suggest that fundamental processes are determined probabilistically. This is the view of probabilistic determinism, which is implicit in the Contemporary worldview.
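The statistical law of decay can be written as $N(t) = N_0 \left(\tfrac{1}{2}\right)^{t/T}$, where $T$ is the half-life (1600 years for radium, as above). The sketch below compares that law with a simulation in which every atom is given exactly the same chance of surviving, and only chance decides which atoms decay. The function names and the use of Python’s pseudo-random number generator are our own assumptions; note that such a generator is itself a deterministic algorithm, so the simulation merely imitates probabilistic behaviour and does not model any physical mechanism.

```python
import random
from typing import Optional

HALF_LIFE_YEARS = 1600.0  # half-life of radium, as given in the text


def surviving_fraction(t_years: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Expected fraction of atoms not yet decayed after t years: (1/2) ** (t / T)."""
    return 0.5 ** (t_years / half_life)


def simulate_decay(n_atoms: int, t_years: float, seed: Optional[int] = None) -> int:
    """Number of atoms left after t years if each one survives independently
    with the same probability: the law fixes the odds, not the individual fates."""
    rng = random.Random(seed)
    p_survive = surviving_fraction(t_years)
    return sum(1 for _ in range(n_atoms) if rng.random() < p_survive)


if __name__ == "__main__":
    print("expected:", 1000 * surviving_fraction(1600))  # prints 500.0
    for _ in range(3):
        print("simulated:", simulate_decay(1000, 1600))  # close to 500, varies per run
```

Each run leaves roughly 500 atoms, but which atoms survive, and exactly how many, differs from run to run even though every atom started with identical odds.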

According to probabilistic determinism, all events have their causes, but the same initial conditions may produce different effects.

All of these effects are probabilistically determined, in the sense that there is a certain likelihood that a certain course of events will occur, but this course of events is not strictly determined by the past course of events. In our example above, there is a statistical likelihood for decay, i.e. there is a 50% chance of a radium atom decaying within 1600 years. Yet, the theory doesn’t indicate which of the atoms will or will not decay in 1600 years. Probabilistic determinism interprets this as suggesting that future events are determined by past events but not strictly: nature is constrained to a limited number of options.

Consider another example, the double-slit experiment in quantum physics. Quantum theory does not tell us where an individual electron sent through the two slits will land on the back screen; it only allows us to predict the probability with which the electron will strike a certain region of that screen. That probability will be greater in the bright bands of the interference pattern, and smaller in the dark bands.

It is impossible to say with certainty where on the back screen a given electron will strike after passing through the slits. But there is a high probability that it will strike in one of the bright bands. According to probabilistic determinism, this is another indication that natural processes are caused probabilistically rather than strictly.
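The same idea can be sketched for the double-slit experiment. The following Python example is a simplified model, assuming the idealized pattern for two very narrow slits, in which the likelihood of an electron landing at position u on the screen is proportional to cos²(πu), with u measured in units of the spacing between bright bands. Each electron’s landing spot is drawn at random from that distribution by rejection sampling, so no single landing spot is predictable, while the overall pattern is. All names here are hypothetical.

```python
import math
import random


def landing_density(u: float) -> float:
    """Relative likelihood (unnormalized, at most 1) of an electron landing at u.

    u is a screen position in units of the bright-band spacing: bright bands sit
    at whole numbers, dark bands halfway between. This is the idealized pattern
    for two very narrow slits; real patterns also fade away from the centre.
    """
    return math.cos(math.pi * u) ** 2


def fire_electron(rng: random.Random, half_width: float = 3.0) -> float:
    """Draw one landing position by rejection sampling from landing_density."""
    while True:
        u = rng.uniform(-half_width, half_width)
        if rng.random() < landing_density(u):
            return u


def in_bright_band(u: float) -> bool:
    """True if u lies within a quarter band-spacing of a bright-band centre."""
    return abs(u - round(u)) < 0.25


if __name__ == "__main__":
    rng = random.Random(42)
    hits = [fire_electron(rng) for _ in range(10_000)]
    print("first five landing spots:", [round(u, 2) for u in hits[:5]])
    print("share near bright bands:", sum(map(in_bright_band, hits)) / len(hits))
```

Integrating cos²(πu) shows that about 82% of the probability lies within a quarter of a band spacing of a bright-band centre, so most simulated electrons end up in or near the bright bands, even though any individual hit cannot be predicted in advance.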

We should be careful not to confuse probabilistic determinism with indeterminism. While both concepts admit a multiplicity of outcomes following the same initial conditions, probabilistic determinism sets a clear limit on the number of possible outcomes. An indeterminist would, technically speaking, be open to the possibility that after 1600 years radium could transform into a bar of gold, or a pile of dirt, or Slimer the slimy green ghost from Ghostbusters. However, a probabilistic determinist places a limitation on the number of potential effects that could follow the same cause and suggests that not everything can happen: nature ‘chooses’ from a limited set of options. In other words, according to probabilistic determinism, there is not an infinite number of possible outcomes given a certain set of initial conditions.

Now, how would a strict determinist react to this? One natural reaction is to suspect that there is something deficient in our knowledge of the initial conditions. Indeed, when quantum mechanics was created in the 1920s, this was precisely the reaction of some famous physicists, including Albert Einstein. While Einstein appreciated that quantum mechanics provided a great improvement in our understanding of the world of elementary particles, he also argued that the theory is deficient because it fails to provide precise predictions of quantum phenomena. According to Einstein and other strict determinists, if half of the atoms of radium end up decaying while the other half do not, then perhaps this is because they didn’t start from the same initial conditions; perhaps there was some unknown cause that led to only some of the atoms of radium decaying. This is the interpretation of the strict determinist, who believes that all events are strictly caused by past events and the probabilistic nature of our predictions is a result of the lack of knowledge on our part. According to strict determinists, there must be a hidden cause that explains why those particular 500 atoms ended up decaying. Our theories may be incapable of telling us what that hidden cause is, but that is exclusively our problem; nature itself “knows” where it’s going. In this view, future research may reveal that hidden cause and restore the strictly deterministic picture of the world. Strict determinists would compare the case of radioactive decay with that of a coin toss. If we were to toss a coin one thousand times, we would probably observe it landing on heads approximately half the time. Colloquially, we might say that there is a 50/50 chance of a flipped coin landing on heads or tails. However, this is clearly a result of our lack of knowledge of initial conditions.
If we were to measure the initial position of the coin before each toss as well as the force applied to the coin, then we would be able to predict precisely whether it will land heads or tails. If we wanted to, we could even construct a contraption that could toss a coin in such a way that it would consistently land on heads. According to strict determinists, the situation with radioactive decay is similar, except that we are not in a position to measure the initial conditions precisely enough to be able to predict the outcome. However, nature “knows” the outcome of the process of decay, just as it “knows” whether a coin will land on heads or tails. In short, strict determinists would deny the idea of something being caused probabilistically; in their view, everything has a cause and follows from that cause in a strict fashion.
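The strict determinist’s coin can be modelled in a few lines of Python. The sketch below is a deliberately simplified, hypothetical model (a coin caught at the height it was launched from, spinning at a constant rate, with no bouncing or air resistance): given the launch speed and spin rate, the outcome follows with certainty. The apparent 50/50 chance only reappears when we feed in initial conditions that we cannot pin down precisely.

```python
import math
import random

G = 9.81  # gravitational acceleration near the Earth's surface, m/s^2


def coin_outcome(upward_speed: float, spin_rate: float, starts_heads_up: bool = True) -> str:
    """Predict a caught coin toss from its initial conditions (hypothetical toy model).

    Assumptions: the coin is caught at the height it was launched from, so it is
    airborne for 2 * upward_speed / G seconds; it spins at a constant spin_rate
    (revolutions per second); there is no bouncing or air resistance. Every
    completed half-turn flips which face is up.
    """
    flight_time = 2 * upward_speed / G
    half_turns = math.floor(2 * spin_rate * flight_time)
    same_face_up = half_turns % 2 == 0
    return "heads" if starts_heads_up == same_face_up else "tails"


if __name__ == "__main__":
    # Same initial conditions, same outcome, every time (the "contraption").
    print([coin_outcome(2.4, 38.0) for _ in range(3)])

    # Initial conditions we cannot measure precisely: drawn from plausible ranges.
    rng = random.Random(7)
    tosses = [coin_outcome(rng.uniform(2.0, 3.0), rng.uniform(30.0, 40.0)) for _ in range(1000)]
    print("share of heads:", tosses.count("heads") / len(tosses))
```

The first part shows the contraption idea: identical inputs always yield the identical face. The second part shows how ignorance of the inputs, rather than any chance in the coin itself, produces roughly even odds.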

How would a probabilistic determinist respond to this? Probabilistic determinists would readily agree that our theories are not perfect descriptions of the world; after all, we are all fallibilists and understand that no empirical theory is perfect. But is this reason enough to ignore what our current theories tell us and instead speculate about what our future theories will be like? In the absence of an absolutely correct description of the world, our best bet is to study carefully what our currently accepted theories tell us about the world. Is it conceivable that our future quantum theories will be strictly deterministic and will provide precise predictions of all quantum phenomena? If we are fallibilists, our answer is “yes”: such a scenario is conceivable. But it is equally conceivable that our future theories will be probabilistic – we have no way of forecasting this. Our best option is to stop guessing what our future theories may or may not bring us and focus instead on what our currently accepted theories have to say on the matter. Thus, according to probabilistic determinists, the implications of our current quantum theory should be taken seriously, and probabilistic determinism is one of these implications. On this view, the probabilistic predictions of quantum theory have nothing to do with our lack of knowledge or our failure to predict the outcome: the process is itself probabilistic. Since the theory tells us that radioactive decay is not a strictly deterministic process, that’s how we should view it. Everything else is speculation about what the future may have in store.

General Relativity and Cosmology

So, we understand a bit about how physics works at the quantum scale and its implications for the Contemporary worldview, but what about physics at the large cosmological scale? For this, we should refer to the general theory of relativity proposed by Einstein in 1915. As we learned in chapter 1, Einstein’s theory of general relativity posits that objects with mass curve the space around them. This applies to all objects with mass but becomes especially apparent in the case of more massive objects, such as the Earth, the Sun, and the Milky Way galaxy, or extremely compressed objects like black holes. The curvature of space around an object depends on its mass and its density. This is why the curvature of space around a massive object compressed to extreme density, like the singularity of a black hole, is such that even rays of light cannot escape. Consequently, according to general relativity, there is no force of gravity and, therefore, objects don’t really attract each other, but merely appear to be attracting each other as a result of moving inertially in a curved space.

Similarly, general relativity predicts that material objects affect time by making it run slower relative to a clock in a less strongly curved region of space-time. Suppose we have two synchronized clocks; we keep one on the surface of the Earth, and we take the other to the International Space Station, where space-time is less strongly curved than at the Earth’s surface. According to general relativity, the clock in the Space Station will run slightly faster than the one on Earth, because time on the Earth runs slightly slower due to the space-time curvature of Earth’s gravitational field. As a result, when the Space Station clock is brought back to the Earth, it will show a time that is slightly ahead of that shown by the one on the Earth. This difference between the elapsed times is known as time dilation. In general, the stronger the gravitational field of the object, the greater the time dilation. For instance, if the Earth were either compressed to a greater density or increased in mass, space-time would be more strongly curved at its surface. The difference between the two clocks would therefore be greater. Note that this thought experiment isolates the effect of gravity. The Space Station also orbits at high speed, and special relativity predicts that such motion slows a clock down; for the Space Station this speed effect actually outweighs the gravitational one, so a real clock aboard it would end up slightly behind.
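To get a feel for the size of these effects, here is a rough Python estimate using the standard weak-field approximations: gravity makes a higher clock gain GM/c² × (1/r_ground − 1/r_orbit) per unit time, while an orbital speed v makes it lose about v²/(2c²). We assume circular orbits, ignore the Earth’s rotation, and use rounded altitudes of about 400 km for the Space Station and about 20,200 km for GPS satellites.

```python
# Rough weak-field estimates of how fast an orbiting clock runs compared with one
# on the ground. Assumptions: circular orbits, Earth's rotation ignored, rounded altitudes.
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter GM, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_EARTH = 6.371e6           # mean radius of the Earth, m
SECONDS_PER_DAY = 86_400


def gravitational_gain(r_orbit: float, r_ground: float = R_EARTH) -> float:
    """Fractional rate by which a clock at radius r_orbit runs faster than one at
    r_ground because it sits higher in Earth's gravitational field (general relativity)."""
    return GM_EARTH / C**2 * (1 / r_ground - 1 / r_orbit)


def velocity_loss(r_orbit: float) -> float:
    """Fractional rate by which a clock in a circular orbit runs slower because of
    its orbital speed v, using v^2 / (2 c^2) (special relativity, v much less than c)."""
    v_squared = GM_EARTH / r_orbit  # circular-orbit speed squared
    return v_squared / (2 * C**2)


def microseconds_per_day(rate: float) -> float:
    """Convert a fractional rate difference into microseconds gained per day."""
    return rate * SECONDS_PER_DAY * 1e6


for name, altitude_m in [("Space Station", 400e3), ("GPS satellite", 20_190e3)]:
    r = R_EARTH + altitude_m
    gain = microseconds_per_day(gravitational_gain(r))
    loss = microseconds_per_day(velocity_loss(r))
    print(f"{name}: gravity +{gain:.1f}, speed -{loss:.1f}, net {gain - loss:+.1f} microseconds/day")
```

With these assumptions, the gravitational gain for a GPS satellite comes out at roughly 46 microseconds per day against a speed-related loss of roughly 7, for a net gain of about 38 microseconds per day, in line with the correction built into the GPS system. For the Space Station, the gravitational gain of only a few microseconds per day is outweighed by the speed effect, so gravitational time dilation there shows up only once the effect of speed is accounted for.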

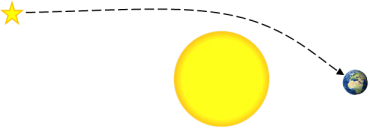

Both the curvature of space and time dilation have been experimentally confirmed. It follows from Einstein’s general relativity that even light itself will bend noticeably around very massive objects. Consider a light ray coming from a distant star. According to the theory, it should bend slightly while passing in the vicinity of massive objects. It follows, therefore, that the same star should appear at a slightly different location in the sky when the light coming from that star passes near the Sun.

To observe this effect, we first take a picture of that specific region of the sky at night, at a time of year when the Sun is far away from it, and then take another picture of that same region when the Sun is in its vicinity. To take the latter picture we will need to wait for a total solar eclipse, so that the Sun’s glare doesn’t drown out the faint light coming from the distant stars. According to general relativity, the stars in the vicinity of the Sun will appear slightly displaced away from the Sun, because the light rays coming from these stars bend as they pass near it.

The phenomenon of light-bending was one of the novel predictions of general relativity. Thus, when the amount of light bending predicted by Einstein’s theory was first observed by Arthur Eddington in 1919, it was considered a confirmation of general relativity. Since the method of the time was the hypothetico-deductive method, this led to the acceptance of general relativity ca. 1920 and the rejection of the Newtonian theory of gravity. Since then, several other novel predictions of general relativity have been confirmed. For instance, the phenomenon of time dilation has been confirmed by slight differences in the times shown by precise clocks kept at different altitudes, such as on the ground and aboard aircraft or rockets. The phenomenon was also confirmed by the fact that the clocks on GPS satellites run slightly faster than those on the Earth.
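The amount of bending at stake in 1919 can be estimated with the weak-field formula of general relativity, in which a light ray passing a mass M at closest distance b is deflected by an angle of 4GM/(c²b). The Python sketch below, using standard values for the Sun’s gravitational parameter and radius, computes the deflection for a ray grazing the Sun’s edge, along with half that value, which is what a Newtonian-style calculation treating light as falling particles gives.

```python
import math

GM_SUN = 1.32712440018e20            # Sun's gravitational parameter GM, m^3/s^2
R_SUN = 6.957e8                      # radius of the Sun, m
C = 299_792_458.0                    # speed of light, m/s
ARCSEC_PER_RADIAN = 180 / math.pi * 3600


def deflection_arcsec(closest_distance: float, gm: float = GM_SUN) -> float:
    """Weak-field general-relativistic bending angle 4GM / (c^2 b), in arcseconds,
    for a light ray whose closest approach to the mass is b = closest_distance."""
    return 4 * gm / (C**2 * closest_distance) * ARCSEC_PER_RADIAN


grazing = deflection_arcsec(R_SUN)
print(f"ray grazing the Sun (general relativity): {grazing:.2f} arcsec")
print(f"Newtonian-style estimate (half as much): {grazing / 2:.2f} arcsec")
print(f"ray passing at 5 solar radii: {deflection_arcsec(5 * R_SUN):.2f} arcsec")
```

A ray grazing the Sun comes out at about 1.75 arcseconds, twice the Newtonian-style figure of about 0.88; telling these two values apart was the point of the 1919 observations. Because the angle falls off as 1/b, stars whose light passes farther from the Sun are displaced less.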

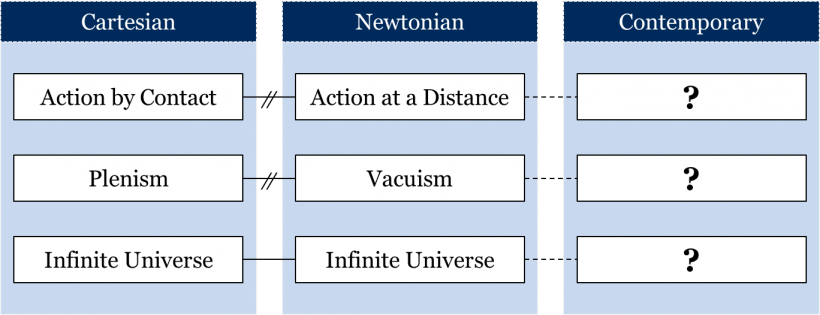

As with any fundamental theory, general relativity has an effect on accepted metaphysical principles. So how does general relativity shape our contemporary metaphysical principles? Explicating the metaphysical principles implicit in the Contemporary worldview is not always an easy task, since our currently accepted theories sometimes seem to suggest conflicting metaphysical principles. The point here is not that contemporary scientists are not in tune with their own theories. It’s instead that it’s difficult to know what principles to extract from our currently accepted theories. In what follows we will outline a number of metaphysical questions to which we won’t provide the contemporary answers. Instead, we will show why the extraction of the answers to these questions is not a simple task.

Let’s start with the question of empty space: according to the Contemporary worldview, can there be empty space? Physicists sometimes speak of the ‘vacuum of outer space’ to emphasize the overall emptiness of the universe. However, when pressed, they might admit that a few hydrogen atoms exist for every cubic meter of outer space, which might be interpreted in tune with the conception of plenism. Yet, between the hydrogen atoms would be nothingness, meaning a very close approximation of a vacuum seems to be possible. On the other hand, observations of cosmic microwave background radiation also suggest that photons left over from the Big Bang permeate all seemingly empty space of the universe. This seems to suggest that a perfect vacuum in nature is impossible after all. Then again, general relativity tells us that space and time lack an independent existence and instead constitute a unified four-dimensional space-time, which is affected by both matter and energy in the universe. The dependence of space-time on matter and energy seems to suggest that there can be no empty space. Quantum mechanics also implies that there is no empty space. In addition to the position and momentum uncertainty relationship mentioned earlier, Heisenberg’s uncertainty principle involves a time and energy uncertainty relationship, which means that the shorter the time interval one considers, the greater the uncertainty regarding the amount of energy in a region of space. In effect, in any region of space, so-called virtual particles are constantly popping into existence and vanishing from existence. This phenomenon is referred to as quantum foam. This surprising effect is a well-tested phenomenon, supported by many experiments. Perhaps, then, plenism is implicit in the Contemporary worldview. Thus, the question is: which metaphysical view – vacuism or plenism – is implicit in our Contemporary worldview?

What about motion in the universe: do objects move exclusively as a result of action by contact, or is action at a distance possible in the Contemporary worldview? On the one hand, we accept nowadays that the speed of light is the ultimate speed in the universe. Traveling faster than the speed of light would violate our current laws of physics. Physics therefore rules out any action at a distance that propagates faster than light, most clearly instantaneous action at a distance. According to general relativity, even changes in the curvature of space-time propagate at the speed of light. To appreciate this, consider that the Sun curves the space-time around it. It takes the light rays of the Sun approximately 8 minutes to reach the Earth. If, by some miracle, the Sun were to disappear, it would take approximately 8 minutes for the change in the curvature of space-time to propagate to the Earth and alter the Earth’s orbital motion. The Earth wouldn’t start hurtling away into space immediately. If instantaneous action at a distance is theoretically impossible, then perhaps all matter moves and interacts through actual contact limited by the speed of light.

On the other hand, physicists working on quantum mechanics tell us of quantum entanglement, which – at least at face value – seems to allow for instantaneous action at a distance. Quantum entanglement is a phenomenon whereby a pair of particles at an arbitrary distance apart is entangled such that measuring and manipulating the properties of one particle in the pair causes the other, at any distance away, to instantaneously change as a result. This phenomenon was experimentally confirmed in a landmark 1982 experiment by Alain Aspect. Since then, numerous experiments have been conducted with different types of particles, with different properties of particles (e.g. momentum, spin, location), as well as with varied distances between the entangled particles. Every time, manipulating one particle instantaneously changes the other. These experiments with quantum entanglement seem to suggest that instantaneous action at a distance is possible, despite the limitations imposed by the speed of light. This is clearly in conflict with the idea of action by contact that follows from general relativity. Now the question is: do contemporary physicists accept the possibility of action at a distance, or do they manage to reconcile the seeming action at a distance of entangled particles with the idea of action by contact?

Our final question of this section is whether the universe is finite or infinite. As far as the related question of physical boundaries of the universe is concerned, Contemporary science accepts that the universe is boundless, i.e. there are no physical boundaries to the universe. But a universe without boundaries can still be finite if the universe loops back into itself, like the four-dimensional equivalent of a sphere. This kind of a universe will be boundless but finite. Alternatively, the universe might be boundless and infinitely stretching outwards. So, do we accept in the Contemporary worldview that the universe is finite or infinite? Answering this question depends on the actual curvature of the space of the universe. Alexander Friedmann suggested three models of the universe, each with its distinct curvature:

Open universe: An open universe has a negative curvature, meaning that space-time curves in two different directions from a single point. While the curvature of a three-dimensional space can be precisely described mathematically, it is difficult (if not impossible) to imagine. It becomes easier if we think in terms of a two-dimensional analogue, in which case a three-dimensional space becomes a two-dimensional surface. In the case of an open universe this surface is curved into a shape like a saddle, or a Pringle chip. Since space-time would never curve back onto itself in this type of universe, the open universe will also be an infinite universe.

Closed universe: A closed universe has a positive curvature, meaning that space-time curves away in the same direction from any single point in the universe. Again, using our two-dimensional analogy, we can think of a closed universe as a surface shaped like a sphere, curving in the same direction from any point. It would also be a finite universe, for the continuous curve would eventually circle back around to itself. If we travelled far enough out into space on a spaceship, we would eventually reach Earth!

Flat universe: A flat universe has, overall, zero curvature, meaning that space-time extends in straight lines in all directions away from any single point. We can think of a flat universe as being shaped like a flat piece of paper, or a flat bedsheet. Such a universe would still have occasional bends here and there around massive objects; however, it would be flat overall. A flat universe would be an infinite universe, as space-time is never curving back onto itself.

All three models of the universe are compatible with general relativity, yet they propose different geometries of the universe. Now, which of these models best describes the space of our universe? The answer to this question depends on the average density of mass (and energy) in the universe. If that density is greater than a certain critical threshold, then the universe curves back onto itself and is finite; if it is smaller, the universe is open and infinite; and if it is exactly equal to the threshold, the universe is flat and infinite.
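The threshold in question is usually expressed as the critical density, ρ_c = 3H²/(8πG), where H is the Hubble constant describing the present expansion rate. The following Python sketch computes it, assuming a round value of 70 km/s per megaparsec for H, and classifies a given average density into one of Friedmann’s three geometries. The function names are our own.

```python
import math

G = 6.674e-11               # Newton's gravitational constant, m^3 kg^-1 s^-2
M_HYDROGEN = 1.6735e-27     # mass of one hydrogen atom, kg
M_PER_MPC = 3.0857e22       # metres in one megaparsec


def critical_density(h0_km_s_mpc: float) -> float:
    """Critical density rho_c = 3 H^2 / (8 pi G), in kg/m^3."""
    h = h0_km_s_mpc * 1e3 / M_PER_MPC   # convert km/s/Mpc to 1/s
    return 3 * h**2 / (8 * math.pi * G)


def friedmann_geometry(mean_density: float, h0_km_s_mpc: float) -> str:
    """Classify a Friedmann model by the density parameter Omega = rho / rho_c."""
    omega = mean_density / critical_density(h0_km_s_mpc)
    if math.isclose(omega, 1.0, rel_tol=1e-9):
        return "flat (infinite)"
    return "closed (finite)" if omega > 1.0 else "open (infinite)"


if __name__ == "__main__":
    rho_c = critical_density(70.0)  # assumed H0 = 70 km/s/Mpc
    print(f"critical density: {rho_c:.2e} kg/m^3, about {rho_c / M_HYDROGEN:.1f} hydrogen atoms per m^3")
    for factor in (0.5, 1.0, 2.0):
        print(f"{factor} x critical density -> {friedmann_geometry(factor * rho_c, 70.0)}")
```

With this value of H, the critical density comes out at roughly 9 × 10⁻²⁷ kg per cubic metre, the equivalent of about five or six hydrogen atoms per cubic metre. A universe slightly denser than that would be closed and finite; one slightly less dense would be open and infinite. In practice, of course, no measurement can establish that the density is exactly equal to the threshold.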

One takeaway here is that it’s not always easy to extract the metaphysical principles implicit in a worldview. Often it requires a meticulous analysis of the theories accepted in that mosaic and the consequences that follow from these theories. Thus, the stance of a community towards a metaphysical question may or may not be easy to unearth. The question of empty space, the question of action at a distance, and the question of finite/infinite universe are some of the most challenging metaphysical questions in the context of the Contemporary worldview.

The Big Bang theory

What about the topic of religion in the Contemporary worldview? It’s a strange topic to bring up while discussing the physics of special and general relativity. But in a sense, physicists strayed into the territory of theologians in the twentieth century when they attempted to explain the origins of the universe. The currently accepted theory on the origins of the universe is called the Big Bang theory.

In the late 1800s and early 1900s, astronomers began using a new instrument called a spectroscope to observe celestial objects. A spectroscope essentially measures the distribution of wavelengths of light emitted by an astronomical object, allowing astronomers to determine certain features of a star, galaxy, or other celestial body. Because each chemical element has its own characteristic spectral “fingerprint”, the spectroscope can be used to determine the chemical composition of an astronomical object. The spectroscope can also be used to determine whether a light-emitting object is approaching or moving away from the point of observation (i.e. a telescope on or near Earth) by means of the Doppler effect. Imagine every source of light emitting light waves whose crests follow one another at regular intervals. If the source is moving towards the observer, each successive crest is emitted from a little closer to the observer, so the crests arrive bunched together and the light waves appear shortened in wavelength. Particular spectral “fingerprints” will then appear at shorter wavelengths than expected; that is, they will be blueshifted. On the other hand, if the object is moving away from the observer, light waves from the object will be lengthened, and spectral “fingerprints” will be redshifted. One reason we believe that the Andromeda galaxy is on a collision course with our own Milky Way galaxy is that the spectral “fingerprints” of substances in its spectrum are blueshifted when observed with a spectroscope.
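The logic of reading motion from a spectrum can be sketched in Python. The example below assumes the hydrogen-alpha line, with a laboratory (rest) wavelength of 656.28 nm, as the spectral “fingerprint”, and uses the simple non-relativistic approximation v ≈ cz, where z = (λ_observed − λ_rest)/λ_rest; this approximation is only valid for speeds far below the speed of light. The observed wavelengths are made-up illustrative values.

```python
C_KM_S = 299_792.458   # speed of light, km/s
H_ALPHA_NM = 656.28    # rest (laboratory) wavelength of the hydrogen-alpha line, nm


def redshift(observed_nm: float, rest_nm: float = H_ALPHA_NM) -> float:
    """z = (observed - rest) / rest: positive for a redshift, negative for a blueshift."""
    return (observed_nm - rest_nm) / rest_nm


def radial_velocity_km_s(observed_nm: float, rest_nm: float = H_ALPHA_NM) -> float:
    """Non-relativistic Doppler estimate v = c * z, valid only for speeds far below c.
    Positive means receding from us, negative means approaching."""
    return C_KM_S * redshift(observed_nm, rest_nm)


# Made-up observed wavelengths for two hypothetical sources.
for label, observed in [("source A", 655.62), ("source B", 658.47)]:
    z = redshift(observed)
    kind = "blueshift (approaching)" if z < 0 else "redshift (receding)"
    print(f"{label}: z = {z:+.5f}, {kind}, v = {radial_velocity_km_s(observed):+.0f} km/s")
```

A negative z means the line is blueshifted and the source is approaching; a positive z means it is redshifted and the source is receding. Source A corresponds to an approach speed of about 300 km/s, close to the measured radial velocity of the Andromeda galaxy.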

However, the vast majority of other galaxies observed in the universe by spectroscope show spectral “fingerprints” that are redshifted. The American astronomer Edwin Hubble showed, in 1929, that the degree of redshift of a galaxy is proportional to its distance from us. Apart from neighbours bound together by gravity, such as Andromeda and the Milky Way, galaxies and galaxy clusters are moving away from one another: the universe as a whole is expanding. If the universe is presently expanding, then we can theoretically reverse time to when galaxies were closer together, and ultimately to a time when the entire universe was in a hot, dense early state. According to the Big Bang theory, the universe evolved from such a hot dense early state to its current state. According to Einstein’s general theory of relativity, it is space itself that expands. There was no explosion with matter expanding into a void space; the hot dense early state filled all of space. If we use the equations of general relativity to probe back to the very beginning, they predict that space, time, matter, and energy coalesce at a point of infinite density and zero volume, the singularity. This singularity is like that at the centre of a black hole, so that the Big Bang resembles the collapse of a star in reverse.
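Hubble’s proportionality is usually written v = H₀d, with the recession velocity v proportional to the distance d. Running the expansion backwards at a constant rate gives a rough time scale for the hot, dense early state, 1/H₀, often called the Hubble time. The Python sketch below assumes a round value of H₀ = 70 km/s per megaparsec; the function names are illustrative.

```python
KM_PER_MPC = 3.0857e19          # kilometres in one megaparsec
SECONDS_PER_YEAR = 3.156e7
H0 = 70.0                       # assumed round value of the Hubble constant, km/s/Mpc


def recession_velocity_km_s(distance_mpc: float, h0: float = H0) -> float:
    """Hubble's law: v = H0 * d."""
    return h0 * distance_mpc


def hubble_time_years(h0: float = H0) -> float:
    """1 / H0 in years: how long ago all galaxies would have been together if the
    expansion had always proceeded at today's rate (a rough age scale only)."""
    h0_per_second = h0 / KM_PER_MPC   # (km/s/Mpc) / (km/Mpc) = 1/s
    return 1.0 / h0_per_second / SECONDS_PER_YEAR


print(f"galaxy at 100 Mpc recedes at about {recession_velocity_km_s(100.0):,.0f} km/s")
print(f"Hubble time: about {hubble_time_years() / 1e9:.1f} billion years")
```

With that value, a galaxy 100 megaparsecs away recedes at about 7,000 km/s, and the Hubble time comes out at roughly 14 billion years. This is only an order-of-magnitude estimate, since the expansion rate has not been constant, but it is close to the age of about 13.8 billion years obtained from detailed cosmological models.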

The Big Bang theory was confirmed in 1964 by Robert Wilson and Arno Penzias. They discovered the so-called cosmic microwave background radiation: electromagnetic radiation that permeates the whole space of the universe and is thought to be a remnant of the hot dense early stages of the universe following the Big Bang. The detection of this cosmic microwave background radiation was a confirmation of the Big Bang theory; the theory became accepted shortly thereafter.

By reversing time to the origins of the universe, physicists and astronomers were stepping on the toes of theologians, who had attributed the creation of the universe to God. In response, some theologians reasoned that, even if God had not created the universe in its present state and the universe is instead a product of the Big Bang, there was still room for God to have initiated that Big Bang. Perhaps God did not create life, but he did start the process that would eventually lead to the creation of life, or so they argued. On this line of reasoning, it might seem that even with the acceptance of the Big Bang theory, monotheism – the belief that there exists only one god, as in the Christian, Jewish, and Islamic faiths – can remain an implicit metaphysical element of the Contemporary worldview for members of the respective communities.

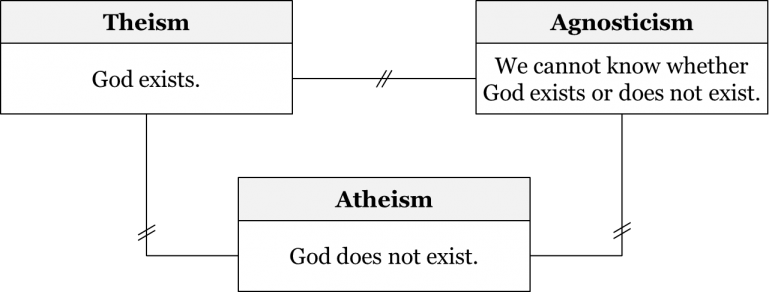

But the Contemporary mosaic doesn’t contain monotheism or any other theistic conception (theism is the general idea that God exists; thus, monotheism is a subtype of theism). One might next reason that we have removed theology and monotheism from our current mosaic and replaced them with atheism – the belief that God does not exist. But this, too, is not currently accepted. Nowadays, the idea of agnosticism is implicit in the mosaic. Agnosticism is the view that we cannot know whether or not God exists. If asked about what caused the Big Bang, physicists would probably answer that we simply don’t know.

These physicists may be worried about some major unknowns in theories of the early universe. The high density of matter and strongly curved space-time near the beginning of the Big Bang can only be properly understood using Einstein’s general theory of relativity. According to some physicists, the Big Bang singularity may, in fact, represent a breakdown of the theory. If the singularity were indeed physically real, it would be a boundary of time. It would then make no sense to ask what came before it, or what caused it. However, there are reasons to doubt that general relativity alone is sufficient to understand the very early universe, as its tiny dimensions also place it within the realm of quantum mechanics. Quantum mechanics and general relativity are logically inconsistent and have never been properly unified. They are accepted within the same mosaic because Einstein’s theory is typically applied to the realm of the very large, and quantum mechanics to that of the very small. The extreme conditions of the Big Bang require the application of both theories in conjunction, something on the very frontiers of current knowledge. Further progress may require the unification of the two theories in a theory of quantum gravity, currently a pursued goal of theoretical physics. Some physicists speculate that the Big Bang may have been a random quantum event, like the appearance of virtual particles in the quantum foam. Others argue for models in which the extreme conditions of the singularity are avoided, and the Big Bang was caused by the collapse of a prior universe. Given these open possibilities, the same objections that David Hume raised to the natural theology of his time (see chapter 9) apply with equal force to the idea that God caused the Big Bang or “fine-tuned” the physical properties of the universe to make them compatible with our form of life.

There is another reason why modern physicists and astronomers may be deeply reluctant to accept theological conclusions regarding the Big Bang. The search for signs of other intelligent beings in the cosmos is a pursued goal of modern astronomy. Any claim of such intelligence must meet the criteria of the contemporary employed method summarized by astronomer Carl Sagan’s maxim that “extraordinary claims demand extraordinary evidence”. This search has occasioned a series of false alarms – each one an illustration of David Hume’s worry that unfamiliar natural phenomena can easily be confused with the designed artefacts of a powerful intelligence. By the method currently employed to evaluate hypotheses concerning extra-terrestrial intelligence, no claim that a cosmic phenomenon was engineered by a non-human intelligence would be accepted unless all plausible explanations involving non-intelligent natural phenomena were first ruled out. In the case of theological claims about the Big Bang, the discussion above illustrates why physicists and astronomers, as a community, are unlikely to believe that the standards of this method have been met.

Questions about what happened before the Big Bang are, at best, regarded as highly speculative by contemporary scientists. One indication of scientists’ reticence towards religious questions is that the questions pursued by successful grant winners in the hard sciences have nothing to do with God or God’s role in the universe. While individual scientists hold varied religious views, the accepted view of the scientific community appears to be one of agnosticism about the existence of God. God may or may not have played a role in the origins of the universe, but this is a question unanswerable by our current mosaic, so we simply state that we do not know.

Answering exactly how and when theology was exiled from the mosaic is quite difficult. Was it with the observation of redshift and subsequent acceptance of the Big Bang theory? Was it the consequence of developments in evolutionary biology that similarly eliminated the role of God in the creation of humanity? Was David Hume’s critique of natural theology the critical factor? At this point, we simply don’t know. Suffice it to say, while monotheism was implicit in the Aristotelian-Medieval, Cartesian, and Newtonian worldviews, agnosticism is implicit in the Contemporary worldview.

Contemporary Methods

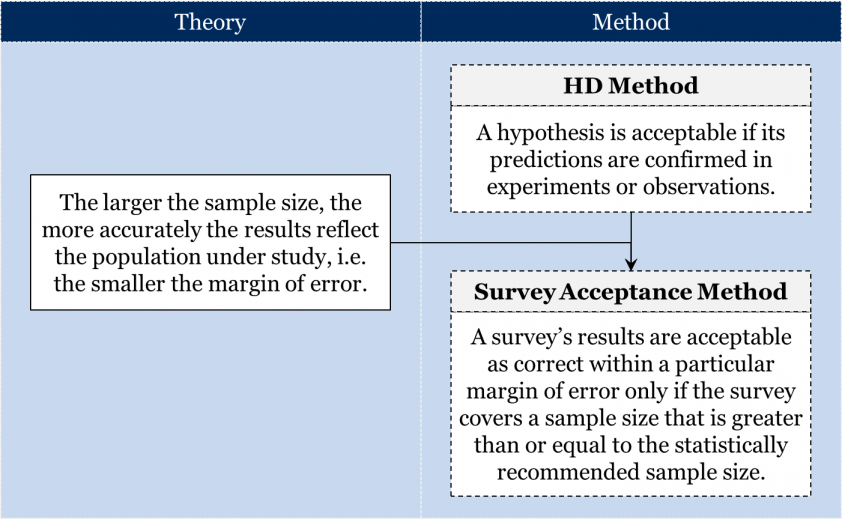

What are the methods employed in the Contemporary worldview? As far as the fundamental requirements of empirical science are concerned, not much has changed since the Newtonian worldview. Contemporary scientists working in the empirical sciences continue to employ the hypothetico-deductive (HD) method. So, in order to become accepted, an empirical theory is usually expected to either provide a confirmed novel prediction (if it happens to introduce changes into the accepted ontology) or be sufficiently accurate and precise (in all other cases).

It is important to note that this doesn’t mean that there have been no changes in methods since the 18th century. In fact, many more concrete methods have become employed since then; each of these methods is based on the general requirements of the HD method. One obvious example is the different drug-testing methods that we considered in chapter 4: all of them were more specific implementations of the requirements of the HD method. For example, the double-blind trial method has the same requirements as the HD method plus an additional one: that the drug’s effect should be confirmed in a double-blind trial. Generally speaking, there are many concrete methods that impose additional requirements on top of the ones stipulated by the HD method.

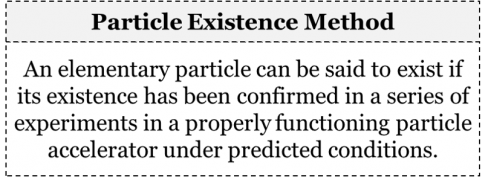

Consider, for instance, our contemporary requirements concerning the acceptability of new elementary particles. Suppose there is a new theory that hypothesizes the existence of a new type of particle. Under what circumstances would we be prepared to accept the existence of that hypothetical particle? We would probably expect the hypothesis to be tested just as the HD method stipulates. Yet, we would likely expect something even more specific, i.e. we would expect the particle to be observed in a series of experiments in a properly functioning particle accelerator under predicted conditions. Thus, we could say that our particle existence method can be explicated along these lines: the existence of a hypothetical new type of particle is acceptable only if the particle has been observed in a series of experiments in a properly functioning particle accelerator under predicted conditions.

Consider another example. Suppose there is a sociological hypothesis that proposes a certain correlation between family size and media consumption: say, that larger families devote less time to consuming media content than smaller ones do. Note that this is not proposing a causal relationship between family size and media consumption time, but merely suggesting a correlation. Now, how would we evaluate this hypothesis? We would probably expect it to be confirmed by a suitable sociological survey of a properly selected population sample. It is clear that we wouldn’t expect 100% of the population to be surveyed; that’s not feasible. Instead, we would expect the survey to cover a certain smaller sample of the population. But how small can the sample size be without rendering the results of the survey unacceptable? This is where our knowledge of probability theory and statistics comes into play. According to the theory of probability, if a sample is selected randomly, then a greater sample size normally results in a more accurate survey. Because only a sample is surveyed, every such survey will necessarily have a certain margin of error: the range of values within which, at a chosen confidence level (conventionally 95%), the figure can be said to correctly reflect the actual state of affairs. The greater the sample size, the smaller the margin of error. There are statistical equations that allow one to calculate the margin of error given the population size and sample size. For example, if we were to study the relationship between family size and media consumption across Canada with its population of about 37 million people, then a sample size of 1,500 would have a margin of error of about 2.53% at the 95% confidence level. In short, we would accept the results of a sociological survey as correct within a particular margin of error only if the survey covered a sample size that was greater than or equal to the statistically recommended sample size.
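The margin-of-error figure in this example can be reproduced with the standard formula for a proportion estimated from a simple random sample: MOE = z·√(p(1 − p)/n), multiplied by a finite-population correction √((N − n)/(N − 1)). The Python sketch below assumes a 95% confidence level (z = 1.96) and the worst case p = 0.5, which are the conventional choices behind figures like the one quoted; it also inverts the formula to find the smallest sample size that meets a target margin. The function names are our own.

```python
import math


def margin_of_error(n: int, population: int, z: float = 1.96, p: float = 0.5) -> float:
    """Margin of error for a proportion estimated from a simple random sample of size n.

    z = 1.96 corresponds to 95% confidence; p = 0.5 is the worst case (widest
    margin). The finite-population correction matters only when n is a sizeable
    fraction of the population.
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    finite_population_correction = math.sqrt((population - n) / (population - 1))
    return z * standard_error * finite_population_correction


def required_sample_size(target_margin: float, population: int, z: float = 1.96, p: float = 0.5) -> int:
    """Smallest sample size whose margin of error does not exceed target_margin."""
    n_infinite = z**2 * p * (1 - p) / target_margin**2
    return math.ceil(n_infinite / (1 + (n_infinite - 1) / population))


if __name__ == "__main__":
    canada = 37_000_000
    print(f"n = 1,500: margin of error = {100 * margin_of_error(1500, canada):.2f}%")
    print("sample needed for a 3% margin:", required_sample_size(0.03, canada))
```

For n = 1,500 and N = 37 million, the margin comes out at about 2.53%, and the finite-population correction barely matters because 1,500 is a tiny fraction of the population. This is why the recommended sample size depends far more on the desired margin of error than on the size of the country being surveyed.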

In addition to the HD method, contemporary science also seems to employ the axiomatic-deductive method. According to this method, a proposition is acceptable either if it is an axiom of a theory or if it is a theorem that logically follows from the accepted axioms. This is the method currently employed in formal sciences, such as mathematics or logic. Each mathematical theory postulates a set of axioms that define the mathematical structure under study and then proceeds to deduce all sorts of theorems about that structure. Recall, for instance, the axioms of different geometries from chapter 2 which provided different definitions of “triangle”. In each of these different geometries, all the theorems about triangles logically follow from the respective axioms.

This method sounds similar to the intuitive-deductive method, and indeed it is: after all, both methods require the theorems to be deductive consequences of the accepted axioms. But a key difference is that the intuitive-deductive method requires the axioms to be intuitively true, while the axiomatic-deductive method doesn’t. The axiomatic-deductive method considers the axioms to be merely defining the formal object under study, regardless of whether they appear intuitively true or counterintuitive.
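The core of the axiomatic-deductive method, accepting exactly what follows from the chosen axioms, can be illustrated with a toy propositional system in Python. In this hypothetical sketch, the axioms are atomic statements plus if-then rules, and the theorems are everything reachable from them by repeatedly applying modus ponens (a technique known as forward chaining). Nothing in the procedure checks whether an axiom is intuitively true; swapping one axiom for another simply yields a different set of theorems, much as different geometries yield different theorems about triangles.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule "if all premises hold, then the conclusion holds".
Rule = Tuple[FrozenSet[str], str]


def theorems(axioms: Set[str], rules: List[Rule]) -> Set[str]:
    """Return every statement derivable from the axioms by repeated modus ponens."""
    derived = set(axioms)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


# Hypothetical labels standing in for statements of some formal theory.
RULES: List[Rule] = [
    (frozenset({"A", "B"}), "C"),
    (frozenset({"C"}), "D"),
    (frozenset({"E"}), "F"),
]

if __name__ == "__main__":
    print(sorted(theorems({"A", "B"}, RULES)))  # ['A', 'B', 'C', 'D']
    print(sorted(theorems({"A", "E"}, RULES)))  # ['A', 'E', 'F']
```

Replacing axiom B with axiom E changes which theorems follow, and the procedure is indifferent to what the labels mean; this mirrors how the axioms merely define the formal object under study.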

In brief, it is important to keep in mind that there might be many different methods in different fields of inquiry. Some methods might be very general and applicable to a wide range of phenomena (e.g. the HD method, the axiomatic-deductive method), while other methods may be applicable only to a very specific set of phenomena (e.g. drug-testing methods). In any event, these methods will be the deductive consequences of our accepted theories.

Summary

The following table summarizes some of the metaphysical principles of the Contemporary worldview:

We’ve also left a few question marks here and there:

This concludes our discussion of our four snapshots from the history of science.